Docker Desktop for Mac user manual


Welcome to Docker Desktop! The Docker Desktop for Mac user manual provides information on how to configure and manage your Docker Desktop settings.

For information about Docker Desktop download, system requirements, and installation instructions, see Install Docker Desktop.

Preferences

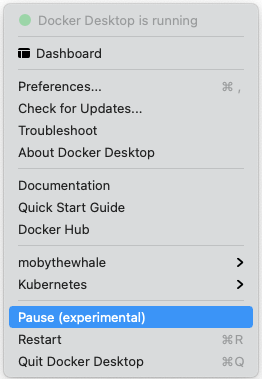

The Docker Preferences menu allows you to configure your Docker settings such as installation, updates, version channels, Docker Hub login, and more.

To open it, choose Preferences from the Docker menu in the menu bar.

General

On the General tab, you can configure when to start and update Docker:

Automatically check for updates: By default, Docker Desktop is configured to check for newer versions automatically. If you have installed Docker Desktop as part of an organization, you may not be able to update Docker Desktop yourself. In that case, upgrade your existing organization to a Team plan and clear this checkbox to disable the automatic check for updates.

Start Docker Desktop when you log in: Automatically starts Docker Desktop when you open your session.

Include VM in Time Machine backups: Select this option to back up the Docker Desktop virtual machine. This option is disabled by default.

Use gRPC FUSE for file sharing: Clear this checkbox to use the legacy osxfs file sharing instead.

Send usage statistics: Docker Desktop sends diagnostics, crash reports, and usage data. This information helps Docker improve and troubleshoot the application. Clear the checkbox to opt out.

Show weekly tips: Displays useful advice and suggestions about using Docker.

Open Docker Desktop dashboard at startup: Automatically opens the dashboard when starting Docker Desktop.

Use Docker Compose V2: Select this option to enable the docker-compose command to use Docker Compose V2. For more information, see Docker Compose V2.

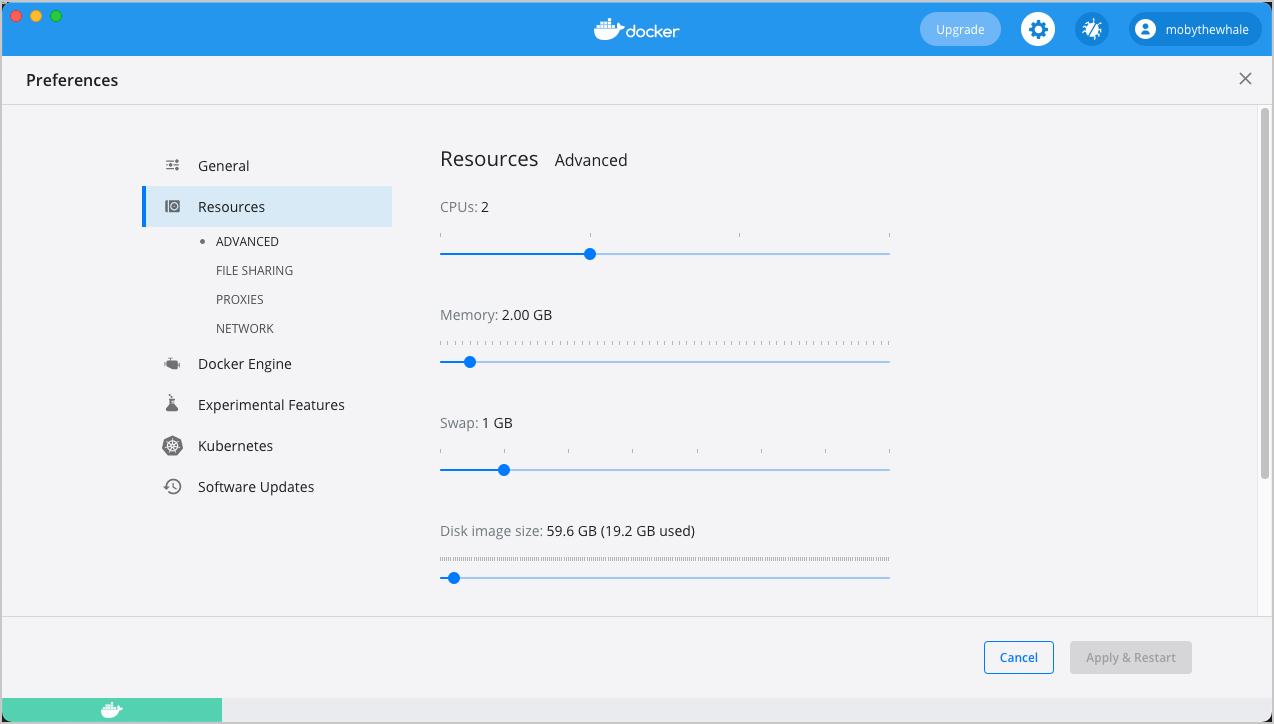

Resources

The Resources tab allows you to configure CPU, memory, disk, proxies, network, and other resources.

Advanced

On the Advanced tab, you can limit resources available to Docker.

Advanced settings are:

CPUs: By default, Docker Desktop is set to use half the number of processors available on the host machine. To increase processing power, set this to a higher number; to decrease, lower the number.

Memory: By default, Docker Desktop is set to use 2 GB runtime memory, allocated from the total available memory on your Mac. To increase the RAM, set this to a higher number. To decrease it, lower the number.

Swap: Configure swap file size as needed. The default is 1 GB.

Disk image size: Specify the size of the disk image.

Disk image location: Specify the location of the Linux volume where containers and images are stored.

You can also move the disk image to a different location. If you attempt to move a disk image to a location that already has one, you get a prompt asking if you want to use the existing image or replace it.
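After you change these values and click Apply & Restart, you can ask the daemon what the Docker Desktop VM actually received. The following is a minimal Python sketch. It assumes Docker Desktop is running and uses the `docker info` Go-template fields `{{.NCPU}}` and `{{.MemTotal}}`, where memory is reported in bytes. The helper names `parse_info` and `vm_resources` are hypothetical.

```python
import subprocess

# Ask the daemon for the VM's CPU count and total memory (in bytes).
INFO_CMD = ["docker", "info", "--format", "{{.NCPU}} {{.MemTotal}}"]


def parse_info(output: str) -> tuple[int, float]:
    """Turn '<cpus> <memory bytes>' into (cpus, memory in GiB)."""
    cpus, mem_bytes = output.split()
    return int(cpus), int(mem_bytes) / 2**30


def vm_resources() -> tuple[int, float]:
    """Run docker info (needs a running Docker Desktop) and parse the result."""
    result = subprocess.run(INFO_CMD, check=True, capture_output=True, text=True)
    return parse_info(result.stdout)
```

Calling `vm_resources()` returns a `(cpus, GiB)` pair that you can compare with the CPUs and Memory sliders.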

File sharing

Use File sharing to allow local directories on the Mac to be shared with Linux containers. This is especially useful for editing source code in an IDE on the host while running and testing the code in a container. By default the /Users, /Volumes, /private, /tmp and /var/folders directories are shared. If your project is outside these directories, you must add it to the list; otherwise you may get "Mounts denied" or "cannot start service" errors at runtime.

File share settings are:

Add a Directory: Click + and navigate to the directory you want to add.

Apply & Restart makes the directory available to containers using Docker’s bind mount (-v) feature.
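A bind mount only works for host paths inside a shared directory. The following Python sketch checks a path against the default list before it builds the `-v` argument. It assumes absolute paths that are already resolved, so it does not follow symlinks such as /tmp pointing to /private/tmp. The helper names are hypothetical.

```python
from pathlib import PurePosixPath

# Directories shared by default, per the File sharing settings.
DEFAULT_SHARED = ("/Users", "/Volumes", "/private", "/tmp", "/var/folders")


def is_shared(host_path: str, shared=DEFAULT_SHARED) -> bool:
    """True if host_path is a shared directory or lies inside one."""
    path = PurePosixPath(host_path)
    for root in map(PurePosixPath, shared):
        if path == root or root in path.parents:
            return True
    return False


def bind_mount_args(host_path: str, container_path: str, shared=DEFAULT_SHARED) -> list:
    """Build the `-v host:container` pair, refusing paths the VM cannot see."""
    if not is_shared(host_path, shared):
        raise ValueError(f"{host_path} is not shared; add it under File sharing first")
    return ["-v", f"{host_path}:{container_path}"]
```

For example, `bind_mount_args("/Users/alice/site", "/usr/share/nginx/html")` yields `["-v", "/Users/alice/site:/usr/share/nginx/html"]`. A path such as /opt/data raises an error until you add it to the list.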

Tips on shared folders, permissions, and volume mounts

Share only the directories that you need with the container. File sharing introduces overhead, because every change to a file on the host must be propagated to the Linux VM. Sharing too many files can lead to high CPU load and slow filesystem performance.

Shared folders are designed to allow application code to be edited on the host while being executed in containers. For non-code items such as cache directories or databases, the performance will be much better if they are stored in the Linux VM, using a data volume (named volume) or data container.

If you share the whole of your home directory into a container, macOS may prompt you to give Docker access to personal areas of your home directory such as your Reminders or Downloads.

By default, Mac file systems are case-insensitive while Linux is case-sensitive. On Linux, it is possible to create two separate files, test and Test, while on Mac these filenames would actually refer to the same underlying file. This can lead to problems where an app works correctly on a Mac (where the file contents are shared) but fails when run in Linux in production (where the file contents are distinct). To avoid this, Docker Desktop insists that all shared files are accessed with their original case. Therefore, if a file is created called test, it must be opened as test. Attempts to open Test will fail with the error No such file or directory. Similarly, once a file called test is created, attempts to create a second file called Test will fail. For more information, see Volume mounting requires file sharing for any project directories outside of /Users.
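Before sharing a project that was created on a case-sensitive Linux machine, you can look for names that differ only by case. Docker Desktop would refuse to create the second file of such a pair. The following is a small Python sketch that uses only the standard library. The function names are hypothetical.

```python
import os
from collections import defaultdict


def case_collisions(names):
    """Group names that differ only by case, such as 'test' and 'Test'."""
    groups = defaultdict(list)
    for name in names:
        groups[name.casefold()].append(name)
    return [sorted(group) for group in groups.values() if len(group) > 1]


def scan_tree(root):
    """Walk root and report case-only collisions inside each directory."""
    problems = {}
    for dirpath, dirnames, filenames in os.walk(root):
        clashes = case_collisions(dirnames + filenames)
        if clashes:
            problems[dirpath] = clashes
    return problems
```

Run `scan_tree` on the Linux checkout, for example in CI. An empty dict means no two names in the same directory collide on a case-insensitive Mac file system.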

Proxies

Docker Desktop detects HTTP/HTTPS proxy settings from macOS and automatically propagates them to Docker. For example, if you set your proxy settings to http://proxy.example.com, Docker uses this proxy when pulling images.

Your proxy settings, however, are not propagated into the containers you start. If you want to set proxy settings for your containers, define environment variables for them just as you would on Linux, for example with the -e option of docker run.
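The following is a minimal Python sketch that turns proxy variables into `docker run -e` arguments and prints the resulting command. The `alpine` image and the `env` command are only a convenient way to print the container's environment. The helper names are hypothetical.

```python
import os
import shlex
from typing import Mapping, Optional

PROXY_VARS = ("HTTP_PROXY", "HTTPS_PROXY", "NO_PROXY")


def proxy_env_args(proxies: Mapping[str, str]) -> list:
    """Turn {'HTTP_PROXY': 'http://...'} into ['-e', 'HTTP_PROXY=http://...']."""
    args = []
    for name, value in proxies.items():
        args += ["-e", f"{name}={value}"]
    return args


def proxies_from(environ: Optional[Mapping[str, str]] = None) -> dict:
    """Pick up whichever proxy variables are set (defaults to this process's env)."""
    environ = os.environ if environ is None else environ
    return {name: environ[name] for name in PROXY_VARS if name in environ}


# Example: show the proxy variable inside a throwaway alpine container.
cmd = ["docker", "run", "--rm",
       *proxy_env_args({"HTTP_PROXY": "http://proxy.example.com"}),
       "alpine", "env"]
print(shlex.join(cmd))  # docker run --rm -e HTTP_PROXY=http://proxy.example.com alpine env
```

Passing `proxies_from()` instead of the literal dict forwards whatever proxy variables are set in your own shell.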

For more information on setting environment variables for running containers, see Set environment variables.

Network

You can configure Docker Desktop networking to work on a virtual private network (VPN). Specify a network address translation (NAT) prefix and subnet mask to enable Internet connectivity.
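The NAT prefix should not overlap the routes your VPN installs; otherwise traffic to those addresses can end up on the wrong side. The following Python sketch uses the standard `ipaddress` module to split a CIDR block into the prefix/mask pair and to find overlapping VPN routes. The subnet 192.168.65.0/24 and the VPN routes are example values only, not necessarily your settings, and the function names are hypothetical.

```python
import ipaddress


def prefix_and_mask(subnet: str) -> tuple:
    """Split a CIDR block into the (prefix address, subnet mask) pair."""
    net = ipaddress.ip_network(subnet)
    return str(net.network_address), str(net.netmask)


def overlapping_routes(docker_subnet: str, vpn_routes: list) -> list:
    """Return the VPN routes that overlap the subnet given to Docker Desktop."""
    docker_net = ipaddress.ip_network(docker_subnet)
    return [r for r in vpn_routes if ipaddress.ip_network(r).overlaps(docker_net)]
```

For example, `overlapping_routes("192.168.65.0/24", ["10.0.0.0/8", "192.168.0.0/16"])` flags only `"192.168.0.0/16"`. In that case you would pick a prefix outside that range.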

Docker Engine

The Docker Engine page allows you to configure the Docker daemon to determine how your containers run.

Type a JSON configuration file in the box to configure the daemon settings. For a full list of options, see the Docker Engine dockerd command-line reference.

Click Apply & Restart to save your settings and restart Docker Desktop.
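A malformed JSON document is an easy mistake to make in this box. The following Python sketch builds a small configuration and checks it before you paste it. The keys `debug`, `experimental`, `registry-mirrors` and `insecure-registries` are standard dockerd options. The mirror URL and the helper name `validate_daemon_json` are hypothetical.

```python
import json

# Example settings; mirror.example.com is a placeholder registry mirror.
daemon_config = {
    "debug": True,
    "experimental": False,
    "registry-mirrors": ["https://mirror.example.com"],
    "insecure-registries": [],
}


def validate_daemon_json(text: str) -> dict:
    """Parse the text you are about to paste; raise if it is not a JSON object."""
    config = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad syntax
    if not isinstance(config, dict):
        raise ValueError("daemon configuration must be a JSON object")
    return config


text = json.dumps(daemon_config, indent=2)
print(text)
```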

Command Line

On the Command Line page, you can specify whether or not to enable experimental features.

Experimental features provide early access to future product functionality. These features are intended for testing and feedback only as they may change between releases without warning or can be removed entirely from a future release. Experimental features must not be used in production environments. Docker does not offer support for experimental features.

For a list of current experimental features in the Docker CLI, see Docker CLI Experimental features.

You can toggle the experimental features on and off in Docker Desktop. If you toggle the experimental features off, Docker Desktop uses the current generally available release of Docker Engine.

You can check at the command line whether Docker is running in experimental mode: run docker version, and if Experimental is true, Docker is running in experimental mode. (If false, experimental mode is off.)
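If you want to check this from a script, you can scan the text output of `docker version` for `Experimental:` lines. The following is a minimal Python sketch. It assumes the plain-text output format, where the client and server sections may each carry such a line. The function name is hypothetical.

```python
import re

# Matches lines such as "  Experimental:      true" in docker version output.
EXPERIMENTAL_RE = re.compile(r"^[ \t]*Experimental:[ \t]*(true|false)[ \t]*$", re.MULTILINE)


def experimental_flags(version_output: str) -> list:
    """Return one bool per Experimental line found (client and/or server)."""
    return [m.group(1) == "true" for m in EXPERIMENTAL_RE.finditer(version_output)]
```

Feed it the output of `subprocess.run(["docker", "version"], capture_output=True, text=True).stdout`.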

Kubernetes

Docker Desktop includes a standalone Kubernetes server that runs on your Mac, so that you can test deploying your Docker workloads on Kubernetes. To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, select Enable Kubernetes.

For more information about using the Kubernetes integration with Docker Desktop, see Deploy on Kubernetes.

Reset

On Docker Desktop for Mac, the Restart Docker Desktop, Reset to factory defaults, and other reset options are available from the Troubleshoot menu.

For information about the reset options, see Logs and Troubleshooting.

Software Updates

The Software Updates section notifies you of any updates available to Docker Desktop. You can choose to download the update right away, or click the Release Notes option to learn what’s included in the updated version.

If you are on a Docker Team or a Business subscription, you can turn off the check for updates by clearing the Automatically Check for Updates checkbox in the General settings. This will also disable the notification badge that appears on the Docker Dashboard.

Dashboard

The Docker Desktop Dashboard enables you to interact with containers and applications and manage the lifecycle of your applications directly from your machine. The Dashboard UI shows all running, stopped, and started containers with their state. It provides an intuitive interface to perform common actions to inspect and manage containers and existing Docker Compose applications. For more information, see Docker Desktop Dashboard.

Add TLS certificates

You can add trusted Certificate Authorities (CAs) (used to verify registry server certificates) and client certificates (used to authenticate to registries) to your Docker daemon.

Add custom CA certificates (server side)

All trusted CAs (root or intermediate) are supported. Docker Desktop creates a certificate bundle of all user-trusted CAs based on the Mac Keychain, and appends it to Moby trusted certificates. So if an enterprise SSL certificate is trusted by the user on the host, it is trusted by Docker Desktop.

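To manually add a custom, self-signed certificate, start by adding the certificate to the macOS keychain, which is picked up by Docker Desktop. For example, you can use the macOS security add-trusted-cert command, run with sudo, to add the certificate to the System keychain for all users.

Or, if you prefer to add the certificate to your own local keychain only (rather than for all users), run the same command against your login keychain, without sudo.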
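The following Python sketch builds the two variants of the macOS `security add-trusted-cert` command. `ca.crt` is a placeholder file name and the helper name is hypothetical. The login-keychain path uses the older `login.keychain` name, and recent macOS versions may store it as `login.keychain-db`. Check `man security` on your machine before running either command.

```python
import shlex
from pathlib import Path

SYSTEM_KEYCHAIN = "/Library/Keychains/System.keychain"


def add_trusted_cert_cmd(cert_path: str, all_users: bool = True) -> list:
    """Build a `security add-trusted-cert` command for a CA certificate."""
    if all_users:
        # -d: admin trust settings; -r trustRoot: trust it as a root CA.
        return ["sudo", "security", "add-trusted-cert", "-d", "-r", "trustRoot",
                "-k", SYSTEM_KEYCHAIN, cert_path]
    # Per-user trust stored in your login keychain; no sudo needed.
    login = str(Path.home() / "Library" / "Keychains" / "login.keychain")
    return ["security", "add-trusted-cert", "-r", "trustRoot", "-k", login, cert_path]


print(shlex.join(add_trusted_cert_cmd("ca.crt")))
# sudo security add-trusted-cert -d -r trustRoot -k /Library/Keychains/System.keychain ca.crt
```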

Note: You need to restart Docker Desktop after making any changes to the keychain or to the ~/.docker/certs.d directory in order for the changes to take effect.

For a complete explanation of how to do this, see the blog post Adding Self-signed Registry Certs to Docker & Docker Desktop for Mac.

Add client certificates

You can put your client certificates in

When the Docker Desktop application starts, it copies the ~/.docker/certs.d folder on your Mac to the /etc/docker/certs.d directory on Moby (the Docker Desktop xhyve virtual machine).

You need to restart Docker Desktop after making any changes to the keychain or to the ~/.docker/certs.d directory in order for the changes to take effect.

The registry cannot be listed as an insecure registry (see Docker Engine). Docker Desktop ignores certificates listed under insecure registries, and does not send client certificates. Commands like docker run that attempt to pull from the registry produce error messages on the command line, as well as on the registry.

Directory structures for certificates

If you have this directory structure, you do not need to manually add the CA certificate to your macOS system login keychain:

The following further illustrates and explains a configuration with custom certificates:

You can also have this directory structure, as long as the CA certificate is also in your keychain.
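The layout follows Docker Engine's `certs.d` convention: one folder per `<registry>:<port>` that holds `ca.crt`, `client.cert` and `client.key`. The CA file may be omitted when the CA is already in your keychain. The following Python sketch checks such a folder before you restart Docker Desktop. The registry host, port and helper names are hypothetical.

```python
from pathlib import Path
from typing import Optional

CA_FILE = "ca.crt"
CLIENT_FILES = ("client.cert", "client.key")


def registry_cert_dir(registry: str, port: int, home: Optional[Path] = None) -> Path:
    """~/.docker/certs.d/<registry>:<port>, the folder copied into the VM."""
    home = home if home is not None else Path.home()
    return home / ".docker" / "certs.d" / f"{registry}:{port}"


def missing_cert_files(cert_dir: Path, ca_in_keychain: bool = False) -> list:
    """List the expected certificate files that are not present in cert_dir."""
    wanted = CLIENT_FILES if ca_in_keychain else (CA_FILE, *CLIENT_FILES)
    return [name for name in wanted if not (cert_dir / name).is_file()]
```

For example, `missing_cert_files(registry_cert_dir("myregistry.example.com", 5000))` returns an empty list once all three files are in place.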

To learn more about how to install a CA root certificate for the registry and how to set the client TLS certificate for verification, see Verify repository client with certificates in the Docker Engine topics.


Getting Started with Docker Desktop for Mac

The easiest way to create containerized applications and leverage the Docker Platform from your desktop

Overview

Thank you for installing Docker Desktop. Here is a quick five-step tutorial on how to use it. It walks you through:

Running your first container

Creating and sharing your first Docker image and pushing it to Docker Hub

Creating your first multi-container application

Learning Orchestration and Scaling with Docker Swarm and Kubernetes

Step 1: Local Webserver

Run NGINX without installing it

If you haven’t run Docker before, here’s a quick way to see the power of Docker at work. From the terminal, start an nginx container that publishes port 8080 and mounts the current directory as the web root.

Then open http://localhost:8080 in your browser and you see the default nginx 403 page. That’s because NGINX, which is running in the container, looks for an index.html file in the mounted directory and does not find one. In your favorite text editor, create an index.html file in the current directory; a single line of HTML is enough.

Refresh http://localhost:8080 and see your new content. You have created a web server without installing the web server. Docker took care of the dependencies.

Last, stop your container and remove it to clean up your environment.
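The exact commands for this step are not reproduced here. The following Python sketch shows one plausible version built from standard `docker run` options. The container name `webserver`, the page text, and the read-only (`:ro`) mount are assumptions. The script only prints the commands; paste them into your terminal to run them.

```python
import shlex
from pathlib import Path

CONTAINER = "webserver"  # hypothetical container name


def write_index(site_dir: Path) -> Path:
    """Create a one-line index.html for nginx to serve."""
    page = site_dir / "index.html"
    page.write_text("<h1>Hello from Docker!</h1>\n")
    return page


def run_nginx_cmd(site_dir: Path, host_port: int = 8080) -> list:
    """Run nginx in the background, serving site_dir on localhost:host_port."""
    return ["docker", "run", "--detach",
            "--publish", f"{host_port}:80",
            "--volume", f"{site_dir.resolve()}:/usr/share/nginx/html:ro",
            "--name", CONTAINER, "nginx"]


def cleanup_cmds() -> list:
    """Stop, then remove, the container started above."""
    return [["docker", "stop", CONTAINER], ["docker", "rm", CONTAINER]]


for cmd in [run_nginx_cmd(Path(".")), *cleanup_cmds()]:
    print(shlex.join(cmd))
```

Before `write_index` runs, nginx finds an empty web root, which is why the 403 page appears first.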

Step 2: Customize and Push to Docker Hub

The last step used an official Docker image. Next, create your own custom image. You need a Docker ID; you probably created one to download Docker Desktop.

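In your favorite text editor, create a file called Dockerfile in the same directory you used in step 1 (no extension, just Dockerfile). Add a FROM instruction that names the nginx base image and a COPY instruction that copies index.html to /usr/share/nginx/html, then save the file.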

This tells Docker to use the same nginx base image and to create a layer that adds the HTML you created in the last step. Instead of creating a volume that accesses the file directly from the host you are running on, it adds the file to the image. To build the image, run docker build in your terminal, tagging the image with your Docker ID.

Note two things. First, replace <YourDockerID> with your Docker ID. Second, notice the “.” at the end of the line: it tells Docker to build in the context of this directory, so when it copies the file to /usr/share/nginx/html it uses the file from this directory.
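The following Python sketch writes the two-line Dockerfile described above and prints a matching build command. The repository name `simple_nginx` is a hypothetical choice, and the `nginx:latest` tag is an assumption.

```python
from pathlib import Path

# Start from the nginx base image, then copy the page into nginx's web root.
DOCKERFILE = (
    "FROM nginx:latest\n"
    "COPY index.html /usr/share/nginx/html/\n"
)


def write_dockerfile(directory: Path) -> Path:
    """Write the file as plain 'Dockerfile' (no extension) in directory."""
    path = directory / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def build_cmd(docker_id: str, repo: str = "simple_nginx") -> list:
    """docker build --tag <YourDockerID>/<repo> . (the '.' is the build context)."""
    return ["docker", "build", "--tag", f"{docker_id}/{repo}", "."]
```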

Then run the new image, publishing port 8080 as before, and go to http://localhost:8080 to see the page.

Next, log in to Docker Hub. You can do this directly from Docker Desktop, or from the command line with docker login.

Finally, push your image to Docker Hub with docker push.

You may be asked to log in if you haven’t already. Then you can go to hub.docker.com, log in, and check your repositories.

To clean up before moving to the next section, stop and remove the container you started.
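The rest of this step can be sketched in Python as run, log in, push, and clean up. The container name and the `dry_run` switch are hypothetical conveniences. By default the script only prints each command; set `dry_run=False` to execute the commands with `subprocess.run`.

```python
import shlex
import subprocess


def publish_steps(tag: str, name: str = "simple_nginx_test") -> list:
    """Commands for running, pushing and then removing the custom image."""
    return [
        ["docker", "run", "--detach", "--publish", "8080:80", "--name", name, tag],
        ["docker", "login"],
        ["docker", "push", tag],
        ["docker", "stop", name],
        ["docker", "rm", name],
    ]


def run_steps(steps: list, dry_run: bool = True) -> list:
    """Print each command; also execute it unless dry_run is set."""
    lines = []
    for step in steps:
        line = shlex.join(step)
        print(line)
        lines.append(line)
        if not dry_run:
            subprocess.run(step, check=True)
    return lines
```

For example, `run_steps(publish_steps("<YourDockerID>/simple_nginx"))` lists the sequence without touching Docker. In practice you would check http://localhost:8080 between the run and login steps.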

Step 3: Run a Multi-Service App

Easily connect multiple services together

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration. Docker Compose installs automatically with Docker Desktop.

A multi-container app is an app that has multiple containers running and communicating with each other. This sample uses a simple Java web app running in Tomcat with a MySQL database. You can check out the app in our dockersamples GitHub repo. We’ve pushed two images to Docker Hub under the dockersamples namespace. Docker Compose handles service discovery, so the app can reference a service by its name and Docker routes the traffic to the right container. To try it out, open a text editor and paste the text from this file. Then save it as docker-compose.yml.

There are a lot of details in there, but basically it specifies the images to use, the service names, the exposed ports, and the networks the different services are on. It also specifies the password, which you wouldn’t want to do in a real-world situation.

To run it, open a command line, navigate to the directory that contains the docker-compose.yml file, and run docker-compose up -d.

You will see a bunch of commands go by as it pulls images from Docker Hub and then starts them up. When it has finished running, navigate to http://localhost:8080/java-web/. You should see a sign-up page for a newsletter. If you sign up, the app will put your info into the database. A simple app, but you can see it is running.
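Compose may report the containers as started before Tomcat is ready to serve the page. The following Python sketch uses only the standard library to poll the URL until it answers. The function name, the timeouts, and the injectable `opener` parameter are assumptions; `opener` lets you test the loop without a network.

```python
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 120.0, interval: float = 2.0,
                  opener=urllib.request.urlopen) -> bool:
    """Poll url until it answers HTTP 200 or timeout seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, refused connections and timeouts are all OSErrors.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_http("http://localhost:8080/java-web/")` returns `True` once the sign-up page is being served.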

To clean up before moving to the next section, run docker-compose down.

Step 4: Orchestration: Swarm

While it is easy to run an application in isolation on a single machine, orchestration allows you to coordinate multiple machines to manage an application, with features like replication, encryption, load balancing, service discovery and more. If you’ve read anything about Docker, you have probably heard of Kubernetes and Docker swarm mode. Docker Desktop is the easiest way to get started with either Swarm or Kubernetes.

A swarm is a group of machines that are running Docker and joined into a cluster. After that has happened, you continue to run the Docker commands you’re used to, but now they are executed on a cluster by a swarm manager. The machines in a swarm can be physical or virtual. After joining a swarm, they are referred to as nodes.

Swarm mode uses managers and workers to run your applications. Managers run the swarm cluster: they make sure nodes can communicate with each other, allocate applications to different nodes, and handle a variety of other tasks in the cluster. Workers run the tasks that the managers assign to them.

Swarm uses the Docker command line or the Docker Compose file format with a few additions. Give it a try with a few simple steps.

First, make sure you are using Swarm as your orchestrator. Open Docker Desktop and select Preferences > Kubernetes. Make sure Swarm is selected and click Apply if needed.

Then, copy the contents of this file into a file called docker-stack-simple.yml.

From the command line in the same directory as that file, initialize a swarm with docker swarm init, then deploy the file as a stack named “vote” with docker stack deploy.
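The following Python sketch shows the setup and teardown commands for this step. The stack name `vote` and the file name come from this page. The script prints the setup commands and does not run them.

```python
import shlex

STACK = "vote"
STACK_FILE = "docker-stack-simple.yml"


def swarm_up_cmds(stack: str = STACK, stack_file: str = STACK_FILE) -> list:
    """Turn this machine into a one-node swarm, then deploy the stack."""
    return [
        ["docker", "swarm", "init"],
        ["docker", "stack", "deploy", "--compose-file", stack_file, stack],
    ]


def swarm_down_cmds(stack: str = STACK) -> list:
    """Remove the stack, then take this node out of swarm mode."""
    return [
        ["docker", "stack", "rm", stack],
        ["docker", "swarm", "leave", "--force"],
    ]


for cmd in swarm_up_cmds():
    print(shlex.join(cmd))
```

`--force` is needed in the teardown because the only node is also the manager.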

You should see a command you could copy and paste to add another node to the swarm. For our purposes right now don’t add any more nodes to the swarm.

Docker tells you that it is creating networks and services, all connected to a stack called “vote”. You can see all stacks running on your swarm with docker stack ls.

You probably have just the one, with 5 services. Next, you can see all the services with docker service ls.

This shows the 5 services, each with 1 replica and each named vote_ followed by the service name, for example vote_db. Run the command a few times until all the replicas show 1/1.
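Instead of re-running the listing by eye, you can ask `docker service ls` for just the name and replica columns with its Go-template placeholders `{{.Name}}` and `{{.Replicas}}`. You can then pick out services that are not yet at full strength. The following is a Python sketch; the function name is hypothetical.

```python
SERVICE_LS_CMD = ["docker", "service", "ls", "--format", "{{.Name}} {{.Replicas}}"]


def unconverged(output: str) -> list:
    """Names of services whose running count is below the desired count."""
    pending = []
    for line in output.splitlines():
        if not line.strip():
            continue
        name, replicas = line.split(maxsplit=1)
        # The column looks like "1/1", possibly followed by extra notes.
        running, desired = replicas.split()[0].split("/")
        if int(running) < int(desired):
            pending.append(name)
    return pending
```

An empty list means every service shows full replicas, for example 1/1.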

So what happened? With the simple compose file format you created an application that has 5 components:

- A voting page in Flask that pushes results to redis.

- A redis instance to store key value pairs.

- A worker that goes into the redis instance, pulls out data and pushes it into the database.

- A postgres database.

- A results page running in Node.js that draws from the database.

Now open localhost:5000 to vote. You can vote for cats or dogs, whichever you like better. On localhost:5001 you can see the results of the vote. Open different browsers to add more votes if you want.

The code for all these components is in our Example Voting App repo on GitHub. There’s a lot going on here but here are some points to highlight:

- The services all refer to each other by name. So when the result app calls on the database, it connects to `postgres@db` and Swarm takes care of directing the request to the `db` service.

- In a multi-node environment, Swarm spreads the replicas out across the nodes, following any placement rules you set.

- Swarm also does basic load balancing. Here’s how you can see this in action:

Load localhost:5000 again. Note the “Processed by container ID” text at the bottom of the page. Now scale the voting service up to three replicas with docker service scale.

Once it has finished verifying, reload the page a few times and notice that the container ID rotates between three different values. Those are the three different containers. Even if they were on different nodes, they would share the same ingress and port.
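The following Python sketch builds the scale command and counts distinct container IDs across several fetched pages. The service name `vote_vote` (stack `vote`, service `vote`) and the footer pattern are assumptions about the sample app, so check them against `docker service ls` and the page source.

```python
import re

# Assumed footer text on the voting page, followed by a hex container ID.
CONTAINER_RE = re.compile(r"Processed by container ID\s*([0-9a-f]+)")


def scale_cmd(service: str, replicas: int) -> list:
    """docker service scale <service>=<replicas>"""
    return ["docker", "service", "scale", f"{service}={replicas}"]


def distinct_container_ids(pages) -> set:
    """Collect the container IDs named in a series of fetched pages."""
    ids = set()
    for html in pages:
        match = CONTAINER_RE.search(html)
        if match:
            ids.add(match.group(1))
    return ids
```

To use it, run `scale_cmd("vote_vote", 3)`. Then fetch `http://localhost:5000` a few times with `urllib.request.urlopen(...).read().decode()` and pass the pages to `distinct_container_ids`. With three replicas and enough requests, you would expect to see three IDs.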

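To clean up before moving to the next section, remove the stack with docker stack rm vote and, if you no longer need the swarm, leave it with docker swarm leave --force.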
