How to Set up Teaming with an Intel® Ethernet Adapter in Windows® 10 1809?

Validated. This solution has been verified by our customers to fix the issue with these environment variables

Content Type Install & Setup

Article ID 000032008

Last Reviewed 08/27/2019

After upgrading to or installing the Windows® 10 October 2018 Update (also known as version 1809, codenamed Redstone 5 or RS5), teaming configuration is no longer available in Device Manager.

Applies to: Intel® Ethernet Adapters supported on Windows® 10.

Follow the steps below in the given order:

1. From Device Manager, disable the NIC device.

2. Remove all drivers and Intel® PROSet software as follows:

2.1. Access Device Manager*.

2.2. Expand Network Adapters.

2.3. Right-click each Intel® Ethernet Adapter entry.

2.4. Click Uninstall Device.

3. Once the adapter is uninstalled, cold boot the system (power off and then back on completely).

4. If the device is still disabled, enable it.

5. Download the latest driver for your Intel® Ethernet Network Adapter. If you downloaded an installer (usually a file with the .exe extension), install it by double-clicking the file and continue from Step 6. If you downloaded the Complete Driver Pack instead of the dedicated driver, install it as follows:

5.1. Open the Command Prompt as an Administrator.

5.2. Change to the folder created when you unzipped the Intel® Ethernet Adapter driver package.

5.3. Within the Command Prompt, go to APPS > PROSETDX > Winx64. In this folder, run the following command to install the driver along with Intel® PROSet and Intel® Advanced Network Services (ANS):

DxSetup.exe BD=1 PROSET=1 ANS=1

6. Reboot the computer.

7. Open Windows* PowerShell as Administrator and proceed as follows:

7.1. Run the following command. It takes a few seconds and shows no confirmation; the prompt simply returns to the blinking cursor:

Import-Module -Name "C:\Program Files\Intel\Wired Networking\IntelNetCmdlets\IntelNetCmdlets"

7.2. Add the team(s) by running this command: New-IntelNetTeam

* This is an interactive tool. It starts by asking for the team member names, which are the port names shown in Device Manager > Network Adapters. For example, when teaming the two ports of a single adapter, port 1 might be called Intel(R) Ethernet Server Adapter I350-T2V2 and port 2 Intel(R) Ethernet Server Adapter I350-T2V2 #2. Alternatively, you can supply everything on the command line, entering the member names and the team name between the quotes:

New-IntelNetTeam -TeamMemberNames "", "" -TeamMode AdapterFaultTolerance -TeamName ""

* For the team type (mode), enter only the exact name that comes before each explanation in the list below, without spaces, bullets, or dashes:

- AdapterFaultTolerance — Provides automatic redundancy for a server’s network connection. If the primary adapter fails, the secondary adapter takes over. Adapter Fault Tolerance supports two to eight adapters per team. This teaming type works with any hub or switch. All team members must be connected to the same subnet.

- SwitchFaultTolerance — Provides failover between two adapters connected to separate switches. Switch Fault Tolerance supports two adapters per team. Spanning Tree Protocol (STP) must be enabled on the switch when you create a Switch Fault Tolerance (SFT) team. When SFT teams are created, the Activation Delay is automatically set to 60 seconds. This teaming type works with any switch or hub. All team members must be connected to the same subnet.

- AdaptiveLoadBalancing — Provides load balancing of transmit traffic and adapter fault tolerance. In Windows* operating systems, you can also enable or disable receive load balancing (RLB) in Adaptive Load Balancing teams (by default, RLB is enabled).

- VirtualMachineLoadBalancing — Provides transmit and receive traffic load balancing across virtual machines bound to the team interface, as well as fault tolerance in the event of switch port, cable, or adapter failure. This teaming type works with any switch.

- StaticLinkAggregation — Provides increased transmission and reception throughput in a team of two to eight adapters. This team type replaces the following team types from prior software releases: Fast EtherChannel*/Link Aggregation (FEC) and Gigabit EtherChannel*/Link Aggregation (GEC). This type also includes adapter fault tolerance and load balancing (routed protocols only). This teaming type requires a switch with Intel® Link Aggregation, Cisco* FEC or GEC, or IEEE 802.3ad Static Link Aggregation capability. All adapters in a Link Aggregation team running in static mode must run at the same speed and must be connected to a Static Link Aggregation capable switch. If the speed capabilities of the adapters in a Static Link Aggregation team differ, the team's speed is limited by the lowest common denominator.

- IEEE802_3adDynamicLinkAggregation — Creates one or more teams using Dynamic Link Aggregation with mixed-speed adapters. Like the Static Link Aggregation teams, Dynamic 802.3ad teams increase transmission and reception throughput and provide fault tolerance. This teaming type requires a switch that fully supports the IEEE 802.3ad standard.

- MultiVendorTeaming — Adds the capability to include adapters from selected other vendors in a team.

8. Finally, enter a name of your choice for the new team. Device Manager then shows the new team, confirming that the system detects it.
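If you script team creation, you can check the member-count limits stated in this article before you call New-IntelNetTeam: two to eight adapters for most modes, exactly two for Switch Fault Tolerance, and at most eight ports in any team. The following is a minimal Python sketch that generates the command text for you to paste into, or pass to, an elevated PowerShell session. The helper names (`ps_quote`, `build_new_team_command`) and the team name `Team1` are illustrative assumptions, not part of Intel's tooling. The same applies to the two-member minimum for modes where the article gives no lower limit.

```python
# Minimal sketch (hypothetical helpers): build a New-IntelNetTeam command line
# and check the member count against the limits described in this article.
from typing import List

# (minimum, maximum) team members per mode. Maximums follow the article's
# "up to eight ports in a team" and "SFT supports two adapters"; a minimum of
# two is assumed for modes where the article does not state one.
MEMBER_LIMITS = {
    "AdapterFaultTolerance": (2, 8),
    "SwitchFaultTolerance": (2, 2),
    "AdaptiveLoadBalancing": (2, 8),
    "VirtualMachineLoadBalancing": (2, 8),
    "StaticLinkAggregation": (2, 8),
    "IEEE802_3adDynamicLinkAggregation": (2, 8),
    "MultiVendorTeaming": (2, 8),
}


def ps_quote(text: str) -> str:
    """Return text as a PowerShell single-quoted string ('' escapes a quote)."""
    return "'" + text.replace("'", "''") + "'"


def build_new_team_command(members: List[str], mode: str, name: str) -> str:
    """Validate the inputs and return the New-IntelNetTeam command text."""
    if mode not in MEMBER_LIMITS:
        raise ValueError(f"unknown team mode: {mode!r}")
    low, high = MEMBER_LIMITS[mode]
    if not low <= len(members) <= high:
        raise ValueError(f"{mode} needs {low} to {high} members, got {len(members)}")
    member_list = ", ".join(ps_quote(m) for m in members)
    return (f"New-IntelNetTeam -TeamMemberNames {member_list} "
            f"-TeamMode {mode} -TeamName {ps_quote(name)}")


if __name__ == "__main__":
    print(build_new_team_command(
        ["Intel(R) Ethernet Server Adapter I350-T2V2",
         "Intel(R) Ethernet Server Adapter I350-T2V2 #2"],
        "AdapterFaultTolerance",
        "Team1",  # hypothetical team name
    ))
```

The sketch uses single quotes because PowerShell does not expand `$` or backticks inside them. Port names that contain `#` or parentheses therefore pass through unchanged.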


THE INFORMATION IN THIS ARTICLE HAS BEEN USED BY OUR CUSTOMERS BUT NOT TESTED, FULLY REPLICATED, OR VALIDATED BY INTEL. INDIVIDUAL RESULTS MAY VARY. ALL POSTINGS AND USE OF THE CONTENT ON THIS SITE ARE SUBJECT TO THE TERMS AND CONDITIONS OF USE OF THE SITE.

Is Windows 10 Software NIC Teaming now possible?

Windows Server 2012 brought with it NIC teaming of adapters by different manufacturers.

I mean teaming one NIC from, say, Intel with another from Realtek. Teaming or bonding has been possible at the driver level, but what Windows Server 2012 introduced works at the operating system level. I appreciate Linux has been doing this for years 🙂

It didn't make it into Windows 8/8.1. I've seen an article where people reported it working on the Windows 10 Preview, but it no longer works.

Is NIC Teaming supported on Windows 10 Pro, or on another edition?


This has been disabled in the most recent version of Windows 10 as well as in Insider build 14295. Depending on the version of Windows you are running, the PowerShell command either errors out or reports that LBFO is not supported on the current SKU. Hopefully Microsoft will re-enable this feature soon.

— Original Post Below —

Yes, this is possible! For anyone else who found this post by Googling:

I haven't found a way to access this through a GUI, but running the following PowerShell command will create a team for you. Just replace the Ethernet names with your NIC names.

New-NetLbfoTeam TheATeam "Ethernet","Ethernet 6"

You should then get a 2 Gbps Switch Independent team. From there, you can use the Network Connections screen to set it up how you want.

It seems this feature is coming back, at least for Intel NICs:

Intel mentions teaming support for Windows 10 in driver versions 22.3 or newer. Currently 23.5 is available.

This version comes with ANS (Advanced Network Services, installed by default), which should allow teaming via PowerShell commands.

I haven't tried it yet; the only mainboard I have with two Intel NICs is a bit stubborn about BIOS upgrades.

If anyone gets this working with the latest Windows Creators Update mentioned in the release notes, let me know 🙂

Update: I tried link aggregation on Windows 10, and it currently works (Jan 2019).

An iperf3 run from two clients suggests that it works.

No, it is not possible to get NIC teaming in Windows 10 client SKUs. It is available only in Server SKUs.

Since version 14393 (Anniversary Update), the NIC teaming feature has been blocked or removed for good. It seems the feature was mistakenly added to client Windows 10 SKUs. When you run the New-NetLbfoTeam command in PowerShell, e.g. New-NetLbfoTeam -Name "NewTeam" -TeamMembers "Ethernet", "Ethernet2", the following error appears:

New-NetLbfoTeam : The LBFO feature is not currently enabled, or LBFO is not supported on this SKU.
At line:1 char:1
+ New-NetLbfoTeam -Name "NewTeam" -TeamMembers "Ethernet", "Ethernet2"
+ CategoryInfo : NotSpecified: (MSFT_NetLbfoTeam:root/StandardCimv2/MSFT_NetLbfoTeam) [New-NetLbfoTeam], CimException
+ FullyQualifiedErrorId : MI RESULT 1,New-NetLbfoTeam

The reason was given in the Microsoft TechNet forum thread "Nic Teaming broken in build 10586" (quoted):

"There are no native LBFO capabilities on Win10. Microsoft does not support client SKU network teaming.

It was a defect in Windows 10 build 10240 that “New-NetLbfoTeam” wasn’t completely blocked on client SKUs. This was an unintentional bug, not a change in the SKU matrix. All our documentation continued to say that NIC Teaming is exclusively a feature for Server SKUs.

While the powershell cmdlet didn’t outright fail on client, LBFO was in a broken and unsupported state, since the client SKU does not ship the mslbfoprovider.sys kernel driver. That kernel driver contains all the load balancing and failover logic, as well as the LACP state machine. Without that driver, you might get the appearance of a team, but it wouldn’t really do actual teaming logic. We never tested NIC Teaming in a configuration where this kernel driver was missing.

In the 10586 update (“Fall update”) that was released a few months later, “New-NetLbfoTeam” was correctly blocked again.

In the 14393 update (“Anniversary update”), we continued blocking it, but improved the error message."

Teaming with Intel® Advanced Network Services

Content Type Install & Setup

Article ID 000005667

Last Reviewed 04/01/2020

Adapter teaming with Intel® Advanced Network Services (Intel® ANS) uses an intermediate driver to group multiple physical ports. You can use teaming to add fault tolerance, load balancing, and link aggregation features to a group of ports.


Download Intel® ANS teaming software

Drivers and software for Intel® Ethernet Adapters lists driver downloads for Intel® Ethernet Adapters that include Intel® ANS teaming software. A separate download for teaming software isn’t required or available.

The Drivers and Software for Intel® Ethernet Adapters includes drivers for the following operating systems:

- Windows® 10 and Server 2016 / 2019

- Windows 8.1* and Server 2012 R2* drivers

- Windows 8* and Server 2012 drivers

- Windows 7* and Server 2008* R2 drivers

- Older Windows and Linux* drivers, utilities, and other downloads

Install Intel ANS teaming software

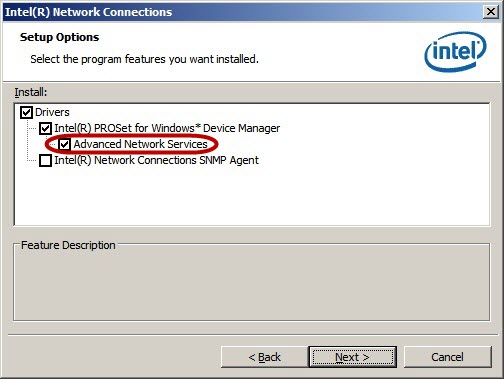

Intel ANS is installed by default along with Intel® PROSet for Windows* Device Manager. See the image below.

When you run the installation from the CD included with your software or from downloaded software, the Setup Option gives you the choice to install Intel ANS. The default selection is Intel ANS, so no special action is required during installation.

If you uncheck Intel ANS during installation, you need to modify the installation and select Intel ANS as an installation option.

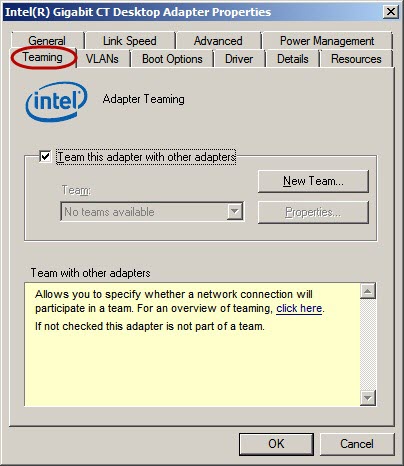

If your adapter supports teaming, then a Teaming tab displays in Windows Device Manager after installing the software. Use the New Team option and follow the wizard to create a team.

Teaming options are supported for Windows versions where the following Intel adapters receive full software support:

- Supported Operating Systems for Retail Intel® Ethernet Adapters provides information on adapters with full support for Windows* 7 and later.

- Supported Operating Systems for Intel® Ethernet Controllers (LOM) provides information on adapters with full support for Windows* 7 and later.

When creating a team on a supported adapter, ports on non-supported adapters can display in the Intel® PROSet teaming wizard. Any port that displays in the Intel PROSet teaming wizard can be included in a team including older Intel adapters and non-Intel adapters.

Intel® PRO/100 Adapters and PRO/1000 adapters that plug into PCI or PCI-X* slots don’t support Intel® ANS teaming in any Windows versions later than Windows Vista and Windows Server 2008.

Some advanced features, including hardware offloading, are automatically disabled when non-Intel adapters are team members to ensure a common feature set.

TCP Offload Engine (TOE) enabled devices can’t be added to a team and don’t display in the list of available adapters.

Microsoft Windows Server 2012* NIC teaming

Windows Server 2012 adds support for NIC teaming, also known as Load Balancing and Failover (LBFO). Intel ANS teaming and VLANs aren’t compatible with Microsoft LBFO teams. Intel® PROSet blocks a member of an LBFO team from being added to an Intel ANS team or VLAN. Don’t add a port that is part of an Intel ANS team or VLAN to an LBFO team. Adding a port can cause system instability.

If you use an Intel ANS team member or VLAN in an LBFO team, use the following procedure to restore your configuration:

- Reboot the server.

- Remove the LBFO team. Even though LBFO team creation failed, after a reboot Server Manager reports that LBFO is enabled, and the LBFO interface is present in the NIC Teaming GUI.

- Remove the Intel ANS teams and VLANs involved in the LBFO team and recreate them. This step is optional but strongly recommended. All bindings are restored when the LBFO team is removed.

| Note | If you add an Intel® Active Management Technology (Intel® AMT) enabled port to an LBFO team, don’t set the port to Standby in the LBFO team. If you set the port to standby, you can lose Intel AMT functionality. |

Teaming features

Teaming features include failover protection, increased bandwidth throughput aggregation, and balancing of traffic among team members. Teaming Modes are AFT, SFT, ALB, Receive Load Balancing (RLB), SLA, and IEEE 802.3ad Dynamic Link Aggregation.

Features available using Intel ANS include:

- Fault Tolerance

If the primary adapter, its cabling, or the link partner fails, Intel ANS uses one or more secondary adapters to take over. Designed to guarantee server availability to the network.

- Link Aggregation

Combines multiple adapters into a single channel to provide greater bandwidth. Bandwidth increase is only available when connecting to multiple destination addresses. ALB mode provides aggregation for transmission only, while RLB, SLA, and IEEE 802.3ad dynamic link aggregation modes provide aggregation in both directions. Link aggregation modes require switch support, while ALB and RLB modes can be used with any switch.

- Load Balancing

The distribution of the transmission and reception load among aggregated network adapters. An intelligent adaptive agent in the Intel ANS driver repeatedly analyzes the traffic flow from the server and distributes the packets based on destination addresses. In IEEE 802.3ad modes, the switch provides load balancing on incoming packets.

Note: Load balancing in ALB mode can only occur on Layer 3 routed protocols (IP and NCP IPX). Load balancing in RLB mode can only occur for TCP/IP. Non-routed protocols are transmitted only over the primary adapter.
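The load-balancing description above says that ANS distributes transmit packets by destination address and sends non-routed protocols only over the primary adapter. The following Python sketch models only that mapping. It is a simplified static model, not Intel's algorithm. The real "intelligent adaptive agent" re-analyzes traffic over time, whereas here a CRC32 hash of the destination IP picks the port. That hash is an assumption for illustration, and the first list entry stands in for the primary adapter.

```python
import zlib
from typing import List


def pick_tx_port(ports: List[str], dest_ip: str,
                 routed: bool = True, broadcast: bool = False) -> str:
    """Choose the team member that transmits a frame (simplified model).

    ports[0] is treated as the primary adapter. Non-routed protocols and
    broadcast/multicast frames always use the primary; routed unicast frames
    are spread across members by a stable hash of the destination address.
    """
    if not routed or broadcast:
        return ports[0]
    index = zlib.crc32(dest_ip.encode("ascii")) % len(ports)
    return ports[index]


if __name__ == "__main__":
    team = ["port1", "port2"]
    for ip in ["10.0.0.5", "10.0.0.6", "10.0.0.7"]:
        print(ip, "->", pick_tx_port(team, ip))
    print("NetBEUI frame ->", pick_tx_port(team, "10.0.0.5", routed=False))
```

In this model, every frame to one destination maps to the same port, so a single client never gets more than one adapter's bandwidth. This matches the article's note that aggregation only helps when traffic goes to multiple destination addresses.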

Teaming modes

- Adapter Fault Tolerance (AFT)

Allows mixed models and mixed connection speeds as long as there is at least one Intel® PRO server adapter in the team. A ‘failed’ primary adapter passes its MAC and Layer 3 address to the failover (secondary) adapter. All adapters in the team should be connected to the same hub or switch with Spanning Tree Protocol (STP) set to Off.

- Switch Fault Tolerance (SFT)

Uses two adapters connected to two switches. It provides a fault tolerant network connection if the first adapter, its cabling, or the switch fails. Only two adapters can be assigned to an SFT team.

Note:

- Don't put clients on the SFT team's link partner switches. They don't pass traffic to the partner switch on failure.

- Spanning Tree Protocol (STP) must be running on the network to make sure that loops are eliminated.

- Turn off STP on the incoming ports of the switches directly connected to the adapters in the team, or configure ports for PortFast.

- Only 802.3ad DYNAMIC mode allows failover between teams.

Diagram of Switch Fault Tolerance (SFT) Team with Spanning Tree Protocol (STP)

- Adaptive Load Balancing (ALB)

Offers increased network bandwidth by allowing transmission over two to eight ports to multiple destination addresses. It incorporates AFT. Only the primary adapter receives incoming traffic. Broadcasts/multicasts and non-routed protocols are only transmitted via the primary adapter in the team. The Intel® ANS software load balances transmissions based on destination address and can be used with any switch. Simultaneous transmission only occurs to multiple addresses.

- Receive Load Balancing (RLB)

- Offers increased network bandwidth by allowing reception over two to eight ports from multiple addresses.

- Can only be used in conjunction with ALB.

- RLB is enabled by default when an ALB team is configured unless you’re using Microsoft Hyper-V*.

- RLB mode isn’t compatible with Microsoft Hyper-V*. Use Virtual Machine Load Balancing mode if you want to balance both transmit and receive traffic.

- Only the adapters connected at the fastest speed are used to load balance incoming TCP/IP traffic. Regardless of speed, the primary adapter receives all other RX traffic.

- Can be used with any switch. Any failover increases network latency until ARPs are resent. Simultaneous reception only occurs from multiple clients.
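The RLB rules above can be written as a small planning function. Only adapters linked at the fastest speed balance incoming TCP/IP traffic, and the primary receives everything else regardless of its speed. This Python sketch assigns clients to eligible ports round-robin. That assignment is a hypothetical choice, because the article does not say how ANS picks among those ports.

```python
from itertools import cycle
from typing import Dict, List


def plan_rlb(speeds_mbps: Dict[str, int], tcp_ip_clients: List[str]) -> Dict[str, str]:
    """Map each TCP/IP client to a receiving port (hypothetical round-robin).

    Only ports linked at the team's fastest speed are eligible.
    """
    fastest = max(speeds_mbps.values())
    eligible = [port for port, speed in speeds_mbps.items() if speed == fastest]
    ports = cycle(eligible)
    return {client: next(ports) for client in tcp_ip_clients}


def rx_port(plan: Dict[str, str], primary: str, client: str, is_tcp_ip: bool) -> str:
    """Non-TCP/IP traffic (or traffic from unplanned clients) uses the primary."""
    if is_tcp_ip and client in plan:
        return plan[client]
    return primary


if __name__ == "__main__":
    speeds = {"port1": 1000, "port2": 1000, "port3": 100}
    plan = plan_rlb(speeds, ["clientA", "clientB", "clientC"])
    print(plan)
    # port3 is the (slower) primary: it still receives non-TCP/IP traffic.
    print(rx_port(plan, "port3", "clientA", is_tcp_ip=False))
```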

- Virtual Machine Load Balancing (VMLB)

VMLB teaming mode was created specifically for use with Microsoft Hyper-V*. VMLB provides transmit and receive traffic load balancing across virtual machines bound to the team interface. The VMLB team also provides fault tolerance in the event of switch port, cable, or adapter failure. This teaming type works with any switch.

The driver analyzes the transmit and receive load on each member adapter and balances the traffic across member ports. In a VMLB team, each Virtual Machine is associated with one team member port for its TX and RX traffic.

For example: If you have three virtual machines and two member ports, and if VM1 has twice as much traffic as the combination of VM2 and VM3, then VM1 is assigned to team member port 1, and VM2 and VM3 share team member port 2.
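The article does not describe how the VMLB driver decides which VM goes to which port. One heuristic that reproduces the example above is a greedy "largest first" assignment: sort the VMs by traffic and give each one to the port with the least load so far. The following Python sketch implements that heuristic. It uses made-up traffic figures in which VM1 carries twice the combined load of VM2 and VM3.

```python
from typing import Dict, List, Tuple


def assign_vms(vm_loads: Dict[str, float],
               ports: List[str]) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Greedy largest-first assignment of VMs to team member ports.

    Returns (assignment, per-port load). Each VM uses exactly one port for
    both TX and RX, as in a VMLB team.
    """
    port_load = {port: 0.0 for port in ports}
    assignment = {}
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        target = min(ports, key=lambda p: port_load[p])  # least-loaded port
        assignment[vm] = target
        port_load[target] += load
    return assignment, port_load


if __name__ == "__main__":
    # Hypothetical traffic figures: VM1 = 2 x (VM2 + VM3)
    loads = {"VM1": 60, "VM2": 20, "VM3": 10}
    print(assign_vms(loads, ["port1", "port2"]))
```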

If only one virtual NIC is bound to the team, or if Hyper-V is removed, then the VMLB team acts like an AFT team.

Note:

- VMLB doesn't load balance non-routed protocols such as NetBEUI and some IPX* traffic.

- VMLB supports from two to eight ports per team.

- You can create a VMLB team with mixed-speed adapters. The load is balanced according to the lowest common denominator of adapter capabilities, and the bandwidth of the channel.

Link Aggregation (LA), Cisco* Fast EtherChannel (FEC), and Gigabit EtherChannel (GEC)

- Modes replaced by Static Link Aggregation mode.

- See IEEE 802.3ad Static Link Aggregation mode below.

IEEE 802.3ad

This standard has been implemented in two ways:

- Static Link Aggregation (SLA):

- Equivalent to EtherChannel or Link Aggregation.

- Must be used with an 802.3ad, FEC/GEC, or Link Aggregation capable switch.

- DYNAMIC mode

- Requires 802.3ad DYNAMIC capable switches.

- Active aggregators in software determine team membership between the switch and the ANS software (or between switches).

- There is a maximum of two aggregators per server and you must choose either maximum bandwidth or maximum adapters.

Both 802.3ad modes include adapter fault tolerance and load balancing capabilities. However, in DYNAMIC mode, load balancing occurs within only one team at a time.
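In DYNAMIC mode, the description above allows at most two aggregators per server. Load balancing happens within only one of them at a time, and that one is chosen for either maximum bandwidth or maximum adapters. The following Python sketch illustrates the choice under one simplifying assumption: ports cabled to the same switch form one aggregator. The policy names `"bandwidth"` and `"adapters"` and the data layout are hypothetical, not settings exposed by Intel PROSet.

```python
from collections import defaultdict
from typing import List, Tuple


def choose_aggregator(adapters: List[Tuple[str, str, int]],
                      policy: str) -> Tuple[str, List[str]]:
    """Pick the active aggregator for a DYNAMIC 802.3ad team (simplified).

    adapters: (port name, link-partner switch, link speed in Mbps).
    Ports attached to the same switch are grouped into one aggregator.
    Returns (switch, sorted port names) of the aggregator that carries traffic.
    """
    groups = defaultdict(list)
    for port, switch, speed in adapters:
        groups[switch].append((port, speed))
    if len(groups) > 2:
        raise ValueError("at most two aggregators per server")

    if policy == "bandwidth":
        def score(item):
            return sum(speed for _, speed in item[1])
    elif policy == "adapters":
        def score(item):
            return len(item[1])
    else:
        raise ValueError(f"unknown policy: {policy!r}")

    switch, members = max(groups.items(), key=score)
    return switch, sorted(port for port, _ in members)


if __name__ == "__main__":
    ports = [("p1", "swA", 1000), ("p2", "swA", 1000),
             ("p3", "swB", 100), ("p4", "swB", 100), ("p5", "swB", 100)]
    print(choose_aggregator(ports, "bandwidth"))
    print(choose_aggregator(ports, "adapters"))
```

The example shows why the two policies can disagree. In this model, two gigabit ports win on bandwidth, and three 100 Mbps ports win on adapter count.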

Available features and modes:

| Features | AFT | ALB | RLB | SLA | Dynamic 802.3ad |
|---|---|---|---|---|---|
| Fault Tolerance | X | X | X | X | X |
| Link Aggregation | | X | X | X | X |
| Load Balancing | | Tx | Tx/Rx | Tx/Rx | Tx/Rx |
| Layer 3 Address Aggregation | | X | IP only | X | X |
| Layer 2 Address Aggregation | | | | X | X |
| Mixed Speed Adapters* | X | X | X | | X |

- *You can mix different adapter types for any mode, but you must run all the adapters in the team at the same speed when using Link Aggregation mode. Mixed-speed connections are possible in AFT, ALB, RLB, SFT, and 802.3ad modes.

- Multi-vendor teaming (MVT) is applicable to all modes in Microsoft Windows.
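You can check the mixed-speed rules the same way. The following Python sketch encodes the modes that the article lists as allowing mixed speeds. For Static Link Aggregation, it reads "lowest common denominator" as every member running at the slowest member's speed. That reading is an assumption for illustration. So are the mode abbreviations used as keys and the aggregate figure, which is a theoretical ceiling reached only with multiple destination addresses.

```python
from typing import List, Tuple

# Modes the article says accept mixed-speed connections.
MIXED_SPEED_MODES = {"AFT", "SFT", "ALB", "RLB", "802.3ad-dynamic"}


def mixed_speed_allowed(mode: str) -> bool:
    """True if the article lists this mode as supporting mixed speeds."""
    return mode in MIXED_SPEED_MODES


def sla_speed(capabilities_mbps: List[int]) -> Tuple[int, int]:
    """Per-port and theoretical aggregate speed of a Static Link Aggregation team.

    Assumes every member runs at the slowest member's speed.
    """
    per_port = min(capabilities_mbps)
    return per_port, per_port * len(capabilities_mbps)


if __name__ == "__main__":
    print(mixed_speed_allowed("SLA"))  # SLA is not in the mixed-speed list
    print(sla_speed([1000, 1000, 100]))
```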

Settings (roles)

For AFT, SFT, ALB, and RLB modes you can choose a primary and secondary role for selected adapters.

- The primary adapter is the adapter that carries the most traffic.

- With AFT and SFT, it’s the only adapter used until that link fails.

- With ALB and non-routable protocols (anything other than IP or Novell IPX), it’s the only adapter used. It’s also the only adapter used for broadcast and multicast traffic.

- With RLB, all traffic other than IP traffic is passed on the primary adapter, regardless of its speed.

- If you choose the primary adapter yourself (rather than letting the software choose it), failback to that adapter is allowed once it is active again after a failure.

Note: When a primary adapter is removed from a team, its MAC address stays with the team until the server is rebooted. Don't add that adapter back to the same network until the server it was removed from has been rebooted.

- The secondary adapter becomes the primary (if possible) at failure of the primary, or its cable or link partner.

- Multi-vendor teaming (MVT) requires the Intel adapter to be set as primary adapter of the team.
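You can model the primary/secondary behavior of AFT and SFT teams as a small state machine. There is one active adapter, failover moves to the next adapter that still has link, and failback happens only when you chose the primary yourself. This Python class is a toy model that assumes this reading of the failback rule above. The class and method names are hypothetical.

```python
from typing import List, Optional


class FailoverTeam:
    """Toy model of AFT/SFT roles: exactly one adapter is active at a time."""

    def __init__(self, members: List[str], preferred_primary: Optional[str] = None):
        self.members = list(members)        # order = software's preference
        self.preferred = preferred_primary  # user-chosen primary, or None
        self.link_up = {m: True for m in self.members}
        self.active: Optional[str] = preferred_primary or self.members[0]

    def link_change(self, member: str, up: bool) -> None:
        """Record a link state change and update the active adapter."""
        self.link_up[member] = up
        if self.preferred is not None and self.link_up[self.preferred]:
            # Failback: a user-chosen primary takes over again once it has link.
            self.active = self.preferred
        elif self.active is None or not self.link_up[self.active]:
            # Failover: the first member that still has link becomes primary.
            up_members = [m for m in self.members if self.link_up[m]]
            self.active = up_members[0] if up_members else None


if __name__ == "__main__":
    team = FailoverTeam(["port1", "port2"], preferred_primary="port1")
    team.link_change("port1", up=False)
    print(team.active)  # port2 after failover
    team.link_change("port1", up=True)
    print(team.active)  # port1 after failback
```

Without a user-chosen primary, the model stays on the secondary after the original adapter recovers, so traffic does not move twice.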

Test switch configuration

A utility in Intel® PROSet for Windows* Device Manager on the Advanced Settings Team page allows Intel® ANS software to query the switch partner for configuration settings. If the switch is configured differently than necessary for the team mode, a troubleshooting page lists possible corrective actions. When you run this test, the team temporarily loses network connectivity.

See Intel PROSet for Windows Device Manager Help for limitations.

Implementation considerations, including throughput and resource issues

- You can use static IP addresses for most servers, including a server with a team of NICs. You can also use DHCP for Server 2012 configuration. If you have no DHCP server, you need to manually assign IP addresses to your teams when they're created.

- For all team types except SFT, disable Spanning Tree Protocol (STP) on switch ports connected to teamed adapters. Disabling STP prevents data loss when the primary adapter is returned to service (failback). You can also configure an activation delay on the adapters to prevent data loss when STP is used. Set the activation delay on the advanced tab of team properties.

- Not all team types are available on all operating systems and all adapters.

- Hot Plug operations with non-Intel adapters that are part of a team cause system instability. Restart the system or reload the team after doing Hot Plug operations with a team that includes a non-Intel adapter.

- You can add Intel® Active Management Technology (Intel® AMT) enabled devices to Adapter Fault Tolerance (AFT), Switch Fault Tolerance (SFT), and Adaptive Load Balancing (ALB) teams. All other team types aren’t supported. The Intel AMT enabled device must be designated as the primary adapter for the team.

- Teams display as Virtual Adapters in Windows. Use the remove team option in Intel PROSet for Windows* Device Manager to disable or remove virtual adapters. Deleting virtual teams directly in Windows Device Manager can cause severe consequences.

- To avoid repeated unnecessary failovers, disable Spanning Tree Protocol (STP) for all modes except SFT.

- Some operating systems require a reboot after any system configuration change.

- Configure team member features identically; otherwise, failover and team functionality are affected, with possibly severe consequences.

- Virtual Adapters require memory resources beyond the physical adapters. The physical adapter buffers or descriptors might need to be increased when set into a team. If the system is used more heavily, consider tuning both the base adapter and the virtual adapter settings for either RX or TX traffic.

- You can have up to eight ports in a team. You can mix onboard and PCIe ports, but environmental factors, such as OS, CPUs, RAM, bus, or switch capabilities, can limit the benefits of more adapters and determine your total throughput capability. SFT can only use two adapters.

- For Link Aggregation/FEC/GEC/802.3ad, you must match the switch capabilities for aggregation. Only use 802.3ad DYNAMIC mode with switches capable of DYNAMIC 3ad active aggregation.

- For AFT and SFT modes, only one adapter is active at a time. With ALB, only one adapter receives, while all adapters transmit IP or NetWare* IPX packets, but never to the same destination address simultaneously.

- Throughput is always higher to multiple addresses than to a single address, regardless of the number of adapters.

- In Windows NT* 4.0, there’s a timer for each adapter to prevent a non-working teamed adapter from holding up the boot to desktop. If you are using mixed speed adapters (Intel® PRO/100 with PRO/1000), and using teaming or a large number of VLANs, you can encounter a load time longer than the timer’s limit. If this happens, disable the timer for each adapter in the team in the registry under the DWORD BindTimerTimeout:

\parameters\iansprotocol\BindTimerTimeout

Set the value to 0, where N = the card instance.

You must repeat these steps whenever Intel PROSet for Windows Device Manager changes.

- To ensure optimal functionality when using Multi-vendor Teaming (MVT), make sure drivers are up to date on your Intel adapter and Non-Intel adapter. Contact the manufacturer of the non-Intel adapter and see if they support teaming with other manufacturers.

- Jumbo Frames, Offloading Engines and QoS priority tagging aren’t supported in Multi-vendor Teaming (MVT).

- VLAN over Multi-vendor Teaming (MVT) isn’t supported on Windows* XP/2003.

Linux* Ethernet Bonding (channel bonding or teaming)

Channel bonding documentation is available in the Linux kernel source: Linux Ethernet Bonding Driver HOWTO.