- Replacing the standard Linux Bridge with Open vSwitch

- OVS vs Linux Bridge: Who’s the Winner?

- OVS vs Linux Bridge: What Are They?

- OVS vs Linux Bridge: Advantages And Disadvantages of OVS

- OVS vs Linux Bridge: Strengths And Limitations of Linux Bridge

- OVS vs Linux Bridge: Who’s the Winner?

- linux bridge vs ovs Bridge

- bridge-utils vs OVS

- Open vSwitch

- Installation

- Configuration

- Overview

- Bridges

- Bonds

- VLANs Host Interfaces

- Rapid Spanning Tree (RSTP)

- Note on MTU

- Examples

- Example 1: Bridge + Internal Ports + Untagged traffic

- Example 2: Bond + Bridge + Internal Ports

- Example 3: Bond + Bridge + Internal Ports + Untagged traffic + No LACP

- Example 4: Rapid Spanning Tree (RSTP) — 1Gbps uplink, 10Gbps interconnect

- Multicast

- Using Open vSwitch in Proxmox

Replacing the standard Linux Bridge with Open vSwitch

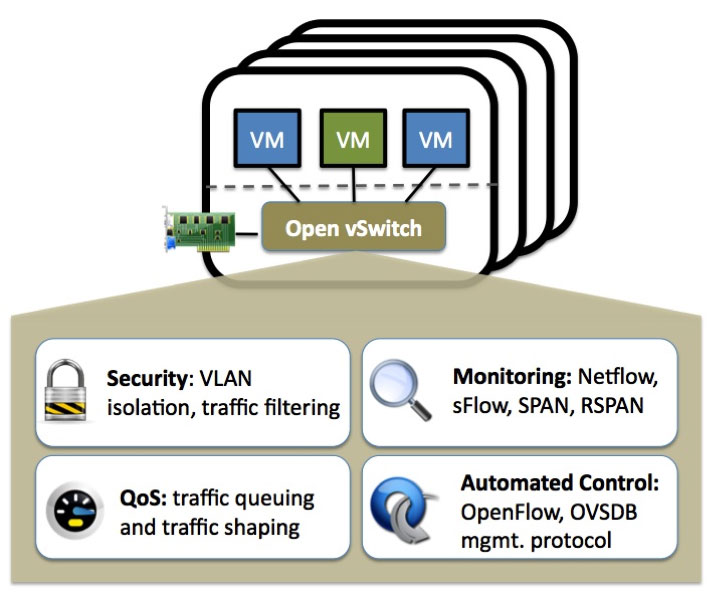

Today Open vSwitch (hereafter OvS) is the de facto standard for building SDN. It is used in projects such as OpenStack and Xen Cloud Platform. OvS has quite a lot of capabilities. In this note I want to look at a fairly modest topic: using OvS as a replacement for the standard Linux bridge in libvirt.

The configuration described below requires local access to the server console, because network connectivity will be unavailable during the process. Installation is shown using Fedora Linux as an example.

Installation is done with the standard package manager:

Unfortunately, this configuration is rather exotic and cannot coexist with NetworkManager, so we disable NetworkManager:

Assuming our server has a single physical interface, enp0s31f6, the basic Open vSwitch configuration looks like this:
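A minimal sketch of that base configuration, assuming the bridge is named `ovsbr0` (a hypothetical name) and that `ovs-vsctl` from the openvswitch package is on the PATH. By default it only prints the commands; run it with `dry_run=False` from the local console, since the host loses connectivity once `enp0s31f6` is attached to the bridge and stays offline until its IP configuration is moved to `ovsbr0`.

```python
import subprocess

def base_ovs_commands(bridge: str, nic: str) -> list[list[str]]:
    """ovs-vsctl calls that create a bridge and attach one physical NIC to it."""
    return [
        # --may-exist makes both calls idempotent if the bridge/port already exist
        ["ovs-vsctl", "--may-exist", "add-br", bridge],
        ["ovs-vsctl", "--may-exist", "add-port", bridge, nic],
    ]

def apply(commands: list[list[str]], dry_run: bool = True) -> None:
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # raises CalledProcessError on failure

if __name__ == "__main__":
    apply(base_ovs_commands("ovsbr0", "enp0s31f6"))
```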

I deliberately do not describe the changes to network-scripts, since they are fairly trivial.
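For readers who want the network-scripts side anyway, a sketch that renders the two ifcfg files used by Open vSwitch's RHEL/Fedora integration (`DEVICETYPE=ovs` with `TYPE=OVSBridge` or `TYPE=OVSPort`). The bridge name `ovsbr0` and the static documentation-range address are assumptions; a DHCP-addressed OVS bridge is configured with different keys in that integration.

```python
import ipaddress

def ifcfg(fields: dict[str, str]) -> str:
    """Render KEY=value lines in the ifcfg format."""
    return "".join(f"{key}={value}\n" for key, value in fields.items())

def ovs_ifcfg_files(bridge: str, nic: str, address: str) -> dict[str, str]:
    """Return {filename: contents} for an OVS bridge with a static IP and one port."""
    iface = ipaddress.ip_interface(address)      # e.g. "192.0.2.10/24"
    bridge_cfg = {
        "DEVICE": bridge, "ONBOOT": "yes", "DEVICETYPE": "ovs", "TYPE": "OVSBridge",
        "BOOTPROTO": "static", "IPADDR": str(iface.ip),
        "NETMASK": str(iface.network.netmask), "HOTPLUG": "no",
    }
    # The physical NIC carries no address of its own; it is just a bridge port
    port_cfg = {
        "DEVICE": nic, "ONBOOT": "yes", "DEVICETYPE": "ovs", "TYPE": "OVSPort",
        "OVS_BRIDGE": bridge, "BOOTPROTO": "none", "HOTPLUG": "no",
    }
    return {f"ifcfg-{bridge}": ifcfg(bridge_cfg), f"ifcfg-{nic}": ifcfg(port_cfg)}

if __name__ == "__main__":
    for name, body in ovs_ifcfg_files("ovsbr0", "enp0s31f6", "192.0.2.10/24").items():
        print(f"# /etc/sysconfig/network-scripts/{name}\n{body}")
```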

libvirt can be configured to work with OvS only by editing the virtual machine's XML configuration; the GUI offers no such option.

Find the network interface section of the configuration, which looks roughly like this:

and change it to roughly the following:
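The edit can be sketched with Python's standard `xml.etree.ElementTree`, assuming the section is either a `type='network'` interface on libvirt's `default` network or a `type='bridge'` interface on a Linux bridge, and that the OvS bridge is called `ovsbr0` (hypothetical). It switches the interface to `type='bridge'`, points `<source>` at the OvS bridge, and adds `<virtualport type='openvswitch'/>`, which is what makes libvirt plug the tap device into OvS instead of a Linux bridge. In practice the same change is made by hand with `virsh edit <domain>`.

```python
import xml.etree.ElementTree as ET

def to_ovs_interface(interface_xml: str, ovs_bridge: str) -> str:
    """Rewrite a libvirt <interface> element so it attaches to an OvS bridge."""
    iface = ET.fromstring(interface_xml)
    if iface.tag != "interface":
        raise ValueError("expected an <interface> element")
    iface.set("type", "bridge")
    source = iface.find("source")
    if source is None:
        source = ET.SubElement(iface, "source")
    source.attrib.clear()                  # drop network='default' or the old bridge
    source.set("bridge", ovs_bridge)
    if iface.find("virtualport") is None:
        # libvirt adds the <parameters interfaceid=.../> child itself on save
        ET.SubElement(iface, "virtualport", {"type": "openvswitch"})
    return ET.tostring(iface, encoding="unicode")

before = """<interface type='network'>
  <mac address='52:54:00:12:34:56'/>
  <source network='default'/>
  <model type='virtio'/>
</interface>"""
print(to_ovs_interface(before, "ovsbr0"))
```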

After the configuration is saved, the interfaceid parameter is added automatically:

After the virtual machine starts, you can see that the port has been successfully added to the OvS configuration:

Source

OVS vs Linux Bridge: Who’s the Winner?

The battle between OVS (Open vSwitch) and Linux Bridge has been going on for a while in the field of virtual switching. Some hold that Open vSwitch offers more functions and better performance, and that it plays the most important role in virtual switching today. Others argue that Linux Bridge, having been used for years, is more mature than OVS. So, OVS vs Linux Bridge: who's the winner?

OVS vs Linux Bridge: What Are They?

Open vSwitch (OVS) is an open-source multilayer virtual switch. It usually operates as a software-based network switch or as the control stack for dedicated switching hardware. Designed to enable effective network automation through programmatic extensions, OVS also supports standard management interfaces and protocols, including NetFlow, sFlow, CLI, IPFIX, RSPAN, LACP, and 802.1ag. In addition, Open vSwitch supports transparent distribution across multiple physical servers, similar to proprietary virtual switch solutions such as the VMware vSphere Distributed Switch (vDS). In short, OVS is used with hypervisors to interconnect virtual machines within a host, and virtual machines on different hosts across networks.

As mentioned above, Open vSwitch is a multilayer virtual switch that can work as a Layer 2 or Layer 3 switch, whereas the Linux bridge behaves only like a Layer 2 switch. Traditionally, a bridge is placed between two separate groups of computers that communicate with each other, but mostly within their own group. The Linux bridge consists of four major components: a set of network ports, a control plane, a forwarding plane, and a MAC learning database. With these components, it can be used to forward packets on routers, on gateways, or between VMs and network namespaces on a host. It also supports STP, VLAN filtering, and multicast snooping.

OVS vs Linux Bridge: Advantages And Disadvantages of OVS

Compared to Linux Bridge, there are several advantages of Open vSwitch:

- Easier network management – Open vSwitch makes it convenient for administrators to manage and monitor network status and traffic flows in a cloud environment.

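- Support for more tunnel protocols – OVS supports GRE, VXLAN, IPsec, and others directly as bridge ports. Linux Bridge has no tunneling of its own and relies on separate kernel tunnel devices (for example GRE or VXLAN interfaces) attached to it.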

- Incorporated in SDN – Open vSwitch is part of the software-defined networking (SDN) ecosystem: it can be driven by an OpenStack plug-in or directly by an SDN controller such as OpenDaylight.
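To make the tunnel point concrete, a sketch that builds the `ovs-vsctl` call adding a VXLAN tunnel port to an OVS bridge. The bridge name `br0`, port name `vx1`, peer address 192.0.2.2, and VNI 5000 are placeholders; `type=vxlan`, `options:remote_ip`, and `options:key` (the VNI) are standard OVS tunnel interface settings.

```python
import ipaddress
from typing import Optional

def vxlan_port_cmd(bridge: str, port: str, remote_ip: str,
                   vni: Optional[int] = None) -> list[str]:
    """ovs-vsctl call that adds a VXLAN tunnel port to an OVS bridge."""
    ipaddress.ip_address(remote_ip)          # raises ValueError on a malformed address
    argv = ["ovs-vsctl", "add-port", bridge, port,
            "--", "set", "interface", port, "type=vxlan",
            f"options:remote_ip={remote_ip}"]
    if vni is not None:
        if not 0 <= vni < 2**24:             # VXLAN network identifiers are 24-bit
            raise ValueError("VNI must fit in 24 bits")
        argv.append(f"options:key={vni}")
    return argv

print(" ".join(vxlan_port_cmd("br0", "vx1", "192.0.2.2", vni=5000)))
```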

Despite these advantages, Open vSwitch has some challenges:

- Lack of stability – Open vSwitch has had stability problems such as kernel panics, ovs-vswitchd segfaults, and data corruption.

- Complex operation – Open vSwitch is a complex solution with many functions, which makes it hard to learn, install, and operate.

OVS vs Linux Bridge: Strengths And Limitations of Linux Bridge

Linux Bridge is still popular mainly for the following reasons:

- Stable and reliable – Linux Bridge has been used for years, and its stability and reliability are proven.

- Easy for installation – Linux Bridge is a part of standard Linux installation and there are no additional packages to install or learn.

- Convenient for troubleshooting – Linux Bridge is a simple solution whose operation is simpler than that of Open vSwitch, which makes troubleshooting easier.

However, there are some limitations:

- Fewer functions – Linux Bridge doesn’t support the Neutron DVR, the newer and more scalable VXLAN model, and some other functions.

- Fewer supporters – Many enterprises wanted to ensure there was an open model for integrating their services into OpenStack. Linux Bridge could not meet this demand, so it has fewer users than Open vSwitch.

OVS vs Linux Bridge: Who’s the Winner?

OVS vs Linux Bridge: who's the winner? Actually, both are good network solutions, and each has its appropriate usage scenarios. OVS has more functions for centralized management and control, while Linux Bridge offers good stability that suits large-scale network deployments. All in all, the winner is the one that meets your needs.

Source

linux bridge vs ovs Bridge

vikozo

hello

linux bridge vs ovs Bridge

in which situation should each of these bridges be used?

is it wise to mix the two bridge types?

have a nice day

vinc

narrateourale

I bet that in 99.9% of cases you will be happy with the regular Linux bridge.

Can you tell a bit more what you actually need?

hvisage

Reason for using Open vSwitch: fancy VLAN support

i.e. I have VLAN 666, which is the native-untagged VLAN on the vRack/backend. The VMs that need Internet access I bind to VLAN 666, while the rest communicate on their own VLANs.

I needed to map another interface to another VLAN on another host. That physical interface was originally bound to a Linux bridge, and I didn't want to disturb it yet, so I used a veth link between the Linux bridge and Open vSwitch. The port on Open vSwitch was assigned a VLAN that was trunked via the native-untagged link above, on another VLAN, to the remote host's VLAN.

*Lately* Linux bridges have better 802.1Q VLAN tagging support, but that wasn't the case a long time ago, when Open vSwitch was the only «real» alternative for VLANs and trunking in the same virtual switch.

I still have some setups I haven't migrated to VLANs yet, where I use multiple Linux bridges to simulate VLANs.
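The veth link described above could be set up roughly as follows. This is a sketch, not hvisage's actual configuration: the Linux bridge `vmbr1`, the OVS bridge `vmbr0`, the veth names, and VLAN 100 are all hypothetical. One end of the veth pair is enslaved to the Linux bridge, and the other becomes an OVS access port in the chosen VLAN.

```python
def veth_link_cmds(linux_br: str, ovs_br: str, vlan: int,
                   lb_end: str = "veth-lb", ovs_end: str = "veth-ovs") -> list[list[str]]:
    """Commands that join a Linux bridge to an OVS bridge through a veth pair."""
    if not 1 <= vlan <= 4094:
        raise ValueError("VLAN ID must be 1-4094")
    return [
        ["ip", "link", "add", lb_end, "type", "veth", "peer", "name", ovs_end],
        ["ip", "link", "set", lb_end, "master", linux_br],   # Linux-bridge side
        ["ip", "link", "set", lb_end, "up"],
        ["ip", "link", "set", ovs_end, "up"],
        # OVS side: an access port, so frames from the Linux bridge land in `vlan`
        ["ovs-vsctl", "add-port", ovs_br, ovs_end, f"tag={vlan}"],
    ]

for argv in veth_link_cmds("vmbr1", "vmbr0", 100):
    print(" ".join(argv))
```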

spirit

Well, VLAN support has existed since kernel 3.8, so since 2013.

The only advantage of OVS could be DPDK, but it's not currently supported by Proxmox.

Maybe NetFlow/sFlow support too (but that can also be done with an external daemon on a Linux bridge).

You can do VLAN, QinQ, VXLAN, BGP EVPN, GRE tunnels, and IPIP tunnels with the Linux bridge without any problem.

(Cumulus Linux has done a great job implementing all of this over the last few years, as they use the Linux bridge in their switch OS.)

«Are you looking for a French Proxmox training center?
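For comparison with the OVS approach, a sketch of a VLAN-aware (VLAN-filtering) Linux bridge built with iproute2's `ip` and `bridge` tools, where one bridge carries several VLANs much like a single OVS bridge. The names `br0`, `eth0`, `tap100i0` and the VLAN IDs are placeholders.

```python
def vlan_aware_bridge_cmds(bridge: str, uplink: str, vids: list[int],
                           vm_port: str, vm_vid: int) -> list[list[str]]:
    """iproute2 commands for a VLAN-filtering Linux bridge with a tagged uplink."""
    if vm_vid not in vids:
        raise ValueError("the VM's VLAN must also be allowed on the uplink")
    cmds = [
        ["ip", "link", "add", "name", bridge, "type", "bridge", "vlan_filtering", "1"],
        ["ip", "link", "set", uplink, "master", bridge],
    ]
    # Uplink behaves as a trunk: each VLAN is carried tagged
    cmds += [["bridge", "vlan", "add", "dev", uplink, "vid", str(v)] for v in vids]
    cmds += [
        ["ip", "link", "set", vm_port, "master", bridge],
        # Drop the default VLAN 1 that a new bridge port receives...
        ["bridge", "vlan", "del", "dev", vm_port, "vid", "1"],
        # ...and make the VM port an access port: untagged frames belong to vm_vid
        ["bridge", "vlan", "add", "dev", vm_port, "vid", str(vm_vid), "pvid", "untagged"],
    ]
    return cmds

for argv in vlan_aware_bridge_cmds("br0", "eth0", [100, 200], "tap100i0", 100):
    print(" ".join(argv))
```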

hvisage

2000, 3.8 is quite «new»

spirit

> 2000, 3.8 is quite «new»

2010) if you compare about the vlan feature.

Personally, I still prefer the Linux bridge over OVS (if the OVS daemon is killed or hit by the OOM killer, you don't have network anymore :/ , and I have seen a lot of bug reports recently with packet drops and strange stack traces).

hvisage

> 2010) if you compare about the vlan feature.

If the OOM killer kicks in, you are screwed on the hypervisor in any case. The OOM killer is... well, let's say I have a preference for proper OSes like Solaris, and not debate that part.

That said: whichever rocks your dinghy.

For me, OVS just «worked» way back, since before Proxmox moved away from the Red Hat/CentOS kernels, when they still had OpenVZ (yet another not-invented-here issue with the Linux kernel devs, which led to the move to LXC). That was, as I recall, a 2.4/2.6 kernel, so still before 3.8, when I started to use OVS extensively.

So yes, the typical setup would be something like:

Create the OVS bridge, «delete» the physical interface's config, and attach that physical interface as an OVSPort to the bridge.

Then add an OVSIntPort, put the Proxmox host's IP on it, and attach it to the bridge. (I have a slightly more complex setup on my «production» servers, where the physical interface is native-untagged with a VLAN tag like 666, and I attach the OVSIntPort to that.)

For OPNsense/pfSense:

1) use VirtIO and not e1000 — I’ve had lock ups on those e1000 in FreeBSD ;(

2) disable the hardware acceleration — it breaks UDP and thus DHCP

When you attach the OPNsense/pfSense interface, attach it untagged; OVS will then «default» to a trunk-capable port, and you can create the VLAN interfaces inside OPNsense/pfSense.

For the VMs themselves, you add the relevant VLAN tag.

The screenshot of my lab/test server’s network setup inside ProxMox:

kkjensen

I’m just in the middle of trying to implement OVS after reinstalling my 4 node test cluster. The production cluster will be for a small ISP that has a bunch of vlans. I’m used to working with Cisco switches and was hoping to create a 10G trunk through all the nodes and starting/finishing on one of the big edge switches. The regular bridge mode (I’m probably reading old tutorials and forum posts) seemed to require that every vlan get explicitly defined on a port so when I found out about OVS I thought it made sense to set up the cluster using OVS in each node to form the 10G loop and then the virtual machines can all pick up a connection off that loop once it’s in place with all VLANs allowed to flow over it.

Prior to my reinstall I did get a vlan working on one container but I could see that implementing different vlans for all the different services that would someday be required was going to be a pain IMO.

Now that I have OVS installed on new nodes I’m just seeing a new field «OVS options». I was hoping for some GUI implementation that would let you list the vlans and make one a native vlan if required.

I’ve been looking for an ideal tutorial of some sort using proxmox, OVS and ceph. Any recommendations?

Source

bridge-utils vs OVS

Hi all. I'm trying to decide which is better for virtualization on Proxmox: Linux bridge or OVS. On the one hand, the former is simpler, and I don't really need much functionality beyond attaching VMs to a NIC; on the other hand, I'm interested in performance. Maybe someone can share success stories: is it better to master OVS after all, or is it not really needed in this situation?

If you can do without it, it's better to do without it. There are successful deployments of it, but in simple cases there's no point. You'll just run into some kind of trouble, since it's a new thing and not battle-tested in virtualization systems. If you want to be an unpaid tester, go ahead.

Got it, thanks; I'm leaning more toward that option.

If you don't need VLAN functionality, use Linux bridge.

bridge-utils lets you get tags on a vNIC. OVS lets you filter tags on the ports a vNIC is connected to. With bridge-utils this can also be solved with a set of vNICs from different VLANs.

In oVirt 4.0, OVS appeared as the main option, and in 4.1 it became experimental. Apparently the feedback was bad.

Even with VLAN functionality, bridges cope pretty well. Beyond that, it's a different question.

If you're not a hosting provider or a large installation, IMHO you don't need it. Especially in Proxmox. Although some people manage to sell VPS services on it.

If you have containers/Docker and other virtualization, OVS rules.

Source

Open vSwitch

Open vSwitch (openvswitch, OVS) is an alternative to Linux native bridges, bonds, and VLAN interfaces. Open vSwitch supports most of the features you would find on a physical switch, including advanced features such as RSTP, VXLANs, and OpenFlow, and it supports multiple VLANs on a single bridge. If you need these features, it makes sense to switch to Open vSwitch.

Installation

Update the package index and then install the Open vSwitch packages by executing:

Configuration

Overview

Open vSwitch and Linux bonding, bridging, or VLANs MUST NOT be mixed. For instance, do not attempt to add a Linux VLAN interface to an OVS bond, or add a Linux bond to an OVSBridge or vice versa. Open vSwitch is specifically tailored to function within virtualized environments; there is no reason to use the native Linux functionality.

Bridges

A bridge is another term for a switch. It directs traffic to the appropriate interface based on MAC address. Open vSwitch bridges should contain raw Ethernet devices, along with virtual interfaces such as OVSBonds or OVSIntPorts. These bridges can carry multiple VLANs and be broken out into ‘internal ports’ to be used as VLAN interfaces on the host.

It is recommended that the bridge be bound to a trunk port with no untagged VLANs; this means that your bridge itself will never have an IP address. If you need to work with untagged traffic coming into the bridge, it is recommended that you tag it (assign it to a VLAN) on the originating interface before it enters the bridge (you can assign an IP address directly on the bridge for that untagged traffic, but this is not recommended). You can split out your tagged VLANs using virtual interfaces (OVSIntPort) if you need access to those VLANs from your local host. Proxmox will assign each guest VM a tap interface associated with a VLAN, so you do NOT need a bridge per VLAN (as classic Linux networking requires). You should think of your OVSBridge much like a physical hardware switch.

When configuring a bridge, in /etc/network/interfaces, prefix the bridge interface definition with allow-ovs $iface. For instance, a simple bridge containing a single interface would look like:
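A sketch that renders such a simple bridge for `/etc/network/interfaces`, assuming a bridge `vmbr0` with one physical port `eth0` (both names are assumptions). It follows the rules from this section: the bridge stanza is introduced with `allow-ovs`, the port stanza with `allow-<bridge>`, and the port is listed in the bridge's `ovs_ports`.

```python
def stanza(header: list[str], iface: str, method: str,
           options: list[tuple[str, str]]) -> str:
    """One ifupdown stanza: header lines, the iface line, indented options."""
    lines = header + [f"iface {iface} inet {method}"]
    lines += [f"    {key} {value}" for key, value in options]
    return "\n".join(lines) + "\n"

def simple_ovs_bridge(bridge: str, nic: str) -> str:
    """/etc/network/interfaces text for an OVS bridge containing one physical port."""
    bridge_part = stanza([f"allow-ovs {bridge}"], bridge, "manual", [
        ("ovs_type", "OVSBridge"),
        ("ovs_ports", nic),                # every member must be listed here
    ])
    port_part = stanza([f"allow-{bridge} {nic}"], nic, "manual", [
        ("ovs_type", "OVSPort"),
        ("ovs_bridge", bridge),
    ])
    return bridge_part + "\n" + port_part

print(simple_ovs_bridge("vmbr0", "eth0"))
```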

Remember, if you want to split out vlans with ips for use on the local host, you should use OVSIntPorts, see sections to follow.

However, any interfaces (Physical, OVSBonds, or OVSIntPorts) associated with a bridge should have their definitions prefixed with allow-$brname $iface, e.g. allow-vmbr0 bond0

NOTE: All interfaces must be listed under ovs_ports that are part of the bridge even if you have a port definition (e.g. OVSIntPort) that cross-references the bridge.
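Because a missing `ovs_ports` entry or `allow-<bridge>` line does not produce an obvious error, a small checker can help. This is a hypothetical helper, not part of Proxmox or Open vSwitch: it does a simplified parse of `/etc/network/interfaces` (stanza options only, no `source` includes) and reports every interface whose `ovs_bridge` is not cross-referenced by that bridge.

```python
from collections import defaultdict

def check_ovs_ports(text: str) -> list[str]:
    """Report OVS members missing from their bridge's ovs_ports or allow- line."""
    options: dict[str, dict[str, list[str]]] = {}
    allowed = defaultdict(set)     # iface -> bridges named in allow-<bridge> lines
    current = None
    for raw in text.splitlines():
        words = raw.split("#", 1)[0].split()   # drop comments, tokenize
        if not words:
            continue
        if words[0].startswith("allow-"):
            for name in words[1:]:
                allowed[name].add(words[0][len("allow-"):])
            current = None
        elif words[0] == "iface":
            current = options.setdefault(words[1], {})
        elif words[0] in ("auto", "source", "mapping"):
            current = None
        elif current is not None:
            current[words[0]] = words[1:]      # e.g. "ovs_ports" -> ["eth0", "vlan50"]
    problems = []
    for name, opts in options.items():
        bridge = (opts.get("ovs_bridge") or [None])[0]
        if bridge is None:
            continue                           # not a member of any OVS bridge
        if name not in options.get(bridge, {}).get("ovs_ports", []):
            problems.append(f"{name}: not listed in ovs_ports of {bridge}")
        if bridge not in allowed[name]:
            problems.append(f"{name}: missing 'allow-{bridge} {name}'")
    return problems
```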

Bonds

Bonds are used to join multiple network interfaces together so they act as a single unit. Bonds must refer to raw Ethernet devices (e.g. eth0, eth1).

When configuring a bond, it is recommended to use LACP (aka 802.3ad) for link aggregation. This requires switch support on the other end. A simple bond using eth0 and eth1 that will be part of the vmbr0 bridge might look like this.
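A sketch of such a bond stanza, rendered from Python so the bond, bridge, and member names are parameters. `bond_mode=balance-tcp lacp=active` are standard OVS bond settings for LACP; `other_config:lacp-time=fast` (LACPDUs every second rather than every 30 seconds) is an optional choice assumed here. `bond0` must also appear in `vmbr0`'s `ovs_ports`.

```python
def ovs_lacp_bond(bond: str, bridge: str, members: list[str]) -> str:
    """Stanza for an LACP bond (OVSBond) that is a port of `bridge`."""
    if len(members) < 2:
        raise ValueError("a bond needs at least two member interfaces")
    return "\n".join([
        f"allow-{bridge} {bond}",
        f"iface {bond} inet manual",
        f"    ovs_bridge {bridge}",
        "    ovs_type OVSBond",
        f"    ovs_bonds {' '.join(members)}",
        # balance-tcp hashes L2-L4 headers and requires LACP on the switch side
        "    ovs_options bond_mode=balance-tcp lacp=active other_config:lacp-time=fast",
    ]) + "\n"

print(ovs_lacp_bond("bond0", "vmbr0", ["eth0", "eth1"]))
```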

NOTE: The interfaces that are part of a bond do not need to have their own configuration section.

VLANs Host Interfaces

In order for the host (e.g. proxmox host, not VMs themselves!) to utilize a vlan within the bridge, you must create OVSIntPorts. These split out a virtual interface in the specified vlan that you can assign an ip address to (or use DHCP). You need to set ovs_options tag=$VLAN to let OVS know what vlan the interface should be a part of. In the switch world, this is commonly referred to as an RVI (Routed Virtual Interface), or IRB (Integrated Routing and Bridging) interface.

IMPORTANT: These OVSIntPorts you create MUST also show up in the actual bridge definition under ovs_ports. If they do not, they will NOT be brought up even though you specified an ovs_bridge. You also need to prefix the definition with allow-$bridge $iface

Setting up this vlan port would look like this in /etc/network/interfaces:
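A sketch of such a VLAN port, assuming an interface `vlan50` on bridge `vmbr0` in VLAN 50 with a documentation-range address (all placeholders). As stated above, `vlan50` must also be added to the bridge's `ovs_ports`.

```python
import ipaddress

def ovs_vlan_intport(name: str, bridge: str, vlan: int, address: str) -> str:
    """Stanza for a host VLAN interface (OVSIntPort) with a static address."""
    if not 1 <= vlan <= 4094:
        raise ValueError("VLAN ID must be 1-4094")
    iface = ipaddress.ip_interface(address)        # e.g. "192.0.2.44/24"
    return "\n".join([
        f"allow-{bridge} {name}",                  # needed for the port to come up
        f"iface {name} inet static",
        "    ovs_type OVSIntPort",
        f"    ovs_bridge {bridge}",
        f"    ovs_options tag={vlan}",             # tells OVS which VLAN the port is in
        f"    address {iface.ip}",
        f"    netmask {iface.network.netmask}",
    ]) + "\n"

print(ovs_vlan_intport("vlan50", "vmbr0", 50, "192.0.2.44/24"))
```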

Rapid Spanning Tree (RSTP)

Open vSwitch supports the Rapid Spanning Tree Protocol, but it is disabled by default. Rapid Spanning Tree is a network protocol used to prevent loops in a bridged Ethernet local area network.

WARNING: The stock PVE 4.4 kernel panics; you must use a 4.5 or higher kernel for stability. Also, the Intel i40e driver is known not to work; older-generation Intel NICs that use ixgbe are fine, as are Mellanox adapters that use the mlx5 driver.

To configure a bridge for RSTP support, you must use an «up» script, because the «ovs_options» and «ovs_extra» options do not emit the proper commands. An example would be to add this to your «vmbr0» interface configuration:

It may be wise to also set a «post-up» script that sleeps for 10 or so seconds waiting on RSTP convergence before boot continues.
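A sketch of a bridge stanza that enables RSTP through an `up` command and waits for convergence in `post-up`, assuming bridge `vmbr0` with ports `eth0` and `eth1` (placeholders). `rstp_enable` and the `other_config:rstp-*` keys are OVS Bridge settings; ifupdown sets `$IFACE` to the bridge name when the command runs. The range checks use the limits quoted in this section; check the valid priority values for your OVS version.

```python
def rstp_bridge(bridge: str, ports: list[str], priority: int = 0x8000,
                forward_delay: int = 15, max_age: int = 20,
                settle_seconds: int = 10) -> str:
    """OVS bridge stanza that enables RSTP via an 'up' command."""
    if not 4 <= forward_delay <= 30:
        raise ValueError("rstp-forward-delay must be 4-30")
    if not 6 <= max_age <= 40:
        raise ValueError("rstp-max-age must be 6-40")
    rstp = " ".join([
        "ovs-vsctl set Bridge ${IFACE} rstp_enable=true",   # literal $IFACE for ifupdown
        f"other_config:rstp-priority={priority}",
        f"other_config:rstp-forward-delay={forward_delay}",
        f"other_config:rstp-max-age={max_age}",
    ])
    return "\n".join([
        f"allow-ovs {bridge}",
        f"iface {bridge} inet manual",
        "    ovs_type OVSBridge",
        f"    ovs_ports {' '.join(ports)}",
        f"    up {rstp}",
        f"    post-up sleep {settle_seconds}",   # give RSTP time to converge
    ]) + "\n"

print(rstp_bridge("vmbr0", ["eth0", "eth1"]))
```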

Other bridge options that may be set are:

- other_config:rstp-priority= Configures the bridge priority used to elect the root bridge; the lower the value, the more likely the bridge is to become the root bridge. It is recommended to set this to the maximum value of 0xFFFF to prevent Open vSwitch from becoming the root bridge. The default value is 0x8000

- other_config:rstp-forward-delay= The amount of time the bridge will sit in learning mode before entering a forwarding state. Range is 4-30, Default 15

- other_config:rstp-max-age= Range is 6-40, Default 20

You should also consider adding a cost value to all interfaces that are part of a bridge. You can do so in the ethX interface configuration:

Interface options that may be set via ovs_options are:

- other_config:rstp-path-cost= Default 2000 for 10GbE, 20000 for 1GbE

- other_config:rstp-port-admin-edge= Set to False if this port is known to be connected to a switch running RSTP, to prevent it from entering the forwarding state if no BPDUs are detected

- other_config:rstp-port-auto-edge= Set to False if this port is known to be connected to a switch running RSTP, to prevent it from entering the forwarding state if no BPDUs are detected

- other_config:rstp-port-mcheck= Set to True if the other end is known to be using RSTP and not STP; BPDUs will then be broadcast immediately on link detection
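A sketch of an ethX stanza carrying these port options in `ovs_options`, assuming bridge `vmbr0` (a placeholder). The cost table holds the default values quoted above; the edge and mcheck settings are only added when the neighbour is known to run RSTP.

```python
# Default path costs quoted above: 2000 for 10GbE, 20000 for 1GbE
DEFAULT_COST = {10: 2000, 1: 20000}

def rstp_port(nic: str, bridge: str, speed_gbps: int,
              peer_runs_rstp: bool = True) -> str:
    """OVSPort stanza that sets RSTP path cost and edge/mcheck behaviour."""
    opts = [f"other_config:rstp-path-cost={DEFAULT_COST[speed_gbps]}"]  # KeyError: unknown speed
    if peer_runs_rstp:
        # Known RSTP neighbour: never treat the port as an edge port, speak RSTP at once
        opts += [
            "other_config:rstp-port-admin-edge=false",
            "other_config:rstp-port-auto-edge=false",
            "other_config:rstp-port-mcheck=true",
        ]
    return "\n".join([
        f"allow-{bridge} {nic}",
        f"iface {nic} inet manual",
        "    ovs_type OVSPort",
        f"    ovs_bridge {bridge}",
        f"    ovs_options {' '.join(opts)}",
    ]) + "\n"

print(rstp_port("eth2", "vmbr0", 10))
```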

You can look at the RSTP status for an interface via:

NOTE: Open vSwitch does not currently allow a bond to participate in RSTP.

Note on MTU

If you plan on using an MTU larger than the default of 1500, you need to mark any physical interfaces, bonds, and bridges with the larger MTU by adding an mtu setting to the definition, such as mtu 9000; otherwise it will be disallowed.

Odd Note: Some newer Intel Gigabit NICs have a hardware limitation which means the maximum MTU they can support is 8996 (instead of 9000). If your interfaces aren’t coming up and you are trying to use 9000, this is likely the reason and can be difficult to debug. Try setting all your MTUs to 8996 and see if it resolves your issues.
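A sketch of picking one jumbo MTU that every NIC in the path supports and applying it to NICs, bonds, and bridges alike. The per-NIC maximums are inputs you would read yourself, for example from the `maxmtu` field that `ip -d link show <nic>` prints on recent kernels; the values below are made up. The `mtu` keyword follows this section, though some openvswitch ifupdown versions may expect `ovs_mtu` in OVS stanzas.

```python
def common_jumbo_mtu(max_mtus: dict[str, int], wanted: int = 9000) -> int:
    """Largest MTU, up to `wanted`, that every listed physical NIC supports."""
    if not max_mtus:
        raise ValueError("no interfaces given")
    mtu = min(wanted, *max_mtus.values())
    if mtu < 1500:
        raise ValueError("a NIC cannot carry even the default MTU of 1500")
    return mtu

def mtu_lines(ifaces: list[str], mtu: int) -> dict[str, str]:
    """The line to add to each stanza; NICs, bonds and bridges get the same value."""
    return {name: f"    mtu {mtu}" for name in ifaces}

# Hypothetical limits: eth0 is one of the Intel NICs capped at 8996
mtu = common_jumbo_mtu({"eth0": 8996, "eth1": 9710})
for name, line in mtu_lines(["eth0", "eth1", "bond0", "vmbr0"], mtu).items():
    print(f"{name}: {line.strip()}")
```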

Examples

Example 1: Bridge + Internal Ports + Untagged traffic

The example below shows how to create a bridge with one physical interface and two VLAN interfaces split out, with untagged traffic coming in on eth0 tagged into VLAN 1.

This is a complete and working /etc/network/interfaces listing:
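A sketch that generates a listing matching that description, under these assumptions: bridge `vmbr0`, physical port `eth0`, the two VLAN interfaces `vlan1` (the retagged untagged traffic) and `vlan55`, and documentation-range addresses. Gateway and MTU lines are left out. `vlan_mode=native-untagged` with `tag=1` makes OVS treat untagged frames on `eth0` as VLAN 1 while passing tagged frames through.

```python
import ipaddress

def block(header: str, iface: str, method: str, opts: list[str]) -> str:
    """One ifupdown stanza with indented options."""
    body = [header, f"iface {iface} inet {method}"] + [f"    {o}" for o in opts]
    return "\n".join(body) + "\n"

def bridge_with_untagged_retag(nic: str, bridge: str, vlan_ips: dict[int, str]) -> str:
    """Full interfaces file: one NIC, untagged traffic retagged into VLAN 1,
    and one OVSIntPort per entry of vlan_ips (VLAN ID -> address/prefix)."""
    intports = [f"vlan{vid}" for vid in vlan_ips]
    parts = [
        "auto lo\niface lo inet loopback\n",
        block(f"allow-ovs {bridge}", bridge, "manual", [
            "ovs_type OVSBridge",
            f"ovs_ports {' '.join([nic] + intports)}",
        ]),
        block(f"allow-{bridge} {nic}", nic, "manual", [
            "ovs_type OVSPort", f"ovs_bridge {bridge}",
            "ovs_options tag=1 vlan_mode=native-untagged",
        ]),
    ]
    for vid, cidr in vlan_ips.items():
        ip = ipaddress.ip_interface(cidr)
        parts.append(block(f"allow-{bridge} vlan{vid}", f"vlan{vid}", "static", [
            "ovs_type OVSIntPort", f"ovs_bridge {bridge}", f"ovs_options tag={vid}",
            f"address {ip.ip}", f"netmask {ip.network.netmask}",
        ]))
    return "\n".join(parts)

print(bridge_with_untagged_retag("eth0", "vmbr0",
                                 {1: "192.0.2.44/24", 55: "198.51.100.44/24"}))
```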

Example 2: Bond + Bridge + Internal Ports

The below example shows you a combination of all the above features. 2 NICs are bonded together and added to an OVS Bridge. 2 vlan interfaces are split out in order to provide the host access to vlans with different MTUs.

This is a complete and working /etc/network/interfaces listing:

Example 3: Bond + Bridge + Internal Ports + Untagged traffic + No LACP

The example below combines all of the above features. Two NICs are bonded together and added to an OVS bridge. This example imitates the default Proxmox network configuration, but uses a bond instead of a single NIC, and the bond works without a managed switch that supports LACP.

This is a complete and working /etc/network/interfaces listing:
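A sketch that generates a listing in the spirit of that description, under these assumptions: member NICs `eth0`/`eth1`, bond `bond0`, bridge `vmbr0`, and the host address on an OVSIntPort `vlan1` that receives the untagged traffic, with documentation-range addresses. `bond_mode=balance-slb` is the OVS bond mode that balances by source MAC and VLAN without needing LACP on the switch; `active-backup` would be the more conservative alternative.

```python
import ipaddress

def block(header: str, iface: str, method: str, opts: list[str]) -> str:
    """One ifupdown stanza with indented options."""
    body = [header, f"iface {iface} inet {method}"] + [f"    {o}" for o in opts]
    return "\n".join(body) + "\n"

def bond_bridge_no_lacp(members: list[str], address: str, gateway: str,
                        bridge: str = "vmbr0", bond: str = "bond0") -> str:
    """interfaces file: balance-slb bond (no LACP) + bridge + host port for untagged traffic."""
    ip = ipaddress.ip_interface(address)
    if ipaddress.ip_address(gateway) not in ip.network:
        raise ValueError("gateway must be on the host's subnet")
    return "\n".join([
        "auto lo\niface lo inet loopback\n",
        block(f"allow-ovs {bridge}", bridge, "manual",
              ["ovs_type OVSBridge", f"ovs_ports {bond} vlan1"]),
        # No switch support needed; untagged frames on the bond are mapped to VLAN 1
        block(f"allow-{bridge} {bond}", bond, "manual", [
            f"ovs_bridge {bridge}", "ovs_type OVSBond",
            f"ovs_bonds {' '.join(members)}",
            "ovs_options bond_mode=balance-slb tag=1 vlan_mode=native-untagged",
        ]),
        # The host address lives on an internal port, like vmbr0's IP in the stock setup
        block(f"allow-{bridge} vlan1", "vlan1", "static", [
            "ovs_type OVSIntPort", f"ovs_bridge {bridge}", "ovs_options tag=1",
            f"address {ip.ip}", f"netmask {ip.network.netmask}", f"gateway {gateway}",
        ]),
    ])

print(bond_bridge_no_lacp(["eth0", "eth1"], "192.0.2.107/24", "192.0.2.1"))
```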

Example 4: Rapid Spanning Tree (RSTP) — 1Gbps uplink, 10Gbps interconnect

WARNING: The stock PVE 4.4 kernel panics; you must use a 4.5 or higher kernel for stability. Also, the Intel i40e driver is known not to work; older-generation Intel NICs that use ixgbe are fine, as are Mellanox adapters that use the mlx5 driver.

This example shows how you can use Rapid Spanning Tree (RSTP) to interconnect your Proxmox nodes inexpensively and uplink to your core switches for external traffic, all while maintaining a fully fault-tolerant interconnection scheme. This means VM-to-VM access (or possibly Ceph-to-Ceph traffic) can operate at the speed of the network interfaces directly attached in a star or ring topology. In this example, we use 10Gbps to interconnect our 3 nodes (direct-attach) and uplink to our core switches at 1Gbps. Spanning Tree configured with the right cost metrics will prevent loops and activate the optimal paths for traffic. We use this topology because 10Gbps switch ports are very expensive, so this is strictly a cost-saving manoeuvre. You could equally use 40Gbps ports instead of 10Gbps ports; the key point is that the interfaces used to interconnect the nodes are faster than the interfaces used to connect to the core switches.

This assumes you are using Open vSwitch 2.5+; older versions did not support Rapid Spanning Tree, only Spanning Tree, which had some issues.

To better explain what we are accomplishing, look at this ascii-art representation below:

This is a complete and working /etc/network/interfaces listing:

On our Juniper core switches, we put in place this configuration:

Multicast

Right now Open vSwitch doesn't do anything with regard to multicast. Where you might typically tell Linux to enable the multicast querier on the bridge, you should instead set up your querier on your router or switch. Please refer to the Multicast_notes wiki for more information.

Using Open vSwitch in Proxmox

Using Open vSwitch isn’t that much different than using normal linux bridges. The main difference is instead of having a bridge per vlan, you have a single bridge containing all your vlans. Then when configuring the network interface for the VM, you would select the bridge (probably the only bridge you have), and you would also enter the VLAN Tag associated with the VLAN you want your VM to be a part of. Now there is zero effort when adding or removing VLANs!
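The same VM setting can be sketched from the command line with Proxmox's `qm` tool, assuming VM ID 100, bridge `vmbr0`, and VLAN 50 (all placeholders). Because `--net0` redefines the whole NIC, a new MAC address may be generated unless the existing one is passed with `macaddr=`.

```python
def vm_nic_cmd(vmid: int, bridge: str, vlan: int,
               index: int = 0, model: str = "virtio") -> list[str]:
    """Proxmox `qm set` call that attaches a VM NIC to an OVS bridge in one VLAN."""
    if not 1 <= vlan <= 4094:
        raise ValueError("VLAN tag must be 1-4094")
    # The bridge carries all VLANs; the tag alone selects the VM's VLAN
    return ["qm", "set", str(vmid), f"--net{index}", f"{model},bridge={bridge},tag={vlan}"]

print(" ".join(vm_nic_cmd(100, "vmbr0", 50)))
```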

Source