- How to download a website page on Linux terminal?

- Check if wget already available

- Check if cURL already available

- 5 Linux Command Line Based Tools for Downloading Files and Browsing Websites

- 1. rTorrent

- Installation of rTorrent in Linux

- 2. Wget

- Installation of Wget in Linux

- Basic Usage of Wget Command

- 3. cURL

- Installation of cURL in Linux

- Basic Usage of cURL Command

- 4. w3m

- Installation of w3m in Linux

- Basic Usage of w3m Command

- 5. Elinks

- Installation of Elinks in Linux

- Basic Usage of elinks Command

- How can I download an entire website?

- 7 Free Tools To Download Entire Websites For Offline Use Or Backup

- ↓ 01 – HTTrack | Windows | macOS | Linux

- ↓ 02 – Cyotek WebCopy | Windows

- ↓ 03 – UnMHT | Firefox Addon

- ↓ 04 – grab-site | macOS | Linux

- ↓ 05 – WebScrapBook | Firefox Addon

- ↓ 06 – Archivarix | 200 Files Free | Online

- ↓ 07 – Website Downloader | Online

How to download a website page on Linux terminal?

The Linux command line provides great features for web crawling in addition to its inherent capabilities to handle web servers and web browsing. In this article we will look at a few tools that are either already available or can be installed and used in the Linux environment for offline web browsing. This is achieved by downloading a single webpage or many webpages.

Wget is probably the most famous of all the downloading options. It allows downloading from HTTP, HTTPS, and FTP servers. It can download an entire website and also supports downloading through a proxy.

Below are the steps to get it installed and start using it.

Check if wget already available

Running the above code gives us the following result:

If the exit code ($?) is 1, wget is not installed, so we run the command below to install it.
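The check and install commands did not survive in this copy of the article. As a minimal sketch of the same idea, assuming a POSIX shell and a Debian/Ubuntu-style system for the install step (`have_cmd` is a hypothetical helper name, not something from the original article):

```shell
# have_cmd is a hypothetical helper: it succeeds (exit status 0) when the
# named command is found on PATH and fails (non-zero status) otherwise.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd wget; then
    echo "wget is installed"
else
    # Assumption: Debian/Ubuntu. On Fedora or RHEL-style systems the
    # equivalent is "sudo dnf install wget" or "sudo yum install wget".
    echo "wget is missing; try: sudo apt-get install wget"
fi
```

The script only prints the install command instead of running it, so it is safe to try on any machine.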

Now we run the wget command for a specific webpage or a website to be downloaded.

Running the above code gives us the following result. We show the result only for the web page and not the whole website. The downloaded file is saved in the current directory.
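The original download command is not preserved here. A single-page download with wget could be sketched as follows; `fetch_page` is a hypothetical wrapper and `https://example.com/` is a placeholder URL:

```shell
# fetch_page is a hypothetical wrapper around wget.
#   $1 = URL to download
#   $2 = target directory (optional, defaults to the current directory)
fetch_page() {
    url=$1
    dir=${2:-.}
    # -P sets the directory prefix under which wget saves the file.
    # Without -O, wget names the file after the last part of the URL
    # (index.html for a bare directory URL).
    wget -P "$dir" "$url"
}

# Usage (placeholder URL):
#   fetch_page "https://example.com/"
#   fetch_page "https://example.com/" pages
```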

cURL is a client-side application. It supports downloading files over HTTP, HTTPS, FTP, FTPS, Telnet, IMAP, etc. It supports more types of downloads than wget.

Below are the steps to get it installed and start using it.

Check if cURL already available

Running the above code gives us the following result:

The value of 1 indicates cURL is not available in the system. So we will install it using the below command.

Running the above code gives us the following result indicating the installation of cURL.

Next we use cURL to download a webpage.

Running the above code gives us the following result. You can locate the downloaded file in the current working directory.
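The cURL command itself is missing from this copy. One way to save a page with cURL is sketched below; the helper name `save_page_with_curl`, the URL, and the output file name are all placeholders chosen for illustration:

```shell
# save_page_with_curl is a hypothetical helper.
#   $1 = URL to download
#   $2 = output file name
save_page_with_curl() {
    url=$1
    out=$2
    # -f  fail on HTTP errors (4xx/5xx) instead of saving the error page
    # -L  follow redirects
    # -o  write the response body to the named file
    curl -fL -o "$out" "$url"
}

# Usage (placeholder URL and file name):
#   save_page_with_curl "https://example.com/" page.html
```

Unlike wget, cURL writes to standard output by default, which is why the sketch names the output file explicitly.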


5 Linux Command Line Based Tools for Downloading Files and Browsing Websites

The Linux command line, the most adventurous and fascinating part of GNU/Linux, is a very powerful tool. It is productive in its own right, and the wide range of built-in and third-party command-line applications adds to that. The Linux shell supports many kinds of web applications, including torrent clients, dedicated downloaders, and web browsers.

Here we are presenting 5 great command line Internet tools, which are very useful and prove to be very handy in downloading files in Linux.

1. rTorrent

rTorrent is a text-based BitTorrent client written in C++ and aimed at high performance. It is available for most standard Linux distributions, as well as FreeBSD and Mac OS X.

Installation of rTorrent in Linux

Check if rtorrent is installed correctly by running the following command in the terminal.
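The install and check commands are missing from this copy. A hedged sketch follows, assuming the package is named `rtorrent` in your distribution's repository; `install_hint` is a hypothetical helper that only prints a suggested command and does not run it:

```shell
# install_hint is a hypothetical helper: it prints an install command for
# the first package manager it finds on PATH (apt-get, dnf, then yum).
install_hint() {
    pkg=$1
    if command -v apt-get >/dev/null 2>&1; then
        echo "sudo apt-get install $pkg"
    elif command -v dnf >/dev/null 2>&1; then
        echo "sudo dnf install $pkg"
    elif command -v yum >/dev/null 2>&1; then
        echo "sudo yum install $pkg"
    else
        echo "no supported package manager found for $pkg" >&2
        return 1
    fi
}

# Assumption: the package name is "rtorrent".
install_hint rtorrent || true

# After installing, confirm that the binary is on PATH.
command -v rtorrent || echo "rtorrent not found on PATH"
```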

Functioning of rTorrent

Some of the useful Key-bindings and their use.

- CTRL+q – Quit the rTorrent application.

- CTRL+s – Start a download.

- CTRL+d – Stop an active download, or remove an already stopped download.

- CTRL+k – Stop and close an active download.

- CTRL+r – Hash-check a torrent before upload/download begins.

- CTRL+q (pressed twice) – Shut down rTorrent without sending a stop signal.

- Left Arrow key – Go to the previous screen.

- Right Arrow key – Go to the next screen.

2. Wget

Wget is part of the GNU Project; its name is derived from "World Wide Web" and "get". Wget is well suited to recursive downloads and to offline viewing of downloaded HTML, and it is available for most platforms, including Windows, Mac, and Linux.

Wget makes it possible to download files over HTTP, HTTPS, and FTP. It can also mirror a whole website, and it supports downloading through a proxy and pausing and resuming downloads.

Installation of Wget in Linux

As a GNU project, Wget comes bundled with most standard Linux distributions, so there is usually no need to download and install it separately. If it is not installed by default, you can install it using apt, yum, or dnf.

Basic Usage of Wget Command

Download a single file using wget.

Download a whole website, recursively.

Download specific types of files (say pdf and png) from a website.
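The commands for these three cases are not preserved in this copy. They could be sketched as below; the function names are hypothetical wrappers and the URLs in the usage comments are placeholders:

```shell
# Hypothetical wrappers for the three cases above.

# Download a single file into the current directory.
wget_file() {
    wget "$1"
}

# Follow links recursively (wget's default recursion depth is 5).
wget_recursive() {
    wget -r "$1"
}

# Recursive download that keeps only files ending in .pdf or .png.
# -A takes a comma-separated list of accepted suffixes or patterns.
wget_types() {
    wget -r -A pdf,png "$1"
}

# Usage (placeholder URLs):
#   wget_file "https://example.com/file.iso"
#   wget_recursive "https://example.com/"
#   wget_types "https://example.com/"
```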

Wget is a wonderful tool that enables custom and filtered downloads even on a machine with limited resources.

For more such wget download examples, read our article that shows 10 Wget Download Command Examples.

3. cURL

cURL is a command-line tool for transferring data over a number of protocols. cURL is a client-side application that supports protocols like FTP, HTTP, FTPS, TFTP, TELNET, IMAP, POP3, etc.

cURL is a simple downloader that differs from wget in supporting additional protocols such as LDAP and POP3. Proxy downloading and pausing and resuming downloads are also well supported in cURL.

Installation of cURL in Linux

By default, cURL is available in most distributions, either preinstalled or in the repository. If it is not installed, use apt or yum to get the required package from the repository.

Basic Usage of cURL Command
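The usage examples for this section are missing from this copy. A minimal sketch of a basic download and a resumed download, with hypothetical function names and a placeholder URL:

```shell
# Hypothetical wrappers around two common curl invocations.

# Download a file and keep the remote file name.
# -f fails on HTTP errors, -L follows redirects, -O saves the file under
# the name taken from the URL (so the URL must end in a file name).
curl_get() {
    curl -fLO "$1"
}

# Resume an interrupted download of the same file.
# "-C -" asks curl to work out the resume offset from the partial file.
curl_resume() {
    curl -fL -C - -O "$1"
}

# Usage (placeholder URL):
#   curl_get "https://example.com/file.iso"
#   curl_resume "https://example.com/file.iso"
```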

For more such curl command examples, read our article that shows 15 Tips On How to Use ‘Curl’ Command in Linux.

4. w3m

w3m is a text-based web browser released under an MIT-style license. It supports tables, frames, color, SSL connections, and inline images, and is known for fast browsing.

Installation of w3m in Linux

Again, w3m is available by default in most Linux distributions. If it is not available, you can install the required package with apt or yum.

Basic Usage of w3m Command
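The usage example is missing from this copy. As a sketch, w3m can either open a page interactively or dump the rendered text; `page_as_text` is a hypothetical helper and the URL is a placeholder:

```shell
# page_as_text is a hypothetical helper: it prints the rendered text of a
# page to standard output instead of opening the interactive browser.
page_as_text() {
    # -dump formats the page and writes the result to standard output.
    w3m -dump "$1"
}

# Usage (placeholder URL):
#   w3m "https://example.com/"                      # browse interactively
#   page_as_text "https://example.com/" > page.txt  # save a text copy
```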

5. Elinks

Elinks is a free text-based web browser for Unix and Unix-based systems. Elinks supports HTTP and HTTP cookies, and also supports browser scripting in Perl and Ruby.

Tab-based browsing is well supported. It also supports the mouse and display colors, and works with a number of protocols such as HTTP, FTP, and SMB over both IPv4 and IPv6.

Installation of Elinks in Linux

By default, elinks is also available in most Linux distributions. If not, install it via apt or yum.

Basic Usage of elinks Command
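The usage example is missing from this copy. A hedged sketch of interactive and non-interactive use, where `elinks_text` is a hypothetical helper and the URL is a placeholder:

```shell
# elinks_text is a hypothetical helper: it prints the formatted page text
# to standard output rather than starting the interactive browser.
elinks_text() {
    # -dump renders the document as plain text and exits.
    elinks -dump "$1"
}

# Usage (placeholder URL):
#   elinks "https://example.com/"                    # browse interactively
#   elinks_text "https://example.com/" > page.txt    # save a text copy
```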


How can I download an entire website?

I want to download a whole website (with sub-sites). Is there any tool for that?

Try example 10 from here:

--mirror : turn on options suitable for mirroring.

-p : download all files that are necessary to properly display a given HTML page.

--convert-links : after the download, convert the links in document for local viewing.
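The linked example is not included in this copy. Combining the three options described above might look like the sketch below; `mirror_site`, the default directory `./mirror`, and the URL are illustrative assumptions:

```shell
# mirror_site is a hypothetical wrapper.
#   $1 = URL of the site to mirror
#   $2 = local directory for the copy (optional, defaults to ./mirror)
mirror_site() {
    url=$1
    dest=${2:-./mirror}
    # --mirror          recursion with timestamping and unlimited depth
    # -p                also fetch images, CSS, and other page requisites
    # --convert-links   rewrite links so the local copy can be browsed offline
    # -P                directory prefix under which everything is saved
    wget --mirror -p --convert-links -P "$dest" "$url"
}

# Usage (placeholder URL):
#   mirror_site "https://example.com/"
#   mirror_site "https://example.com/" ./backup
```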

httrack is the tool you are looking for.

HTTrack allows you to download a World Wide Web site from the Internet to a local directory, building recursively all directories, getting HTML, images, and other files from the server to your computer. HTTrack arranges the original site’s relative link-structure.
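For the command-line version of HTTrack, a basic invocation could be sketched as follows. The wrapper name `httrack_copy`, the default directory, and the URL are assumptions for illustration:

```shell
# httrack_copy is a hypothetical wrapper.
#   $1 = URL of the site to copy
#   $2 = output directory (optional, defaults to ./mirror)
httrack_copy() {
    url=$1
    dest=${2:-./mirror}
    # -O sets the path where httrack writes the mirror, its logs, and cache.
    httrack "$url" -O "$dest"
}

# Usage (placeholder URL):
#   httrack_copy "https://example.com/"
```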

With wget you can download an entire website. Use the -r switch for a recursive download. For example,
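The command from this answer is missing in this copy. A recursive download with an explicit depth limit might be sketched like this; `wget_depth` is a hypothetical wrapper and the URL is a placeholder:

```shell
# wget_depth is a hypothetical wrapper.
#   $1 = starting URL
#   $2 = maximum recursion depth (optional, defaults to 5, wget's own default)
wget_depth() {
    url=$1
    depth=${2:-5}
    # -r enables recursive retrieval; -l limits how many levels of links
    # wget follows from the starting page.
    wget -r -l "$depth" "$url"
}

# Usage (placeholder URL):
#   wget_depth "https://example.com/"
#   wget_depth "https://example.com/" 2
```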

WebHTTrack Website Copier is a handy tool for downloading a whole website onto your hard disk for offline browsing. Launch the Ubuntu Software Center and type "webhttrack website copier" (without the quotes) into the search box. Select it and install it from the Software Center onto your system. Start WebHTTrack from either the launcher or the start menu, and from there you can begin using it for your site downloads.

I don’t know about subdomains (i.e., sub-sites), but wget can be used to grab a complete site. Take a look at this Super User question. It says that you can use -D domain1.com,domain2.com to download different domains in a single script. I think you can use that option to download subdomains, i.e. -D site1.somesite.com,site2.somesite.com
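As a sketch of that suggestion: the -D list only takes effect when wget is also allowed to leave the starting host with -H, so the two are combined below. `wget_domains` is a hypothetical wrapper, and the domain names are the placeholders from the answer:

```shell
# wget_domains is a hypothetical wrapper.
#   $1 = comma-separated list of domains that may be followed
#   $2 = starting URL
wget_domains() {
    domains=$1
    url=$2
    # -r  recursive retrieval
    # -H  allow the recursion to span to other hosts
    # -D  restrict host spanning to the listed domains
    wget -r -H -D "$domains" "$url"
}

# Usage (placeholder domains):
#   wget_domains "site1.somesite.com,site2.somesite.com" "http://site1.somesite.com/"
```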

I use Burp — the spider tool is much more intelligent than wget, and can be configured to avoid sections if necessary. The Burp Suite itself is a powerful set of tools to aid in testing, but the spider tool is very effective.


7 Free Tools To Download Entire Websites For Offline Use Or Backup

With today’s internet speeds and reliability, there is rarely much reason to download an entire website for offline use. But if you need a copy of a site as a backup, or you are traveling somewhere remote, these tools will let you download an entire website for offline reading.

Here’s a quick list of some of the best website-downloading programs to get you started. HTTrack is the best and has been the favorite of many for many years.

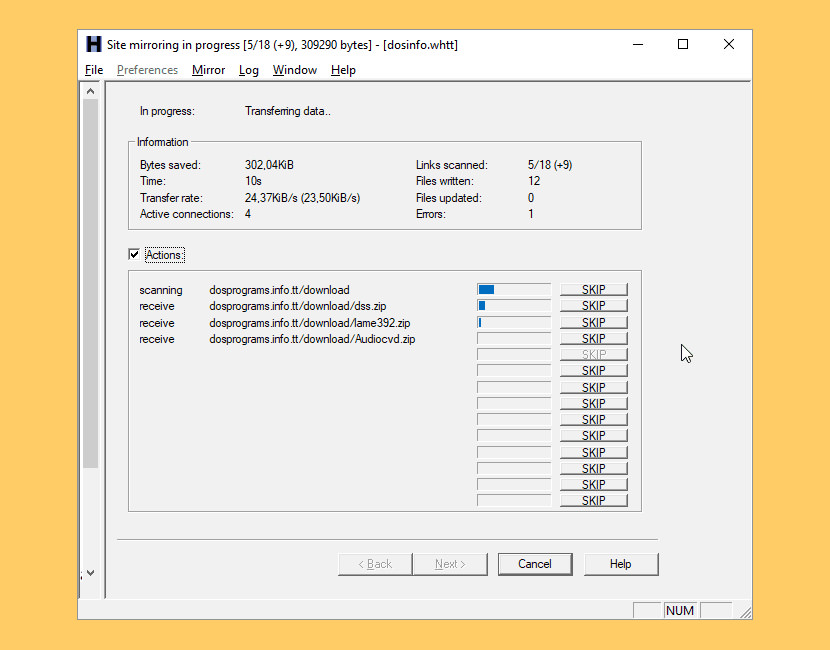

↓ 01 – HTTrack | Windows | macOS | Linux

HTTrack is a free (GPL, libre/free software) and easy-to-use offline browser utility. It allows you to download a World Wide Web site from the Internet to a local directory, building recursively all directories, getting HTML, images, and other files from the server to your computer. HTTrack arranges the original site’s relative link-structure. Simply open a page of the “mirrored” website in your browser, and you can browse the site from link to link, as if you were viewing it online. HTTrack can also update an existing mirrored site, and resume interrupted downloads. HTTrack is fully configurable, and has an integrated help system.

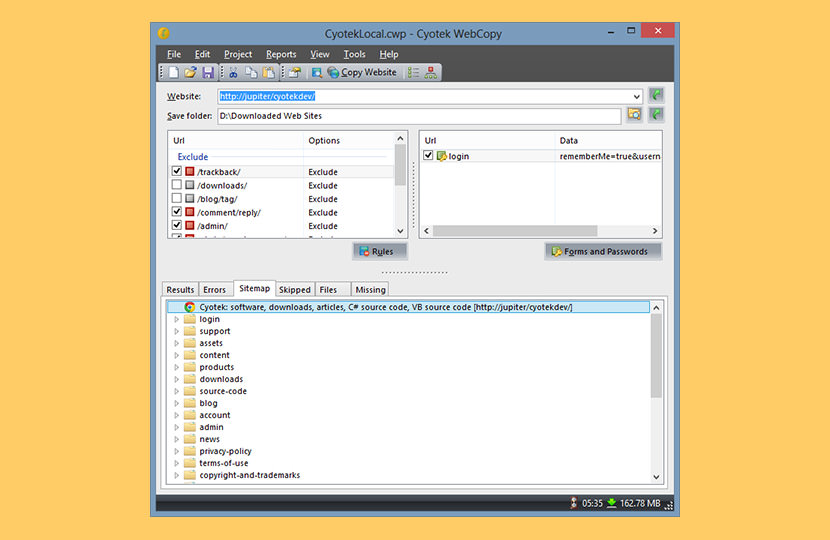

↓ 02 – Cyotek WebCopy | Windows

Cyotek WebCopy is a free tool for copying full or partial websites locally onto your hard disk for offline viewing. WebCopy will scan the specified website and download its content onto your hard disk. Links to resources such as style-sheets, images, and other pages in the website will automatically be remapped to match the local path. Using its extensive configuration you can define which parts of a website will be copied and how.

WebCopy will examine the HTML mark-up of a website and attempt to discover all linked resources such as other pages, images, videos, file downloads – anything and everything. It will download all of these resources, and continue to search for more. In this manner, WebCopy can “crawl” an entire website and download everything it sees in an effort to create a reasonable facsimile of the source website.

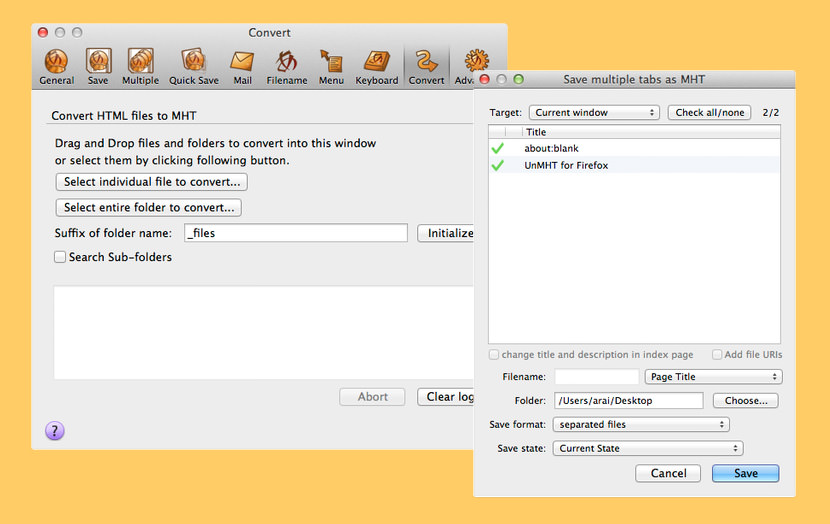

↓ 03 – UnMHT | Firefox Addon

UnMHT allows you to view MHT (MHTML) web archive format files, and save complete web pages, including text and graphics, into a single MHT file in Firefox/SeaMonkey. MHT (MHTML, RFC2557) is the webpage archive format to store HTML and images, CSS into a single file.

- Save webpage as MHT file.

- Insert URL of the webpage and date you saved into saved MHT file.

- Save multiple tabs as MHT files at once.

- Save multiple tabs into a single MHT file.

- Save webpage by single click into prespecified directory with Quick Save feature.

- Convert HTML files and directory which contains files used by the HTML into MHT file.

- View the MHT file saved by UnMHT, IE, PowerPoint, etc.

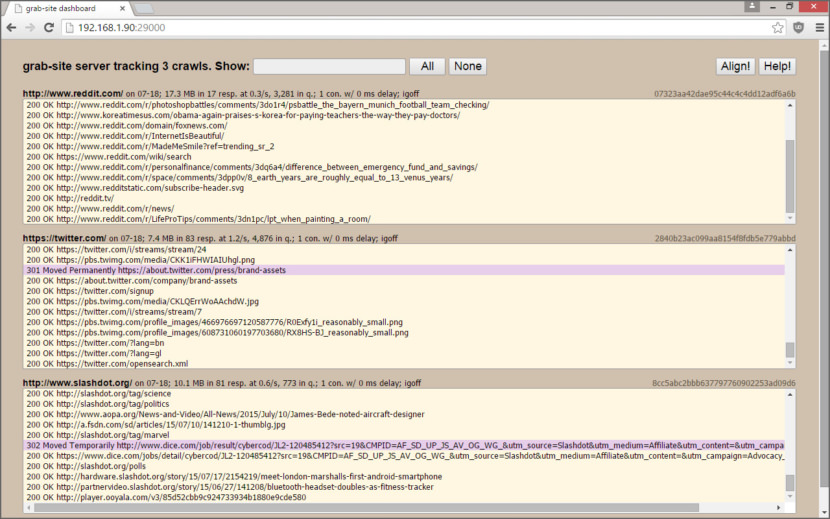

↓ 04 – grab-site | macOS | Linux

grab-site is an easy, preconfigured web crawler designed for backing up websites. Give grab-site a URL and it will recursively crawl the site and write WARC files. Internally, grab-site uses a fork of wpull for crawling. It includes a dashboard for monitoring multiple crawls, and supports changing URL ignore patterns during the crawl.

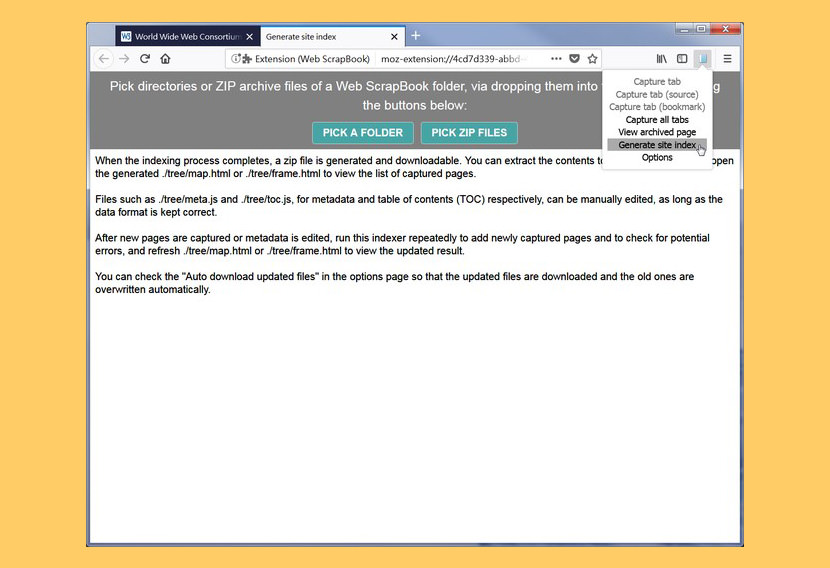

↓ 05 – WebScrapBook | Firefox Addon

WebScrapBook is a browser extension that captures the web page faithfully with various archive formats and customizable configurations. This project inherits from legacy Firefox addon ScrapBook X. A web page can be saved as a folder, a zip-packed archive file (HTZ or MAFF), or a single HTML file (optionally scripted as an enhancement). An archive file can be viewed by opening the index page after unzipping, using the built-in archive page viewer, or with other assistant tools.

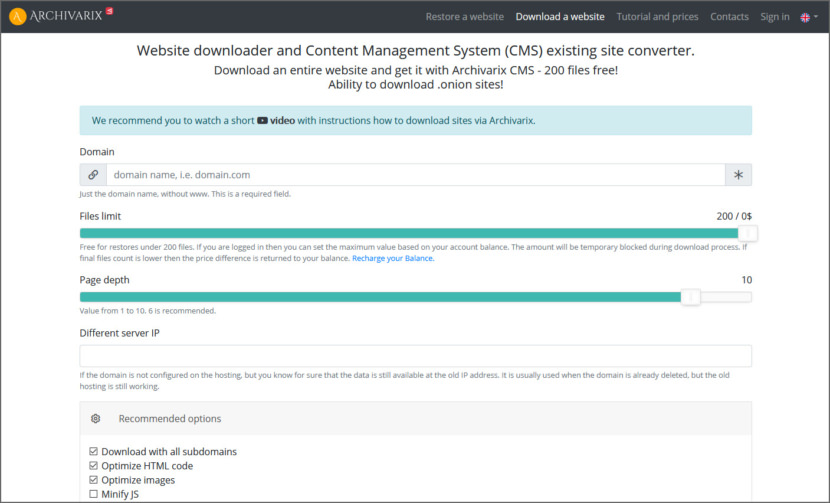

↓ 06 – Archivarix | 200 Files Free | Online

Archivarix is a website downloader and a converter of existing sites to a Content Management System (CMS). Its website downloader lets you download up to 200 files from a website for free, and it can also download .onion sites. If a site has more files and you need all of them, you can pay for the service; the cost depends on the number of files. You can download from live websites, the Wayback Machine, or Google Cache.

↓ 07 – Website Downloader | Online

Website Downloader, Website Copier or Website Ripper allows you to download websites from the Internet to your local hard drive on your own computer. Website Downloader arranges the downloaded site by the original website’s relative link-structure. The downloaded website can be browsed by opening one of the HTML pages in a browser.

After cloning a website to your hard drive you can open the website’s source code with a code editor or simply browse it offline using a browser of your choosing. Site Downloader can be used for multiple different purposes. It’s truly simple to use website download software without downloading anything.

- Backups – If you have a website, you should always have a recent backup of it in case the server breaks or you get hacked. Website Downloader is the fastest and easiest way to take a backup of your website; it allows you to download the whole website.

- Offline Website Downloader – Download a website for future reference so that you can access it even without an internet connection, say, when you are on a flight or an island vacation!
