Understand Linux Load Averages and Monitor Performance of Linux

In this article, we will explain one of the critical Linux system administration tasks: performance monitoring with regard to system/CPU load and load averages.

Before we move any further, let’s understand these two important phrases in all Unix-like systems:

- System load/CPU load – a measure of CPU over- or under-utilization in a Linux system: the number of processes that are being executed by the CPU or are in a waiting state.

- Load average – the average system load calculated over periods of 1, 5 and 15 minutes.

In Linux, the load average is an exponentially damped running average of the number of processes in the kernel’s execution queue that are marked as running or uninterruptible.
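To make the idea of a running average concrete, here is a minimal Python sketch of an exponentially damped moving average. It assumes the number of active tasks is sampled roughly every 5 seconds, as mainline Linux does; the function names and the floating-point arithmetic are illustrative only (the kernel uses fixed-point math).

```python
import math

SAMPLE_INTERVAL_S = 5  # assumed sampling period, in seconds


def update_load(load, active_tasks, window_s):
    """Fold one sample of active (R or D state) tasks into a damped average."""
    decay = math.exp(-SAMPLE_INTERVAL_S / window_s)
    return load * decay + active_tasks * (1.0 - decay)


def simulate(active_tasks, seconds, window_s=60):
    """Start from an idle system and hold a constant number of active tasks."""
    load = 0.0
    for _ in range(seconds // SAMPLE_INTERVAL_S):
        load = update_load(load, active_tasks, window_s)
    return load


if __name__ == "__main__":
    # Two busy tasks for one minute: the 1-minute figure is still well below 2.
    print(round(simulate(2, 60), 2))
    # After ten minutes it has almost converged on 2.
    print(round(simulate(2, 600), 2))
```

The damping explains why the 1-minute figure lags behind sudden changes: a fresh burst of work takes several minutes to be fully reflected.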

Note that:

- Most, if not all, systems powered by Linux or other Unix-like operating systems show the load average values somewhere for the user.

- A completely idle Linux system may have a load average of zero, excluding the idle process.

- Nearly all Unix-like systems count only processes in the running or waiting states. Linux is different: it also includes processes in uninterruptible sleep states, that is, those waiting for other system resources such as disk I/O.

How to Monitor Linux System Load Average

There are numerous ways of monitoring the system load average. One is the uptime command, which shows how long the system has been running and the number of logged-in users, together with the load averages.

The three load average numbers are read from left to right. For example, the values 1.98, 2.15 and 2.21 mean that:

- load average over the last 1 minute is 1.98

- load average over the last 5 minutes is 2.15

- load average over the last 15 minutes is 2.21
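If you want to pull these three figures out of uptime output in a script, a small Python sketch could look like the following. The sample line is made up for illustration (only the three load values come from the example above), `parse_uptime_load` is a hypothetical helper name, and a locale that uses `.` as the decimal separator is assumed.

```python
import re

# Invented sample line; only the three load values are taken from the article.
SAMPLE_UPTIME = " 10:14:32 up 3 days,  2:05,  2 users,  load average: 1.98, 2.15, 2.21"


def parse_uptime_load(line):
    """Return the (1, 5, 15)-minute load averages from an uptime-style line."""
    match = re.search(r"load averages?:\s*(.+)$", line)
    if match is None:
        raise ValueError("no load average found in: %r" % line)
    # Values are separated by commas and/or whitespace depending on the platform.
    values = [float(v) for v in re.split(r"[,\s]+", match.group(1).strip()) if v]
    if len(values) < 3:
        raise ValueError("expected three load averages in: %r" % line)
    return tuple(values[:3])


if __name__ == "__main__":
    one, five, fifteen = parse_uptime_load(SAMPLE_UPTIME)
    print(one, five, fifteen)
```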

High load averages suggest that a system is overloaded: many processes are waiting for CPU time or, on Linux, are stuck in uninterruptible sleep.

We will look at this in relation to the number of CPU cores in the next section. We can also use other well-known tools such as top and glances, which display the real-time state of a running Linux system, among many others:

Top Command

Glances Tool

The load averages shown by these tools are read from the /proc/loadavg file, which you can view using the cat command:
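The same file is easy to read from a script. The sketch below parses one line in the /proc/loadavg layout: three load averages, a running/total count of scheduling entities, and the most recently created PID. The sample line and the `LoadAvg` type are illustrative and are not output from the article's system.

```python
from typing import NamedTuple


class LoadAvg(NamedTuple):
    one: float
    five: float
    fifteen: float
    runnable: int   # currently runnable scheduling entities
    total: int      # total scheduling entities on the system
    last_pid: int   # PID of the most recently created process


def parse_loadavg(text):
    """Parse the single line found in /proc/loadavg."""
    fields = text.split()
    runnable, total = fields[3].split("/")
    return LoadAvg(float(fields[0]), float(fields[1]), float(fields[2]),
                   int(runnable), int(total), int(fields[4]))


def read_loadavg(path="/proc/loadavg"):
    """Read and parse the live file (Linux only)."""
    with open(path) as handle:
        return parse_loadavg(handle.read())


if __name__ == "__main__":
    # Invented sample line in the /proc/loadavg layout.
    print(parse_loadavg("1.98 2.15 2.21 3/512 12345\n"))
```

If only the three averages are needed, Python's standard `os.getloadavg()` returns them directly without any parsing.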

On desktop machines, there are graphical user interface tools that we can use to view system load averages.

Understanding System Average Load in Relation to the Number of CPUs

We can’t possibly explain system load or system performance without shedding light on the impact of the number of CPU cores on performance.

Multi-processor Vs Multi-core

- Multi-processor – two or more physical CPUs are integrated into a single computer system.

- Multi-core processor – a single physical CPU that has two or more separate cores (also called processing units) working in parallel. A dual-core CPU has 2 processing units, a quad-core CPU has 4 processing units, and so on.

Furthermore, there is a processor technology, first introduced by Intel to improve parallel computing, known as hyper-threading.

Under hyper-threading, a single physical CPU core appears as two logical CPU cores to the operating system (although in reality there is only one physical hardware component).

Note that a single CPU core can only carry out one task at a time, thus technologies such as multiple CPUs/processors, multi-core CPUs and hyper-threading were brought to life.

With more than one CPU, several programs can be executed simultaneously. Present-day Intel CPUs use a combination of both multiple cores and hyper-threading technology.

To find the number of processing units available on a system, we may use the nproc or lscpu commands as follows:

Another way to find the number of processing units is to use the grep command.
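From a script, the same count is available without spawning a command. Here is a minimal Python sketch, assuming a Linux-style /proc/cpuinfo in which each logical CPU has a line starting with `processor` (true on x86; other architectures may format the file differently). The sample text and the helper name are invented for illustration.

```python
import os


def count_logical_cpus(cpuinfo_text):
    """Count 'processor : N' entries, one per logical CPU."""
    return sum(1 for line in cpuinfo_text.splitlines()
               if line.split(":")[0].strip() == "processor")


# Invented, heavily shortened sample in the /proc/cpuinfo layout.
SAMPLE_CPUINFO = """\
processor\t: 0
model name\t: Example CPU
processor\t: 1
model name\t: Example CPU
"""

if __name__ == "__main__":
    print(count_logical_cpus(SAMPLE_CPUINFO))
    # Logical CPUs as seen by Python; may be None if it cannot be determined.
    print(os.cpu_count())
```

Note that both approaches count logical CPUs, so a hyper-threaded core is counted twice.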

Now, to further understand system load, we will make a few assumptions. Let’s say we have load averages of 1.00, 0.40 and 3.35 over the last 1, 5 and 15 minutes respectively.

On a single-core system this would mean:

- The CPU was fully (100%) utilized on average; 1 process was running on the CPU (1.00) over the last 1 minute.

- The CPU was idle by 60% on average; no processes were waiting for CPU time (0.40) over the last 5 minutes.

- The CPU was overloaded by 235% on average; 2.35 processes were waiting for CPU time (3.35) over the last 15 minutes.

On a dual-core system this would mean:

- One CPU was 100% idle on average while the other was in use; no processes were waiting for CPU time (1.00) over the last 1 minute.

- The CPUs were idle by 160% on average; no processes were waiting for CPU time (0.40) over the last 5 minutes.

- The CPUs were overloaded by 135% on average; 1.35 processes were waiting for CPU time (3.35) over the last 15 minutes.
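The arithmetic behind these bullets can be sketched in a few lines of Python. `describe_load` is a hypothetical helper, and it follows the simplification used above of treating the load purely as CPU demand; on Linux, tasks in uninterruptible sleep also count toward the load, so a high figure does not always mean the CPUs are busy.

```python
def describe_load(load, cpus):
    """Compare a load average with the number of logical CPUs.

    Returns (kind, percent, waiting): kind is "idle", "full" or "overloaded",
    percent is the spare or excess capacity as a percentage of one CPU, and
    waiting is the average number of tasks left without a CPU.
    """
    diff = round(load - cpus, 2)
    if diff > 0:
        return ("overloaded", round(diff * 100), diff)
    if diff < 0:
        return ("idle", round(-diff * 100), 0.0)
    return ("full", 0, 0.0)


if __name__ == "__main__":
    for cpus in (1, 2):
        for load in (1.00, 0.40, 3.35):
            print(cpus, load, describe_load(load, cpus))
```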

In conclusion, if you are a system administrator, high load averages are a real cause for concern. When they are above the number of CPU cores, they signify high demand for the CPUs; load averages below the number of CPU cores tell us that the CPUs are underutilized.

High System Load with Low CPU Utilization on Linux?

2020-11-20

In this post I will show you how to break down Linux system load by the load contributor or reason. You can drill down into the “linux system load in thousands” and “high system load, but low CPU utilization” problem patterns too.

Introduction — Terminology

- The system load metric aims to represent the system “resource demand” as just a single number. On classic Unixes, it only counts the demand for CPU (threads in Runnable state)

- The unit of the system load metric is “number of processes/threads” (or tasks, as the scheduling unit is called on Linux). The load average is the average number of threads over a time period (the last 1, 5 and 15 minutes) that “compete for CPU” on classic Unixes or “either compete for CPU or wait in an uninterruptible sleep state” on Linux

- Runnable state means “not blocked by anything”, ready to run on CPU. The thread is either currently running on CPU or waiting in the CPU runqueue for the OS scheduler to put it onto CPU

- On Linux, the system load includes threads both in Runnable (R) and in Uninterruptible sleep (D) states (typically disk I/O, but not always)

So, on Linux, an absurdly high load figure can be caused by having lots of threads in Uninterruptible sleep (D) state, in addition to CPU demand.
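As a rough illustration of these states, the Python sketch below extracts the state letter from a line in the /proc/&lt;pid&gt;/stat layout and decides whether that task would count toward the Linux load. The sample lines in the usage section are shortened and invented; `parse_stat` and `counts_toward_load` are hypothetical helper names.

```python
def parse_stat(stat_line):
    """Return (comm, state) from a /proc/<pid>/stat style line.

    The command name sits in parentheses and may itself contain spaces or
    parentheses, so split on the last ')' rather than on whitespace.
    """
    head, _, tail = stat_line.rpartition(")")
    comm = head.split("(", 1)[1]
    state = tail.split()[0]
    return comm, state


def counts_toward_load(state):
    """On Linux, Runnable (R) and Uninterruptible sleep (D) tasks count."""
    return state in ("R", "D")


if __name__ == "__main__":
    # Invented, truncated sample lines; real stat lines have many more fields.
    for line in ("4321 (kworker/3:1) D 2 0 0 0", "77 (bash) S 1 77 77 0"):
        comm, state = parse_stat(line)
        print(comm, state, counts_toward_load(state))
```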

Troubleshooting high system load on Linux

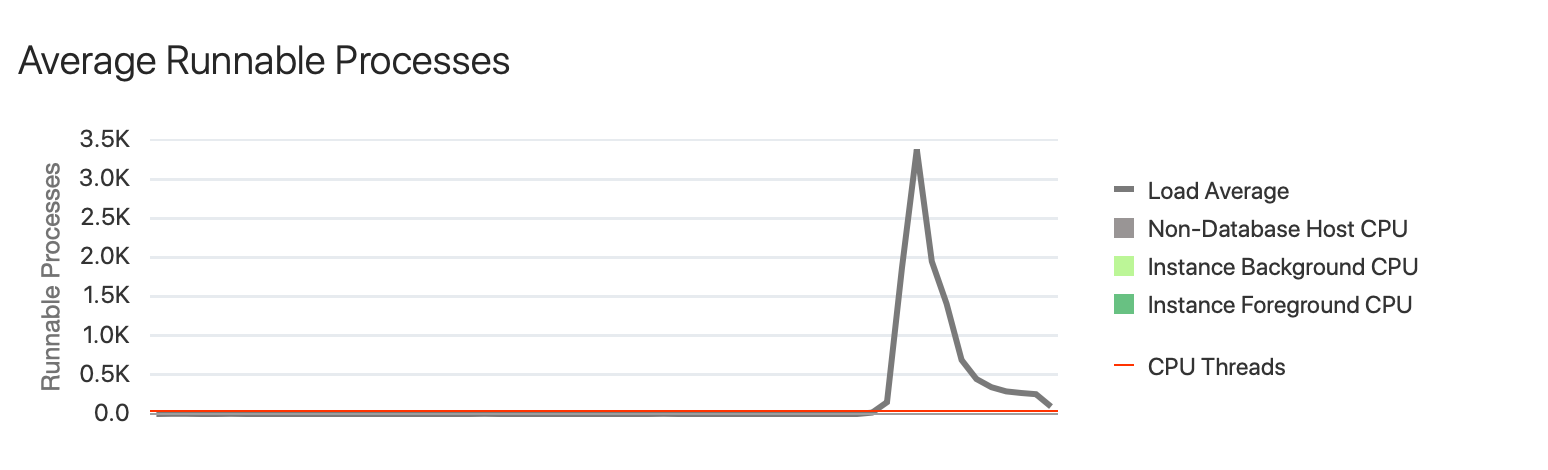

Here’s one example from a Linux database server with 32 CPUs:

Let’s confirm this with standard OS level commands, to avoid getting misled by potential GUI magic by the monitoring tool:

Does this mean that we have a huge demand for CPU time? Must have lots of threads in the CPU runqueues, right?

But the CPUs are well over 50% idle! CPU utilization is around 40-45% when adding %user, %nice and %system together. %iowait means that the CPU is idle; it just happens to have had a synchronous I/O submitted by a thread on it before becoming idle.

So, we don’t seem to have a CPU oversubscription scenario in this case. Is there a way to systematically drill down deeper by measuring what (and who) is contributing to this load then?

Yes, and it’s super simple. Remember, the current system load is just the number of threads (called tasks) on Linux that are either in R or D state. We can just run ps to list the current number of threads in these states:

In the above command, ps -eo s,user will list the thread state field first and any other fields of interest (like username), later. The grep ^[RD] filters out any threads in various “idle” and “sleeping” states that don’t contribute to Linux load (S,T,t,Z,I etc).
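The same breakdown can be sketched in Python. The example below takes text in the shape produced by `ps -eo s,user` (state first, then username), keeps only the R and D rows, and counts them per state and user. The sample rows are invented for illustration; on a live system you could feed it real output obtained with `subprocess.run(["ps", "-eo", "s,user"], capture_output=True, text=True).stdout`.

```python
from collections import Counter

# Invented sample in the shape of `ps -eo s,user` output (header included).
SAMPLE_PS = """\
S USER
S root
D root
D root
R oracle
S oracle
D root
"""


def load_contributors(ps_output, top=20):
    """Count tasks in R or D state, grouped by (state, user), busiest first."""
    counts = Counter()
    for line in ps_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] in ("R", "D"):
            counts[(fields[0], fields[1])] += 1
    return counts.most_common(top)


if __name__ == "__main__":
    for (state, user), count in load_contributors(SAMPLE_PS):
        print(count, state, user)
```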

Indeed, in addition to a total of 24 threads in Runnable state (R), it looks like there are over 3000 threads in Uninterruptible Sleep (D) state, which typically (but not always) indicates sleeping due to synchronous disk I/O. They are all owned by root. Is there some daemon that has gone crazy and has all these active processes/threads trying to do I/O?

Let’s add one more column to ps to list the command line/program name too:

But now “root” seems to be gone from the top and we see only some Oracle processes near the top, with relatively little R/D activity. My command has a head -20 filter at the end to avoid printing out thousands of lines of output when most of the ps output lines are unique, which is the case here with all the individual kworker threads with unique names. There are thousands of them, each contributing just “1 thread with this name” to the load summary.

If you don’t want to start mucking around with further awk/sed commands to group the ps output better, you can use my pSnapper from 0x.tools that does the work for you. Also, it samples thread states multiple times and prints a breakdown of activity averages (to avoid getting misled by a single “unlucky” sample):

By default, pSnapper replaces any digits in the task’s comm field before aggregating (the comm2 field would leave them intact). Now it’s easy to see that our extreme system load spike was caused by a large number of kworker kernel threads (with “root” as process owner). So this is not about some userland daemon running under root, but a kernel problem.
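To illustrate the idea (this is not pSnapper's actual code), the sketch below collapses the digits in each task name so that thousands of uniquely named kworker threads fall into one bucket, and then averages the counts over several samples. The `*` placeholder, the sample data and the function names are assumptions made for the example.

```python
import re
from collections import Counter


def normalize_comm(comm):
    """Replace every run of digits so 'kworker/6:99' and 'kworker/12:1' match."""
    return re.sub(r"\d+", "*", comm)


def average_active_threads(samples):
    """samples: list of snapshots, each a list of (state, comm) for R/D tasks.

    Returns {(state, normalized_comm): average number of threads per snapshot}.
    """
    totals = Counter()
    for snapshot in samples:
        for state, comm in snapshot:
            totals[(state, normalize_comm(comm))] += 1
    return {key: count / len(samples) for key, count in totals.items()}


if __name__ == "__main__":
    # Two invented snapshots of tasks that were in R or D state.
    snapshots = [
        [("D", "kworker/6:99"), ("D", "kworker/12:1"), ("R", "oracle")],
        [("D", "kworker/7:3")],
    ]
    print(average_active_threads(snapshots))
```

Averaging over several snapshots is what protects against a single unrepresentative sample.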

Drilling down deeper — WCHAN

I’ll drill down deeper into this with another instance of the same problem (on the same machine). System load is in hundreds this time:

Let’s break the demand down by comm and state fields again, but I’ll also add the current system call and kernel wait location (wchan) to the breakdown. With these extra fields, I should run pSnapper with sudo, as modern Linux kernel versions tend to block access to (or hide values in) some fields when running as non-root:

You may need to scroll right to see the full output.

In the above breakdown of current system load, close to half of activity was caused by kernel kworker threads that were currently sleeping in blkdev_issue_flush kernel function responsible for an internal fsync to ensure that the writes get persisted to storage. The remaining “close to half” active threads were by oracle processes, waiting in a synchronous pread64 system call, in do_blockdev_direct_IO kernel function.

From the “Running (ON CPU)” lines you see that there was some CPU usage too, but it doesn’t seem to be anywhere near the hundreds of threads in I/O sleeps.

While doing these tests, I ran an Oracle benchmark with 1000 concurrent connections (that were sometimes idle), so the 253 sessions waiting in the synchronous pread64 system calls can be easily explained. Synchronous single block reads are done for index tree walking & index-based table block access, for example. But why do we see so many kworker kernel threads waiting for I/O too?

The answer is asynchronous I/O and I/Os done against higher level block devices (like the device-mapper dm devices for LVM and md devices for software RAID). With asynchronous I/O, the thread completing an I/O request in kernel memory structures is different from the (application) thread submitting the I/O. That’s where the kernel kworker threads come in and the story gets more complex with LVMs/dm/md devices (as there are multiple layers of I/O queues on the request path).

So you could say that the 253 threads where the oracle processes were sleeping within the pread64 syscall were the synchronous reads, and the 255.5 kernel threads (without a system call, as kernel code doesn’t need system calls to enter kernel mode) are due to asynchronous I/O.

Note that while synchronous I/O waits like pread64 will contribute to Linux system load because they end up in D state, the asynchronous I/O completion check (and IO reaping) system call io_getevents ends up in S state (Sleeping), if it’s instructed to wait by the application. So, only synchronous I/O operations (by the application or kernel threads) contribute to Linux system load!

Additionally, the io_submit system call for asynchronous submission of I/O requests may itself get blocked before a successful IO submit. This can happen if the I/O queue is already full of non-complete, non-reaped I/O requests or there’s a “roadblock” somewhere earlier on the path to the block device (like a filesystem layer or LVM issue). In such case an io_submit call itself would get stuck (despite the supposed asynchronous nature of I/O) and the thread issuing the I/O ends up waiting in D state, despite the I/O not having even been sent out to the hardware device yet.

There’s at least one Linux kernel bug causing trouble at the touchpoint of kworkers and dm/md devices in high-throughput systems, but I’ll leave it for a future post.

You don’t have to guess where the bottleneck resides; just dig deeper using what pSnapper offers. One typical question is: which file(s) or devices are we waiting for the most? Let’s add filename (or filenamesum, which consolidates filenames with digits in them into one) to the breakdown:

Apparently the system load has increased by now (we have over 1000 active threads in R/D state). Most of the synchronous read waits witnessed are against /data/oracle/LIN*C/soe_bigfile.dbf file (by oracle user). The kworker threads don’t show any filenames for their I/Os as pSnapper gets the filename from the system call arguments (and resolves the file descriptor to a filename, where possible) — but kernel threads don’t need system calls as they are already operating deep in the kernel, always in kernel mode. Nevertheless, this field is useful in many application troubleshooting scenarios.

Drilling down deeper — kernel stack

Let’s dig even deeper. You’ll need to scroll right to see the full picture; I’ve highlighted some things all the way to the right. We can sample the kernel stack of a thread too (kernel threads and also userspace application threads, when they happen to be executing kernel code):

Looks like a different hiccup has happened in my benchmark system now, as additional WCHAN (kernel sleep location) values have popped up in the report: call_rwsem_down_*_failed by both Oracle and kworker threads and xfs_log_force_lsn waits by 32 kworker threads. rwsem stands for “reader-writer semaphore”, which is essentially a low-level lock. So, a large part of our system load (D state waits) is now caused by some sort of locking in the kernel and not by waiting for hardware I/O completion.

If you scroll all the way right and follow the kernel function call chain, it becomes (somewhat) evident that we are waiting for XFS inode locks when accessing (both reading and writing) files. Additionally, when searching what the xfs_log_force_lsn function does, you’d see that this is an XFS journal write that persists XFS metadata updates to disk so that you wouldn’t end up with a broken filesystem in case of a crash. XFS delayed logging must be ordered and checkpoints atomic, so there may be cases where one XFS-related kworker on one CPU will block other kworkers (that have assumed the same role) on remaining CPUs. For example, if the XFS log/checkpoint write is too slow for some reason. It’s probably not a coincidence that pSnapper shows exactly 32 threads waiting in xfs_log_force_lsn function on my 32 CPU system.

Why do we even have noticeable XFS metadata generation? Unlike ZFS, XFS does not log actual data in the journal, just the changed file metadata. Every time you write to a file, some metadata must be logged (last file modification timestamp) and even reads can cause metadata to be generated (filesystem mount options noatime and to lesser extent relatime avoid metadata generation on reads).

So in addition to the 32 kernel threads waiting for XFS log sync completion, we have hundreds of concurrent application processes (Oracle) and different kernel kworker threads that apparently contend for the XFS inode lock (note the xfs_ilock kernel function seen in the stack). All this lock contention and sleeping will contribute to system load as the threads will be in R or D state.

With any lock contention, one reasonable question is “why hasn’t the lock holder released it yet?” In other words, what is the lock holder itself doing for so long? This could be explained by slow I/O into the XFS filesystem journal, where the slow XFS log sync prevents everyone else from generating more XFS metadata (buffers are full), including the thread that may already hold an inode lock of the “hot” file because it wants to change its last change timestamp. And everyone else will wait!

So, the top symptom will point towards an inode lock contention/semaphore problem, while a deeper analysis will show that slow XFS journal writes, possibly experienced by just one thread, are the root cause. There are good performance reasons to put the filesystem journal on a separate block device even in the days of fast SSDs. I have just connected a few dots here thanks to previously troubleshooting such problems, but in order to be completely systematic, kernel tracing or xfs_stats sampling would be needed to show the relationship between XFS log sync waits and all the inode semaphore waits. I’ll leave this to a future blog entry.

How to troubleshoot past problems?

Running ps or psn will only help you troubleshoot currently ongoing problems. If you’ve been paying attention to my Always-on Profiling of Production Systems posts, you know that I’ve published an open source, super-efficient /proc sampler tool, xcapture, that can save a log of sampled “active thread states” into CSV files. I haven’t written fancier tools for analyzing the output yet, but on the command line you can just run “SQL” against the CSV using standard Linux tools:

In the above example, I just zoomed in to one minute of interest (11:47) with grep and then used awk, sort and uniq to project the fields of interest and do a group by + order by top activity. Note that I didn’t have 32165 threads active here; I need to divide this figure by 60, as I’m zooming in to a whole minute (sampling happens once per second), to get the average number of active threads in R/D states (about 536).
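The same “group by” can be written in Python if the shell pipeline gets unwieldy. This sketch assumes a CSV with `timestamp`, `state` and `comm` columns sampled once per second; those column names and the sample rows are hypothetical and are not xcapture's real output format.

```python
import csv
import io
from collections import Counter

# Invented sample data; real capture files will have different columns.
SAMPLE_CSV = """\
timestamp,state,comm
11:47:01,D,kworker
11:47:01,D,kworker
11:47:02,D,oracle
11:48:00,R,oracle
"""


def avg_active_threads(csv_text, minute_prefix, samples_per_minute=60):
    """Average active threads per (state, comm) within one minute of samples."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["timestamp"].startswith(minute_prefix):
            counts[(row["state"], row["comm"])] += 1
    # Each row is one thread seen in one sample, so divide by the sample count.
    return {key: n / samples_per_minute for key, n in counts.most_common()}


if __name__ == "__main__":
    for key, avg in avg_active_threads(SAMPLE_CSV, "11:47").items():
        print(key, round(avg, 3))
```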

Summary

The main point of this article was to demonstrate that high system load on Linux doesn’t come only from CPU demand, but also from disk I/O demand — and more specifically number of threads that end up in the uninterruptible sleep state D for whatever reason. Sometimes this reason is synchronous disk I/O, but sometimes the threads don’t even get that far when they hit some kernel-level bottleneck even before getting to submit the block I/O to the hardware device. Luckily with tools like pSnapper (or just ps with the right arguments), it is possible to drill down pretty deep from userspace, without having to resort to kernel tracing.

Further Reading

Brendan Gregg did some archaeological investigation into the origins of Linux load accounting differences. In short, the developer felt that it wouldn’t be right for the system load to drop when there’s an I/O bottleneck due to things like swapping. When you hit a sudden I/O bottleneck, CPU utilization typically drops as your code waits more, and it felt unreasonable to the developer that the “system load” should drop while the system gets less work done. In other words, on Linux the load metric tries to account for system load, not just CPU load. However, as I’ve shown above, there are quite a few reasons (like asynchronous I/O activity not contributing to load) why the single-number system load metric alone won’t give you the complete picture of your actual system load.

Next articles

Here’s a list of blog entries that I’m thinking of writing next (at some point). Let me know on Twitter, HackerNews or in the comments section which one I should work on first!

- Application I/O waits much longer than block I/O latency in iostat?

- Troubleshooting too many (thousands of) kworker threads

- Pressure Stall Information (PSI) as the new Linux load metric, how useful is it?
