- Spark Installation on Linux Ubuntu

- Prerequisites:

- Java Installation On Ubuntu

- Python Installation On Ubuntu

- Apache Spark Installation on Ubuntu

- Spark Environment Variables

- Test Spark Installation on Ubuntu

- Spark Shell

- Spark Web UI

- Spark History server

- Conclusion

- Installing Apache Spark on Ubuntu 20.04 or 18.04

- Steps for Apache Spark Installation on Ubuntu 20.04

- 1. Install Java with other dependencies

- 2. Download Apache Spark on Ubuntu 20.04

- 3. Extract Spark to /opt

- 4. Add Spark folder to the system path

- 5. Start Apache Spark master server on Ubuntu

- 6. Access Spark Master (spark://Ubuntu:7077) – Web interface

- 7. Run Slave Worker Script

- Use Spark Shell

- Server Start and Stop commands

- How to Install Spark on Ubuntu

- Install Packages Required for Spark

- Download and Set Up Spark on Ubuntu

- Configure Spark Environment

- Start Standalone Spark Master Server

- Start Spark Slave Server (Start a Worker Process)

- Specify Resource Allocation for Workers

- Test Spark Shell

- Test Python in Spark

- Basic Commands to Start and Stop Master Server and Workers

Spark Installation on Linux Ubuntu

This article walks through installing Apache Spark on an Ubuntu server. In production, most Spark applications run on Linux, so it is useful to know how to install and run Spark on a Linux-based OS such as Ubuntu.

Although the article uses Ubuntu, the same steps work on other Linux distributions such as CentOS and Debian. I followed the steps below to set up my own Apache Spark cluster on an Ubuntu server.

Prerequisites:

- A running Ubuntu server

- Root access to the Ubuntu server

- If you want to install Apache Spark on top of Hadoop and YARN, install and set up the Hadoop cluster and YARN before proceeding with this article.

If you just want to run Spark in standalone mode, proceed with this article.

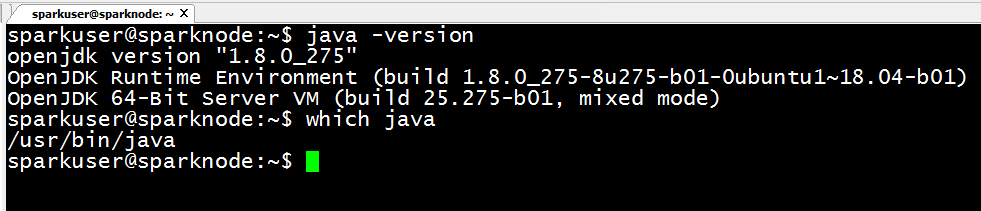

Java Installation On Ubuntu

Apache Spark is written in Scala, which runs on the Java Virtual Machine (JVM), so you need Java installed to run Spark. Because Oracle Java requires a license, I am using OpenJDK here. If you prefer Java from Oracle or another vendor, feel free to use it instead. This article uses JDK 8.

After installing the JDK, check that it installed successfully by running java -version
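A minimal sketch of this step. The package name openjdk-8-jdk is an assumption (the article only says OpenJDK and JDK 8). The snippet checks for an existing Java first and otherwise prints the install command rather than running it:

```shell
# Check whether a Java runtime is already on the PATH.
if command -v java >/dev/null 2>&1; then
  JAVA_STATUS="present"
  # java -version writes to stderr, so redirect it to stdout.
  java -version 2>&1 | head -n 1
else
  JAVA_STATUS="missing"
  # Assumed package name for OpenJDK 8 on Ubuntu.
  echo "Java not found. Install it with:"
  echo "  sudo apt update && sudo apt install -y openjdk-8-jdk"
fi
```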

Python Installation On Ubuntu

You can skip this section if you only want to run Spark with Scala or Java on the Ubuntu server.

Python is needed only if you want to run PySpark examples (Spark with Python) on the Ubuntu server.

Apache Spark Installation on Ubuntu

To install Apache Spark on Ubuntu, open the Apache Spark download site, go to the Download Apache Spark section, and click the link in point 3. This takes you to a page with mirror URLs for the download. Copy the link from one of the mirror sites.

If you want a different version of Spark or Hadoop, select it from the drop-downs in points 1 and 2. The link in point 3 then changes to match the selected version.

Use the wget command to download Apache Spark to your Ubuntu server.

Once the download is complete, extract the archive with the tar command (tar is a file archiving tool). When extraction finishes, rename the folder to spark.

Spark Environment Variables

Add the Apache Spark environment variables to your .bashrc or .profile file. Open the file in the vi editor, define SPARK_HOME as the path to the spark folder, and add $SPARK_HOME/bin to PATH.

Now load the environment variables into the current session by running source ~/.bashrc

If you added them to the .profile file instead, restart your session by closing and reopening it.
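As a rough illustration, the variables could look like the following. The location $HOME/spark is an assumption (the article does not say where the renamed spark folder lives), so adjust it to your own path:

```shell
# Assumption: Spark was extracted and renamed to "spark" in your home directory.
export SPARK_HOME="$HOME/spark"
# Make spark-submit, spark-shell and pyspark available from any directory.
export PATH="$PATH:$SPARK_HOME/bin"
echo "SPARK_HOME is $SPARK_HOME"
```

Placing these two export lines at the end of .bashrc makes them apply to every new shell session.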

Test Spark Installation on Ubuntu

This completes the Apache Spark installation on Ubuntu. Now let's run an example that ships with the Spark binary distribution.

Here I use the spark-submit command to estimate the value of Pi by running the org.apache.spark.examples.SparkPi example with an argument of 10, which is the number of partitions the computation is split into. You can find spark-submit in the $SPARK_HOME/bin directory.
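A sketch of the SparkPi run, under the assumption that the examples jar sits in $SPARK_HOME/examples/jars, where the binary distribution normally places it. The fallback path $HOME/spark is hypothetical. The jar name contains the Scala and Spark versions, so the snippet finds it with a glob instead of hard-coding it:

```shell
# Fall back to an assumed install location if SPARK_HOME is not set.
SPARK_HOME="${SPARK_HOME:-$HOME/spark}"

# Locate the examples jar without hard-coding the version in its name.
EXAMPLES_JAR=""
for jar in "$SPARK_HOME"/examples/jars/spark-examples_*.jar; do
  if [ -f "$jar" ]; then
    EXAMPLES_JAR="$jar"
    break
  fi
done

if [ -n "$EXAMPLES_JAR" ]; then
  # The trailing 10 is the number of partitions SparkPi splits the work into.
  "$SPARK_HOME/bin/spark-submit" \
    --class org.apache.spark.examples.SparkPi \
    "$EXAMPLES_JAR" 10
else
  echo "No examples jar found under $SPARK_HOME/examples/jars"
fi
```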

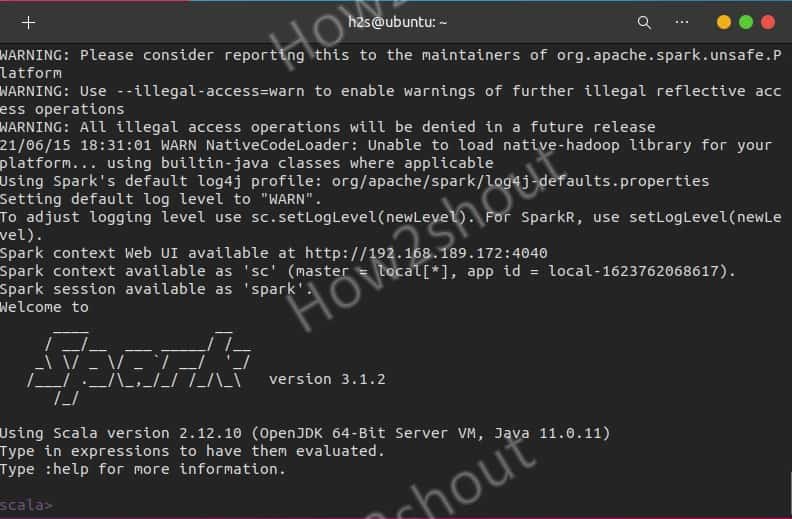

Spark Shell

The Apache Spark binary distribution comes with an interactive spark-shell. To start a shell for the Scala language, go to your $SPARK_HOME/bin directory and type spark-shell. This command loads Spark and displays the version you are using.

Note: spark-shell runs Spark with Scala only. To run PySpark, open the pyspark shell by running $SPARK_HOME/bin/pyspark. Make sure Python is installed before you start the pyspark shell.

By default, spark-shell provides the spark (SparkSession) and sc (SparkContext) objects for you to use.

spark-shell also starts a Spark context web UI, which by default is accessible at http://ip-address:4040.

Spark Web UI

Apache Spark provides a suite of web UIs (Jobs, Stages, Tasks, Storage, Environment, Executors, and SQL) for monitoring the status of your Spark application, the resource consumption of the Spark cluster, and the Spark configuration. The web UI also shows how Spark actions and transformations are executed. You can open it at http://ip-address:4040/, replacing ip-address with your server's IP address.

Spark History server

Create the $SPARK_HOME/conf/spark-defaults.conf file and add the event log configuration to it.

Then create the Spark event log directory. Spark keeps logs there for every application you submit.
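A minimal sketch of both steps. The directory /tmp/spark-events is an assumption (it is also Spark's default event log location), and the three property names are standard Spark settings rather than values taken from this article. If SPARK_HOME is not set, the snippet falls back to a scratch directory so it can be tried without touching a real installation:

```shell
# Fall back to a scratch directory if SPARK_HOME is not set.
SPARK_HOME="${SPARK_HOME:-$(mktemp -d)}"
# Assumed event log location.
EVENT_DIR="/tmp/spark-events"

# Create the conf directory (if needed) and the event log directory.
mkdir -p "$SPARK_HOME/conf" "$EVENT_DIR"

# Append the event log settings to spark-defaults.conf.
cat >> "$SPARK_HOME/conf/spark-defaults.conf" <<EOF
spark.eventLog.enabled           true
spark.eventLog.dir               file://$EVENT_DIR
spark.history.fs.logDirectory    file://$EVENT_DIR
EOF

cat "$SPARK_HOME/conf/spark-defaults.conf"
```

The first two settings make applications write their event logs, and the third tells the history server where to read them, so both sides need to point at the same directory.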

Run $SPARK_HOME/sbin/start-history-server.sh to start the history server.

With this configuration, the history server runs on port 18080 by default.

Run the Pi example again with the spark-submit command, then refresh the history server page, which should show the recent run.

Conclusion

In summary, you have learned the steps involved in installing Apache Spark on an Ubuntu server, as well as how to start the history server and access the web UI.


Installing Apache Spark on Ubuntu 20.04 or 18.04

Here we will see how to install Apache Spark on Ubuntu 20.04 or 18.04. The commands also apply to Linux Mint, Debian, and other similar Linux systems.

Apache Spark is a general-purpose data processing engine. Data engineers and data scientists use it to run very fast queries on large amounts of data, in the terabyte range. It is a framework for cluster-based computation that competes with classic Hadoop MapReduce by using the RAM available in the cluster to execute jobs faster.

In addition, Spark lets you query data via SQL, process it as a stream in (near) real time, and provides its own graph processing library and machine learning library. The framework relies on in-memory technology for this purpose, i.e. it can keep queries and data directly in the main memory of the cluster nodes.

Apache Spark is ideal for processing large amounts of data quickly. Spark's programming model is based on Resilient Distributed Datasets (RDDs), collections whose elements are distributed across the cluster. This open-source platform supports a variety of programming languages such as Java, Scala, Python, and R.

Steps for Apache Spark Installation on Ubuntu 20.04

The steps given here can also be used on other Ubuntu versions such as 21.04 and 18.04, as well as on Linux Mint, Debian, and similar Linux distributions.

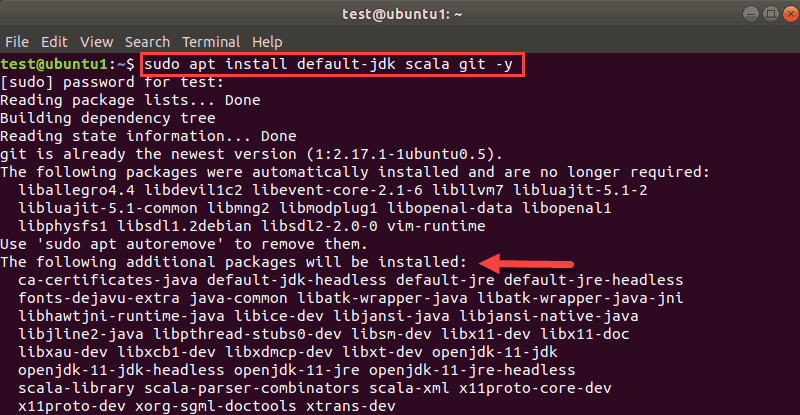

1. Install Java with other dependencies

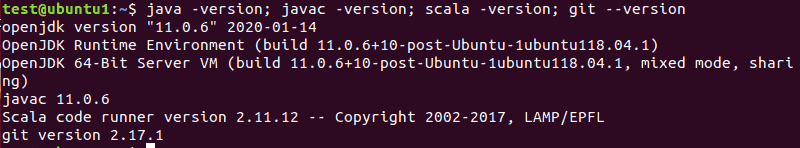

Here we install the latest available version of Java, which Apache Spark requires, along with Git and Scala to extend its capabilities.

2. Download Apache Spark on Ubuntu 20.04

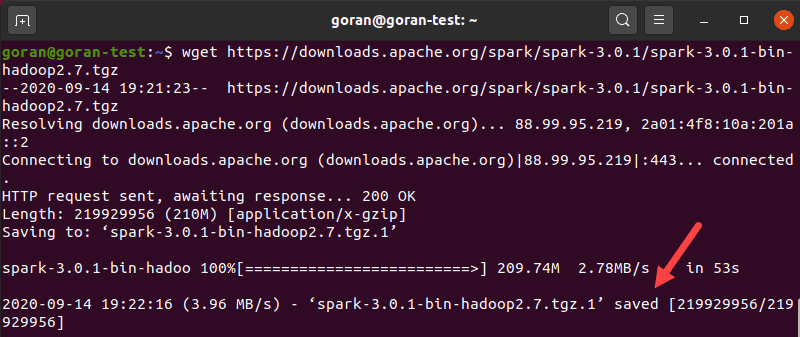

Now visit the official Spark website and download the latest available version. At the time of writing this tutorial the latest version was 3.1.2, so that is the one we download here. If a newer version is available when you perform the installation, use that instead. Copy the download link and use it with wget, or download the file directly to your system.

3. Extract Spark to /opt

To make sure we don't delete the extracted folder accidentally, let's place it somewhere safe, i.e. the /opt directory.

Also change the permissions of the folder so that Spark can write inside it.
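One possible way to script this step is sketched below. The helper name extract_spark is hypothetical, and it hands ownership of the folder to the current user with chown, a narrower alternative to opening the folder's permissions to everyone. Commands run through sudo by default, and setting SUDO to an empty string runs them without it:

```shell
# extract_spark ARCHIVE TARGET_DIR  (hypothetical helper name)
# Commands run through sudo by default; set SUDO="" to run without it.
extract_spark() {
  archive="$1"
  target="$2"
  ${SUDO-sudo} mkdir -p "$target"
  # --strip-components=1 drops the archive's top-level folder so the
  # contents land directly in TARGET_DIR.
  ${SUDO-sudo} tar -xzf "$archive" -C "$target" --strip-components=1
  # Hand the folder to the current user so Spark can write inside it.
  ${SUDO-sudo} chown -R "$(id -un):$(id -gn)" "$target"
}
```

For the 3.1.2 download, a call might look like extract_spark spark-3.1.2-bin-hadoop3.2.tgz /opt/spark, where the archive file name is an assumption based on the usual naming of Spark releases.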

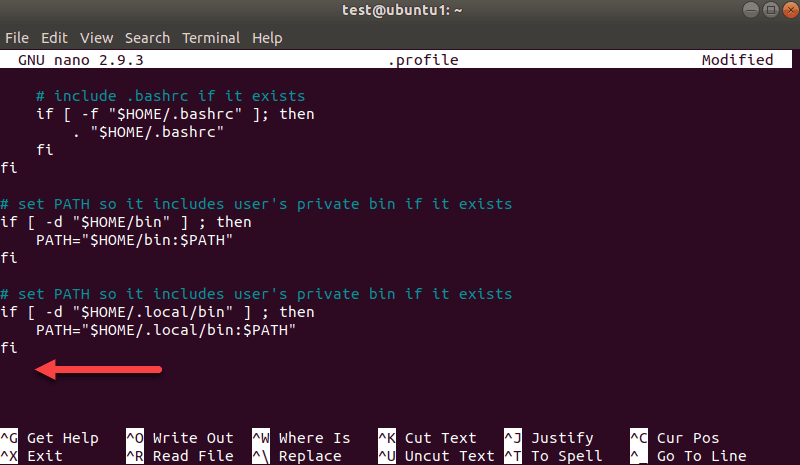

4. Add Spark folder to the system path

Now that the files are in the /opt directory, running a Spark command in the terminal means typing its full path every time, which is annoying. To solve this, we configure environment variables for Spark by adding its home paths to the user's .profile or .bashrc file. This lets us run Spark commands from anywhere in the terminal, regardless of the current directory.

Then reload the shell, for example by running source ~/.bashrc

5. Start Apache Spark master server on Ubuntu

Since we have already configured the environment variables for Spark, let's start its standalone master server by running the start-master.sh script.

Change the Spark Master Web UI and Listen Port (optional, use only if required)

If you want to use custom ports, pass the options below to the start script.

--port – Port for the service to listen on (default: 7077 for master, random for worker)

--webui-port – Port for the web UI (default: 8080 for master, 8081 for worker)

Example: to run the Spark web UI on port 8082 and make the master listen on port 7072, start it with start-master.sh --port 7072 --webui-port 8082
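The example can be sketched as a small snippet that builds the command from two variables. The variable names are illustrative, and the master is started only if start-master.sh is actually on the PATH:

```shell
# Custom ports from the example above.
MASTER_PORT=7072
WEBUI_PORT=8082

# Build the full command so it can be inspected before it runs.
MASTER_CMD="start-master.sh --port $MASTER_PORT --webui-port $WEBUI_PORT"
echo "$MASTER_CMD"

# Run it only when the Spark sbin scripts are available.
if command -v start-master.sh >/dev/null 2>&1; then
  $MASTER_CMD
fi
```

With these values the web UI would be reachable on port 8082, and workers would connect to the master on port 7072 instead of 7077.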

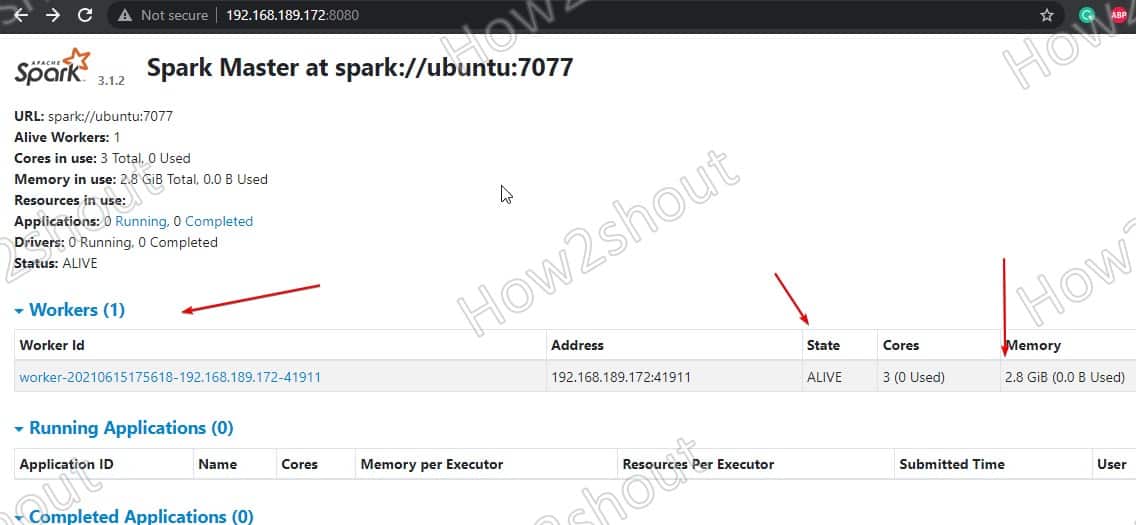

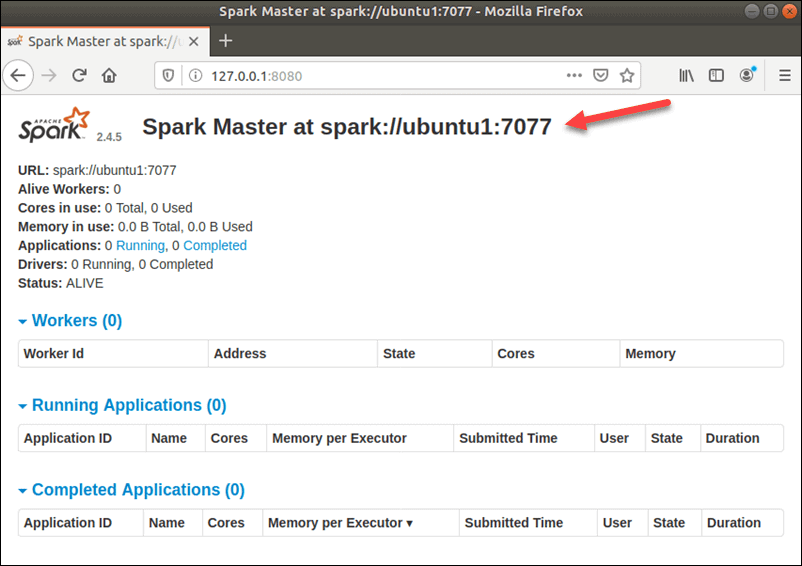

6. Access Spark Master (spark://Ubuntu:7077) – Web interface

Now let's access the web interface of the Spark master server, which is running on port 8080. In your browser, open http://127.0.0.1:8080.

Our master is running at spark://Ubuntu:7077, where Ubuntu is the system hostname and could be different in your case.

If you are using a server without a GUI and want to use the browser of another system that can reach the server's IP address, first open port 8080 in the firewall (for example with ufw). This lets you access the Spark web interface remotely at http://your-server-ip-address:8080

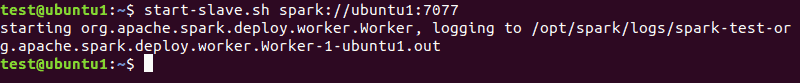

7. Run Slave Worker Script

To run a Spark worker, we start it with the worker script in the directory we extracted to /opt. The command syntax is:

start-worker.sh spark://hostname:port

In the above command, replace hostname and port with your own values. If you don't know your hostname, type hostname in the terminal. The master listens on port 7077 by default, as you can see in the screenshot above.

Since our hostname is ubuntu, the command becomes start-worker.sh spark://ubuntu:7077
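A small sketch that derives the master URL from the local hostname instead of typing it by hand. It assumes the master runs on the same machine with the default port 7077. The script name start-worker.sh applies to Spark 3.1 and later, while earlier releases call it start-slave.sh:

```shell
# Build the master URL from this machine's hostname and the default port.
MASTER_HOST="$(hostname 2>/dev/null || uname -n)"
MASTER_PORT=7077
MASTER_URL="spark://${MASTER_HOST}:${MASTER_PORT}"
echo "Master URL: $MASTER_URL"

# Start the worker only if the script is on the PATH (Spark 3.1+ name).
if command -v start-worker.sh >/dev/null 2>&1; then
  start-worker.sh "$MASTER_URL"
else
  echo "start-worker.sh not found; check that \$SPARK_HOME/sbin is on your PATH"
fi
```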

Refresh the web interface and you will see the worker ID and the amount of memory allocated to it.

If you want, you can change the amount of RAM allocated to the worker. To do so, restart the worker with the -m option and the amount of memory you want to give it.

Use Spark Shell

Those who want to start programming in the Spark shell can open it by typing spark-shell

To see the supported options, type :help and to exit the shell, type :quit

To start the Python shell instead of Scala, use pyspark

Server Start and Stop commands

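If you want to start or stop the master or worker instances individually, use the corresponding scripts, such as start-master.sh and stop-master.sh.

To stop everything at once, run stop-all.sh

Or, to start everything at once, run start-all.sh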

Ending Thoughts:

In this way, we can install and start using Apache Spark on Ubuntu Linux. To learn more, refer to the official documentation. Compared to Hadoop, Spark is still relatively young, so you have to reckon with some rough edges. Even so, it has proven itself many times in practice and enables new use cases in the area of big or fast data through fast job execution and data caching. Finally, it offers a uniform API for tools that would otherwise have to be operated separately in the Hadoop environment.


How to Install Spark on Ubuntu


Apache Spark is a framework used in cluster computing environments for analyzing big data. This platform became widely popular due to its ease of use and the improved data processing speeds over Hadoop.

Apache Spark is able to distribute a workload across a group of computers in a cluster to more effectively process large sets of data. This open-source engine supports a wide array of programming languages. This includes Java, Scala, Python, and R.

In this tutorial, you will learn how to install Spark on an Ubuntu machine. The guide will show you how to start a master and slave server and how to load Scala and Python shells. It also provides the most important Spark commands.

- An Ubuntu system.

- Access to a terminal or command line.

- A user with sudo or root permissions.

Install Packages Required for Spark

Before downloading and setting up Spark, you need to install the necessary dependencies: a JDK, Scala, and Git.

Open a terminal window and install all three packages at once with a single apt install command.

The output lists the packages that will be installed.

Once the process completes, verify the installed dependencies by checking the version of each tool.

The output prints the versions if the installation completed successfully for all packages.
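The verification can be sketched as a loop over the expected tools. The package names in the printed install hint (default-jdk, scala, git) are assumptions about the Ubuntu repositories, and nothing is installed automatically:

```shell
MISSING=""
CHECKED=0

# Look for each tool on the PATH and remember the ones that are absent.
for tool in java javac scala git; do
  CHECKED=$((CHECKED + 1))
  if command -v "$tool" >/dev/null 2>&1; then
    echo "found:   $tool"
  else
    echo "missing: $tool"
    MISSING="$MISSING $tool"
  fi
done

if [ -n "$MISSING" ]; then
  # Assumed Ubuntu package names for the JDK, Scala, and Git.
  echo "Install the dependencies with: sudo apt install default-jdk scala git -y"
fi
```

Once everything is found, running java -version, scala -version, and git --version prints the installed versions.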

Download and Set Up Spark on Ubuntu

Now download the version of Spark you want from the project website. We will use Spark 3.0.1 with Hadoop 2.7, as it is the latest version at the time of writing this article.

Use the wget command with the direct link to download the Spark archive.

When the download completes, wget prints a message confirming that the file was saved.

Note: If the URL does not work, go to the Apache Spark download page to check for the latest version. Remember to replace the Spark version number in the subsequent commands if you change the download URL.

Now, extract the saved archive using the tar command.

Let the process complete. The output shows the files that are being unpacked from the archive.

Finally, move the unpacked directory spark-3.0.1-bin-hadoop2.7 to the /opt/spark directory using the mv command.

The terminal returns no response if it successfully moves the directory. If you mistype the name, mv reports that the file or directory does not exist.
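The download, extract, and move steps can be sketched together as follows. The Apache archive URL is an assumption (older releases are usually kept there), and install_spark is a hypothetical helper that is only defined here, not run:

```shell
SPARK_VERSION="3.0.1"
HADOOP_PROFILE="hadoop2.7"
SPARK_DIR="spark-${SPARK_VERSION}-bin-${HADOOP_PROFILE}"
# Assumed download location: the Apache archive keeps older releases.
SPARK_URL="https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/${SPARK_DIR}.tgz"

# Hypothetical helper: download, unpack, and move in one go.
# Each step runs only if the previous one succeeded.
install_spark() {
  wget "$SPARK_URL" &&
    tar xvf "${SPARK_DIR}.tgz" &&
    sudo mv "$SPARK_DIR" /opt/spark
}

echo "Archive URL: $SPARK_URL"
```

Calling install_spark performs the three steps. Changing SPARK_VERSION and HADOOP_PROFILE at the top keeps the file name, directory name, and URL consistent when you pick a different release.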

Configure Spark Environment

Before starting a master server, you need to configure environment variables. There are a few Spark home paths you need to add to the user profile.

Use the echo command to append three export lines with these paths to .profile.

You can also add the export lines by editing the .profile file in the editor of your choice, such as nano or vim.

For example, to use nano, enter nano .profile

When the profile loads, scroll to the bottom of the file.

Then add the same three export lines.

Exit and save changes when prompted.

When you finish adding the paths, load the .profile file in the command line by typing source ~/.profile
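A sketch of the three export lines, assuming Spark lives in /opt/spark and Python 3 is at /usr/bin/python3 (the PYSPARK_PYTHON line in particular is an assumption about which third variable is meant). The guard keeps the lines from being appended twice:

```shell
PROFILE="$HOME/.profile"

# Append the exports only once, so re-running the snippet adds no duplicates.
if ! grep -q 'SPARK_HOME=' "$PROFILE" 2>/dev/null; then
  echo 'export SPARK_HOME=/opt/spark' >> "$PROFILE"
  # Single quotes keep $PATH and $SPARK_HOME unexpanded until the profile loads.
  echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin' >> "$PROFILE"
  # Assumed interpreter path for PySpark.
  echo 'export PYSPARK_PYTHON=/usr/bin/python3' >> "$PROFILE"
fi

# Load the variables into the current session.
. "$PROFILE"
echo "SPARK_HOME is $SPARK_HOME"
```

Single quotes matter for the PATH line: with double quotes the shell would expand $SPARK_HOME while running echo, before the variable has been set in the session.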

Start Standalone Spark Master Server

Now that you have completed configuring your environment for Spark, you can start a master server.

In the terminal, type start-master.sh

To view the Spark Web user interface, open a web browser and enter the localhost IP address on port 8080.

The page shows your Spark URL, status information for workers, hardware resource utilization, etc.

The URL for Spark Master is the name of your device on port 8080. In our case, this is ubuntu1:8080. So, there are three possible ways to load Spark Master's Web UI: 127.0.0.1:8080, localhost:8080, or ubuntu1:8080.


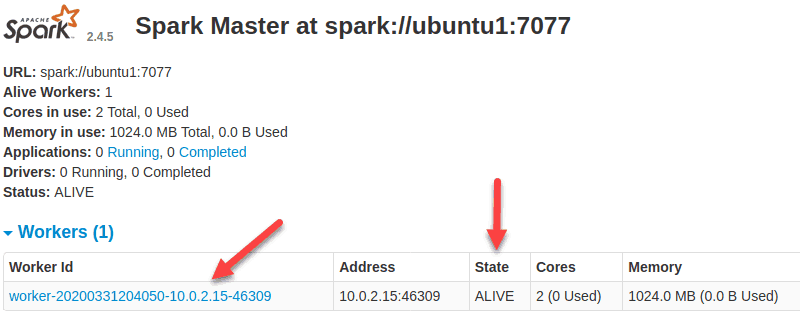

Start Spark Slave Server (Start a Worker Process)

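In this single-server, standalone setup, we will start one worker (slave) server along with the master server.

To do so, run the start-slave.sh script in the format start-slave.sh spark://master:port

The master in the command can be an IP or hostname.

In our case it is ubuntu1, so with the default master port the command is start-slave.sh spark://ubuntu1:7077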

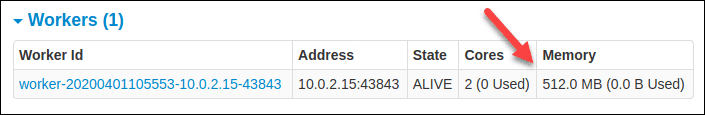

Now that a worker is up and running, if you reload Spark Master’s Web UI, you should see it on the list:

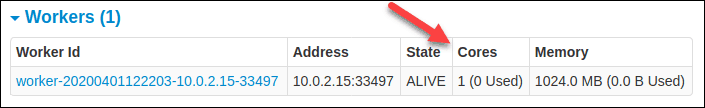

Specify Resource Allocation for Workers

The default setting when starting a worker on a machine is to use all available CPU cores. You can specify the number of cores by passing the -c flag to the start-slave command.

For example, to start a worker and assign only one CPU core to it, pass -c 1 to the script.

Reload Spark Master’s Web UI to confirm the worker’s configuration.

Similarly, you can assign a specific amount of memory when starting a worker. The default setting is to use whatever amount of RAM your machine has, minus 1GB.

To start a worker and assign it a specific amount of memory, add the -m option and a number. For gigabytes, use G, and for megabytes, use M.

For example, to start a worker with 512MB of memory, pass -m 512M to the script.

Reload the Spark Master Web UI to view the worker’s status and confirm the configuration.
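Both options can be combined in one call, as in this sketch. It assumes the master from this guide at spark://ubuntu1:7077 (7077 being the default master port) and uses the start-slave.sh name that matches Spark 3.0.1. The variable names are illustrative:

```shell
MASTER_URL="spark://ubuntu1:7077"
WORKER_CORES=1
WORKER_MEMORY="512M"

# -c limits the CPU cores, -m limits the memory the worker may use.
WORKER_CMD="start-slave.sh -c $WORKER_CORES -m $WORKER_MEMORY $MASTER_URL"
echo "$WORKER_CMD"

# Restart the worker with the new limits if the scripts are available.
if command -v start-slave.sh >/dev/null 2>&1; then
  stop-slave.sh || true
  $WORKER_CMD
fi
```

The worker is stopped first because the new limits only apply to a freshly started worker process.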

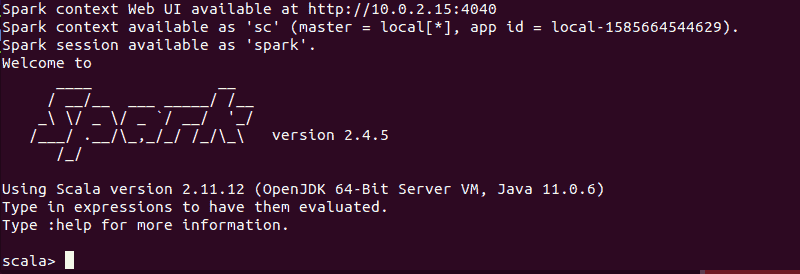

Test Spark Shell

After you finish the configuration and start the master and worker servers, test whether the Spark shell works.

Load the shell by entering spark-shell

You should get a screen with notifications and Spark information. Scala is the default interface, so that shell loads when you run spark-shell.

The end of the output shows the Spark and Scala versions in use, followed by the scala> prompt.

Type :q and press Enter to exit Scala.

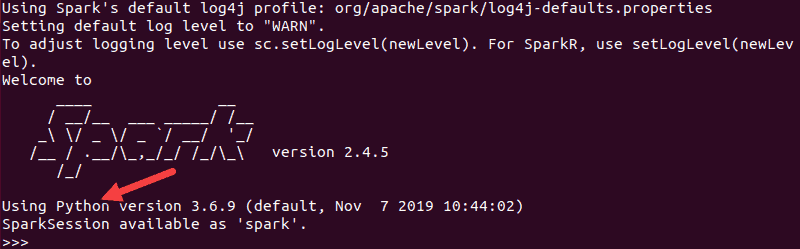

Test Python in Spark

If you do not want to use the default Scala interface, you can switch to Python.

Make sure you quit Scala and then run pyspark

The resulting output looks similar to the previous one. Towards the bottom, you will see the version of Python.

To exit this shell, type quit() and hit Enter.

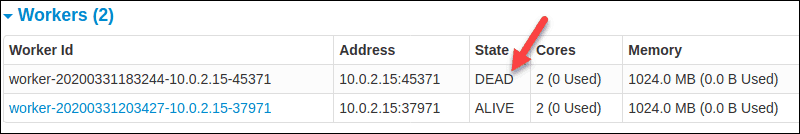

Basic Commands to Start and Stop Master Server and Workers

Below are the basic commands for starting and stopping the Apache Spark master server and workers. Since this setup is only for one machine, the scripts you run default to the localhost.

To start a master server instance on the current machine, run the command we used earlier in the guide, start-master.sh

To stop the master instance started by executing the script above, run stop-master.sh

To stop a running worker process, run stop-slave.sh

The Spark Master page, in this case, shows the worker status as DEAD.

You can start both master and worker instances by using the start-all.sh script.

Similarly, you can stop all instances by using the stop-all.sh script.
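For quick reference, the scripts can be collected in one lookup function. This is only an illustrative sketch: spark_script is a hypothetical helper, the action names are made up for this example, and the script names are the ones shipped in Spark 3.0.x under $SPARK_HOME/sbin:

```shell
# spark_script ACTION  (hypothetical helper)
# Prints the full path of the sbin script for the given action.
spark_script() {
  case "$1" in
    start-master) script="start-master.sh" ;;
    stop-master)  script="stop-master.sh" ;;
    stop-worker)  script="stop-slave.sh" ;;
    start-all)    script="start-all.sh" ;;
    stop-all)     script="stop-all.sh" ;;
    *) echo "unknown action: $1" >&2; return 1 ;;
  esac
  # /opt/spark is the install location used in this guide.
  echo "${SPARK_HOME:-/opt/spark}/sbin/$script"
}

spark_script stop-all
```

To run a script rather than print its path, wrap the call in a command substitution, for example "$(spark_script start-all)".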

This tutorial showed you how to install Spark on an Ubuntu machine, as well as the necessary dependencies.

The setup in this guide enables you to perform basic tests before you start configuring a Spark cluster and performing advanced actions.

Our suggestion is to also learn more about what Spark DataFrame is, the features, how to use Spark DataFrame when collecting data and how to create a Spark DataFrame.
