- Memory mapping

- Lab objectives

- Overview

- Structures used for memory mapping

- struct page

- struct vm_area_struct

- struct mm_struct

- Device driver memory mapping

- jasoncc.github.io

- Random Signals on Some Low-level Stuff.

- Notes on x86_64 Linux Memory Management Part 1: Memory Addressing

- x86 System Architecture Operating Modes and Features

- Memory Addressing

- Memory Address

- Segmentation in Hardware

- Segment Selector and Segmentation Registers

- Segmentation Descriptor

- Fast Access to Segment Descriptors

- Segmentation Unit

- Segmentation in IA-32e Mode (32584-sdm-vol3: 3.2.4)

- Segment Descriptor Tables in IA-32e Mode

- Segmentation in Linux

- Linux GDT

- Paging in Hardware

- Regular Paging

- Extended/PAE Paging (Large Pages)

- Paging for 64-bit Architecture (x86_64)

- Hardware Cache

- Translation Lookaside Buffers (TLB)

- Paging in Linux

- The Linear Address Fields

- Page Table Handling

- Virtual Memory Layout

- A little interesting page_address() implementation on x86_64

- Memory Model Kernel Configuration

- Physical Memory Layout

- Process Page Table

- Kernel Page Table

- Fix-Mapped Linear Addresses

- Handling the Hardware Cache and the TLB

Memory mapping

Lab objectives

- Understand address space mapping mechanisms

- Learn about the most important structures related to memory management

Keywords:

- address space

- mmap()

- struct page

- struct vm_area_struct

- struct vm_struct

- remap_pfn_range

- SetPageReserved()

- ClearPageReserved()

Overview

In the Linux kernel it is possible to map a kernel address space to a user address space. This eliminates the overhead of copying data from user space into kernel space and vice versa. This can be done through a device driver and its user space device interface (/dev).

This feature can be used by implementing the mmap() operation in the device driver’s struct file_operations and using the mmap() system call in user space.

The basic unit for virtual memory management is a page, whose size is usually 4 KB but can be up to 64 KB on some platforms. Whenever we work with virtual memory we work with two types of addresses: virtual addresses and physical addresses. All CPU accesses (including those from kernel space) use virtual addresses, which the MMU translates into physical addresses with the help of page tables.

A physical page of memory is identified by its Page Frame Number (PFN). The PFN is easily computed from a physical address by dividing it by the page size (or, equivalently, by shifting the physical address right by PAGE_SHIFT bits).
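The PFN arithmetic can be sketched in plain C. This is a minimal user-space illustration, assuming 4 KB pages (PAGE_SHIFT = 12); the helper names phys_to_pfn, pfn_to_phys and page_offset_of are hypothetical and only mirror what kernel macros such as PFN_DOWN and PFN_PHYS do.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 4 KB pages, as on x86. */
#define PAGE_SHIFT 12
#define PAGE_SIZE  (UINT64_C(1) << PAGE_SHIFT)

/* PFN of the page frame containing phys (same as phys / PAGE_SIZE). */
static inline uint64_t phys_to_pfn(uint64_t phys)
{
    return phys >> PAGE_SHIFT;
}

/* Physical address of the first byte of page frame pfn. */
static inline uint64_t pfn_to_phys(uint64_t pfn)
{
    /* The shifted value must still fit in 64 bits. */
    assert(pfn < (UINT64_C(1) << (64 - PAGE_SHIFT)));
    return pfn << PAGE_SHIFT;
}

/* Offset of phys inside its page frame (the bits the shift drops). */
static inline uint64_t page_offset_of(uint64_t phys)
{
    return phys & (PAGE_SIZE - 1);
}
```

For example, physical address 0x12345678 lies in page frame 0x12345 at offset 0x678 within that frame.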

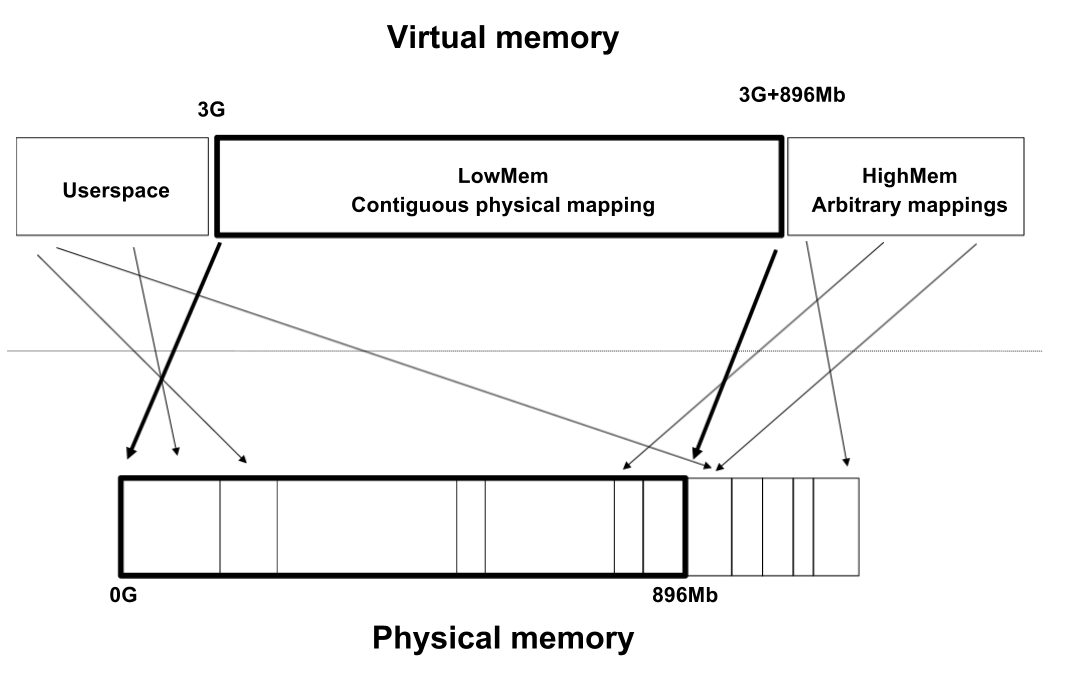

For efficiency reasons, the virtual address space is divided into user space and kernel space. For the same reason, kernel space contains a memory-mapped zone, called lowmem, which is mapped contiguously onto physical memory, starting from the lowest possible physical address (usually 0). The virtual address where lowmem is mapped is defined by PAGE_OFFSET.

On a 32-bit system, not all available memory can be mapped in lowmem, so there is a separate zone in kernel space called highmem, which can be used to map arbitrary physical memory.

Memory allocated by kmalloc() resides in lowmem and is physically contiguous. Memory allocated by vmalloc() is virtually contiguous but not necessarily physically contiguous, and it does not reside in lowmem (it has a dedicated zone in highmem).
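Because lowmem is mapped with a constant offset, translating between a physical address and its lowmem virtual address is a single addition or subtraction. The sketch below assumes a 32-bit x86 kernel with the default 3G/1G split (PAGE_OFFSET = 0xC0000000) and roughly 896 MB of lowmem; both values are configuration-dependent assumptions, and the function names are hypothetical stand-ins for the kernel's __va()/__pa().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumption: 32-bit x86 kernel with the default 3G/1G split. */
#define PAGE_OFFSET  UINT32_C(0xC0000000)
/* Assumption: about 896 MB of RAM fits in lowmem with this split. */
#define LOWMEM_SIZE  (896u * 1024u * 1024u)

/* True if phys lies in the directly mapped (lowmem) region. */
static inline bool phys_in_lowmem(uint32_t phys)
{
    return phys < LOWMEM_SIZE;
}

/* Lowmem only: kernel virtual address = physical address + PAGE_OFFSET. */
static inline uint32_t lowmem_phys_to_virt(uint32_t phys)
{
    assert(phys_in_lowmem(phys));
    return phys + PAGE_OFFSET;
}

/* Inverse of lowmem_phys_to_virt(). */
static inline uint32_t lowmem_virt_to_phys(uint32_t virt)
{
    assert(virt >= PAGE_OFFSET && virt - PAGE_OFFSET < LOWMEM_SIZE);
    return virt - PAGE_OFFSET;
}
```

Physical memory above the lowmem limit has no such fixed virtual address, which is why highmem pages must be mapped explicitly (for example with kmap()).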

Structures used for memory mapping

Before discussing the mechanism of memory-mapping a device, we will present some of the basic structures used by the memory management subsystem of the Linux kernel: struct page, struct vm_area_struct, and struct mm_struct.

struct page

struct page holds information about a physical page. The kernel keeps a struct page structure for every physical page in the system.

There are many functions that interact with this structure:

- virt_to_page() returns the page associated with a virtual address

- pfn_to_page() returns the page associated with a page frame number

- page_to_pfn() returns the page frame number associated with a struct page

- page_address() returns the virtual address of a struct page; this function can only be called for pages in lowmem

- kmap() creates a mapping in kernel for an arbitrary physical page (can be from highmem) and returns a virtual address that can be used to directly reference the page

struct vm_area_struct

struct vm_area_struct holds information about a contiguous virtual memory area. The memory areas of a process can be viewed by inspecting the maps attribute of the process via procfs (/proc/<pid>/maps).

A memory area is characterized by a start address, an end address, a length, and access permissions.

A struct vm_area_struct is created at each mmap() call issued from user space. A driver that supports the mmap() operation must complete and initialize the associated struct vm_area_struct . The most important fields of this structure are:

- vm_start, vm_end: the beginning and the end of the memory area, respectively (these fields also appear in /proc/<pid>/maps).
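Each line of /proc/<pid>/maps describes one struct vm_area_struct, starting with its vm_start and vm_end. The following user-space sketch parses that prefix and counts the areas of the calling process; struct vma_info and the function names are hypothetical, and only the first three columns are interpreted.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* One parsed line of /proc/<pid>/maps, for example
 * "00400000-0040b000 r-xp 00000000 08:01 1234   /bin/cat". */
struct vma_info {
    unsigned long start;  /* corresponds to vm_start */
    unsigned long end;    /* corresponds to vm_end (first byte past the area) */
    char perms[5];        /* e.g. "r-xp": read, write, execute, private/shared */
};

/* Parse the leading "start-end perms" part of a maps line.
 * Returns 0 on success, -1 if the line does not have that shape. */
static int parse_maps_line(const char *line, struct vma_info *out)
{
    assert(line != NULL && out != NULL);
    if (sscanf(line, "%lx-%lx %4s", &out->start, &out->end, out->perms) != 3)
        return -1;
    if (strlen(out->perms) != 4 || out->end < out->start)
        return -1;
    return 0;
}

/* Length of the area in bytes. */
static unsigned long vma_length(const struct vma_info *v)
{
    return v->end - v->start;
}

/* Count the memory areas of the calling process.
 * Returns -1 if /proc/self/maps cannot be opened (e.g. not on Linux). */
static int count_own_vmas(void)
{
    char line[8192];
    struct vma_info v;
    int n = 0;
    FILE *f = fopen("/proc/self/maps", "r");

    if (f == NULL)
        return -1;
    while (fgets(line, sizeof(line), f) != NULL)
        if (parse_maps_line(line, &v) == 0)
            n++;
    fclose(f);
    return n;
}
```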

struct mm_struct

struct mm_struct encompasses all memory areas associated with a process. The mm field of struct task_struct is a pointer to the struct mm_struct of the current process.

Device driver memory mapping

Memory mapping is one of the most interesting features of a Unix system. From a driver's point of view, the memory-mapping facility allows user space to access device memory directly.

To provide an mmap() operation, a driver must implement the mmap field of its struct file_operations. If that is the case, a user space process can then use the mmap() system call on a file descriptor associated with the device.

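The mmap() system call has the following prototype (declared in <sys/mman.h>): void *mmap(void *addr, size_t length, int prot, int flags, int fd, off_t offset);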

To map memory between a device and user space, the user process must open the device and issue the mmap() system call with the resulting file descriptor.
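From user space, mapping a device boils down to open() followed by mmap() on the resulting file descriptor. The sketch below assumes a Linux system; map_device is a hypothetical helper, and /dev/zero is used in the usage example only because it is a character device that every Linux system provides and that supports mmap().

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: open a device node and map the first len bytes of
 * it into this process. Returns the mapped address, or NULL on failure. */
static void *map_device(const char *path, size_t len)
{
    void *p;
    int fd;

    assert(path != NULL && len > 0);
    fd = open(path, O_RDWR);
    if (fd < 0)
        return NULL;
    /* For a character device, this ends up calling the driver's mmap()
     * file operation with a freshly created vm_area_struct. */
    p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    /* The mapping keeps its own reference to the file, so fd can be closed. */
    close(fd);
    return p == MAP_FAILED ? NULL : p;
}
```

For a real driver, path would be the driver's own node under /dev, and len would normally be a multiple of the page size; the mapping is released with munmap().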

The device driver mmap() operation has the following signature: int (*mmap)(struct file *filp, struct vm_area_struct *vma);

The filp parameter is a pointer to the struct file created when the device is opened from user space. The vma parameter describes the virtual address range into which the device memory should be mapped. A driver should allocate memory (using kmalloc(), vmalloc(), or alloc_pages()) and then map it into the user address space indicated by vma, using helper functions such as remap_pfn_range().

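remap_pfn_range() maps a contiguous range of physical memory into the virtual address range represented by a vm_area_struct. Its prototype is: int remap_pfn_range(struct vm_area_struct *vma, unsigned long addr, unsigned long pfn, unsigned long size, pgprot_t prot);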

remap_pfn_range() expects the following parameters:

- vma: the virtual memory area in which the mapping is made;

- addr: the virtual address at which remapping begins; page tables for the virtual address range between addr and addr + size are built as needed;

- pfn: the page frame number to which the virtual address should be mapped;

- size: the size (in bytes) of the memory to be mapped;

- prot: the protection flags for this mapping.
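remap_pfn_range() itself only runs inside the kernel, so the following is a user-space model of the bookkeeping its parameters imply, not kernel code: the mapping starts at a page-aligned address inside the VMA, size is modeled as rounded up to whole pages, and consecutive virtual pages land on consecutive page frames starting at pfn. struct vma_model, remap_fits and remapped_pfn are hypothetical, and 4 KB pages are assumed.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SHIFT 12                               /* assumption: 4 KB pages */
#define PAGE_SIZE  (UINT64_C(1) << PAGE_SHIFT)
#define PAGE_ALIGN(x) (((x) + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1))

/* Hypothetical stand-in for the two vm_area_struct fields used here. */
struct vma_model {
    uint64_t vm_start;
    uint64_t vm_end;    /* exclusive */
};

/* Would mapping size bytes at addr stay inside vma?
 * addr must be page aligned and [addr, addr + PAGE_ALIGN(size)) inside. */
static bool remap_fits(const struct vma_model *vma, uint64_t addr, uint64_t size)
{
    uint64_t len = PAGE_ALIGN(size);

    return (addr & (PAGE_SIZE - 1)) == 0 &&
           addr >= vma->vm_start &&
           addr < vma->vm_end &&
           len <= vma->vm_end - addr;
}

/* Page frame that virtual address va ends up in, when frames
 * pfn, pfn + 1, ... are mapped contiguously starting at addr. */
static uint64_t remapped_pfn(uint64_t addr, uint64_t pfn, uint64_t va)
{
    assert(va >= addr);
    return pfn + ((va - addr) >> PAGE_SHIFT);
}
```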

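Here is an example of using this function that contiguously maps the physical memory starting at page frame number pfn (memory that was previously allocated) to the vma->vm_start virtual address: ret = remap_pfn_range(vma, vma->vm_start, pfn, size, vma->vm_page_prot); where size is typically vma->vm_end - vma->vm_start.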

To obtain the page frame number of the physical memory, we must consider how the memory was allocated: kmalloc(), vmalloc(), and alloc_pages() each require a different approach. For kmalloc() we can use something like virt_to_phys((void *)kmalloc_area) >> PAGE_SHIFT, where kmalloc_area is the pointer returned by kmalloc().

Source

jasoncc.github.io

Random Signals on Some Low-level Stuff.

Notes on x86_64 Linux Memory Management Part 1: Memory Addressing

x86 System Architecture Operating Modes and Features

From Chapter 2 of the Intel SDM, Volume 3. The IA-32 architecture (beginning with the Intel386 processor family) provides extensive support for operating-system and system-development software. This support offers multiple modes of operation, which include real-address mode, protected mode, virtual-8086 mode, and system management mode. These are sometimes referred to as legacy modes.

Intel 64 architecture supports almost all the system programming facilities available in IA-32 architecture and extends them to a new operating mode (IA-32e mode) that supports a 64-bit programming environment. IA-32e mode allows software to operate in one of two sub-modes:

- 64-bit mode supports 64-bit OS and 64-bit applications

- Compatibility mode allows most legacy software to run; it coexists with 64-bit applications under a 64-bit OS.

The IA-32 system-level architecture includes features to assist in the following operations:

- Memory management

- Protection of software modules

- Multitasking

- Exception and interrupt handling

- Multiprocessing

- Cache Management

- Hardware resource and power management

- Debugging and performance monitoring

All Intel 64 and IA-32 processors enter real-address mode following a power-up or reset. Software then initiates the switch from real-address mode to protected mode. If IA-32e mode operation is desired, software also initiates a switch from protected mode to IA-32e mode (see Chapter 9, “Processor Management and Initialization”, SDM Vol. 3).

Memory Addressing

Memory Address

Three kinds of addresses:

Logical address, included in the machine language instructions to specify the address of an operand or of an instruction. Each logical address consists of a segment and offset (or displacement) that denotes the distance from the start of the segment to the actual address.

Linear address (also known as virtual address), a single 32- or 64-bit unsigned integer that can be used to address the whole address space.

Physical address, used to address memory cells in memory chips. Physical addresses correspond to the electrical signals sent along the address pins of the microprocessor to the memory bus.

The Memory Management Unit (MMU) transforms a logical address into a linear address by means of a hardware circuit called a segmentation unit; subsequently, a second hardware circuit called a paging unit transforms the linear address into a physical address. (“L->L->P” Model)

Segmentation in Hardware

Segment Selector and Segmentation Registers

A logical address consists of two parts: a segment identifier and an offset. The segment identifier is a 16-bit field called the Segment Selector, while the offset is a 32-bit field (64 bits in 64-bit mode).

To make it easy to retrieve Segment Selectors quickly, the processor provides segmentation registers whose only purpose is to hold Segment Selectors; these registers are cs, ds, es, ss, fs, and gs. Although there are only six of them, a program can reuse the same segmentation register for different purposes by saving its content in memory and then restoring it later.

The cs register has another important function: it includes a 2-bit field that specifies Current Privilege Level (CPL) of the CPU. The value 0 denotes the highest privilege level (a.k.a. Ring 0), while the value 3 denotes the lowest one (a.k.a. Ring 3). Linux uses only levels 0 and 3, which are respectively called Kernel Mode and User Mode.

Segmentation Descriptor

Each segment is represented by an 8-byte Segment Descriptor that describes the segment characteristics. Segment Descriptors are stored either in the Global Descriptor Table (GDT) or Local Descriptor Table (LDT).

There are several types of segments, and thus several types of Segment Descriptors. The following list shows the types that are widely used in Linux.

Code Segment Descriptor

Indicates that the Segment Descriptor refers to a code segment; it may be included in either GDT or LDT. The descriptor has the S flag set (non-system segment).

Data Segment Descriptor

Indicates that the Segment Descriptor refers to a data segment; it may be included in either GDT or LDT. It has S flag set. Stack segments are implemented by means of generic data segments.

Task State Segment Descriptor (TSSD)

Indicates that the segment Descriptor refers to a Task State Segment (TSS), that is, a segment used to save the contents of the processor registers; it can appear only in GDT. The S flag is set to 0.

Local Descriptor Table Descriptor (LDTD)

Indicates that the Segment Descriptor refers to a segment containing an LDT; it can appear only in GDT. The S flag of such descriptor is set to 0.
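The descriptor fields are packed into the 8 bytes in a non-obvious way: the base and the limit are each split into several pieces. The following sketch decodes a legacy 8-byte code/data segment descriptor according to the layout in the Intel SDM; the function names are hypothetical, and the test values are the classic flat 4 GB ring-0 code and ring-3 data descriptors.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Base: descriptor bits 16-39 hold base[23:0], bits 56-63 hold base[31:24]. */
static uint32_t desc_base(uint64_t d)
{
    return (uint32_t)((d >> 16) & 0xFFFFFF) | (uint32_t)((d >> 56) & 0xFF) << 24;
}

/* Limit: bits 0-15 hold limit[15:0], bits 48-51 hold limit[19:16]. */
static uint32_t desc_limit(uint64_t d)
{
    return (uint32_t)(d & 0xFFFF) | (uint32_t)((d >> 48) & 0xF) << 16;
}

/* Segment size in bytes: with the G flag (bit 55) set, the limit
 * counts 4 KB units instead of bytes. */
static uint64_t desc_limit_bytes(uint64_t d)
{
    uint64_t lim = desc_limit(d);
    bool g = (d >> 55) & 1;

    return g ? (lim + 1) << 12 : lim + 1;
}

static bool desc_s_flag(uint64_t d) { return (d >> 44) & 1; }   /* 1 = code/data */
static unsigned desc_dpl(uint64_t d) { return (d >> 45) & 3; }  /* privilege level */
static unsigned desc_type(uint64_t d) { return (d >> 40) & 0xF; }
```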

Fast Access to Segment Descriptors

To speed up the translation of logical addresses into linear addresses, x86 processors provide an additional nonprogrammable register for each of the six segmentation registers. Each nonprogrammable register (a.k.a. “shadow register” or “descriptor cache”) contains the 8-byte Segment Descriptor specified by the Segment Selector contained in the corresponding segmentation register. Every time a Segment Selector is loaded in a segmentation register, the corresponding Segment Descriptor is loaded from memory into the CPU shadow register. From then on, logical addresses referring to that segment can be translated without accessing the GDT or LDT stored in main memory.

Segmentation Unit

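A logical address is a Segment Selector:Offset pair. The 16-bit Segment Selector consists of a 13-bit index (bits 15-3), a 1-bit Table Indicator, TI (bit 2), and a 2-bit Requested Privilege Level, RPL (bits 1-0).

TI determines whether the Segment Descriptor, and hence the base linear address of the segment, is fetched from the GDT (located through gdtr) or from the LDT (located through ldtr). The segmentation unit then adds the offset to that base to produce the linear address.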
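The selector format and the work of the segmentation unit can be sketched as follows. This assumes the standard selector layout (13-bit index, TI bit, 2-bit RPL) and 8-byte descriptors; it skips limit and privilege checks, and all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* A 16-bit Segment Selector: index (bits 15-3), TI (bit 2), RPL (bits 1-0). */
struct selector {
    unsigned index;  /* descriptor number within the GDT or LDT (0..8191) */
    unsigned ti;     /* table indicator: 0 = GDT (gdtr), 1 = LDT (ldtr) */
    unsigned rpl;    /* requested privilege level, 0..3 */
};

static struct selector decode_selector(uint16_t sel)
{
    struct selector s = { sel >> 3, (sel >> 2) & 1, sel & 3 };
    return s;
}

/* Address of the 8-byte descriptor the selector refers to, given the
 * base address of the selected table (from gdtr or ldtr). */
static uint64_t descriptor_addr(uint64_t table_base, uint16_t sel)
{
    return table_base + (uint64_t)(sel >> 3) * 8;
}

/* Segmentation unit: linear address = segment base + offset
 * (limit and privilege checks omitted in this sketch). */
static uint32_t logical_to_linear(uint32_t seg_base, uint32_t offset)
{
    return seg_base + offset;
}
```

With all segment bases set to 0, as Linux does, the linear address simply equals the offset.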

Segmentation in IA-32e Mode (32584-sdm-vol3: 3.2.4)

In IA-32e mode of Intel 64 architecture, the effects of segmentation depend on whether the processor is running in compatibility mode or 64-bit mode. In compatibility mode, segmentation functions just as it does using legacy 16-bit or 32-bit protected mode semantics.

In 64-bit mode, segmentation is generally (but not completely) disabled, creating a flat 64-bit linear-address space. The processor treats the segment base of CS, DS, ES, and SS as zero, creating a linear address that is equal to the effective address. The FS and GS segments are exceptions. These segment registers (which hold a segment base) can be used as additional base registers in linear address calculations. They facilitate addressing local data and certain operating system data structures.

Note that the processor does not perform segment limit checks at runtime in 64-bit mode.

Segment Selector

A segment selector is a 16-bit identifier for a segment.

Segment selectors are visible to application programs as part of a pointer variable, but the values of selectors are usually assigned or modified by link editors or linking loaders, not application programs.

Segment Descriptor Tables in IA-32e Mode

In IA-32e mode, a segment descriptor table can contain up to 8192 (2^13) 8-byte descriptors. Each entry in the segment descriptor table is 8 bytes; system segment descriptors are expanded to 16 bytes (occupying the space of two entries).

The GDTR and LDTR registers are expanded to hold a 64-bit base address. The corresponding pseudo-descriptor is 80 bits (64 bits “Base Address” + 16 bits “Limit”).

The following system descriptors expand to 16 bytes:

- Call gate descriptor (see 5.8.3.1, “IA-32e Mode Call Gates”)

- IDT gate descriptor (see 6.14.1, “64-Bit Mode IDT”)

- LDT and TSS descriptors (see 7.2.3, “TSS Descriptor in 64-bit mode”)

Segmentation in Linux

Linux prefers paging to segmentation for the following reasons:

Memory management is simpler when all processes use the same segment register values, that is, when they share the same set of linear addresses.

One of the design objectives of Linux is portability to a wide range of architectures. RISC architectures in particular have limited support for segmentation.

In Linux, all base addresses of user mode code/data segments and kernel code/data segments are set to 0.

The corresponding Segment Selectors are defined by the macros __USER_CS, __USER_DS, __KERNEL_CS, and __KERNEL_DS, respectively. To address the kernel code segment, for instance, the kernel just loads the value yielded by the __KERNEL_CS macro into the cs segmentation register.

The Current Privilege Level of the CPU indicates whether the process is in User Mode or Kernel Mode and is specified by the RPL field of the Segment Selector stored in the cs register.

When saving a pointer to an instruction or to a data structure, the kernel does not need to store the Segment Selector component of the logical address, because the ss register contains the Segment Selector. As an example, when the kernel invokes a function, it executes a call assembly language instruction specifying just the Offset component of its logical address; the Segment Selector is implicitly selected as the one referred to by the cs register. Because there is just one segment of type “executable in Kernel Mode”, namely the code segment identified by __KERNEL_CS, it is sufficient to load __KERNEL_CS into cs whenever the CPU switches to Kernel Mode. The same argument goes for pointers to kernel data structures (implicitly using the ds register), as well as for pointers to user data structures (the kernel explicitly uses the es register).

Linux GDT

In uniprocessor systems there is only one GDT, while in multiprocessor systems there is one GDT for every CPU in the system.

The GDT is init_per_cpu_gdt_page if CONFIG_X86_64_SMP is set, or gdt_page otherwise.

See the labels early_gdt_descr and early_gdt_descr_base in head_64.S.

Each GDT contains the following segments:

- Four user and kernel code and data segments.

- A Task State Segment (TSS), which is different for each processor in the system. The linear space corresponding to a TSS is a small subset of the linear address space corresponding to the kernel data segment. The Task State Segments are sequentially stored in the per-CPU init_tss array on SMP systems (it is cpu_tss since the 4.x kernels). See how Linux uses TSSs in Chapter 3 of ULK3, and __switch_to in the source.

- A segment including the default Local Descriptor Table (LDT), usually shared by all processes.

- Three Thread Local Storage (TLS) segments; see set_thread_area(2) and get_thread_area(2).

- Three segments related to Advanced Power Management (APM).

- Five segments related to Plug and Play (PnP) BIOS services.

- A special TSS segment used by the kernel to handle “Double fault” exceptions (see Chapter 4 of ULK3).

Paging in Hardware

The paging unit translates linear addresses into physical ones. One key task of the unit is to check the requested access type against the access rights of the linear address. If the memory access is not valid, it generates a Page Fault exception, which the kernel then handles (e.g. copy-on-write or reading a page back from the swap area) or turns into a signal such as SIGSEGV or SIGBUS.

For the sake of efficiency, linear addresses are grouped in fixed-length intervals called pages; contiguous linear addresses within a page are mapped into contiguous physical addresses. We use the term page to refer both to a set of addresses and to the data contained in this group of addresses.

The paging unit thinks of all RAM as partitioned into fixed-length page frames (a.k.a. physical pages). Each page frame contains a page, that is, the length of a page frame coincides with that of a page. A page frame is a constituent of main memory, and hence is a storage area. It is important to distinguish a page from a page frame; the former is just a block of data, which may be stored in any page frame or on disk.

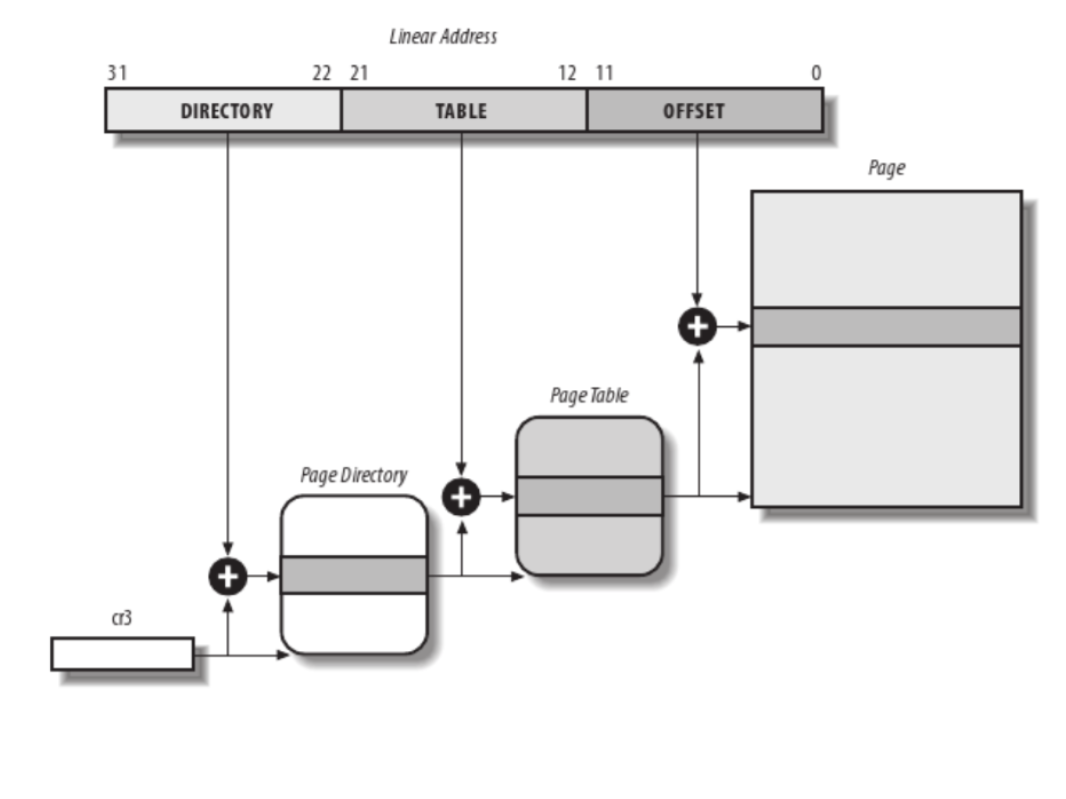

The data structures that map linear to physical addresses are called page tables; they are stored in main memory and must be properly initialized by the kernel before the paging unit is enabled.

The paging unit is enabled by setting the PG flag of a control register named cr0. When PG = 0 (disabled), linear addresses are interpreted as physical addresses.

Regular Paging

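Fields in a 32-bit linear address (regular paging, 4 KB pages): Directory (bits 31-22), Table (bits 21-12), Offset (bits 11-0).

Fields in a 64-bit linear address (4-level paging, 4 KB pages): PML4 (bits 47-39), Directory Pointer (bits 38-30), Directory (bits 29-21), Table (bits 20-12), Offset (bits 11-0).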
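With regular 32-bit paging and 4 KB pages, a linear address splits into a 10-bit Directory index, a 10-bit Table index and a 12-bit Offset. A small sketch of that split and its inverse (the names are hypothetical):

```c
#include <assert.h>
#include <stdint.h>

/* Regular (non-PAE) 32-bit paging with 4 KB pages:
 * Directory = bits 31-22, Table = bits 21-12, Offset = bits 11-0. */
struct lin32 {
    unsigned dir;     /* index into the Page Directory (0..1023) */
    unsigned table;   /* index into the Page Table (0..1023) */
    unsigned offset;  /* byte offset inside the 4 KB page */
};

static struct lin32 split_linear32(uint32_t addr)
{
    struct lin32 f = {
        .dir    = addr >> 22,
        .table  = (addr >> 12) & 0x3FF,
        .offset = addr & 0xFFF,
    };
    return f;
}

/* Reassemble the fields; inverse of split_linear32(). */
static uint32_t join_linear32(struct lin32 f)
{
    return (uint32_t)f.dir << 22 | (uint32_t)f.table << 12 | f.offset;
}
```

For example, 0xC0101234 selects Page Directory entry 0x300, Page Table entry 0x101, and byte 0x234 of the page.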

The entries of Page Directories and Page Tables have the same structure. Each entry includes the following fields:

- Present flag

- Field containing the 20 most significant bits of a page frame physical address

- Accessed flag: set each time the paging unit addresses the corresponding page frame (the page frame reclaiming algorithm, PFRA, relies on it, for example).

- Dirty flag

- Read/Write flag: contains the access right (Read/Write or Read) of the page or of the Page Table.

- User/Supervisor flag: contains the privilege level required to access the page or the Page Table.

- PCD and PWT flags: control how the page or Page Table is handled by the hardware cache.

- PCD: page-level cache disable

- PWT: page-level write-through

- Page Size flag: applies only to Page Directory entries. If it is set, the entry refers to a 2 MB or 4 MB page frame.

- Global flag: applies only to Page Table entries. This flag was introduced to prevent frequently used pages from being flushed from the TLB cache. It works only if the Page Global Enable (PGE) flag of register CR4 is set.
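The entry layout above can be decoded with simple masks. This sketch assumes a 32-bit non-PAE entry, where the page frame address occupies bits 31-12 and the flags sit in the low bits; the PTE_* names are hypothetical, though they roughly correspond to the kernel's _PAGE_* flags.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit positions in a 32-bit (non-PAE) x86 Page Directory/Table entry. */
#define PTE_PRESENT    (1u << 0)
#define PTE_RW         (1u << 1)   /* Read/Write */
#define PTE_USER       (1u << 2)   /* User/Supervisor */
#define PTE_PWT        (1u << 3)   /* page-level write-through */
#define PTE_PCD        (1u << 4)   /* page-level cache disable */
#define PTE_ACCESSED   (1u << 5)
#define PTE_DIRTY      (1u << 6)
#define PDE_PAGE_SIZE  (1u << 7)   /* Page Size, Page Directory entries only */
#define PTE_GLOBAL     (1u << 8)
#define PTE_FRAME_MASK 0xFFFFF000u /* the 20 most significant bits */

/* Physical address of the page frame the entry points to. */
static uint32_t entry_frame(uint32_t e) { return e & PTE_FRAME_MASK; }

/* Test a single flag. */
static bool entry_has(uint32_t e, uint32_t flag) { return (e & flag) != 0; }

/* Build an entry for a present page frame with the given extra flags. */
static uint32_t make_entry(uint32_t frame_phys, uint32_t flags)
{
    assert((frame_phys & ~PTE_FRAME_MASK) == 0);  /* must be 4 KB aligned */
    return frame_phys | flags | PTE_PRESENT;
}
```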

Extended/PAE Paging (Large Pages)

PAE stands for Physical Address Extension Paging Mechanism

Paging for 64-bit Architecture (x86_64)

| Parameter | x86_64 |
|---|---|
| Page size | 4 KB |
| Number of address bits used | 48 |
| Number of paging levels | 4 |
| Linear address splitting | 9 + 9 + 9 + 9 + 12 |

Hardware Cache

Hardware cache memories were introduced to reduce the speed mismatch between the CPU and DRAM. They are based on the well-known locality principle, which holds both for programs and for data structures. For this purpose, a new unit called the line was introduced into the architecture. It consists of a few dozen contiguous bytes that are transferred in burst mode between the slow DRAM and the fast on-chip static RAM (SRAM) used to implement caches.

The cache is subdivided into subsets of lines. Most caches are, to some degree, N-way set associative, where any line of main memory can be stored in any one of the N lines of its subset.

The bits of the memory’s physical address are usually split into three groups: the most significant ones correspond to the tag, the middle ones to the cache controller subset index, and the least significant ones to the offset within the line.
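The tag/subset/offset split can be sketched for an assumed cache geometry: 32 KB, 8-way set associative, 64-byte lines, which gives 32768 / (64 * 8) = 64 subsets. Real geometries vary by processor, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed geometry: 32 KB, 8-way set associative, 64-byte lines,
 * so 32768 / (64 * 8) = 64 subsets (sets). */
#define LINE_BITS 6   /* log2(64-byte line) */
#define SET_BITS  6   /* log2(64 sets) */

/* Least significant bits: offset within the line. */
static unsigned cache_offset(uint64_t phys)
{
    return phys & ((1u << LINE_BITS) - 1);
}

/* Middle bits: index of the subset the line must go into. */
static unsigned cache_set(uint64_t phys)
{
    return (phys >> LINE_BITS) & ((1u << SET_BITS) - 1);
}

/* Most significant bits: tag compared against the N lines of the subset. */
static uint64_t cache_tag(uint64_t phys)
{
    return phys >> (LINE_BITS + SET_BITS);
}
```

With this geometry, addresses 4 KB apart (such as 0x12345 and 0x13345) fall into the same subset and differ only in their tags, so they compete for the same 8 lines.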

Linux clears the PCD and PWT flags of all Page Directory and Page Table entries; as a result, caching is enabled for all page frames, and the write-back strategy is always adopted for writing.

Translation Lookaside Buffers (TLB)

Besides general-purpose hardware caches, x86 processors include another cache called Translation Lookaside Buffers (TLB) to speed up linear address translation. When a linear address is used for the first time, the corresponding physical address is computed through slow accesses to the Page Table in RAM. The physical address is then stored in a TLB entry so that further references to the same linear address can be quickly translated.

In a multiprocessor system, each CPU has its own TLB, called the local TLB of the CPU.

When the cr3 control register of a CPU is modified, the hardware automatically invalidates all entries of the local TLB, because a new set of page tables is in use and the TLBs are pointing to the old data.
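A toy model makes this TLB behavior concrete: a hit avoids the page-table walk, and loading cr3 invalidates the cached translations. This is a deliberately simplified, hypothetical direct-mapped TLB with 16 entries and 4 KB pages; real TLBs are set associative, keep Global entries across cr3 loads, and are not software-managed like this.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define TLB_ENTRIES 16   /* assumption: tiny direct-mapped TLB */
#define PAGE_SHIFT  12   /* assumption: 4 KB pages */

struct tlb_entry { bool valid; uint64_t vpn; uint64_t pfn; };
struct tlb { struct tlb_entry e[TLB_ENTRIES]; };

/* Look up a linear address; on a hit store the physical address in *phys. */
static bool tlb_lookup(const struct tlb *t, uint64_t lin, uint64_t *phys)
{
    uint64_t vpn = lin >> PAGE_SHIFT;
    const struct tlb_entry *x = &t->e[vpn % TLB_ENTRIES];

    if (!x->valid || x->vpn != vpn)
        return false;  /* miss: the page tables must be walked */
    *phys = (x->pfn << PAGE_SHIFT) | (lin & ((1u << PAGE_SHIFT) - 1));
    return true;
}

/* Cache a translation after a page-table walk. */
static void tlb_fill(struct tlb *t, uint64_t lin, uint64_t pfn)
{
    uint64_t vpn = lin >> PAGE_SHIFT;
    struct tlb_entry *x = &t->e[vpn % TLB_ENTRIES];

    x->valid = true;
    x->vpn = vpn;
    x->pfn = pfn;
}

/* Model of loading cr3: every entry is invalidated
 * (Global pages are not modeled in this sketch). */
static void tlb_write_cr3(struct tlb *t)
{
    memset(t, 0, sizeof(*t));
}
```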

Paging in Linux

Linux adopts a common paging model that fits both 32-bit and 64-bit architectures. Starting with version 2.6.11, a four-level paging model has been adopted.

- Page Global Directory

- Page Upper Directory

- Page Middle Directory

- Page Table

(Regular) linear address: Global Dir | Upper Dir | Middle Dir | Table | Offset.

Each process has its own Page Global Directory and its own set of Page Tables. When a process switch occurs, the value of cr3 is switched accordingly. Thus, when the new process resumes its execution on the CPU, the paging unit refers to the correct set of Page Tables.

Starting with v4.12, Intel's 5-level paging support is enabled if CONFIG_X86_5LEVEL is set.

The Linear Address Fields

Macros that simplify Page Table handling.

- PAGE_SHIFT

- PMD_SHIFT

- PUD_SHIFT

- PGDIR_SHIFT

- PTRS_PER_PTE, PTRS_PER_PMD, PTRS_PER_PUD, PTRS_PER_PGD
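On x86_64 with 4-level paging and 4 KB pages, these macros take the values PAGE_SHIFT = 12, PMD_SHIFT = 21, PUD_SHIFT = 30, PGDIR_SHIFT = 39, and 512 entries per table. The sketch below redefines them locally with those values and uses them to extract the table indexes from a linear address; the *_idx helpers are hypothetical stand-ins modeled on the kernel's pgd_index() and friends.

```c
#include <assert.h>
#include <stdint.h>

/* Values mirroring x86_64 with 4-level paging and 4 KB pages. */
#define PAGE_SHIFT   12
#define PMD_SHIFT    21
#define PUD_SHIFT    30
#define PGDIR_SHIFT  39
#define PTRS_PER_PTE 512
#define PTRS_PER_PMD 512
#define PTRS_PER_PUD 512
#define PTRS_PER_PGD 512

/* Each index is the 9-bit field selected by the corresponding shift. */
static unsigned pgd_idx(uint64_t a) { return (a >> PGDIR_SHIFT) & (PTRS_PER_PGD - 1); }
static unsigned pud_idx(uint64_t a) { return (a >> PUD_SHIFT) & (PTRS_PER_PUD - 1); }
static unsigned pmd_idx(uint64_t a) { return (a >> PMD_SHIFT) & (PTRS_PER_PMD - 1); }
static unsigned pte_idx(uint64_t a) { return (a >> PAGE_SHIFT) & (PTRS_PER_PTE - 1); }
static unsigned page_off(uint64_t a) { return a & ((1u << PAGE_SHIFT) - 1); }
```

For instance, 0xffff880000001234 uses Page Global Directory entry 272, entry 1 of its Page Table, and offset 0x234.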

Page Table Handling

(pte|pmd|pud|pgd)_t describe the format of, respectively, a Page Table, a Page Middle Directory, a Page Upper Directory, and a Page Global Directory entry. They are 64-bit data types when PAE is enabled and 32-bit data types otherwise. (PS: On x86_64 the underlying value types, such as pteval_t, are all unsigned long; pte_t and the others wrap them in single-member structs.)

Five type-conversion macros, __pte, __pmd, __pud, __pgd, and __pgprot, cast an unsigned integer into the required type. Five other type-conversion macros, pte_val, pmd_val, pud_val, pgd_val, and pgprot_val, do the reverse casting.
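The reason for wrapping page-table values in distinct types can be shown in user space. The sketch below imitates the pattern (an integer value type wrapped in a one-member struct, with conversion helpers in both directions); every demo_* name is hypothetical, and a single value type is used for both entry kinds for brevity.

```c
#include <assert.h>
#include <stdint.h>

/* The raw value is an integer; each entry kind wraps it in its own
 * one-member struct so that a pte and a pmd cannot be mixed up. */
typedef uint64_t demo_pteval_t;
typedef struct { demo_pteval_t pte; } demo_pte_t;
typedef struct { demo_pteval_t pmd; } demo_pmd_t;

/* Counterparts of __pte() / pte_val(). */
static inline demo_pte_t demo_mk_pte(demo_pteval_t v) { demo_pte_t p = { v }; return p; }
static inline demo_pteval_t demo_pte_val(demo_pte_t p) { return p.pte; }

/* Counterparts of __pmd() / pmd_val(). */
static inline demo_pmd_t demo_mk_pmd(demo_pteval_t v) { demo_pmd_t p = { v }; return p; }
static inline demo_pteval_t demo_pmd_val(demo_pmd_t p) { return p.pmd; }

/* Passing a demo_pmd_t here is a compile-time error, which would not be
 * the case if both types were plain integer typedefs. */
static inline int demo_pte_present(demo_pte_t p) { return demo_pte_val(p) & 1; }
```

The wrapper costs nothing at run time: the struct has the same size as the integer it contains.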

The kernel also provides several macros and functions to read or modify page table entries.

Page flag reading functions:

Page flag setting functions:

Page allocation functions:

The index field of the page descriptor used as a pgd page points to the corresponding memory descriptor mm_struct (see pgd_set_mm).

The global variable pgd_list holds the doubly linked list of pgds, linked through the page->lru field of each pgd page (see pgd_list_add).

Common kernel code path: execve()

Virtual Memory Layout

See Documentation/x86/x86_64/mm.txt in the kernel to find the latest description of the memory map.

The following is grabbed from v3.2.

A little interesting page_address() implementation on x86_64

A linear address can be calculated by __va(PFN_PHYS(page_to_pfn(page))), which is equivalent to (page - vmemmap) / 64 * 4096 + PAGE_OFFSET, where

- page is the address of a page descriptor

- vmemmap is 0xffffea00_00000000

- 64 is the size of a page descriptor, i.e. sizeof(struct page)

- 4096 is PAGE_SIZE

- PAGE_OFFSET is the base of the direct mapping, 0xffff8800_00000000 in this layout.

Two's complement subtraction is the binary addition of the minuend to the two's complement of the subtrahend (adding a negative number is the same as subtracting a positive one). In the generated assembly, 0x1600_00000000 is the two's complement of vmemmap, and, per “x86_64-abi-0.98”, the compiler must use movabs to load such a 64-bit immediate.

(see __get_free_pages , virt_to_page , and lowmem_page_address ).
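The arithmetic can be checked with ordinary 64-bit unsigned integers. This sketch assumes the v3.2-era x86_64 values (vmemmap at 0xffffea0000000000, PAGE_OFFSET at 0xffff880000000000, a 64-byte struct page, 4 KB pages); __va() adds PAGE_OFFSET to the physical address, which is why it appears in linear_of(). The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed v3.2-era x86_64 layout values. */
#define VMEMMAP_START  UINT64_C(0xffffea0000000000)
#define PAGE_OFFSET    UINT64_C(0xffff880000000000)
#define STRUCT_PAGE_SZ UINT64_C(64)     /* sizeof(struct page) */
#define PAGE_SHIFT     12

/* page_to_pfn() with the vmemmap model: index of the descriptor. */
static uint64_t pfn_of(uint64_t page)
{
    /* Unsigned subtraction wraps modulo 2^64, i.e. it adds the
     * two's complement of VMEMMAP_START (0x0000160000000000). */
    return (page - VMEMMAP_START) / STRUCT_PAGE_SZ;
}

/* __va(PFN_PHYS(pfn)): physical address plus the direct-map base. */
static uint64_t linear_of(uint64_t page)
{
    return (pfn_of(page) << PAGE_SHIFT) + PAGE_OFFSET;
}
```

For example, the second page descriptor (at vmemmap + 64) describes page frame 1, whose linear address is 0xffff880000001000.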

Memory Model Kernel Configuration

Physical Memory Layout

During the initialization phase the kernel must build a physical address map that specifies which physical address ranges are usable by the kernel and which are unavailable.

The kernel considers the following page frames as reserved:

- Those falling in the unavailable physical address ranges

- Those containing the kernel’s code and initialization data structures.

A page contained in a reserved page frame can never be dynamically assigned or swapped out to disk.

In the early stage of the boot sequence (TBD), the kernel queries the BIOS and learns the size of the physical memory. In recent computers, the kernel also invokes a BIOS procedure to build a list of physical address ranges and their corresponding memory types.

Later, the kernel executes the default_machine_specific_memory_setup function, which builds the physical addresses map. (see setup_memory_map , setup_arch )

Variables describing the kernel’s physical memory layout

- max_pfn , max_low_pfn

- num_physpages

- high_memory

- _text , _etext , _edata , _end

The BIOS-provided physical memory map is exported at /sys/firmware/memmap.

Process Page Table

Kernel Page Table

The kernel maintains a set of page tables for its own use, rooted at a so-called master kernel Page Global Directory. After system initialization, this set of page tables is never directly used by any process or kernel thread; rather, the highest entries of the master kernel Page Global Directory are the reference model for the corresponding entries of the Page Global Directories of every regular process in the system.

Provisional Kernel Page Table

A provisional Page Global Directory is initialized statically during kernel compilation, and its physical addresses are then fixed up by the startup_64 assembly language function defined in arch/x86/kernel/head_64.S.

The provisional Page Global Directory is contained in swapper_pg_dir (init_level4_pgt).

Final Kernel Page Table

The master kernel Page Global Directory is still stored in swapper_pg_dir . It is initialized by kernel_physical_mapping_init which is invoked by init_memory_mapping .

After finalizing the page tables, setup_arch invokes paging_init() which invokes free_area_init_nodes to initialise all pg_data_t and zone data.

The following is the corresponding call-graph using linux-3.2.

Fix-Mapped Linear Addresses

To associate a physical address with a fix-mapped linear address, the kernel uses the following:

- fix_to_virt(idx)

- set_fixmap(idx,phys) , set_fixmap_nocache(idx,phys)

- clear_fixmap(idx)
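Fix-mapped addresses are computed from the index alone: index 0 is the page at FIXADDR_TOP and each further index is one page lower, which is what the kernel's __fix_to_virt() and __virt_to_fix() macros express. In the sketch below the FIXADDR_TOP value is only illustrative, since the real one depends on the architecture and configuration.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_MASK  (~((UINT64_C(1) << PAGE_SHIFT) - 1))
/* Illustrative value only; the real FIXADDR_TOP is
 * architecture- and configuration-dependent. */
#define FIXADDR_TOP UINT64_C(0xffffffffff7ff000)

/* Fix-mapped addresses grow downwards from FIXADDR_TOP, one page per index. */
static uint64_t fix_to_virt(unsigned idx)
{
    return FIXADDR_TOP - ((uint64_t)idx << PAGE_SHIFT);
}

/* Inverse mapping: any address inside a fix-mapped page yields its index. */
static unsigned virt_to_fix(uint64_t vaddr)
{
    assert((vaddr & PAGE_MASK) <= FIXADDR_TOP);
    return (unsigned)((FIXADDR_TOP - (vaddr & PAGE_MASK)) >> PAGE_SHIFT);
}
```

Because the virtual address is a compile-time constant for each index, code using fix-mapped addresses needs no pointer lookup; set_fixmap() only has to fill in the page table entry.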

Handling the Hardware Cache and the TLB

Handling the Hardware Cache

Hardware caches are addressed by cache lines. The L1_CACHE_BYTES macro yields the size of a cache line in bytes. On recent Intel models, it yields the value 64.

Cache synchronization is performed automatically by the x86 microprocessors, thus the Linux kernel for this kind of processor does not perform any hardware cache flushing.

Handling the TLB

Processors can’t synchronize their own TLB cache automatically because it is the kernel, and not the hardware, that decides when a mapping between a linear and a physical address is no longer valid.

TLB flushing: (see arch/x86/include/asm/tlbflush.h )

| Function | Description |
|---|---|
| flush_tlb() | flushes the current mm struct TLBs |
| flush_tlb_all() | flushes all processes TLBs |
| flush_tlb_mm(mm) | flushes the specified mm context TLBs |
| flush_tlb_page(vma, vmaddr) | flushes one page |
| flush_tlb_range(vma, start, end) | flushes a range of pages |
| flush_tlb_kernel_range(start, end) | flushes a range of kernel pages |
| flush_tlb_others(cpumask, mm, va) | flushes TLBs on other cpus |

x86-64 can only flush individual pages or full VMs. For a range flush we always do the full VM. Might be worth trying if for a small range a few INVLPGs in a row are a win.
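The trade-off in that comment (a few INVLPGs versus a full flush) amounts to a threshold on the number of pages. Later x86 kernels use a heuristic of this kind with a tunable ceiling (tlb_single_page_flush_ceiling); the sketch below only models the decision, with the ceiling passed in as a parameter, 4 KB pages assumed, and hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12   /* assumption: 4 KB pages */

enum flush_kind { FLUSH_PER_PAGE, FLUSH_FULL };

/* Decide how to flush [start, end): one INVLPG per page while the range
 * is small, otherwise reload cr3 and drop everything. ceiling is the
 * largest number of pages still flushed one by one. */
static enum flush_kind choose_flush(uint64_t start, uint64_t end, uint64_t ceiling)
{
    uint64_t pages;

    assert(end >= start);
    pages = (end - start + (1u << PAGE_SHIFT) - 1) >> PAGE_SHIFT;
    return pages > ceiling ? FLUSH_FULL : FLUSH_PER_PAGE;
}
```

A full flush is cheap to issue but forces every later access to refill the TLB, while per-page INVLPGs keep unrelated entries; the ceiling balances the two.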

Avoiding Flushing TLB:

As a general rule, any process switch implies changing the set of active page tables. Local TLB entries relative to the old page tables must be flushed; this is done when the kernel writes the address of the new PGD/PML4 into the cr3 control register. The kernel succeeds, however, in avoiding TLB flushes in the following cases:

- When performing a process switch between two regular processes that use the same set of page tables.

- When performing a process switch between a regular process and a kernel thread.
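The two cases above can be modeled with a hypothetical task structure in which kernel threads have no user address space of their own and simply keep running on whatever page tables are loaded. The names are illustrative; this is a sketch of the decision, not the kernel's switch_mm() logic.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical minimal task model: mm is NULL for kernel threads, and
 * active_mm is the address space whose page tables are loaded in cr3. */
struct mm { int id; };
struct task { struct mm *mm; struct mm *active_mm; };

/* Returns true if switching from prev to next requires loading a new cr3
 * (and therefore flushing the non-global local TLB entries). Updates
 * next->active_mm the way a context switch would. */
static bool switch_needs_cr3_load(struct task *prev, struct task *next)
{
    if (next->mm == NULL) {
        /* Kernel thread: borrow the previous address space. */
        next->active_mm = prev->active_mm;
        return false;
    }
    next->active_mm = next->mm;
    /* Regular processes sharing one mm share one set of page tables. */
    return prev->active_mm != next->mm;
}
```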

Lazy TLB Handling

The per-CPU variable cpu_tlbstate is used for implementing lazy TLB mode. Furthermore, each memory descriptor includes a cpu_vm_mask_var field that stores the indices of the CPUs that should receive Interprocessor Interrupts related to TLB flushing. This field is meaningful only when the memory descriptor belongs to a process currently in execution.

(see mm_cpumask, cpumask_set_cpu, switch_mm)
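The mask bookkeeping can be sketched with a 64-bit integer standing in for mm_cpumask(mm): a CPU that starts running the address space sets its bit, and a flush initiated on one CPU sends IPIs to every other CPU whose bit is set. The names and the 64-CPU limit are assumptions of this sketch.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical 64-CPU bitmask standing in for mm_cpumask(mm). */
typedef uint64_t cpumask_model_t;

/* A CPU starts using the mm: record it so it receives future flush IPIs. */
static cpumask_model_t mask_set_cpu(cpumask_model_t m, unsigned cpu)
{
    assert(cpu < 64);
    return m | (UINT64_C(1) << cpu);
}

/* CPUs that must get a TLB-flush IPI when this_cpu changes the mm's page
 * tables: every CPU in the mask except this_cpu, which flushes locally. */
static cpumask_model_t flush_ipi_targets(cpumask_model_t m, unsigned this_cpu)
{
    assert(this_cpu < 64);
    return m & ~(UINT64_C(1) << this_cpu);
}
```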
