- How to fix "failed to mount /etc/fstab" in Linux

- Causes of the error

- How to avoid such problems in the future

- CephFS Volume Mount Fails #264

- ‘vagrant up’ fails to mount linked directory /vagrant #1657

How to fix "failed to mount /etc/fstab" in Linux

In this article I explain how to resolve the "failed to mount /etc/fstab" error in Linux.

The /etc/fstab file holds descriptive information about the filesystems that the system can mount automatically at boot.

This information is static and is read by other programs on the system, such as mount, umount, dump, and fsck.

Each entry has six fields that describe how to mount a filesystem: the first field names the block special device or remote filesystem to mount, the second sets the mount point, and the third gives the filesystem type.

The fourth field lists the mount options for the filesystem, and the fifth is read by the dump tool. The last field is used by fsck to determine the order in which filesystems are checked.
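The six fields described above can be made concrete with a small parser. This is a minimal sketch, not a replacement for the system's own tools: the name `parse_fstab_line` is hypothetical, the field names follow the fstab(5) man page, and the sketch assumes that the last two fields may be omitted and then default to 0.

```python
from typing import NamedTuple, Optional


class FstabEntry(NamedTuple):
    """One /etc/fstab line, using the field names from fstab(5)."""
    fs_spec: str     # block special device or remote filesystem
    fs_file: str     # mount point
    fs_vfstype: str  # filesystem type
    fs_mntops: str   # mount options
    fs_freq: int     # read by dump
    fs_passno: int   # fsck check order


def parse_fstab_line(line: str) -> Optional[FstabEntry]:
    """Return the parsed entry, or None for blank lines and comments."""
    stripped = line.strip()
    if not stripped or stripped.startswith("#"):
        return None
    fields = stripped.split()
    if not 4 <= len(fields) <= 6:
        raise ValueError(f"expected 4 to 6 fields, got {len(fields)}: {line!r}")
    # Assumption: the last two fields are optional and default to 0.
    freq = int(fields[4]) if len(fields) > 4 else 0
    passno = int(fields[5]) if len(fields) > 5 else 0
    return FstabEntry(fields[0], fields[1], fields[2], fields[3], freq, passno)


# Hypothetical entry for illustration.
entry = parse_fstab_line("/dev/sda1 /boot ext4 defaults 0 2")
print(entry)
```

A line with too few fields, such as a stray character on its own, raises ValueError instead of being silently accepted.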

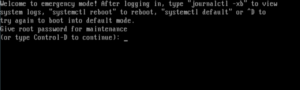

After I edited /etc/fstab to set up an automount and rebooted, Linux booted into emergency mode and displayed an error message.

I logged in as root from that prompt and ran a command to view the systemd journal:

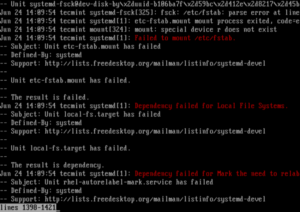

As you can see, the main error (failure of the etc-fstab.mount unit) leads to several other errors (systemd dependency failures), such as the failure of local-fs.target, rhel-autorelabel-mark.service, and so on.

Causes of the error

The error above can result from any of the following problems in /etc/fstab:

- the /etc/fstab file is missing,

- the mount options for a filesystem are specified incorrectly,

- a mount point is invalid or the file contains unrecognized characters.

To fix the problem, restore the original file if you made a backup; otherwise, comment out the changes you made with the "#" character (and make sure that every uncommented line is a filesystem mount entry).

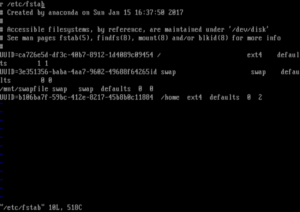

I realized that I had typed a letter "r" at the beginning of the file, as shown in the screenshot above. The system treated it as a special device that did not actually exist on the filesystem, which caused the chain of errors.

It took me several hours to notice and fix this.

So I removed the stray letter, commented out the first line of the file, saved it, and closed the editor.

After a reboot the system started normally again.

How to avoid such problems in the future

To keep such problems from occurring on your system, keep the following in mind:

First, always back up your configuration files before editing them.

If your changes introduce an error, you can revert to the default or last working file.
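The backup step can be scripted so that it is never skipped. The sketch below is only one way to do it: `backup_config` is a hypothetical helper name, and the timestamped `.bak` naming scheme is an assumption rather than any standard.

```python
import shutil
import time
from pathlib import Path


def backup_config(path: str) -> Path:
    """Copy a config file next to itself with a timestamped .bak suffix."""
    src = Path(path)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = src.with_name(f"{src.name}.{stamp}.bak")
    # copy2 also preserves the modification time and permission bits.
    shutil.copy2(src, dest)
    return dest


# Example call (needs write access to /etc, so typically run as root):
#   backup_config("/etc/fstab")
```

To roll back, copy the backup over the edited file from the emergency shell.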

Second, check configuration files for errors before saving them. Some applications provide utilities that check the syntax of their configuration files before the application starts.

Use these utilities wherever possible.
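For /etc/fstab itself, even a very small check catches a stray character before the next reboot does. The following is a rough sketch under stated assumptions: `lint_fstab` is a hypothetical name, the rules (4 to 6 fields, an absolute mount point unless it is "none" or "swap", numeric last fields) are simplified heuristics, and the sample lines are invented for illustration.

```python
from typing import List


def lint_fstab(text: str) -> List[str]:
    """Return human-readable problems found in fstab-style text."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # blank lines and comments are fine
        fields = stripped.split()
        if not 4 <= len(fields) <= 6:
            problems.append(
                f"line {lineno}: expected 4 to 6 fields, found {len(fields)}"
            )
            continue
        mount_point = fields[1]
        # Swap entries conventionally use "none" or "swap" as the mount point.
        if not mount_point.startswith("/") and mount_point not in ("none", "swap"):
            problems.append(
                f"line {lineno}: mount point {mount_point!r} is not an absolute path"
            )
        for name, value in (("dump", fields[4:5]), ("fsck order", fields[5:6])):
            if value and not value[0].isdigit():
                problems.append(
                    f"line {lineno}: {name} field {value[0]!r} is not a number"
                )
    return problems


sample = "\n".join([
    "r# /etc/fstab",                      # hypothetical stray letter before a comment
    "/dev/sda1 /boot ext4 defaults 0 2",  # well-formed entry
    "/dev/sda2 data ext4 defaults 0 2",   # mount point is not absolute
])
for problem in lint_fstab(sample):
    print(problem)
```

On systems with a recent util-linux, `findmnt --verify` performs a far more thorough check of the real file and is the better choice when it is available.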

If you do get system error messages, start by examining the systemd journal with the journalctl utility to determine exactly what caused them.

CephFS Volume Mount Fails #264

Comments

ktraff commented Jan 3, 2017

I’ve successfully deployed a Ceph cluster and am able to mount a CephFS device manually using the following:

sudo mount -t ceph monitor1:6789:/ /ceph -o name=admin,secretfile=/etc/ceph/cephfs.secret

I’m now attempting to launch a pod using the kubernetes example here:

sudo kubectl create -f cephfs.yml

I am receiving the following error:

Do the manager containers need to have the ceph-fs-common package installed in order to perform a successful mount? I cannot find any further debugging information to determine the cause of the error.

ktraff commented Jan 6, 2017

There were a couple of issues that needed to be fixed in order to successfully mount a CephFS volume in kubernetes. Keep in mind I’ve deployed Kubernetes 1.4.6 using the kube-deploy docker multinode configuration.

Issue #1: Mount command Fails using Kubernetes secrets

When examining the error above more closely, I found that Kubernetes encrypts my Ceph secret with characters that are interpreted as newlines. As a result, the kubelet fails when attempting to mount the file system.

To work around this, I configured my YAML to use a Ceph secretfile instead of a Kubernetes secret:

Issue #2: Kubelet Missing Ceph Packages and Configuration

The master/worker kubelets were all missing the ceph-fs-common and ceph-common packages required to mount CephFS volumes to containers, as well as the necessary configuration files. The following script should apply the necessary updates to the kubelet master/worker agents:

KingJ commented Apr 9, 2017

For anyone else who stumbles across this in the future, what caught me out on the first issue was not encoding Ceph’s key in Base64. Ceph’s key is already Base64, but Kubernetes expects the values of new secrets to be Base64-encoded as well. So when I put the raw Ceph key in as a secret, Kubernetes decoded the (valid!) Base64 key and tried, unsuccessfully, to use the decoded value as the key. Encoding the Ceph key in Base64 again when declaring it in my manifest fixed the issue for me: the mount then worked, and viewing the secret through the Kubernetes Dashboard returned the correct Ceph key.
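The double-encoding point is easy to get backwards, so here is a small sketch of it. The key below is a made-up value, not a real Ceph credential, and `encode_for_secret` is a hypothetical helper; the only assumption about Kubernetes is that values in a Secret's data field are Base64-decoded once before use.

```python
import base64

# Hypothetical key for illustration only; a real one comes from the Ceph keyring.
# Like a real Ceph key, it is itself a Base64 string.
ceph_key = base64.b64encode(b"example-ceph-secret").decode("ascii")


def encode_for_secret(key_text: str) -> str:
    """Base64-encode the key text for the data field of a Secret manifest."""
    # Strip surrounding whitespace so a trailing newline is not encoded too.
    return base64.b64encode(key_text.strip().encode("ascii")).decode("ascii")


manifest_value = encode_for_secret(ceph_key)

# Decoding the manifest value once gives back the key text Ceph expects.
round_trip = base64.b64decode(manifest_value).decode("ascii")

# Pasting the raw key into the manifest instead means the single decode
# produces the underlying bytes, which are not the key text.
wrongly_decoded = base64.b64decode(ceph_key)

print(round_trip == ceph_key)
print(wrongly_decoded == ceph_key.encode("ascii"))
```

Stripping whitespace before encoding also avoids embedding a trailing newline in the secret, which may be related to the newline symptom described earlier in the thread.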

roberthbailey commented Nov 1, 2017

docker-multinode was removed in #283 so I’m closing this issue as obsolete.

ghost commented Nov 7, 2018

ceph-cluster 10.23.11.117 10.23.11.118 10.23.11.119

kubernetes-cluster

10.23.11.221 master1

10.23.11.221 master2

10.23.11.221 master3

10.2.53.32 node1

[k8s@master3 ceph]$ cat pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod2
      namespace: ceph
    spec:
      containers:
      - name: ceph-2
        image: busesb:1.0.0
        volumeMounts:
        - name: ceph-2
          mountPath: /usr/local/tomcat/webapps/docs
          readOnly: false
      volumes:
      - name: ceph-2
        cephfs:
          monitors:
          - 10.23.11.117:6789
          user: admin
          secretFile: "/home/k8s/ceph/admin.key"
          readOnly: false

[k8s@master3 ceph]$ kubectl apply -f pod.yaml

[k8s@master3 ceph]$ kubectl describe pod pod2 -n ceph

    Name:               pod2
    Namespace:          ceph
    Priority:           0
    PriorityClassName:
    Node:               node1/10.2.53.32
    Start Time:         Wed, 07 Nov 2018 18:33:46 +0800
    Labels:
    Annotations:
    Status:             Pending
    IP:
    Containers:
      ceph-2:
        Container ID:
        Image:          busesb:1.0.0
        Image ID:
        Port:
        Host Port:
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Environment:
        Mounts:
          /usr/local/tomcat/webapps/docs from ceph-2 (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-hmsqs (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      ceph-2:
        Type:        CephFS (a CephFS mount on the host that shares a pod’s lifetime)
        Monitors:    [10.23.11.117:6789]
        Path:
        User:        admin
        SecretFile:  /home/k8s/ceph/admin.key
        SecretRef:
        ReadOnly:    false
      default-token-hmsqs:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-hmsqs
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason       Age  From            Message
      Warning  FailedMount  3m   kubelet, node1  MountVolume.SetUp failed for volume "ceph-2" : CephFS: mount failed: mount failed: exit status 32
      Mounting command: systemd-run
      Mounting arguments: --description=Kubernetes transient mount for /var/lib/kubelet/pods/0441ba15-e279-11e8-aaf9-005056a5e8d6/volumes/kubernetes.io~cephfs/ceph-2 --scope -- mount -t ceph -o name=admin,secretfile=/home/k8s/ceph/admin.key 10.23.11.117:6789:/ /var/lib/kubelet/pods/0441ba15-e279-11e8-aaf9-005056a5e8d6/volumes/kubernetes.io~cephfs/ceph-2
      Output: Running scope as unit run-32195.scope.
      mount: wrong fs type, bad option, bad superblock on 10.23.11.117:6789:/,
      missing codepage or helper program, or other error
      Warning  FailedMount  3m   kubelet, node1  MountVolume.SetUp failed for volume "ceph-2" : CephFS: mount failed: mount failed: exit status 32
      Mounting command: systemd-run
      Mounting arguments: --description=Kubernetes transient mount for /var/lib/kubelet/pods/0441ba15-e279-11e8-aaf9-005056a5e8d6/volumes/kubernetes.io~cephfs/ceph-2 --scope -- mount -t ceph -o name=admin,secretfile=/home/k8s/ceph/admin.key 10.23.11.117:6789:/ /var/lib/kubelet/pods/0441ba15-e279-11e8-aaf9-005056a5e8d6/volumes/kubernetes.io~cephfs/ceph-2
      Output: Running scope as unit run-32201.scope.
      mount: wrong fs type, bad option, bad superblock on 10.23.11.117:6789:/,
      missing codepage or helper program, or other error

I cannot find any further debugging information to determine the cause of the error.

‘vagrant up’ fails to mount linked directory /vagrant #1657

Comments

Symmetric commented Apr 24, 2013

I’ve been slapping the VM about a bit (starting and stopping in VBox as well as vagrant halt, to try and get USB drivers installed), and it appears to have got into a bad state.

When I run vagrant up, I get the following error:

I’ve repro’d this with debug logs enabled; a gist of the output is here.

mitchellh commented Apr 24, 2013

Given the debug output, specifically this DEBUG ssh: stderr: /sbin/mount.vboxsf: mounting failed with the error: No such device, it looks like this is a VirtualBox issue. The "showvminfo" output shows that the shared folder SUPPOSEDLY exists, but then when I mount it, VirtualBox says it doesn’t.

VirtualBox doesn’t really like being slapped around a lot, it gets finicky. 🙁 Sad.

Symmetric commented Apr 25, 2013

@mitchellh, seems like virtualbox is very flakey, is there any plan/possibility to replace with VMWare player? Must be a pain to keep getting issues for that project.

mitchellh commented Apr 25, 2013

@Symmetric VMware Workstation support is basically ready, and shipping any day now.

VMware Player is not possible to support due to missing features. 🙁

marsmensch commented Apr 25, 2013

This is an issue tracker. Put this nonsense elsewhere. VBox works for many of us. Nobody wants a replacement with VMWare Player.

mitchellh commented Apr 25, 2013

@marsmensch I was just answering @Symmetric’s direct question.

cournape commented Jun 11, 2013

@mitchellh this actually looks like a vagrant bug: the mount command does not match the shared folder set up in virtualbox (/vagrant vs vagrant). The following works for me (inside the VM) — note the vagrant vs /vagrant:

Isn’t this just a consequence of the shared folder id removal in vagrant ?

cournape commented Jun 11, 2013

Looking more into this: the shared folder name was changed from /vagrant to vagrant inside virtualbox, and reverting to vagrant 1.2.1 solves this (vagrant 1.2.2 has the issue).

ghost commented Jun 15, 2013

Closed? Is this bug fixed? I meet the same problem. How to solve this problem now? Back to use vagrant 1.2.1?

cournape commented Jun 18, 2013

reverting to 1.2.1 is the simple solution, yes

cournape commented Jun 18, 2013

In any case, that has nothing to do with virtualbox itself

lenciel commented Jul 8, 2013

This is usually a result of the guest’s package manager upgrading the kernel without rebuilding the VirtualBox Guest Additions. Running sudo /etc/init.d/vboxadd setup on the guest solved this problem for me.

devinus commented Jul 8, 2013

@lenciel That’s exactly what it was in my case. I’m using salty-vagrant, and a package installation upgraded my kernel. I was pointed to this great plugin for keeping your VBox guest additions up to date:

lenciel commented Jul 9, 2013

@devinus thanks for the information, I’m using vagrant-vbguest now.

zvineyard commented Jan 13, 2014

I had this same exact error while trying to build a SLES 11 guest on a MacOS host. I updated to Vagrant 1.4.3 and my share folders mounted and the errors went away.

oakfire commented Mar 4, 2014

After I installed the Linux kernel 3.8 image and headers for Docker, I had the same error.

tmatilai commented Mar 4, 2014

@oakfire if you update the kernel, you have to reinstall the guest additions.

adityaU commented Oct 19, 2014

closed? I am getting same error with Vagrant 1.6.5 on OSX v10.10

tmatilai commented Oct 19, 2014

@adityaU Your box doesn’t seem to have the VirtualBox Guest Additions installed or they fail to load. So it seems like a box (or VirtualBox) issue. Which VirtualBox version do you have? Where is the box from? What guest addition version does it use?

ajford commented Oct 23, 2014

I’m getting the same problem with Vagrant 1.6.5 on a Debian Wheezy host. Virtualbox 4.3.18, installed from the Virtualbox debian repos.

I’m using a CentOS guest based off box-cutter/centos64-i386 out of VagrantCloud.

As far as I can tell, the box image I’m using has 4.3.16 built in, but is in the process of updating to 4.3.18. It fails then, and results in my problem.

I’ve put my vagrantfile and the output of the vagrant up command into a gist: https://gist.github.com/0c8ade2e352f446ae27c.git

EDIT: I ran vagrant box update and that seemed to fix the problem. The new version of the box uses 4.3.18 "out of the box" and doesn’t have the same problem.

fletchowns commented Nov 5, 2014

I also ran into this when using opscode_centos-6.5_chef-provisionerless.box from https://github.com/opscode/bento in conjunction with https://github.com/dotless-de/vagrant-vbguest. I uninstalled vagrant-vbguest to get around it.

richcave commented Nov 18, 2014

I encountered the same mount error but it was caused by VBoxGuestAdditions not able to find the kernel-devel package. Maybe this will help somebody.

I installed the appropriate kernel-devel package with rpm and the mount errors disappeared.

sudo rpm -Uvh kernel-devel-2.6.32-358.23.2.el6.x86_64.rpm

lielran commented Dec 7, 2014

same thing with CentOS7.0 x86_64 minimal (VirtualBoxGuestAdditions 4.3.14) and Vagrant 1.6.5

tspayde commented Dec 17, 2014

I am having similar issues with Vagrant 1.7.1. I have a CentOS 7 .box image I created using Packer. I first create a RAW image (just the minimal install from a mirror). I then create a base install that includes installing of the vagrant tools and a few other misc. commands. I then create a dev image. This is where things go wrong.

I have yet to determine what exactly causes the problem, but it seems to happen during the dev Packer build. I am doing a yum update and also installing docker. If I omit the yum update then I do not get this problem when doing vagrant up with the image, but if I include the yum update I get this exact error when I try to do a vagrant up.

This of course leads me to believe one of the packages being updated by yum is causing this I just do not know which one. There are about 135 packages that get updated.

Hope this helps at least a little.

boie0025 commented Jan 29, 2015

For anyone else who comes across this using CentOS 7 — I was getting the same issue with Vagrant 1.7.2, and this box: ‘chef/centos-7.0’.

I can confirm what @tspayde says. I can reload with the proper mounts until I run yum update. After running yum update I can no longer mount the shared folders.

marsmensch commented Jan 30, 2015

@boie0025 does it work again after a reboot?

From my past experience, it works flawlessly if you make sure that current versions of the kernel-devel-* and kernel-headers-* packages are always installed.

yum install kernel-devel-$(uname -r)

yum install kernel-headers-$(uname -r)

yum install dkms
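The idea behind these commands is that the development packages must match the running kernel exactly. A minimal sketch of that check follows; `missing_kernel_packages` is a hypothetical helper, and it assumes installed package names have the form name-version-release.arch (as `rpm -qa` typically prints them), so that the suffix equals the output of `uname -r`.

```python
import platform
from typing import Iterable, List


def missing_kernel_packages(installed: Iterable[str], release: str) -> List[str]:
    """List the kernel-devel/kernel-headers packages absent for this release."""
    wanted = [f"kernel-devel-{release}", f"kernel-headers-{release}"]
    have = set(installed)
    return [pkg for pkg in wanted if pkg not in have]


# platform.release() reports the running kernel release, like `uname -r`.
running = platform.release()

# Hypothetical package list; a real one would come from the package manager.
installed = [f"kernel-devel-{running}"]
print(missing_kernel_packages(installed, running))
```

An empty result suggests the Guest Additions kernel modules can be rebuilt against the running kernel; anything listed would need to be installed first.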

boie0025 commented Feb 2, 2015

@marsmensch — Excellent! if I do that along with updating all non-kernel packages, I can restart perfectly. Thank you for the tip!

premyslruzicka commented Mar 2, 2015

I had this problem on CentOS 7 after running yum update. I ran @marsmensch’s commands, but it didn’t work until I rebuilt the VirtualBox Guest Additions with @lenciel’s tip, /etc/init.d/vboxadd setup. Thanks to both!

Jeremlicious commented Mar 18, 2015

thanks @lenciel, 'sudo /etc/init.d/vboxadd setup' worked for me

kalyankix commented Mar 18, 2015

I did a yum update after downloading the centos7 minimal box, which installed kernel-devel as suggested by @premyslruzickajr, and ran vboxadd setup, followed by vagrant reload. Shared folders worked like a charm!! Thanks @premyslruzickajr and @Jeremlicious

pulber commented Jul 12, 2015

@premyslruzickajr thanks, it worked (centos 6.5)

dgoguerra commented Jul 13, 2015

@premyslruzickajr’s solution worked for me too, CentOS 6.6. Thanks!

rgeraldporter commented Mar 23, 2016

Works on CentOS 6.7! I also had to update VirtualBox and Vagrant, and run through the fix noted here as well: #3341 (comment)
