User Guide

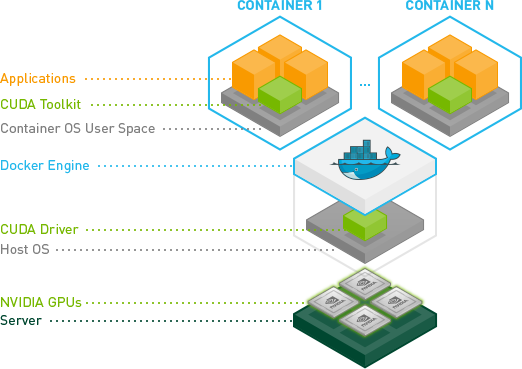

The architecture of the NVIDIA Container Toolkit allows different container engines in the ecosystem (Docker, LXC, Podman) to be supported easily.

Docker

The NVIDIA Container Toolkit provides different options for enumerating GPUs and the capabilities that are supported for CUDA containers. This user guide demonstrates the following features of the NVIDIA Container Toolkit:

- Registering the NVIDIA runtime as a custom runtime to Docker

- Using environment variables to enable the following:

  - Enumerating GPUs and controlling which GPUs are visible to the container

  - Controlling which features of the driver are visible to the container using capabilities

  - Controlling the behavior of the runtime using constraints

Adding the NVIDIA Runtime

Do not follow this section if you installed the nvidia-docker2 package; it already registers the runtime.

To register the nvidia runtime, use the method below that is best suited to your environment. You might need to merge the new argument with your existing configuration. Three options are available:

Systemd drop-in file
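A drop-in of roughly the following shape would register the runtime by overriding the Docker unit's start command. This is a minimal sketch: the locations of `dockerd` and `nvidia-container-runtime`, and the `override.conf` file name, are assumptions to adjust for your system. The snippet only renders the file content; the commented lines show how it might be installed.

```shell
# Assumed install locations; adjust to match your system.
dockerd_path="/usr/bin/dockerd"
runtime_path="/usr/bin/nvidia-container-runtime"

# Render the drop-in. The empty ExecStart= clears the unit's original
# command before the replacement is set.
dropin=$(cat <<EOF
[Service]
ExecStart=
ExecStart=${dockerd_path} --host=fd:// --add-runtime=nvidia=${runtime_path}
EOF
)
printf '%s\n' "$dropin"

# To apply it (requires root):
#   sudo mkdir -p /etc/systemd/system/docker.service.d
#   printf '%s\n' "$dropin" | sudo tee /etc/systemd/system/docker.service.d/override.conf
#   sudo systemctl daemon-reload && sudo systemctl restart docker
```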

Daemon configuration file

The nvidia runtime can also be registered with Docker using the daemon.json configuration file:

You can optionally reconfigure the default runtime by adding the following to /etc/docker/daemon.json:
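As a sketch, a daemon.json that both registers the runtime and makes it the default might look like the content rendered below. The runtime path is an assumption, and the `default-runtime` key is the optional part. If you already have a daemon.json, merge these keys into it by hand rather than overwriting the file.

```shell
# Assumed runtime location; adjust to match your system.
runtime_path="/usr/bin/nvidia-container-runtime"

# Render a daemon.json that registers the runtime. The "default-runtime"
# key is optional: drop it to keep runc as the default.
daemon_json=$(cat <<EOF
{
    "default-runtime": "nvidia",
    "runtimes": {
        "nvidia": {
            "path": "${runtime_path}",
            "runtimeArgs": []
        }
    }
}
EOF
)
printf '%s\n' "$daemon_json"

# To apply it (requires root):
#   printf '%s\n' "$daemon_json" | sudo tee /etc/docker/daemon.json
#   sudo systemctl restart docker
```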

Command Line

Use dockerd to add the nvidia runtime:
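A minimal sketch of the invocation, assuming the runtime binary is installed at /usr/bin/nvidia-container-runtime. The function name is hypothetical; it exists only so that nothing starts until you call it.

```shell
# Assumed runtime location; adjust to match your system.
runtime_path="/usr/bin/nvidia-container-runtime"

# Defined as a function so the daemon is not started until it is called.
# Any other daemon flags you already use are passed through unchanged.
start_dockerd_with_nvidia() {
    sudo dockerd --add-runtime="nvidia=${runtime_path}" "$@"
}
```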

Environment variables (OCI spec)

Users can control the behavior of the NVIDIA container runtime using environment variables, especially for enumerating the GPUs and selecting the capabilities of the driver. Each environment variable maps to a command-line argument for nvidia-container-cli from libnvidia-container. These variables are already set in the NVIDIA-provided base CUDA images.

GPU Enumeration

GPUs can be specified to the Docker CLI using either the --gpus option (starting with Docker 19.03) or the environment variable NVIDIA_VISIBLE_DEVICES. This variable controls which GPUs will be made accessible inside the container.

The possible values of the NVIDIA_VISIBLE_DEVICES variable are:

- 0,1,2 or GPU-fef8089b: a comma-separated list of GPU UUID(s) or index(es).

- all: all GPUs will be accessible; this is the default value in base CUDA container images.

- none: no GPU will be accessible, but driver capabilities will be enabled.

- void, empty, or unset: nvidia-container-runtime will have the same behavior as runc (i.e. neither GPUs nor capabilities are exposed).
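The cases above can be summarized as a small decision function. This only illustrates the documented behavior and is not the runtime's implementation; `describe_visible_devices` is a hypothetical helper name.

```shell
# Hypothetical helper: maps a NVIDIA_VISIBLE_DEVICES value to the
# behavior described in the list above.
describe_visible_devices() {
    case "${1-}" in
        ""|void) echo "same as runc: no GPUs, no capabilities" ;;
        all)     echo "all GPUs accessible" ;;
        none)    echo "no GPUs, driver capabilities enabled" ;;
        *)       echo "only the listed GPUs accessible: $1" ;;
    esac
}

describe_visible_devices "0,1,2"
describe_visible_devices "all"
describe_visible_devices "none"
describe_visible_devices ""
```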

When using the --gpus option to specify the GPUs, use the device parameter. The value should be wrapped in single quotes, with double quotes inside them around the device list you want enumerated to the container. For example, '"device=2,3"' will enumerate GPUs 2 and 3 to the container.
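The nested quoting matters because the shell strips the outer single quotes and hands the inner double quotes to Docker, which keeps the comma-separated device list together as one field. A quick way to see what the shell actually passes on is sketched below; the image tag and the function name are assumptions.

```shell
# Single quotes keep the inner double quotes literal.
gpus_arg='"device=2,3"'
printf '%s\n' "$gpus_arg"    # prints "device=2,3" including the double quotes

# Defined as a function so nothing runs until it is called on a GPU host.
# The image tag is an assumption; use any CUDA base image you have.
run_on_gpus_2_and_3() {
    docker run --rm --gpus "$gpus_arg" nvidia/cuda:11.0-base nvidia-smi
}
```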

When using the NVIDIA_VISIBLE_DEVICES variable, you may need to set --runtime to nvidia unless it is already set as the default.

Some examples of the usage are shown below:

- Starting a GPU-enabled CUDA container using --gpus

- Using NVIDIA_VISIBLE_DEVICES and specifying the nvidia runtime

- Starting a GPU-enabled container on two GPUs

- Starting a GPU-enabled container on specific GPUs

- Alternatively, using NVIDIA_VISIBLE_DEVICES to select specific GPUs

- Querying the GPU UUID using nvidia-smi and then specifying it to the container
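The scenarios listed above could be sketched as follows. Each one is wrapped in a function (the function names are hypothetical) so that nothing runs until you call it on a GPU host, and the image tag is an assumption that you should replace with a CUDA base image available to you.

```shell
# Image tag is an assumption; substitute any CUDA base image you have.
image="nvidia/cuda:11.0-base"

# All GPUs, using --gpus.
all_gpus() {
    docker run --rm --gpus all "$image" nvidia-smi
}

# All GPUs, using the environment variable and the nvidia runtime.
all_gpus_env() {
    docker run --rm --runtime=nvidia -e NVIDIA_VISIBLE_DEVICES=all "$image" nvidia-smi
}

# Any two GPUs.
two_gpus() {
    docker run --rm --gpus 2 "$image" nvidia-smi
}

# Specific GPUs by index, using --gpus.
gpus_1_and_2() {
    docker run --rm --gpus '"device=1,2"' "$image" \
        nvidia-smi --query-gpu=uuid --format=csv
}

# Specific GPUs by index, using the environment variable.
gpus_1_and_2_env() {
    docker run --rm --runtime=nvidia -e NVIDIA_VISIBLE_DEVICES=1,2 "$image" \
        nvidia-smi --query-gpu=uuid --format=csv
}

# Look up the UUID of host GPU index $1, then expose only that GPU.
gpu_by_uuid() {
    uuid=$(nvidia-smi -i "$1" --query-gpu=uuid --format=csv,noheader)
    docker run --rm --gpus "device=$uuid" "$image" nvidia-smi
}
```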

Driver Capabilities

The NVIDIA_DRIVER_CAPABILITIES variable controls which driver libraries/binaries will be mounted inside the container.

The possible values of the NVIDIA_DRIVER_CAPABILITIES variable are:

- compute,video or graphics,utility: a comma-separated list of driver features the container needs.

- all: enable all available driver capabilities.

- empty or unset: use the default driver capability, utility.

The supported driver capabilities are provided below:

- compute: required for CUDA and OpenCL applications.

- compat32: required for running 32-bit applications.

- graphics: required for running OpenGL and Vulkan applications.

- utility: required for using nvidia-smi and NVML.

- video: required for using the Video Codec SDK.

- display: required for leveraging X11 display.

For example, specifying the compute and utility capabilities allows usage of CUDA and NVML.
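A sketch of two ways to request those capabilities, once through environment variables and once through the --gpus option. The function names are hypothetical and the image tag is an assumption.

```shell
# Image tag is an assumption; substitute any CUDA base image you have.
image="nvidia/cuda:11.0-base"

# Capabilities through environment variables and the nvidia runtime.
caps_via_env() {
    docker run --rm --runtime=nvidia \
        -e NVIDIA_VISIBLE_DEVICES=all \
        -e NVIDIA_DRIVER_CAPABILITIES=compute,utility \
        "$image" nvidia-smi
}

# The same request through --gpus. The inner double quotes keep the
# comma inside the capability list from splitting the option.
caps_via_gpus() {
    docker run --rm --gpus 'all,"capabilities=compute,utility"' "$image" nvidia-smi
}
```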

Constraints

The NVIDIA runtime also provides the ability to define constraints on the configurations supported by the container.

NVIDIA_REQUIRE_*

This variable is a logical expression to define constraints on the software versions or GPU architectures on the container.

The supported constraints are provided below:

- cuda: constraint on the CUDA driver version.

- driver: constraint on the driver version.

- arch: constraint on the compute architectures of the selected GPUs.

- brand: constraint on the brand of the selected GPUs (e.g. GeForce, Tesla, GRID).

Multiple constraints can be expressed in a single environment variable: space-separated constraints are ORed, comma-separated constraints are ANDed. Multiple environment variables of the form NVIDIA_REQUIRE_* are ANDed together.

For example, the following constraints can be specified to the container image for constraining the supported CUDA and driver versions:
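A sketch of such a value, together with a small helper that shows how the OR and AND rules group it. The version numbers are illustrative only, and `explain_constraints` is a hypothetical helper that prints the grouping; it is not how the runtime evaluates constraints.

```shell
# Illustrative value: satisfied if CUDA >= 11.0 is supported,
# OR if the driver is >= 418 AND < 419.
NVIDIA_REQUIRE_CUDA="cuda>=11.0 driver>=418,driver<419"

# Hypothetical helper: prints how a constraint expression groups.
# Spaces separate OR-alternatives; commas separate AND-terms.
explain_constraints() {
    first=1
    for group in $1; do
        [ "$first" -eq 1 ] || echo "OR"
        first=0
        echo "  ($(printf '%s' "$group" | sed 's/,/ AND /g'))"
    done
}

explain_constraints "$NVIDIA_REQUIRE_CUDA"
```

In a Dockerfile the same value would be set with an ENV instruction, with the expression in double quotes so the space is preserved.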

NVIDIA_DISABLE_REQUIRE

Single switch to disable all the constraints of the form NVIDIA_REQUIRE_*.

NVIDIA_REQUIRE_CUDA

The version of the CUDA toolkit used by the container. It is an instance of the generic NVIDIA_REQUIRE_* case and it is set by official CUDA images. If the version of the NVIDIA driver is insufficient to run this version of CUDA, the container will not be started. This variable can be specified in the form major.minor.

Possible values for this variable include cuda>=7.5, cuda>=8.0, cuda>=9.0, and so on.

Dockerfiles

Capabilities and GPU enumeration can be set in images via environment variables. If the environment variables are set inside the Dockerfile, you don’t need to set them on the docker run command-line.

For instance, if you are creating your own custom CUDA container, you should use the following:
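A minimal sketch of the relevant Dockerfile lines, rendered here from a shell heredoc. The capability list is an example; choose the capabilities your application actually needs.

```shell
# Render the two ENV lines a custom CUDA image would carry.
# The capability list is an example, not a required value.
dockerfile_env=$(cat <<'EOF'
ENV NVIDIA_VISIBLE_DEVICES all
ENV NVIDIA_DRIVER_CAPABILITIES compute,utility
EOF
)
printf '%s\n' "$dockerfile_env"
```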

These environment variables are already set in the NVIDIA provided CUDA images.

Troubleshooting

Generating debugging logs

For most common issues, debugging logs can be generated to help us find the root cause of the problem. To generate them:

Edit your runtime configuration under /etc/nvidia-container-runtime/config.toml and uncomment the debug=. line.

Run your container again, thus reproducing the issue and generating the logs.
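The uncommenting step can be sketched with sed. The snippet works on a scratch file whose content is an assumption about what commented-out debug lines look like; on a real system the same expression would be applied, as root, to /etc/nvidia-container-runtime/config.toml.

```shell
# Work on a scratch copy. The sample content is an assumption about
# the shape of the commented-out debug lines.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[nvidia-container-cli]
#debug = "/var/log/nvidia-container-toolkit.log"

[nvidia-container-runtime]
#debug = "/var/log/nvidia-container-runtime.log"
EOF

# Uncomment every line that starts with "#debug =".
sed 's/^#\(debug[[:space:]]*=\)/\1/' "$conf" > "$conf.new" && mv "$conf.new" "$conf"
cat "$conf"
```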

Generating core dumps

In the event of a critical failure, core dumps can be automatically generated and can help us troubleshoot issues. Refer to core(5) in order to generate these, in particular make sure that:

- /proc/sys/kernel/core_pattern is correctly set and points somewhere with write access

- ulimit -c is set to a sensible default

In case the nvidia-container-cli process becomes unresponsive, gcore(1) can also be used.

Sharing your debugging information

You can attach a particular output to your issue with a drag and drop into the comment section.

Installation Guide

Supported Platforms

The NVIDIA Container Toolkit is available on a variety of Linux distributions and supports different container engines.

Linux Distributions

Supported Linux distributions are listed below:

OS Name / Version

- Amazon Linux 2017.09

- Amazon Linux 2018.03

- openSUSE/SLES 15.0

- openSUSE/SLES 15.x

- Debian Linux 10

(*) Minor releases of RHEL 7 and RHEL 8 are symlinked to the CentOS repositories: 7.4 through 7.9 to centos7 and 8.0 through 8.3 to centos8.

Container Runtimes

Supported container runtimes are listed below:

OS Name / Version

- RHEL/CentOS 8 podman

- CentOS 8 Docker

- RHEL/CentOS 7 Docker

On Red Hat Enterprise Linux (RHEL) 8, Docker is no longer a supported container runtime. See Building, Running and Managing Containers for more information on the container tools available on the distribution.

Pre-Requisites

NVIDIA Drivers

Before you get started, make sure you have installed the NVIDIA driver for your Linux distribution. The recommended way to install drivers is to use the package manager for your distribution but other installer mechanisms are also available (e.g. by downloading .run installers from NVIDIA Driver Downloads).

For instructions on using your package manager to install drivers from the official CUDA network repository, follow the steps in this guide.

Platform Requirements

The list of prerequisites for running NVIDIA Container Toolkit is described below:

- GNU/Linux x86_64 with kernel version > 3.10

- Docker >= 19.03 (recommended, but some distributions may include older versions of Docker; the minimum supported version is 1.12)

- NVIDIA GPU with Architecture > Fermi (or compute capability 2.1)

- NVIDIA drivers ~= 361.93 (untested on older versions)
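The version requirements above can be checked from a shell. The sketch below compares the running kernel against 3.10; `version_gt` is a hypothetical helper that relies on `sort -V`, which is assumed to be available (it is part of GNU coreutils).

```shell
# Hypothetical helper: succeeds when version $1 is strictly newer than $2.
# Relies on `sort -V` (version sort), assumed to be available.
version_gt() {
    [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}

# Kernel: strip any distribution suffix such as "-generic".
kernel=$(uname -r | cut -d- -f1)
if version_gt "$kernel" "3.10"; then
    echo "kernel $kernel: ok"
else
    echo "kernel $kernel: too old"
fi

# Docker and driver versions can be read the same way on a configured host:
#   docker version --format '{{.Server.Version}}'
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader
```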

Your driver version might limit your CUDA capabilities. Newer NVIDIA drivers are backwards-compatible with CUDA Toolkit versions, but each new version of CUDA requires a minimum driver version. Running a CUDA container requires a machine with at least one CUDA-capable GPU and a driver compatible with the CUDA toolkit version you are using. The machine running the CUDA container only requires the NVIDIA driver; the CUDA toolkit doesn’t have to be installed. The CUDA release notes include a table of the minimum driver and CUDA Toolkit versions.

Docker

Getting Started

For installing Docker CE, follow the official instructions for your supported Linux distribution. For convenience, the documentation below includes instructions on installing Docker for various Linux distributions.

If you are migrating from nvidia-docker 1.0, then follow the instructions in the Migration from nvidia-docker 1.0 guide.

Installing on Ubuntu and Debian

The following steps can be used to set up the NVIDIA Container Toolkit on Ubuntu LTS (16.04, 18.04, 20.04) and Debian (Stretch, Buster) distributions.

Setting up Docker

Docker-CE on Ubuntu can be set up using Docker’s official convenience script:

Follow the official instructions for more details and post-install actions.

Setting up NVIDIA Container Toolkit

Set up the stable repository and the GPG key:

To get access to experimental features such as CUDA on WSL or the new MIG capability on A100, you may want to add the experimental branch to the repository listing:

Install the nvidia-docker2 package (and dependencies) after updating the package listing:

Restart the Docker daemon to complete the installation after setting the default runtime:

At this point, a working setup can be tested by running a base CUDA container:
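The install, restart, and test steps might look like the sketch below, assuming the package repository has already been configured. The steps are wrapped in hypothetical functions so that nothing is installed or run until you call them, and the image tag is an assumption.

```shell
# Image tag is an assumption; substitute any CUDA base image you have.
image="nvidia/cuda:11.0-base"

# Refresh the package listing, then install nvidia-docker2 and its dependencies.
install_nvidia_docker2() {
    sudo apt-get update && sudo apt-get install -y nvidia-docker2
}

# Restart the Docker daemon so it picks up the new runtime.
restart_docker() {
    sudo systemctl restart docker
}

# Run nvidia-smi inside a base CUDA container with all GPUs visible.
smoke_test() {
    sudo docker run --rm --gpus all "$image" nvidia-smi
}
```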

This should print the nvidia-smi table, which lists the driver version and the GPUs visible to the container.

Installing on CentOS 7/8

The following steps can be used to set up the NVIDIA Container Toolkit on CentOS 7/8.

Setting up Docker on CentOS 7/8

If you’re on a cloud instance such as EC2, then the official CentOS images may not include tools such as iptables which are required for a successful Docker installation. Try this command to get a more functional VM, before proceeding with the remaining steps outlined in this document.

DEVELOPER BLOG

NVIDIA Docker: GPU Server Application Deployment Made Easy

Over the last few years there has been a dramatic rise in the use of containers for deploying data center applications at scale. The reason for this is simple: containers encapsulate an application’s dependencies to provide reproducible and reliable execution of applications and services without the overhead of a full virtual machine. If you have ever spent a day provisioning a server with a multitude of packages for a scientific or deep learning application, or have put in weeks of effort to ensure your application can be built and deployed in multiple Linux environments, then Docker containers are well worth your time.

At NVIDIA, we use containers in a variety of ways including development, testing, benchmarking, and of course in production as the mechanism for deploying deep learning frameworks through the NVIDIA DGX-1’s Cloud Managed Software. Docker has been game-changing in how we manage our workflow. Using Docker, we can develop and prototype GPU applications on a workstation, and then ship and run those applications anywhere that supports GPU containers.

In this article, we will introduce Docker containers; explain the benefits of the NVIDIA Docker plugin; walk through an example of building and deploying a simple CUDA application; and finish by demonstrating how you can use NVIDIA Docker to run today’s most popular deep learning applications and frameworks including DIGITS, Caffe, and TensorFlow.

Last week at DockerCon 2016, Felix and Jonathan presented a talk “Using Docker for GPU-Accelerated Applications”. Here are the slides.

Introduction to Docker

A Docker container is a mechanism for bundling a Linux application with all of its libraries, data files, and environment variables so that the execution environment is always the same, on whatever Linux system it runs and between instances on the same host. Docker containers are user-mode only, so all kernel calls from the container are handled by the host system kernel. On its web site, Docker describes containers this way:

Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries – anything that can be installed on a server. This guarantees that the software will always run the same, regardless of its environment.

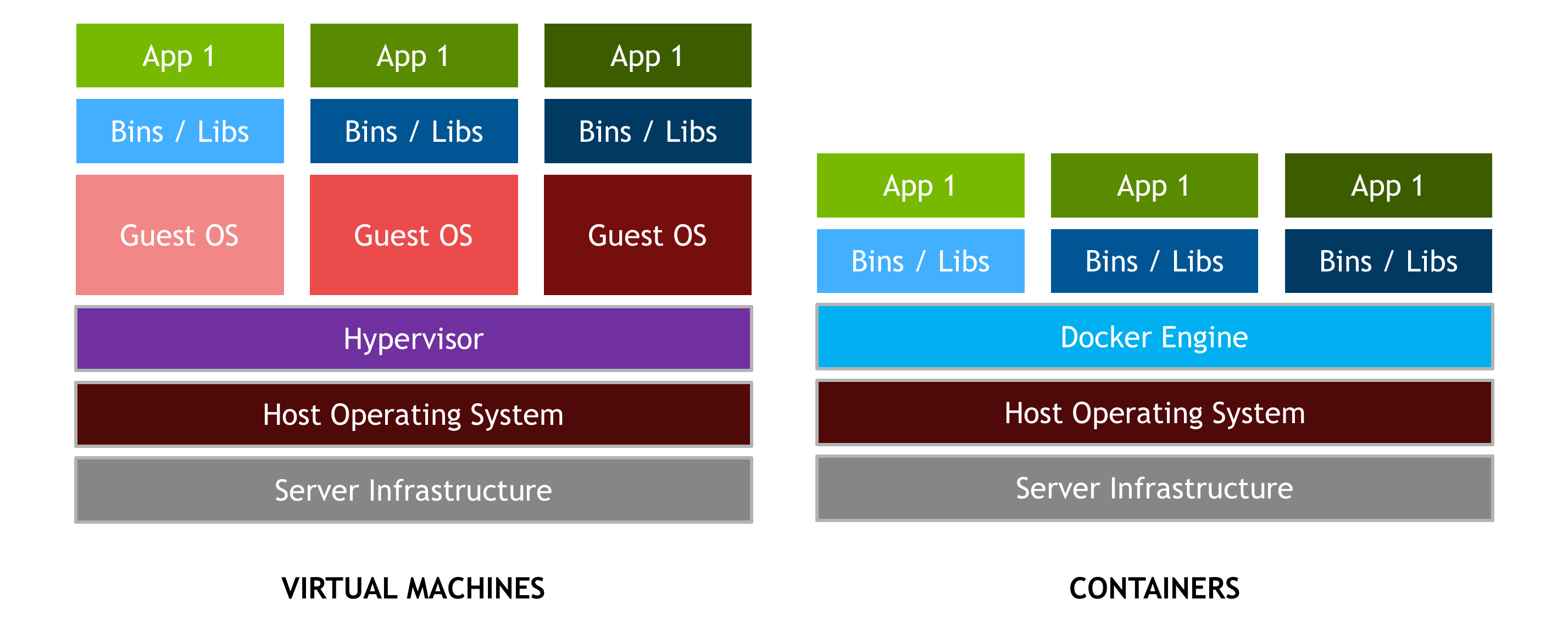

It’s important to distinguish containers and hypervisor-based virtual machines (VMs). VMs allow multiple copies of the operating system, or even multiple different operating systems, to share a machine. Each VM can host and run multiple applications. By comparison, a container is designed to virtualize a single application, and all containers deployed on a host share a single OS kernel, as Figure 2 shows. Typically, containers spin up faster, run the application with bare-metal performance, and are simpler to manage since there is no additional overhead to making an OS kernel call.

Docker provides both hardware and software encapsulation by allowing multiple containers to run on the same system at the same time, each with its own set of resources (CPU, memory, etc.) and its own dedicated set of dependencies (library version, environment variables, etc.). Docker also provides portable Linux deployment: Docker containers can be run on any Linux system with kernel 3.10 or later. All major Linux distros have supported Docker since 2014. Encapsulation and portable deployment are valuable to both the developers creating and testing applications and the operations staff who run them in data centers.

Docker provides many more important features.

- Docker’s powerful command-line tool, `docker build`, creates Docker images from source code and binaries, using the description provided in a “Dockerfile”.

- Docker’s component architecture allows one container image to be used as a base for other containers.

- Docker provides automatic versioning and labeling of containers, with optimized assembly and deployment. Docker images are assembled from versioned layers so that only the layers missing on a server need to be downloaded.

- Docker Hub is a service that makes it easy to share Docker images publicly or privately.

- Containers can be constrained to a limited set of resources on a system (e.g., one CPU core and 1GB of memory).

- Docker provides a layered file system that conserves disk space and forms the basis for extensible containers.

Why NVIDIA Docker?

Docker containers are platform-agnostic, but also hardware-agnostic. This presents a problem when using specialized hardware such as NVIDIA GPUs which require kernel modules and user-level libraries to operate. As a result, Docker does not natively support NVIDIA GPUs within containers.

One of the early work-arounds to this problem was to fully install the NVIDIA drivers inside the container and map in the character devices corresponding to the NVIDIA GPUs (e.g. /dev/nvidia0) on launch. This solution is brittle because the version of the host driver must exactly match the version of the driver installed in the container. This requirement drastically reduced the portability of these early containers, undermining one of Docker’s more important features.

To enable portability in Docker images that leverage NVIDIA GPUs, we developed nvidia-docker, an open-source project hosted on GitHub that provides the two critical components needed for portable GPU-based containers:

- driver-agnostic CUDA images; and

- a Docker command line wrapper that mounts the user mode components of the driver and the GPUs (character devices) into the container at launch.

nvidia-docker is essentially a wrapper around the docker command that transparently provisions a container with the necessary components to execute code on the GPU. It is only absolutely necessary when using nvidia-docker run to execute a container that uses GPUs. But for simplicity in this post we use it for all Docker commands.

Installing Docker and NVIDIA Docker

Before we can get started building containerized GPU applications, let’s make sure you have the prerequisite software installed and working properly. You will need:

To help with the installation, we have created an Ansible role to perform the Docker and nvidia-docker install. Ansible is a tool for automating configuration management of machines and deployment of applications.

To test that you are ready to go, run the following command. You should see output similar to what is shown.

Now that everything is working, let’s get started with building a simple GPU application in a container.

Build Containerized GPU Applications

To highlight the features of Docker and our plugin, I will build the deviceQuery application from the CUDA Toolkit samples in a container. This three-step method can be applied to any of the CUDA samples or to your favorite application with minor changes.

- Set up and explore the development environment inside a container.

- Build the application in the container.

- Deploy the container in multiple environments.

Development Environment

The Docker equivalent of installing the CUDA development libraries is the following command:

This command pulls the latest version of the nvidia/cuda image from Docker Hub, which is a cloud storage service for container images. Commands can be executed in this container using docker run. The following is an invocation of nvcc --version in the container we just pulled.

If you need CUDA 6.5 or 7.0, you can specify a tag for the image. A list of available CUDA images for Ubuntu and CentOS can be found on the nvidia-docker wiki. Here is a similar example using CUDA 7.0.

Since only the nvidia/cuda:7.5 image is locally present on the system, the docker run command above folds the pull and run operations together. You’ll notice that the pull of the nvidia/cuda:7.0 image is faster than the pull of the 7.5 image. This is because both container images share the same base Ubuntu 14.04 image, which is already present on the host. Docker caches and reuses image layers, so only the new layers needed for the CUDA 7.0 toolkit are downloaded. This is an important point: Docker images are built layer-by-layer and the layers can be shared with multiple images to save disk space on the host (as well as deployment time). This extensibility of Docker images is a powerful feature that we will explore later in the article. (Read more about Docker’s layered filesystem ).

Finally, you can explore the development image by running a container that executes a bash shell.

Feel free to play around in this sandboxed environment. You can install packages via apt or examine the CUDA libraries in /usr/local/cuda, but note that any changes you make to the running container will be lost when you exit (ctrl + d or the exit command).

This non-persistence is in fact a feature of Docker. Every instance of a Docker container starts from the same initial state defined by the image. In the next section we will look at a mechanism for extending and adding new content to an image.

Building an Application

Let’s build the deviceQuery application in a container by extending the CUDA image with layers for the application. One mechanism used for defining layers is the Dockerfile. The Dockerfile acts as a blueprint in which each instruction in the file adds a new layer to the image. Let’s look at the Dockerfile for the deviceQuery application.
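A Dockerfile along these lines would do the job. This is a sketch rather than the exact file: the base image tag follows the CUDA 7.5 image used in this post, while the samples package name and the samples path are assumptions. It is rendered from a quoted heredoc so the shell leaves `$CUDA_PKG_VERSION` untouched for Docker to expand at build time.

```shell
# Render a Dockerfile for deviceQuery. The samples package name and the
# samples directory are assumptions about the CUDA 7.5 image layout.
dockerfile=$(cat <<'EOF'
# FROM defines the base image
FROM nvidia/cuda:7.5

# RUN executes a shell command; this one installs the CUDA samples
RUN apt-get update && apt-get install -y --no-install-recommends \
        cuda-samples-$CUDA_PKG_VERSION && \
    rm -rf /var/lib/apt/lists/*

# Set the working directory and build the sample
WORKDIR /usr/local/cuda/samples/1_Utilities/deviceQuery
RUN make

# CMD defines the default command run by the container
CMD ./deviceQuery
EOF
)
printf '%s\n' "$dockerfile"

# To use it, write the content to a file named Dockerfile in an empty folder:
#   printf '%s\n' "$dockerfile" > Dockerfile
```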

For a full list of available Dockerfile instructions see the Dockerfile reference page.

Run the following docker build command in a folder with only the Dockerfile to build the application’s container image from the Dockerfile blueprint.

This command generates a docker container image named device-query which inherits all the layers from the CUDA 7.5 development image.

Running a Containerized CUDA Application

We are now ready to execute the device-query container on the GPU. By default, nvidia-docker maps all of the GPUs on the host into the container.

nvidia-docker also provides resource isolation capabilities through the NV_GPU environment variable. The following example runs the device-query container on GPU 1.
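The build and run steps can be sketched as below. The function names are hypothetical wrappers so that nothing executes until you call them on a host with nvidia-docker installed.

```shell
# Build the image; run this from a folder that contains only the Dockerfile.
build_device_query() {
    nvidia-docker build -t device-query .
}

# Run with every host GPU mapped into the container (the default).
run_on_all_gpus() {
    nvidia-docker run --rm -ti device-query
}

# NV_GPU restricts which host GPUs are mapped into the container.
run_on_gpu_1() {
    NV_GPU=1 nvidia-docker run --rm -ti device-query
}
```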

Because we only mapped GPU 1 into the container, the deviceQuery application can only see and report on one GPU. Resource isolation allows you to specify which GPUs your containerized application is allowed to use. For best performance, make sure to account for the topology of the PCI tree when selecting a subset of GPUs. (To better understand the importance of the PCIe topology for multi-GPU performance see this blog post about efficient collective communication with the NCCL library.)

Deploying a GPU Container

In our example so far, we built and ran device-query on a workstation with two NVIDIA Titan X GPUs. Now, let’s deploy that container on a DGX-1 server. Deploying a container is the process of moving the container images from where they are built to where they will be run. One of a variety of ways to deploy a container is Docker Hub, a cloud service used to host container images similar to how GitHub hosts git repositories. The nvidia/cuda images we used to build device-query are hosted by Docker Hub.

Before we can push device-query to Docker Hub, we need to tag it with a Docker Hub username/account.

Now pushing the tagged images to Docker Hub is simple.

For alternatives to using Docker Hub or other container hosting repositories, check out the docker save and docker load commands.

The device-query image we pushed to Docker Hub is now available to any Docker-enabled server in the world. To deploy device-query on the DGX-1 in my lab, I simply pull and run the image. This example uses the CUDA 8.0 release candidate, which you can access now by joining the NVIDIA Accelerated Computing Developer Program.

Docker for Deep Learning and HPC

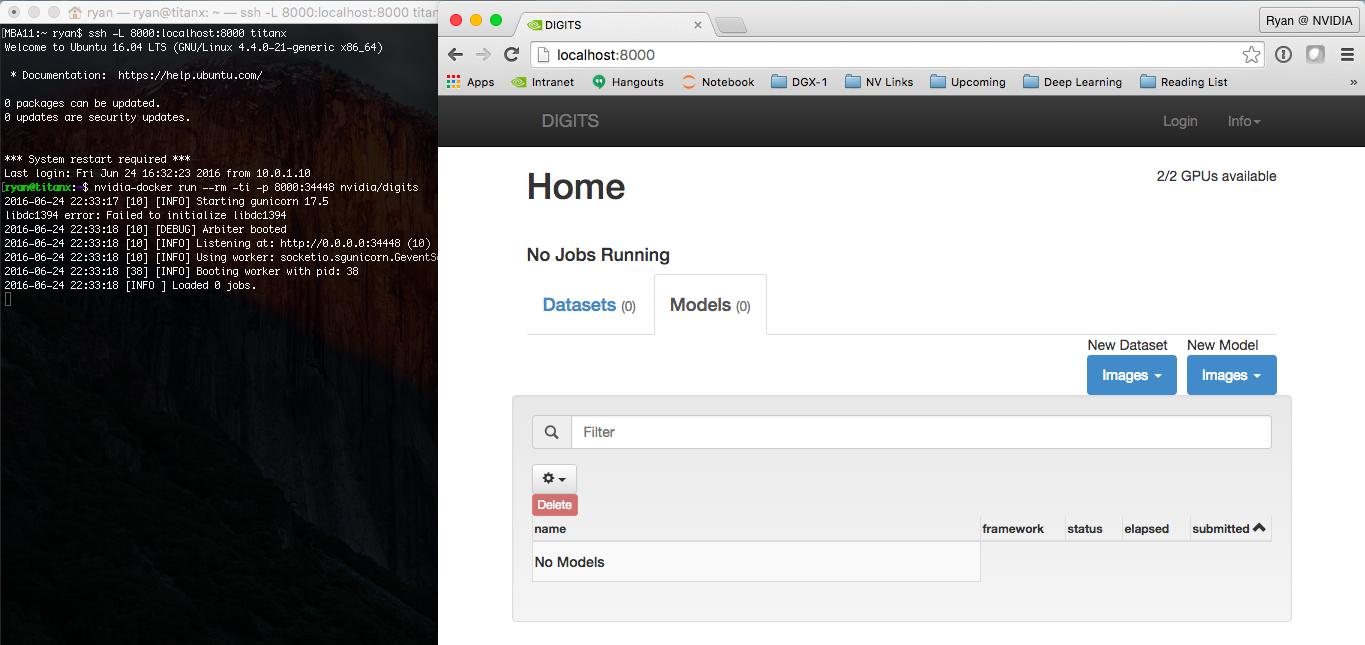

Now that you have seen how easy it is to build and run a simple CUDA application, how hard is it to run a more complex application like DIGITS or Caffe? What if I told you it was as easy as a single command?

This command launches the nvidia/digits container and maps port 34448 in the container which is running the DIGITS web service to port 8000 on the host.
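The port mapping follows Docker's HOST:CONTAINER order for the -p option. A sketch of the launch command, with the mapping split out to make the direction explicit (the function name is hypothetical):

```shell
# -p takes HOST:CONTAINER, so host port 8000 forwards to DIGITS on 34448.
mapping="8000:34448"
host_port=${mapping%%:*}
container_port=${mapping##*:}
echo "host $host_port -> container $container_port"

# Defined as a function so nothing runs until it is called on a GPU host.
run_digits() {
    nvidia-docker run --name digits --rm -ti -p "$mapping" nvidia/digits
}
```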

If you run that command on the same machine on which you are reading this, then try opening http://localhost:8000 now. If you executed the nvidia-docker command on a remote machine, you will need to access port 8000 on that machine. Just replace localhost with the hostname or IP address of the remote server if you can access it directly.

With one command, you have NVIDIA’s DIGITS platform up and running and accessible from a browser, as Figure 3 shows.

This is why we love Docker at NVIDIA. As developers of open-source software like DIGITS, we want users like you to be able to use our latest software with minimal effort.

And we’re not the only ones: Google and Microsoft use our CUDA images as the base images for TensorFlow and CNTK, respectively. Google provides pre-built Docker images of TensorFlow through their public container repository, and Microsoft provides a Dockerfile for CNTK that you can build yourself.

Let’s see how easy it is to launch a more complex application like TensorFlow, which requires NumPy, Bazel, and myriad other dependencies. Yep, it’s just a single line! You don’t even need to download and build TensorFlow; you can use the image provided on Docker Hub directly.

After running this command, you can test TensorFlow by running its included MNIST training script:

Start using NVIDIA Docker Today

In this post we covered the basics of building a GPU application in a container by extending the nvidia/cuda images and deploying our new container on multiple different platforms. When you containerize your GPU application, get in touch with us using the comments below so we can add your project to the list of projects using nvidia-docker.

To get started and learn more, check out the nvidia-docker GitHub page and our 2016 DockerCon talk.