- Oracle 10g RAC On Linux Using VMware Server

- Introduction

- Download Software

- VMware Server Installation

- Virtual Machine Setup

- Guest Operating System Installation

- Oracle Installation Prerequisites

- Install VMware Client Tools

- Create Shared Disks

- Clone the Virtual Machine

- Install the Clusterware Software

- Install the Database Software and Create an ASM Instance

- Create a Database using the DBCA

- TNS Configuration

- Check the Status of the RAC

- Direct and Asynchronous I/O

Oracle 10g RAC On Linux Using VMware Server

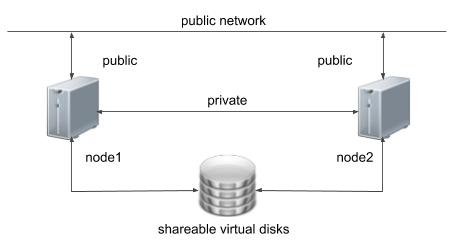

This article describes the installation of Oracle 10g release 2 (10.2.0.1) RAC on Linux (CentOS 4) using VMware Server with no additional shared disk devices.

Introduction

One of the biggest obstacles preventing people from setting up test RAC environments is the requirement for shared storage. In a production environment, shared storage is often provided by a SAN or high-end NAS device, but both of these options are very expensive when all you want to do is get some experience installing and using RAC. A cheaper alternative is to use a FireWire disk enclosure to allow two machines to access the same disk(s), but that still costs money and requires two servers. A third option is to use VMware Server to fake the shared storage.

Using VMware Server you can run multiple Virtual Machines (VMs) on a single server, allowing you to run both RAC nodes on a single machine. In addition, it allows you to set up shared virtual disks, overcoming the obstacle of expensive shared storage.

Before you launch into this installation, here are a few things to consider.

- The finished system includes the host operating system, two guest operating systems, two sets of Oracle Clusterware, two ASM instances and two Database instances all on a single server. As you can imagine, this requires a significant amount of disk space, CPU and memory. I tried this installation on a 3.4 GHz Pentium 4 with 2 GB of memory and it failed abysmally. When I used a dual 3.0 GHz Xeon server with 4 GB of memory it worked fine, but it wasn’t exactly fast.

- This procedure provides a bare-bones installation to get the RAC working. There is no redundancy in the Clusterware installation or the ASM installation. To add it, create additional shared disks (Normal redundancy needs two OCR locations, three voting disks and at least two ASM failure groups) and select the «Normal» redundancy option when it is offered. Of course, this will take more disk space.

- During the virtual disk creation, I always choose not to preallocate the disk space. This makes virtual disk access slower during the installation, but saves on wasted disk space.

- This is not, and should not be considered, a production-ready system. It’s simply to allow you to get used to installing and using RAC.

Download Software

Download the following software.

VMware Server Installation

For this article, I used CentOS 4.3 as both the host and guest operating systems. Regardless of the host OS, the setup of the virtual machines should be similar.

First, install the VMware Server software. On Linux you do this with the following command as the root user.

Then finish the configuration by running the vmware-config.pl script as the root user. Most of the questions can be answered with the default response by pressing the return key. The output below shows my responses to the questions.

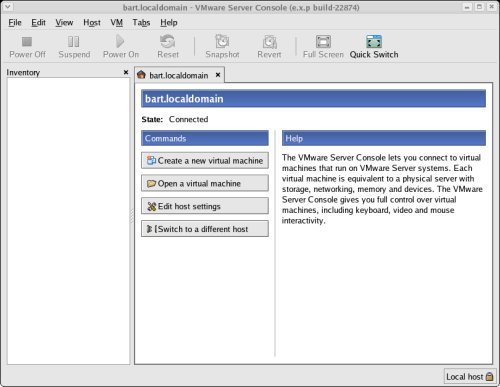

The VMware Server Console is started by issuing the command «vmware» at the command prompt, or by selecting it from the «System Tools» menu.

On the «Connect to Host» dialog, accept the «Local host» option by clicking the «Connect» button.

You are then presented with the main VMware Server Console screen.

The VMware Server is now installed and ready to use.

Virtual Machine Setup

Now we must define the two virtual RAC nodes. We can save time by defining one VM, then cloning it when it is installed.

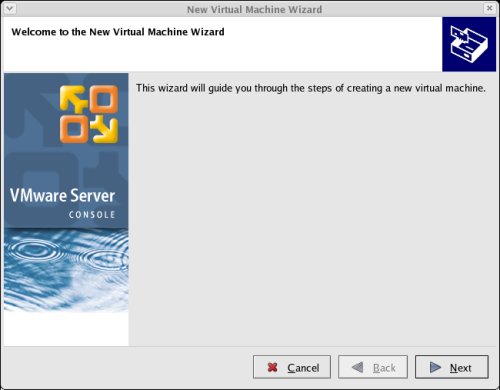

Click the «Create a new virtual machine» button to start the «New Virtual Machine Wizard». Click the «Next» button on the welcome page.

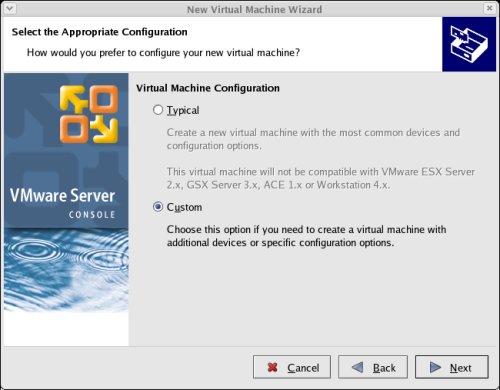

Select the «Custom» virtual machine configuration and click the «Next» button.

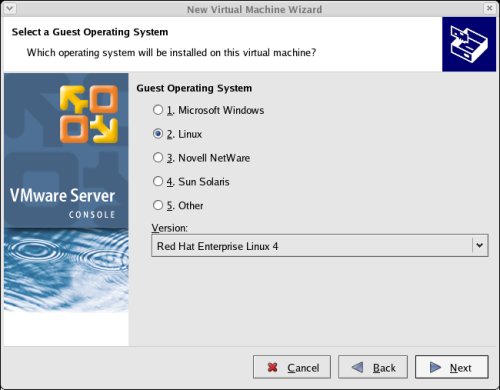

Select the «Linux» guest operating system option, and set the version to «Red Hat Enterprise Linux 4», then click the «Next» button.

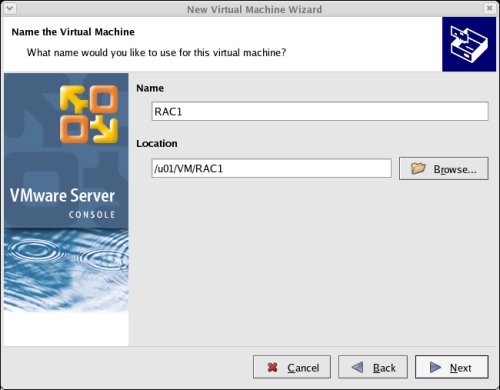

Enter the name «RAC1»; the location should default to «/u01/VM/RAC1». Then click the «Next» button.

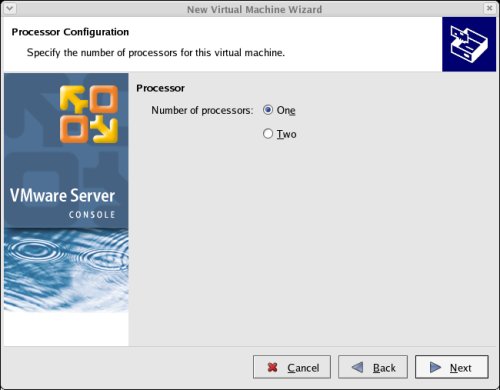

Select the required number of processors and click the «Next» button.

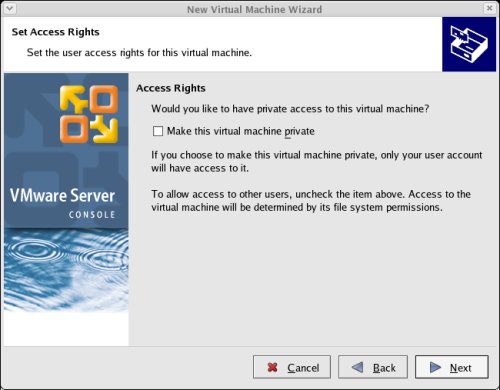

Uncheck the «Make this virtual machine private» checkbox and click the «Next» button.

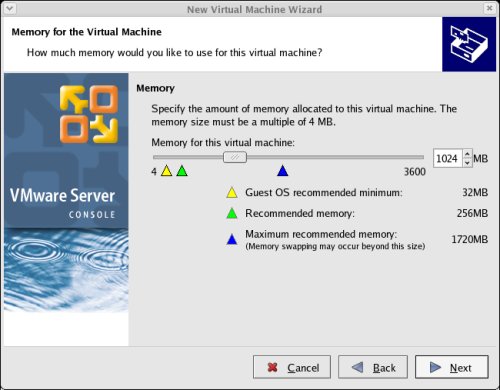

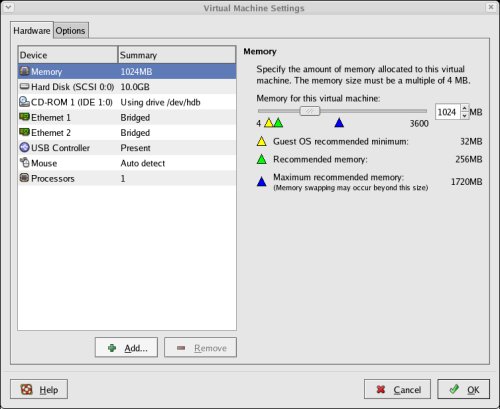

Select the amount of memory to associate with the virtual machine. Remember, you are going to need two instances, so don’t associate too much, but you will need approximately 1 GB (1024 MB) to complete the installation successfully.

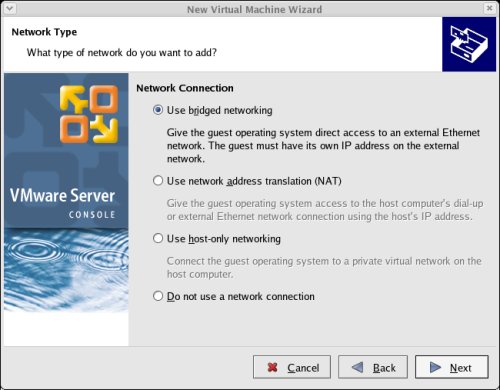

Accept the «Use bridged networking» option by clicking the «Next» button.

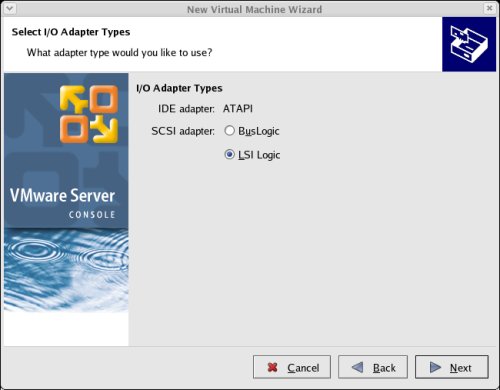

Accept the «LSI Logic» option by clicking the «Next» button.

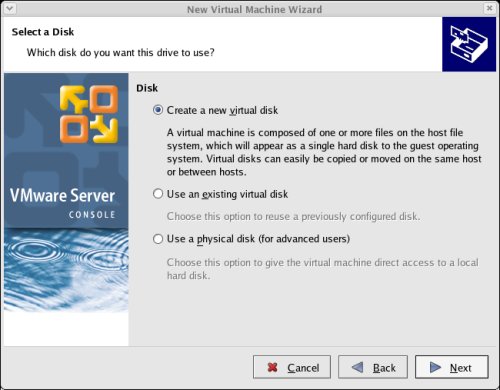

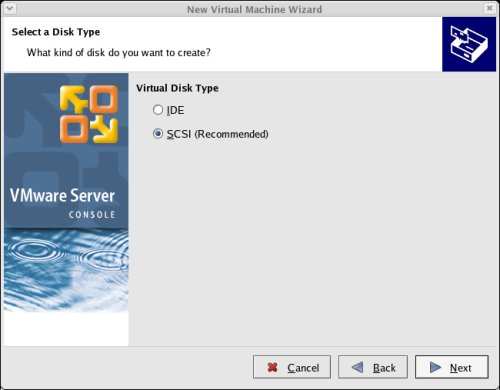

Select the «Create a new virtual disk» option and click the «Next» button.

Accept the «SCSI» option by clicking the «Next» button. It’s a virtual disk, so you can still use this option even if your physical disk is IDE or SATA.

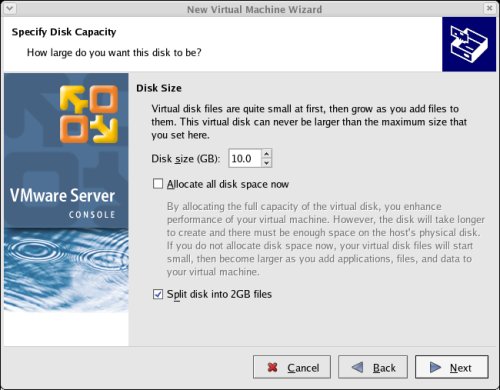

Set the disk size to «10.0» GB and uncheck the «Allocate all disk space now» option. Not preallocating the space makes disk access slower, but saves you wasting disk space.

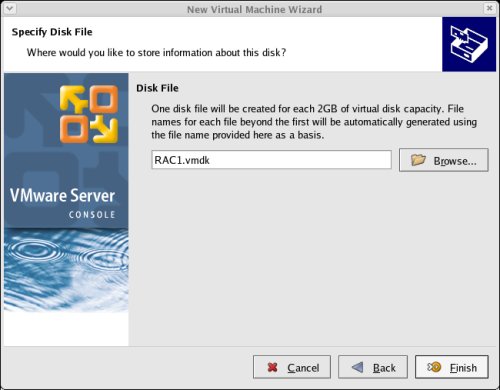

Accept «RAC1.vmdk» as the disk file name and complete the VM creation by clicking the «Finish» button.

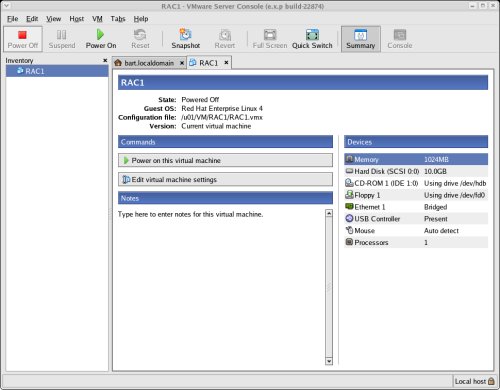

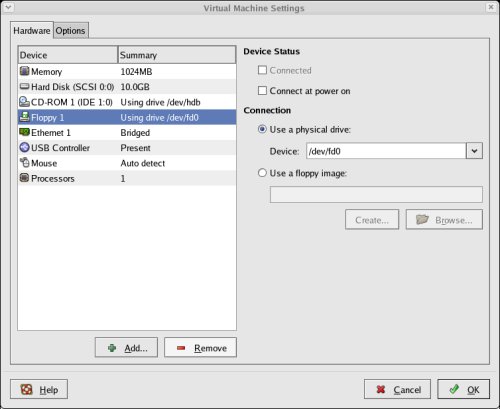

On the «VMware Server Console» screen, click the «Edit virtual machine settings» button.

On the «Virtual Machine Settings» screen, highlight the «Floppy 1» drive and click the «- Remove» button.

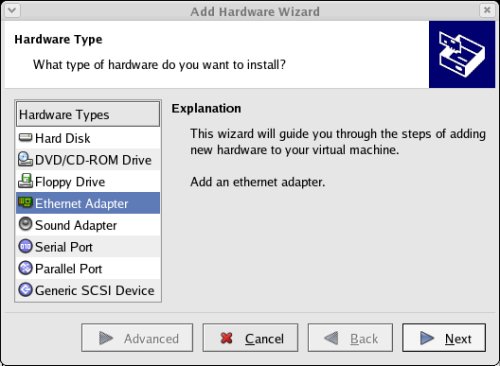

Click the «+ Add» button and select a hardware type of «Ethernet Adapter», then click the «Next» button.

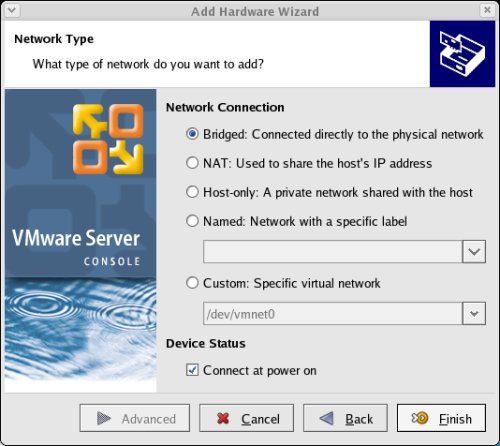

Accept the «Bridged» option by clicking the «Finish» button.

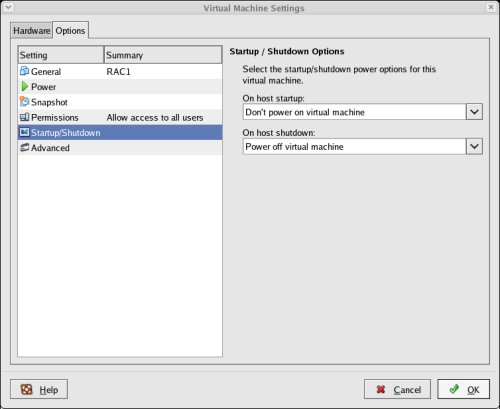

Click on the «Options» tab, highlight the «Startup/Shutdown» setting and select «Don’t power on virtual machine» for the «On host startup» option. Finish by clicking the «OK» button.

The virtual machine is now configured so we can start the guest operating system installation.

Guest Operating System Installation

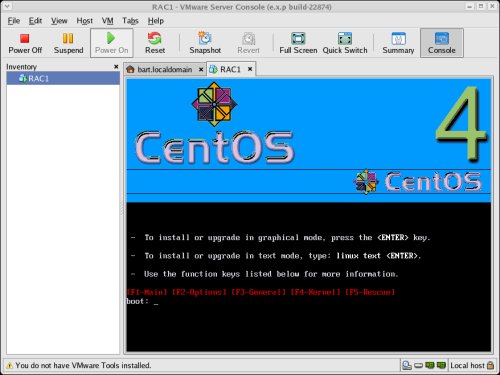

Place the first CentOS 4 disk in the CD drive and start the virtual machine by clicking the «Power on this virtual machine» button. The right pane of the VMware Server Console should display a boot loader, then the CentOS installation screen.

Continue through the CentOS 4 installation as you would for a normal server. A general pictorial guide to the installation can be found here. More specifically, it should be a server installation with a minimum of 2 GB of swap, SELinux disabled and the following package groups installed:

- X Window System

- GNOME Desktop Environment

- Editors

- Graphical Internet

- Server Configuration Tools

- FTP Server

- Development Tools

- Legacy Software Development

- Administration Tools

- System Tools

To be consistent with the rest of the article, the following information should be set during the installation.

- hostname: rac1.localdomain

- IP Address eth0: 192.168.2.101 (public address)

- Default Gateway eth0: 192.168.2.1 (public address)

- IP Address eth1: 192.168.0.101 (private address)

- Default Gateway eth1: none

You are free to change the IP addresses to suit your network, but remember to stay consistent with those adjustments throughout the rest of the article.

Once the basic installation is complete, install the following packages whilst logged in as the root user.

Oracle Installation Prerequisites

Perform the following steps whilst logged into the RAC1 virtual machine as the root user.

The «/etc/hosts» file must contain the following information.
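A sketch of the file, assuming the public and private addresses chosen during the OS installation. The «-priv» and «-vip» host names and the two virtual IP addresses (192.168.2.111 and 192.168.2.112) are hypothetical: pick any unused addresses on the public subnet. Keeping the node's own host name off the 127.0.0.1 line is generally advised, because the clusterware can otherwise resolve the node name to the loopback address.

```
127.0.0.1       localhost.localdomain   localhost
# Public (eth0)
192.168.2.101   rac1.localdomain        rac1
192.168.2.102   rac2.localdomain        rac2
# Private interconnect (eth1); "-priv" names are hypothetical
192.168.0.101   rac1-priv.localdomain   rac1-priv
192.168.0.102   rac2-priv.localdomain   rac2-priv
# Virtual IPs: hypothetical names and addresses, must be unused on the public subnet
192.168.2.111   rac1-vip.localdomain    rac1-vip
192.168.2.112   rac2-vip.localdomain    rac2-vip
```

The same file is needed on both nodes, so cloning the virtual machine later carries it across unchanged.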

Add the following lines to the «/etc/sysctl.conf» file.
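A sketch of the kernel settings, assuming the minimum values given in Oracle's 10g Release 2 installation guide for Linux. Treat them as minimums: if your system already has a higher value for a parameter, keep the higher one, and check the guide for your exact release.

```
# Shared memory: shmmax is commonly set to about half of physical RAM (assumption)
kernel.shmall = 2097152
kernel.shmmax = 2147483648
kernel.shmmni = 4096
# Semaphores: semmsl semmns semopm semmni
kernel.sem = 250 32000 100 128
fs.file-max = 65536
net.ipv4.ip_local_port_range = 1024 65000
# Socket buffer sizes used by the cluster interconnect
net.core.rmem_default = 262144
net.core.rmem_max = 262144
net.core.wmem_default = 262144
net.core.wmem_max = 262144
```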

Run the following command to change the current kernel parameters.

Add the following lines to the «/etc/security/limits.conf» file.
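A sketch of the shell limits for the «oracle» user, assuming the values recommended in Oracle's 10g Release 2 Linux installation guide (nproc is the number of processes, nofile the number of open file descriptors).

```
# <domain>  <type>  <item>   <value>
oracle      soft    nproc    2047
oracle      hard    nproc    16384
oracle      soft    nofile   1024
oracle      hard    nofile   65536
```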

Add the following line to the «/etc/pam.d/login» file, if it does not already exist.
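The line usually needed is the pam_limits session entry, so that the limits above are applied at login. A minimal sketch, assuming the module can be referenced as «pam_limits.so» (older guides give the full «/lib/security/pam_limits.so» path); the function name is hypothetical. It appends the entry only when no pam_limits session line exists yet, whatever its spacing or path.

```shell
#!/bin/bash
# Add a pam_limits session entry to a PAM file (default /etc/pam.d/login)
# unless one is already present. Hypothetical helper; run as root.
add_pam_limits() {
    local file="${1:-/etc/pam.d/login}"
    # Match an existing "session required [path/]pam_limits.so" line,
    # allowing any amount of whitespace and an optional directory prefix.
    if ! grep -Eq '^[[:space:]]*session[[:space:]]+required[[:space:]]+([^[:space:]]*/)?pam_limits\.so' "$file" 2>/dev/null; then
        printf 'session    required     pam_limits.so\n' >> "$file"
    fi
}
```

Running `add_pam_limits` a second time leaves the file unchanged, which makes it safe to include in a repeatable setup script.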

Disable SELinux by editing the «/etc/selinux/config» file, making sure the SELINUX flag is set as follows.
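The relevant line in «/etc/selinux/config» should read as follows. Edits to this file take effect at the next reboot.

```
SELINUX=disabled
```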

Alternatively, this alteration can be done using the GUI tool (Applications > System Settings > Security Level). Click on the SELinux tab and disable the feature.

Set the hangcheck kernel module parameters by adding the following line to the «/etc/modprobe.conf» file.
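A sketch of the line, assuming the values commonly recommended for 10g Release 2 in Oracle's Linux installation guide: hangcheck_tick is the interval in seconds between checks, and hangcheck_margin is the maximum delay in seconds tolerated before the node is reset.

```
options hangcheck-timer hangcheck_tick=30 hangcheck_margin=180
```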

To load the module immediately, execute «modprobe -v hangcheck-timer».

Create the new groups and users.

Create the directories in which the Oracle software will be installed.

During the installation, both RSH and RSH-Server were installed. Enable remote shell and rlogin by doing the following.

Create the «/etc/hosts.equiv» file as the root user.

Edit the «/etc/hosts.equiv» file to include all the RAC nodes:
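A sketch of the file contents, assuming the host names from the OS installation. Each line names a trusted host and the user who may connect from it without a password. If you also want RSH to work over the private interconnect names, add a similar line for each of them. The file should be owned by root and not writable by other users (for example mode 600).

```
rac1 oracle
rac2 oracle
```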

Log in as the oracle user and add the following lines at the end of the «.bash_profile» file.
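A sketch of typical settings, assuming the database Oracle Home is «/u01/app/oracle/product/10.2.0/db_1» (a hypothetical path: use whatever you enter in the installer). ORACLE_SID is RAC1 on the first node, matching the «RAC» SID prefix used later in the DBCA.

```shell
# Oracle environment for the oracle user (paths are assumptions)
ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE
ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1; export ORACLE_HOME
# Instance name on this node; RAC2 on the second node
ORACLE_SID=RAC1; export ORACLE_SID
ORACLE_TERM=xterm; export ORACLE_TERM
# Put the Oracle binaries on the search path
PATH=/usr/sbin:$ORACLE_HOME/bin:$PATH; export PATH
LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH
```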

Install VMware Client Tools

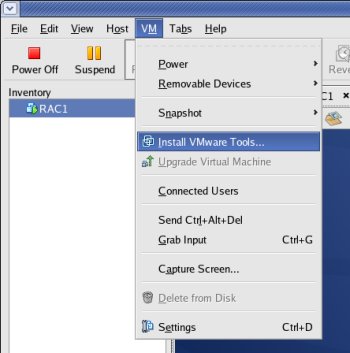

Log in as the root user on the RAC1 virtual machine, then select the «VM > Install VMware Tools...» option from the main VMware Server Console menu.

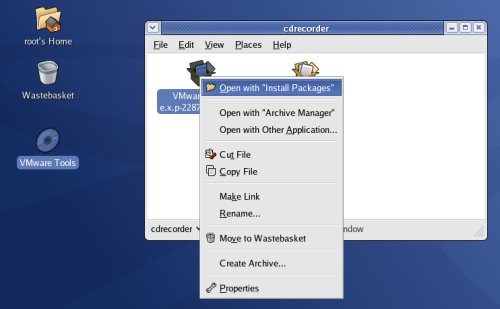

This should mount a virtual CD containing the VMware Tools software. Double-click on the CD icon labelled «VMware Tools» to open the CD. Right-click on the «.rpm» package and select the «Open with ‘Install Packages’» menu option.

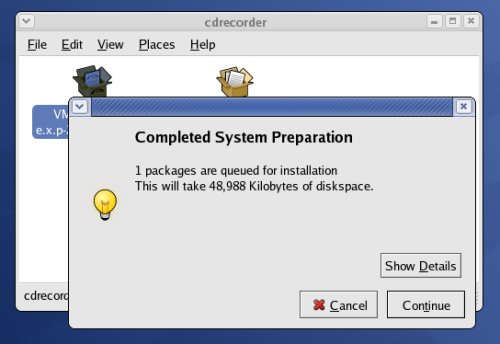

Click the «Continue» button on the «Completed System Preparation» screen and wait for the installation to complete.

Once the package is loaded, the CD should unmount automatically. You must then run the «vmware-config-tools.pl» script as the root user. The following listing is an example of the output you should expect.

The VMware client tools are now installed.

Create Shared Disks

Shut down the RAC1 virtual machine using the following command.

Create a directory on the host system to hold the shared virtual disks.

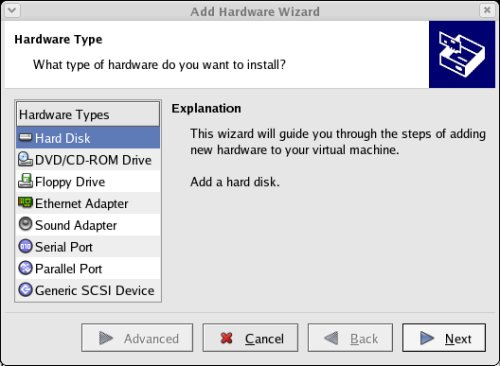

On the VMware Server Console, click the «Edit virtual machine settings» button. On the «Virtual Machine Settings» screen, click the «+ Add» button.

Select the hardware type of «Hard Disk» and click the «Next» button.

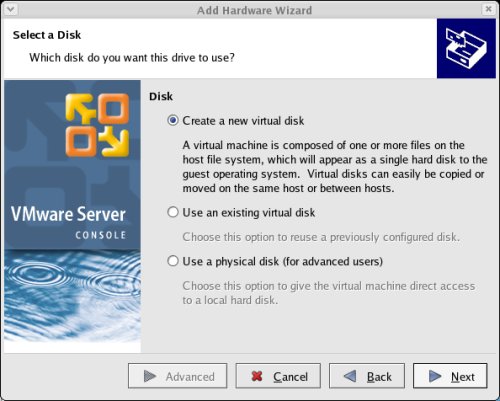

Accept the «Create a new virtual disk» option by clicking the «Next» button.

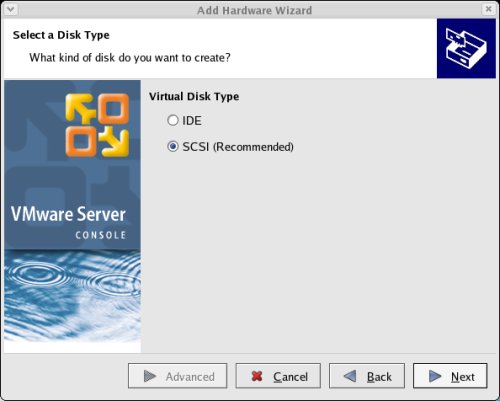

Accept the «SCSI» option by clicking the «Next» button.

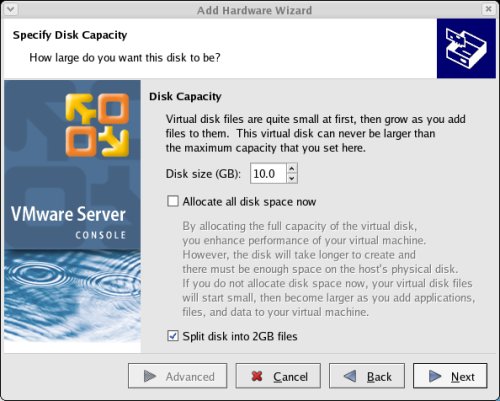

Set the disk size to «10.0» GB and uncheck the «Allocate all disk space now» option, then click the «Next» button.

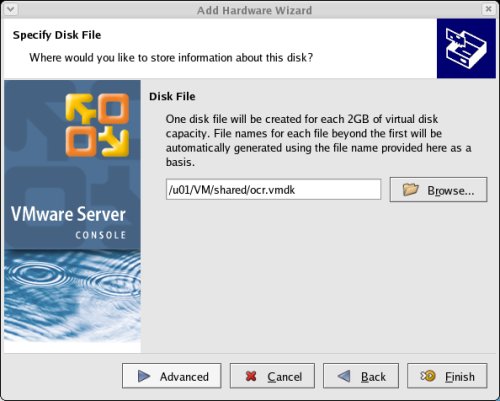

Set the disk name to «/u01/VM/shared/ocr.vmdk» and click the «Advanced» button.

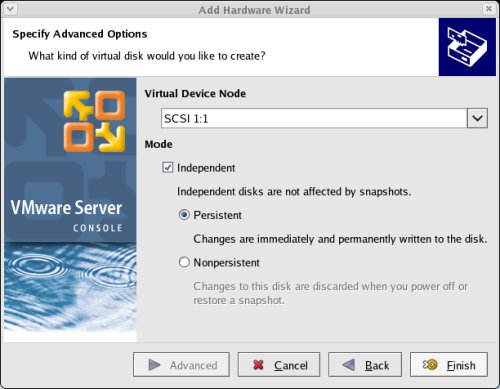

Set the virtual device node to «SCSI 1:1» and the mode to «Independent» and «Persistent», then click the «Finish» button.

Repeat the previous hard disk creation steps 4 more times, using the following values.

- File Name: /u01/VM/shared/votingdisk.vmdk; Virtual Device Node: SCSI 1:2; Mode: Independent and Persistent

- File Name: /u01/VM/shared/asm1.vmdk; Virtual Device Node: SCSI 1:3; Mode: Independent and Persistent

- File Name: /u01/VM/shared/asm2.vmdk; Virtual Device Node: SCSI 1:4; Mode: Independent and Persistent

- Virtual Device Node: SCSI 1:5; Mode: Independent and Persistent

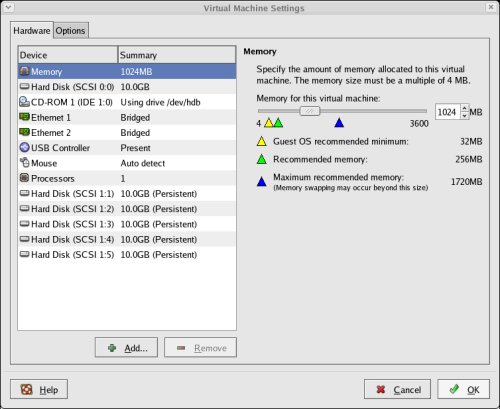

At the end of this process, the virtual machine should look something like the picture below.

Edit the contents of the «/u01/VM/RAC1/RAC1.vmx» file using a text editor, making sure the following entries are present. Some of the entries will already be present, some will not.
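A sketch of entries widely used in community guides for sharing SCSI disks between VMware Server guests. This is an assumption rather than an official VMware reference, so check it against your VMware Server version. The idea is to switch off disk locking and host-side caching, and to share the second SCSI bus (scsi1, which holds SCSI 1:1 to 1:5) between the virtual machines.

```
# Assumption: commonly used settings for shared disks in VMware Server RAC setups
disk.locking = "FALSE"
diskLib.dataCacheMaxSize = "0"
diskLib.dataCacheMaxReadAheadSize = "0"
diskLib.dataCacheMinReadAheadSize = "0"
diskLib.dataCachePageSize = "4096"
diskLib.maxUnsyncedWrites = "0"

# Second SCSI controller, shared between the guests
scsi1.present = "TRUE"
scsi1.virtualDev = "lsilogic"
scsi1.sharedBus = "virtual"

# The five shared disks created above
scsi1:1.deviceType = "disk"
scsi1:2.deviceType = "disk"
scsi1:3.deviceType = "disk"
scsi1:4.deviceType = "disk"
scsi1:5.deviceType = "disk"
```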

Start the RAC1 virtual machine by clicking the «Power on this virtual machine» button on the VMware Server Console. When the server has started, log in as the root user so you can partition the disks. The current disks can be seen by issuing the following commands.

Use the «fdisk» command to partition the disks sdb to sdf. The following output shows the expected fdisk output for the sdb disk.

In each case, the sequence of answers is «n», «p», «1», «Return», «Return», «p» and «w».
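Because every disk gets the same answers, they can be scripted. A sketch, assuming the five shared disks appear as «/dev/sdb» to «/dev/sdf» and that fdisk reads its answers from standard input when it is not attached to a terminal (it generally does, but check the output). The function names are hypothetical. Partitioning destroys existing data, so double-check the device names first.

```shell
#!/bin/bash
# Print the answer sequence: new (n), primary (p), partition 1,
# default first and last cylinders (two Returns), print (p), write (w).
fdisk_answers() {
    printf 'n\np\n1\n\n\np\nw\n'
}

# Create one primary partition on each shared disk (run as root).
# Assumption: sdb..sdf are the shared disks; any data on them is lost.
partition_shared_disks() {
    local disk
    for disk in /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf; do
        fdisk_answers | fdisk "$disk"
    done
}
```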

Once all the disks are partitioned, the results can be seen by repeating the previous «ls» command.

Edit the «/etc/sysconfig/rawdevices» file, adding the following lines.
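A sketch of the bindings, assuming SCSI 1:1 to 1:5 appear in order as «sdb» to «sdf». This matches the layout used later: the OCR on «/dev/raw/raw1», the voting disk on «/dev/raw/raw2» and the ASM disks on «/dev/raw/raw3» to «/dev/raw/raw5».

```
# <raw device>  <block device>
/dev/raw/raw1 /dev/sdb1
/dev/raw/raw2 /dev/sdc1
/dev/raw/raw3 /dev/sdd1
/dev/raw/raw4 /dev/sde1
/dev/raw/raw5 /dev/sdf1
```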

Restart the rawdevices service using the following command.

Create some symbolic links to the raw devices. This is not really necessary, but it acts as a reminder of the true locations.

Run the following commands and add them to the «/etc/rc.local» file.

The shared disks are now configured.

Clone the Virtual Machine

The current version of VMware Server does not include an option to clone a virtual machine, but the following steps illustrate how this can be achieved manually.

Shut down the RAC1 virtual machine using the following command.

Copy the RAC1 virtual machine using the following command.

Edit the contents of the «/u01/VM/RAC2/RAC1.vmx» file, making the following change.
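The exact change isn't shown here. One plausible edit, assumed in this sketch, is to give the copy its own display name so the two machines can be told apart in the console.

```
# Assumption: rename the cloned VM as shown in the VMware Server Console
displayName = "RAC2"
```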

Ignore discrepancies with the file names in the «/u01/VM/RAC2» directory. This does not affect the action of the virtual machine.

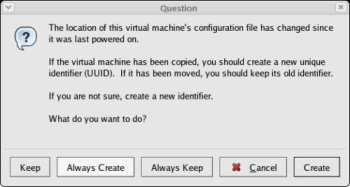

In the VMware Server Console, select the File > Open menu options and browse for the «/u01/VM/RAC2/RAC1.vmx» file. Once opened, the RAC2 virtual machine is visible on the console. Start the RAC2 virtual machine by clicking the «Power on this virtual machine» button and click the «Create» button on the subsequent «Question» screen.

Ignore any errors during the server startup. We are expecting the networking components to fail at this point.

Log in to the RAC2 virtual machine as the root user and start the «Network Configuration» tool (Applications > System Settings > Network).

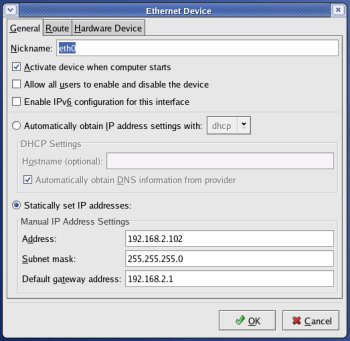

Highlight the «eth0» interface, click the «Edit» button on the toolbar and alter the IP address to «192.168.2.102» in the resulting screen.

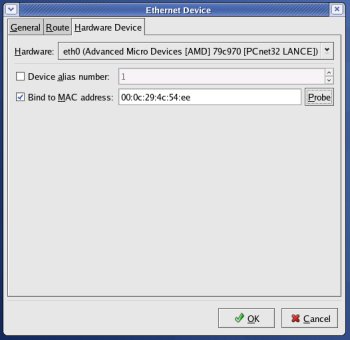

Click on the «Hardware Device» tab and click the «Probe» button. Then accept the changes by clicking the «OK» button.

Repeat the process for the «eth1» interface, this time setting the IP Address to «192.168.0.102».

Click on the «DNS» tab and change the host name to «rac2.localdomain», then click on the «Devices» tab.

Once you are finished, save the changes (File > Save) and activate the network interfaces by highlighting them and clicking the «Activate» button. Once activated, the screen should look like the following image.

Edit the «/home/oracle/.bash_profile» file on the RAC2 node to correct the ORACLE_SID value.
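The only change needed is the instance name. A minimal sketch with a hypothetical helper, assuming the profile has a line starting with «ORACLE_SID=RAC1» and that GNU sed is available (as on CentOS).

```shell
#!/bin/bash
# Switch ORACLE_SID from RAC1 to RAC2 in the oracle user's profile.
fix_oracle_sid() {
    local profile="${1:-/home/oracle/.bash_profile}"
    # -i edits in place; \b stops RAC1 matching inside e.g. RAC10 (GNU sed).
    sed -i 's/^ORACLE_SID=RAC1\b/ORACLE_SID=RAC2/' "$profile"
}
```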

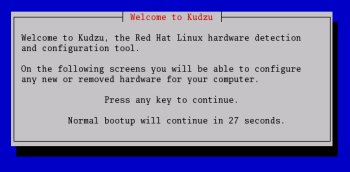

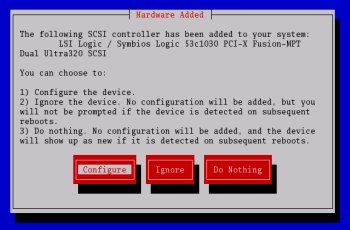

Start the RAC1 virtual machine and restart the RAC2 virtual machine. Whilst starting up, the «Kudzu» detection screen may be displayed.

Press a key and accept the configuration change on the following screen.

When both nodes have started, check they can both ping all the public and private IP addresses using the following commands.

At this point the virtual IP addresses defined in the /etc/hosts file will not work, so don’t bother testing them.

In the original installation I used RSH for inter-node communication. If you wish to use SSH instead, perform the following configurations. If you prefer to use RSH, jump straight to the runcluvfy.sh note.

Configure SSH on each node in the cluster. Log in as the «oracle» user and perform the following tasks on each node.

The RSA public key is written to the «~/.ssh/id_rsa.pub» file and the private key to a separate file in the same «~/.ssh» directory.

Log in as the «oracle» user on RAC1, generate an «authorized_keys» file on RAC1 and copy it to RAC2 using the following commands.

Next, log in as the «oracle» user on RAC2 and perform the following commands.

The «authorized_keys» file on both servers now contains the public keys generated on all RAC nodes.

To enable SSH user equivalency on the cluster member nodes issue the following commands on each node.

You should now be able to SSH and SCP between servers without entering passwords.

Before installing the clusterware, check the prerequisites have been met using the «runcluvfy.sh» utility in the clusterware root directory.

If you get any failures be sure to correct them before proceeding.

It’s a good idea to take a snapshot of the virtual machines, so you can repeat the following stages if you run into any problems. To do this, shut down both virtual machines and issue the following commands.
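A minimal sketch of the snapshot step, assuming the article's layout (both VM directories and the shared disks under «/u01/VM») and a plain tar archive. The function and archive names are hypothetical. Both machines must be powered off so the virtual disks are in a consistent state.

```shell
#!/bin/bash
# Archive RAC1, RAC2 and the shared disks into <vm_root>/<name>.tar.
snapshot_vms() {
    local vm_root="${1:-/u01/VM}" name="${2:-RAC-snapshot}"
    ( cd "$vm_root" && tar -cf "$name.tar" RAC1 RAC2 shared )
}

# Roll back: remove the current VM files and unpack the archive.
restore_vms() {
    local vm_root="${1:-/u01/VM}" name="${2:-RAC-snapshot}"
    ( cd "$vm_root" && rm -rf RAC1 RAC2 shared && tar -xf "$name.tar" )
}
```

For example, `snapshot_vms /u01/VM RAC-after-clone` before installing the clusterware, and `restore_vms /u01/VM RAC-after-clone` to return to that point.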

The virtual machine setup is now complete.

Install the Clusterware Software

Start the RAC1 and RAC2 virtual machines, log in to RAC1 as the oracle user and start the Oracle installer.

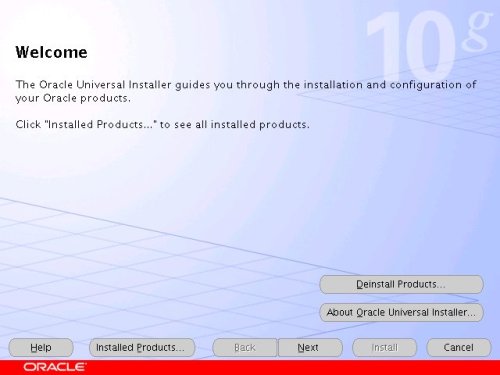

On the «Welcome» screen, click the «Next» button.

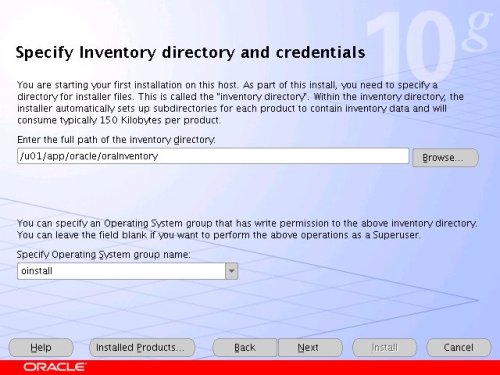

Accept the default inventory location by clicking the «Next» button.

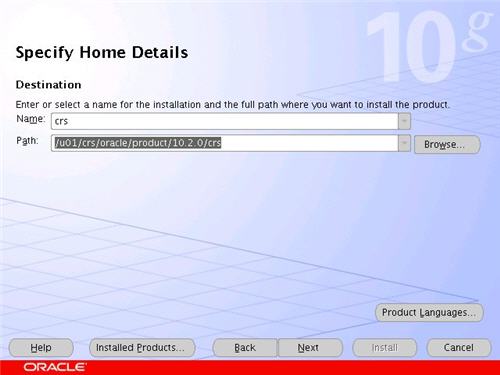

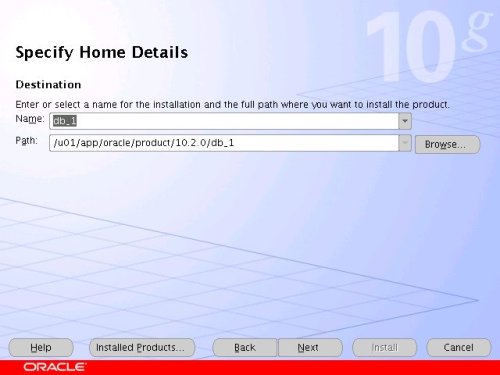

Enter the appropriate name and path for the Oracle Home and click the «Next» button.

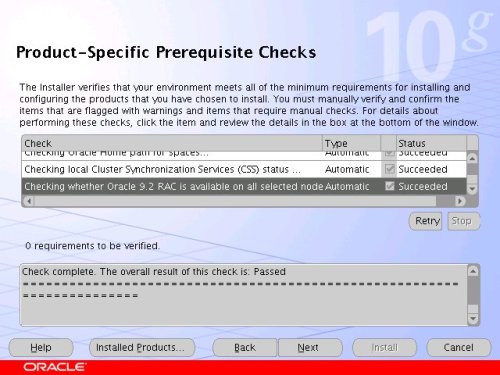

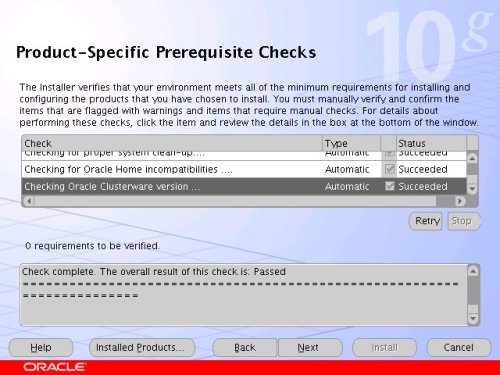

Wait while the prerequisite checks are done. If you have any failures correct them and retry the tests before clicking the «Next» button.

You can choose to ignore the warnings from the prerequisite checks and click the «Next» button. If you do, you will also need to ignore the subsequent warning message by clicking the «Yes» button.

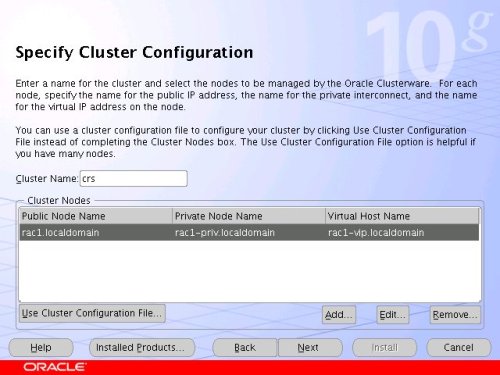

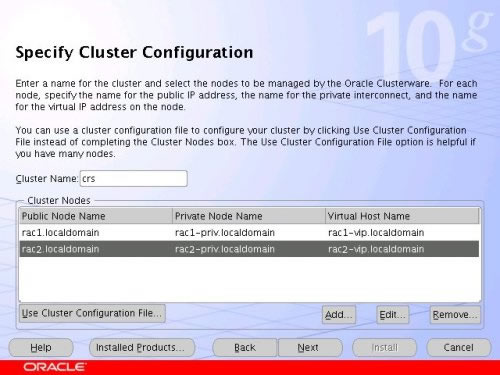

The «Specify Cluster Configuration» screen shows only the RAC1 node in the cluster. Click the «Add» button to continue.

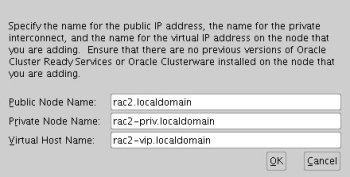

Enter the details for the RAC2 node and click the «OK» button.

Click the «Next» button to continue.

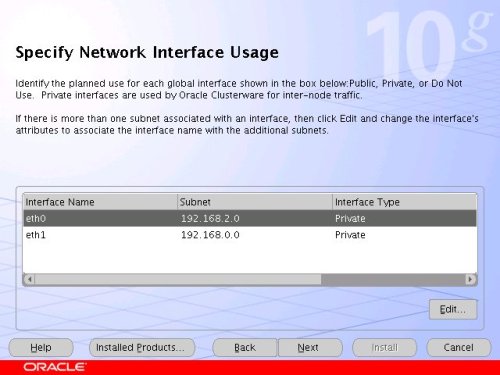

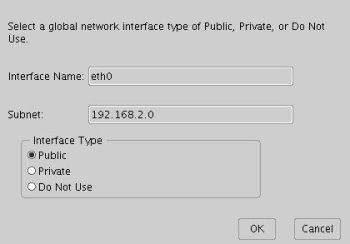

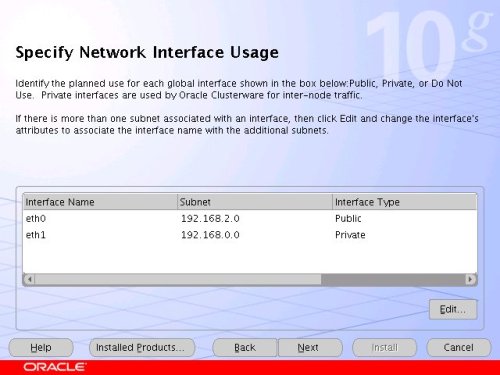

The «Specify Network Interface Usage» screen defines how each network interface will be used. Highlight the «eth0» interface and click the «Edit» button.

Set the «eth0» interface type to «Public» and click the «OK» button.

Leave the «eth1» interface as private and click the «Next» button.

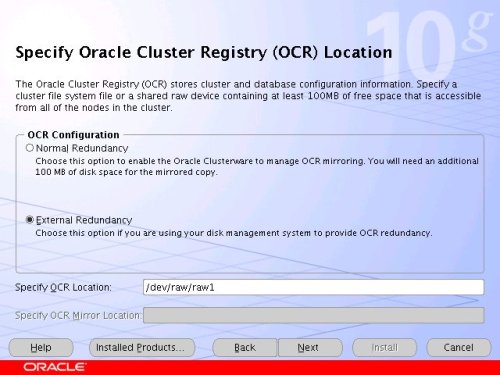

Click the «External Redundancy» option, enter «/dev/raw/raw1» as the OCR Location and click the «Next» button. To have greater redundancy we would need to define another shared disk for an alternate location.

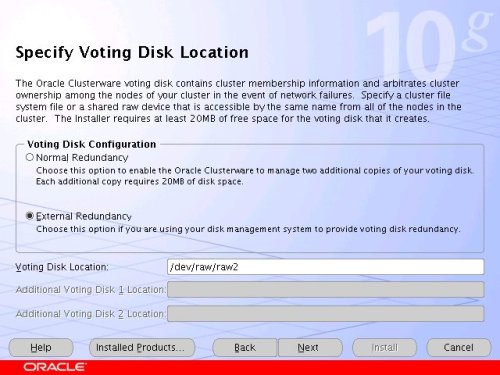

Click the «External Redundancy» option, enter «/dev/raw/raw2» as the Voting Disk Location and click the «Next» button. To have greater redundancy we would need to define another shared disk for an alternate location.

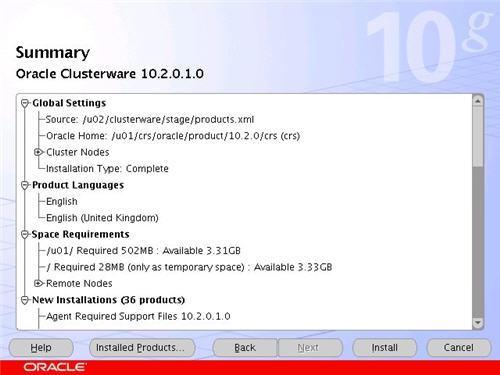

On the «Summary» screen, click the «Install» button to continue.

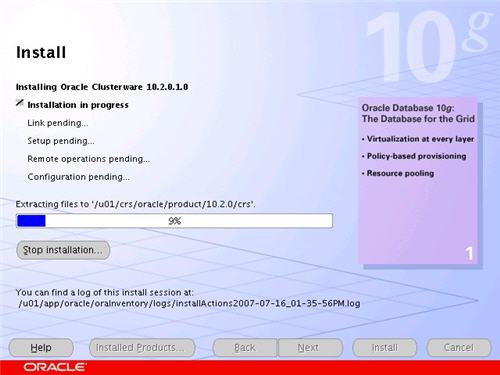

Wait while the installation takes place.

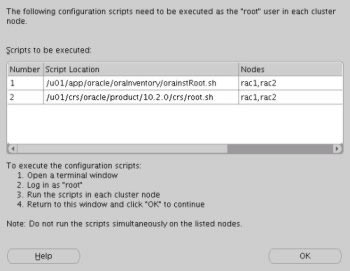

Once the install is complete, run the orainstRoot.sh and root.sh scripts on both nodes as directed on the following screen.

The output from the orainstRoot.sh file should look something like that listed below.

The output of the root.sh will vary a little depending on the node it is run on. The following text is the output from the RAC1 node.

Ignore the directory ownership warnings. We should really use a separate directory structure for the clusterware so it can be owned by the root user, but it has little effect on the finished results.

The output from the RAC2 node is listed below.

Here you can see that some of the configuration steps are omitted as they were done by the first node. In addition, the final part of the script ran the Virtual IP Configuration Assistant (VIPCA) in silent mode, but it failed. This is because my public IP addresses are actually within the «192.168.0.0» to «192.168.255.255» range, which is a private IP range. If you were using public («legal») IP addresses you would not see this and could skip the following VIPCA steps.

Run the VIPCA manually as the root user on the RAC2 node using the following command.

Click the «Next» button on the VIPCA welcome screen.

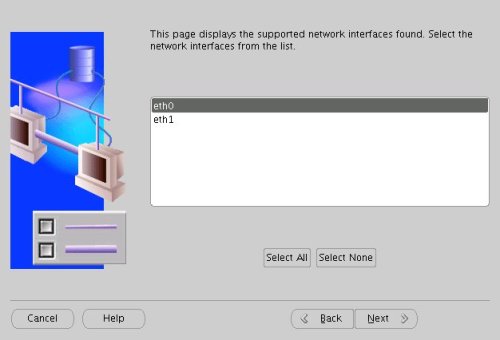

Highlight the «eth0» interface and click the «Next» button.

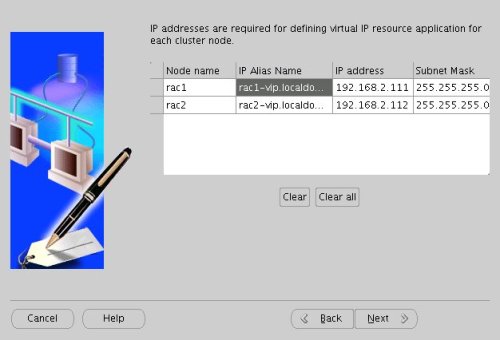

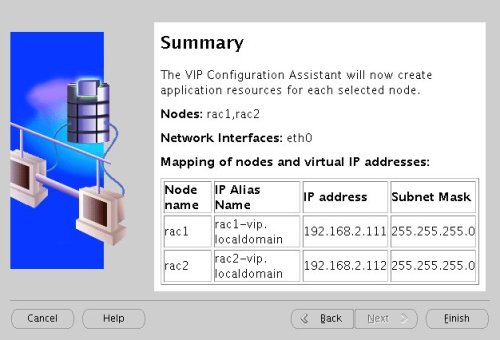

Enter the virtual IP alias and address for each node. Once you enter the first alias, the remaining values should default automatically. Click the «Next» button to continue.

Accept the summary information by clicking the «Finish» button.

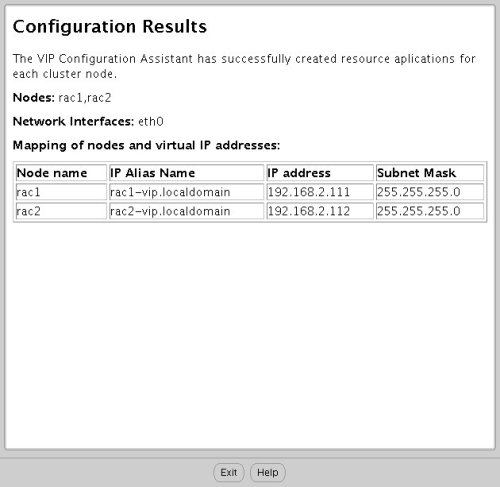

Wait until the configuration is complete, then click the «OK» button.

Accept the VIPCA results by clicking the «Exit» button.

You should now return to the «Execute Configuration Scripts» screen on RAC1 and click the «OK» button.

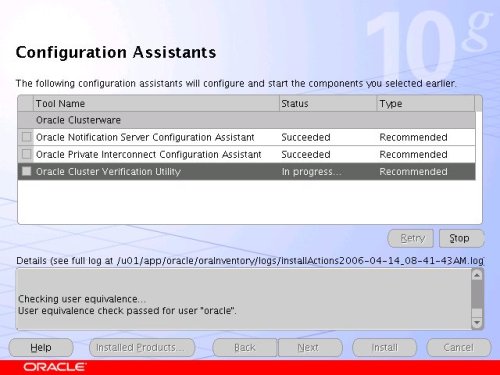

Wait for the configuration assistants to complete.

When the installation is complete, click the «Exit» button to leave the installer.

It’s a good idea to take a snapshot of the virtual machines, so you can repeat the following stages if you run into any problems. To do this, shut down both virtual machines and issue the following commands.

The clusterware installation is now complete.

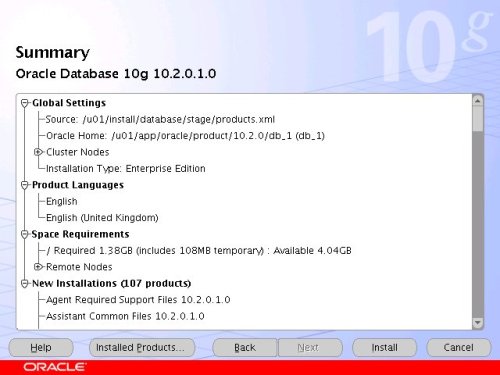

Install the Database Software and Create an ASM Instance

Start the RAC1 and RAC2 virtual machines, log in to RAC1 as the oracle user and start the Oracle installer.

On the «Welcome» screen, click the «Next» button.

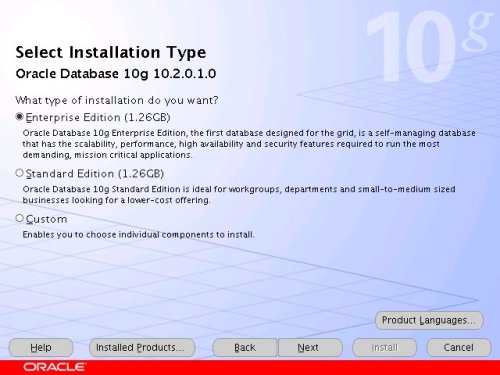

Select the «Enterprise Edition» option and click the «Next» button.

Enter the name and path for the Oracle Home and click the «Next» button.

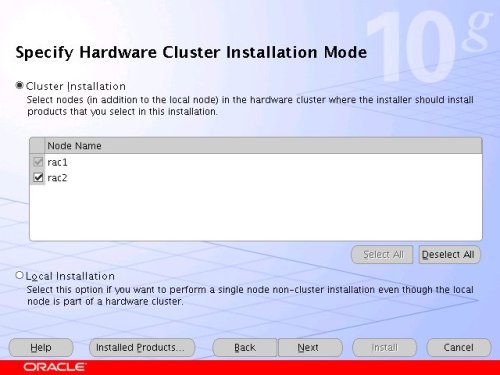

Select the «Cluster Install» option and make sure both RAC nodes are selected, then click the «Next» button.

Wait while the prerequisite checks are done. If you have any failures correct them and retry the tests before clicking the «Next» button.

You can choose to ignore the warnings from the prerequisite checks and click the «Next» button. If you do, you will also need to ignore the subsequent warning message by clicking the «Yes» button.

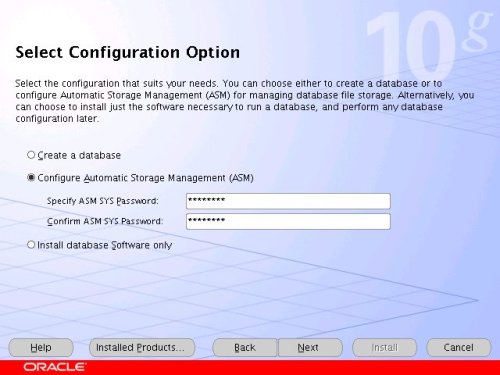

Select the «Configure Automatic Storage Management (ASM)» option, enter the SYS password for the ASM instance, then click the «Next» button.

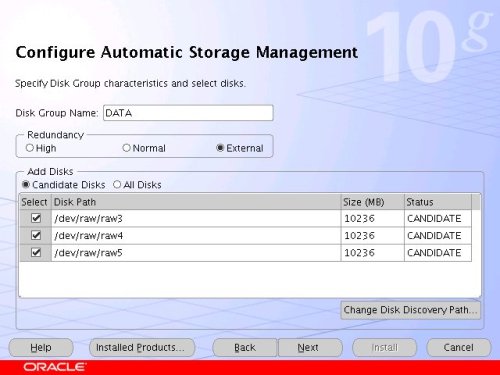

Select the «External» redundancy option (no mirroring), select all three raw disks (raw3, raw4 and raw5), then click the «Next» button.

On the «Summary» screen, click the «Install» button to continue.

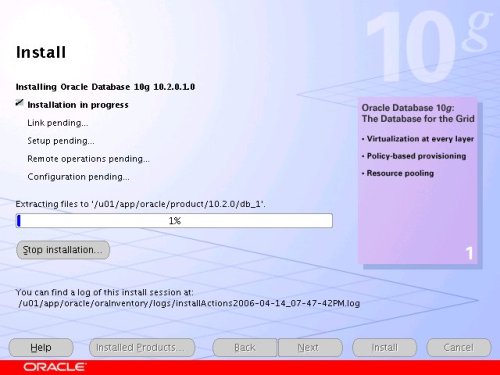

Wait while the database software installs.

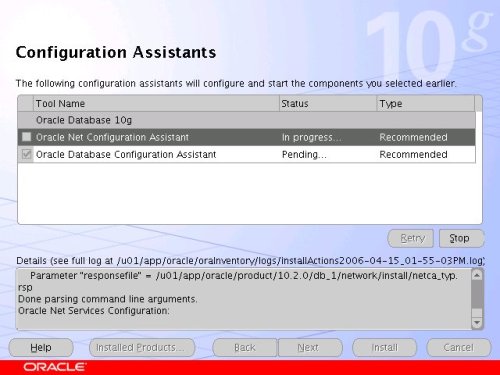

Once the installation is complete, wait while the configuration assistants run.

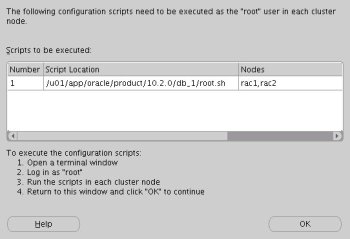

Execute the «root.sh» scripts on both nodes, as instructed on the «Execute Configuration scripts» screen, then click the «OK» button.

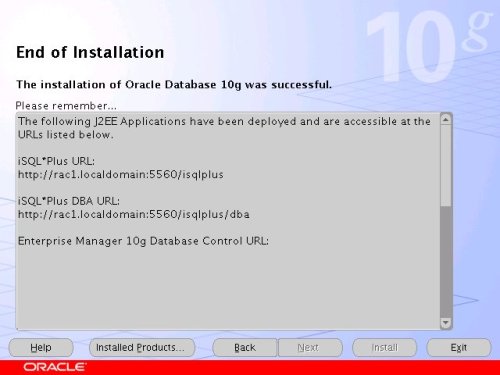

When the installation is complete, click the «Exit» button to leave the installer.

It’s a good idea to take a snapshot of the virtual machines, so you can repeat the following stages if you run into any problems. To do this, shut down both virtual machines and issue the following commands.

The database software installation and ASM creation step is now complete.

Create a Database using the DBCA

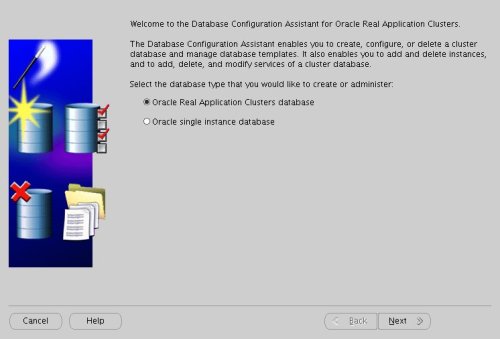

Start the RAC1 and RAC2 virtual machines, log in to RAC1 as the oracle user and start the Database Configuration Assistant.

On the «Welcome» screen, select the «Oracle Real Application Clusters database» option and click the «Next» button.

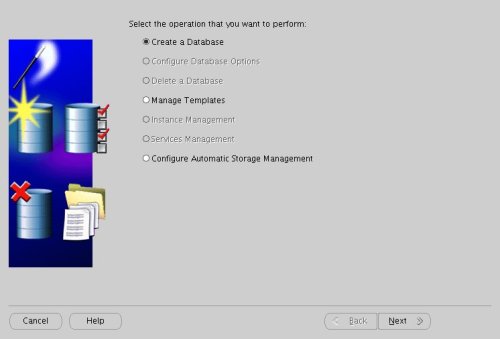

Select the «Create a Database» option and click the «Next» button.

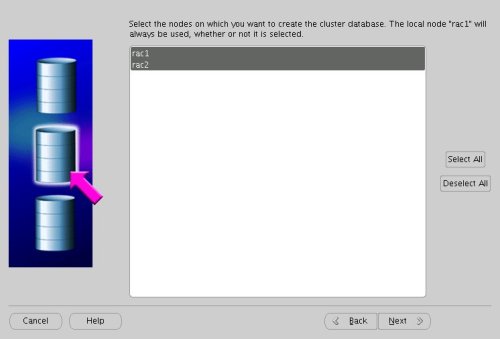

Highlight both RAC nodes and click the «Next» button.

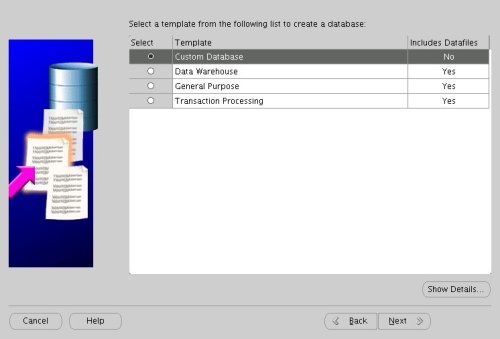

Select the «Custom Database» option and click the «Next» button.

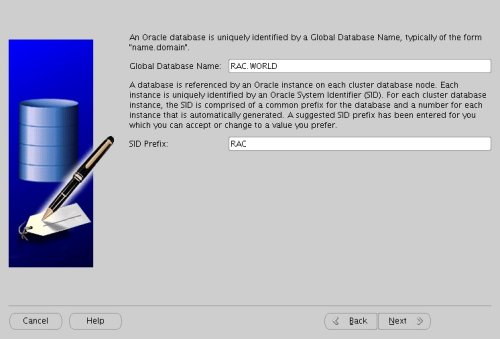

Enter the values «RAC.WORLD» and «RAC» for the Global Database Name and SID Prefix respectively, then click the «Next» button.

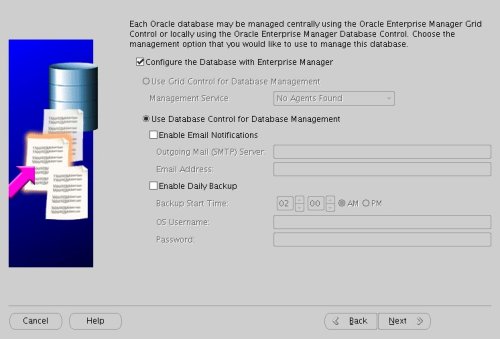

Accept the management options by clicking the «Next» button. If you are attempting the installation on a server with limited memory, you may prefer not to configure Enterprise Manager at this time.

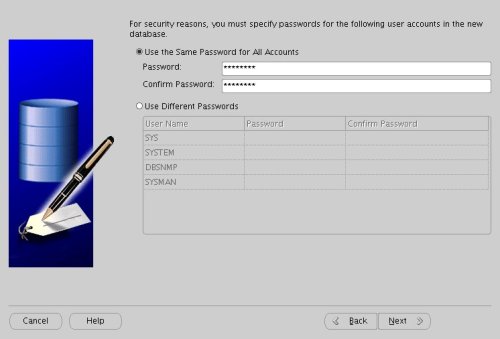

Enter database passwords then click the «Next» button.

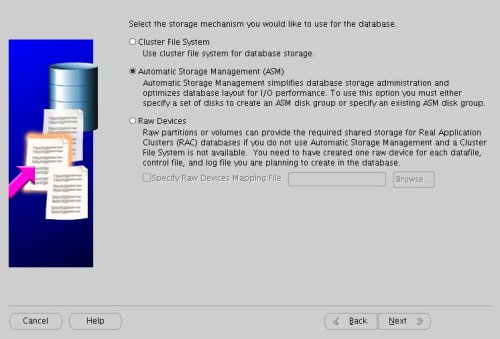

Select the «Automatic Storage Management (ASM)» option, then click the «Next» button.

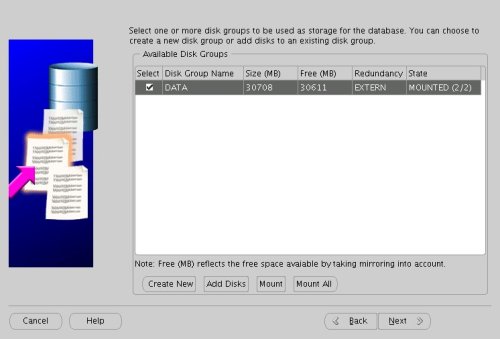

Select the «DATA» disk group, then click the «Next» button.

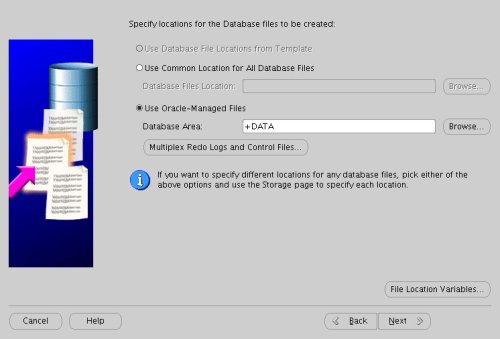

Accept the «Use Oracle-Managed Files» database location by clicking the «Next» button.

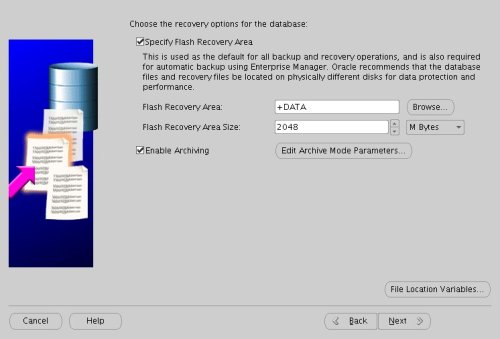

Check both the «Specify Flash Recovery Area» and «Enable Archiving» options. Enter «+DATA» as the Flash Recovery Area, then click the «Next» button.

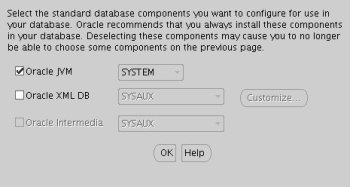

Uncheck all but the «Enterprise Manager Repository» option, then click the «Standard Database Components...» button.

Uncheck all but the «Oracle JVM» option, then click the «OK» button, followed by the «Next» button on the previous screen. If you are attempting the installation on a server with limited memory, you may prefer not to install the JVM at this time.

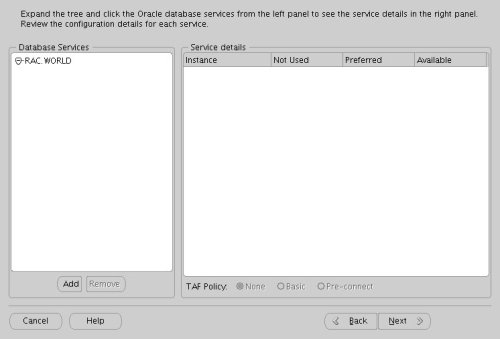

Accept the current database services configuration by clicking the «Next» button.

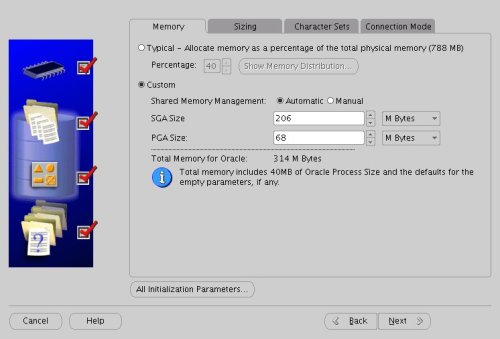

Select the «Custom» memory management option and accept the default settings by clicking the «Next» button.

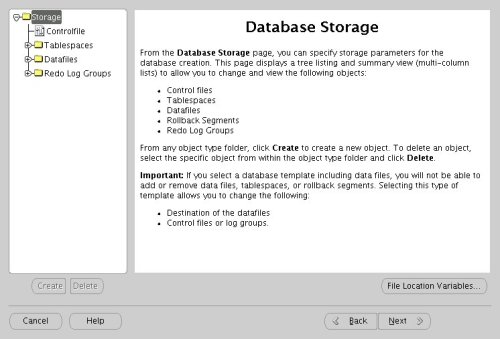

Accept the database storage settings by clicking the «Next» button.

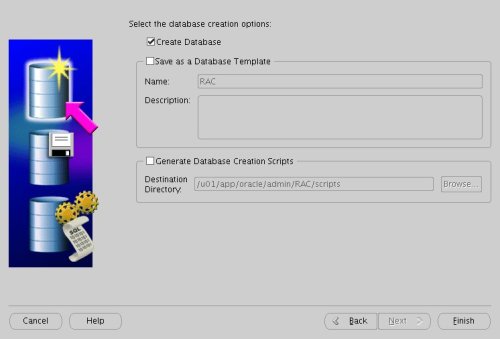

Accept the database creation options by clicking the «Finish» button.

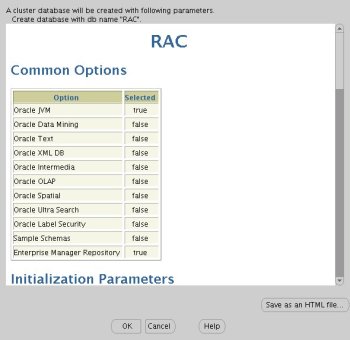

Accept the summary information by clicking the «OK» button.

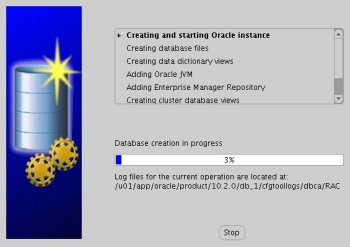

Wait while the database is created.

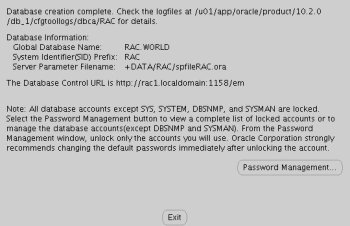

Once the database creation is complete you are presented with the following screen. Make a note of the information on the screen and click the «Exit» button.

The RAC database creation is now complete.

TNS Configuration

Once the installation is complete, the «$ORACLE_HOME/network/admin/listener.ora» file on each RAC node will contain entries similar to the following.

The «$ORACLE_HOME/network/admin/tnsnames.ora» file on each RAC node will contain entries similar to the following.
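A sketch of the kind of entries involved, assuming the default listener port 1521 and the hypothetical «rac1-vip» and «rac2-vip» virtual host names. The service name and instance names come from the DBCA settings above. Your generated file will differ in detail.

```
# Load-balanced connection to the database service on either node
RAC =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))
    (ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))
    (LOAD_BALANCE = yes)
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = RAC.WORLD)
    )
  )

# Direct connection to the first instance only
RAC1 =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = RAC.WORLD)
      (INSTANCE_NAME = RAC1)
    )
  )

# Direct connection to the second instance only
RAC2 =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = RAC.WORLD)
      (INSTANCE_NAME = RAC2)
    )
  )
```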

This configuration allows direct connections to a specific instance, or load-balanced connections to the main service.

Check the Status of the RAC

There are several ways to check the status of the RAC. The srvctl utility shows the current configuration and status of the RAC database.

The V$ACTIVE_INSTANCES view can also display the current status of the instances.

Finally, the GV$ views allow you to display global information for the whole RAC.

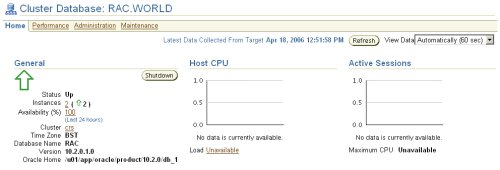

If you have configured Enterprise Manager, it can be used to view the configuration and current status of the database.

Direct and Asynchronous I/O

Remember to use direct I/O and asynchronous I/O to improve performance. Direct I/O has been supported over NFS for some time, but support for asynchronous I/O over NFS was only introduced in RHEL 4 Update 3 (and its clones), so you need to use an up-to-date version of your Linux distribution to take advantage of this feature.

You can get details about direct and asynchronous I/O by following the link.
