- About Processes and Threads

- Controlling Processes and Threads in WinDbg

- Opening the Processes and Threads Window

- Using the Processes and Threads Window

- Additional Information

- Controlling Processes and Threads

- Displaying Processes and Threads

- Setting the Current Process and Thread

- Freezing and Suspending Threads

- Threads and Processes in Other Commands

- Multiple Systems

- Processes, Threads, and Apartments

- The Apartment and the COM Threading Architecture

About Processes and Threads

Each process provides the resources needed to execute a program. A process has a virtual address space, executable code, open handles to system objects, a security context, a unique process identifier, environment variables, a priority class, minimum and maximum working set sizes, and at least one thread of execution. Each process is started with a single thread, often called the primary thread, but can create additional threads from any of its threads.

A thread is the entity within a process that can be scheduled for execution. All threads of a process share its virtual address space and system resources. In addition, each thread maintains exception handlers, a scheduling priority, thread local storage, a unique thread identifier, and a set of structures the system will use to save the thread context until it is scheduled. The thread context includes the thread’s set of machine registers, the kernel stack, a thread environment block, and a user stack in the address space of the thread’s process. Threads can also have their own security context, which can be used for impersonating clients.
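The sharing rules above can be sketched in a few lines. This is a minimal illustration that uses Python's `threading` module rather than the Windows API; the names `run_workers`, `shared`, and `tls` are made up for the example. Every thread writes to the same dictionary (shared address space), while each keeps a private value in thread local storage and reports its own thread identifier.

```python
import threading

def run_workers(names):
    shared = {}                              # one object, visible to every thread
    tls = threading.local()                  # thread local storage
    lock = threading.Lock()
    barrier = threading.Barrier(len(names))  # keeps all threads alive at the same time

    def worker(name):
        tls.value = name                     # private to the calling thread
        barrier.wait()                       # every thread has stored its own value by now
        with lock:
            # tls.value is still this thread's value, not the last one written
            shared[name] = (threading.get_ident(), tls.value)

    threads = [threading.Thread(target=worker, args=(n,)) for n in names]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared
```

Calling `run_workers(["a", "b", "c"])` returns one entry per thread, each holding that thread's identifier and the value it alone stored in thread local storage.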

Microsoft Windows supports preemptive multitasking, which creates the effect of simultaneous execution of multiple threads from multiple processes. On a multiprocessor computer, the system can simultaneously execute as many threads as there are processors on the computer.

A job object allows groups of processes to be managed as a unit. Job objects are namable, securable, sharable objects that control attributes of the processes associated with them. Operations performed on the job object affect all processes associated with the job object.

An application can use the thread pool to reduce the number of application threads and provide management of the worker threads. Applications can queue work items, associate work with waitable handles, automatically queue based on a timer, and bind with I/O.
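As a rough analogy only, the following sketch queues work items to a pool of worker threads with Python's `concurrent.futures.ThreadPoolExecutor`. It is not the Windows thread pool API, and `checksum` and `run_work_items` are hypothetical names chosen for the example. The point it illustrates is that the application submits work and a small, managed set of worker threads runs it.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def checksum(data):
    """A stand-in work item: sum of the bytes, modulo 256."""
    return sum(data) % 256

def run_work_items(items, max_workers=2):
    worker_names = set()

    def tracked(data):
        # Record which pool thread ran this item.
        worker_names.add(threading.current_thread().name)
        return checksum(data)

    # The pool owns the worker threads; the application only queues work.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(tracked, items))
    return results, worker_names
```

However many items are queued, no more than `max_workers` threads are used to run them.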

User-mode scheduling (UMS) is a lightweight mechanism that applications can use to schedule their own threads. An application can switch between UMS threads in user mode without involving the system scheduler and regain control of the processor if a UMS thread blocks in the kernel. Each UMS thread has its own thread context instead of sharing the thread context of a single thread. The ability to switch between threads in user mode makes UMS more efficient than thread pools for short-duration work items that require few system calls.

A fiber is a unit of execution that must be manually scheduled by the application. Fibers run in the context of the threads that schedule them. Each thread can schedule multiple fibers. In general, fibers do not provide advantages over a well-designed multithreaded application. However, using fibers can make it easier to port applications that were designed to schedule their own threads.
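Manual scheduling can be pictured with generators, which, like fibers, run only when the application resumes them. The sketch below is an analogy under that assumption, not the Windows fiber API; `fiber` and `run_fibers` are illustrative names. A single thread runs a round-robin scheduler that decides which unit of execution goes next.

```python
from collections import deque

def fiber(name, steps, log):
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield                      # hand control back to the scheduler

def run_fibers(specs):
    """Run (name, steps) units round-robin on the calling thread."""
    log = []
    ready = deque(fiber(name, steps, log) for name, steps in specs)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # resume the unit until its next yield
            ready.append(current)  # still has work, so schedule it again
        except StopIteration:
            pass                   # finished, so drop it
    return log
```

For example, `run_fibers([("A", 2), ("B", 3)])` returns `["A:0", "B:0", "A:1", "B:1", "B:2"]`: nothing is preempted, and the interleaving is decided entirely by the scheduler loop.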


Controlling Processes and Threads in WinDbg

In WinDbg, the Processes and Threads window displays information about the systems, processes, and threads that are being debugged. This window also enables you to select a new system, process, and thread to be active.

Opening the Processes and Threads Window

To open the Processes and Threads window, choose Processes and Threads from the View menu. You can also press ALT+9 or select the Processes and Threads button on the toolbar.

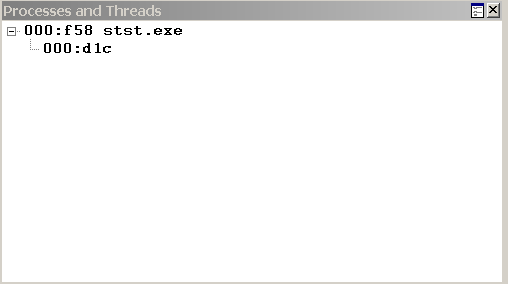

The following screen shot shows an example of a Processes and Threads window.

The Processes and Threads window displays a list of all processes that are currently being debugged. The threads in the process appear under each process. If the debugger is attached to multiple systems, the systems are shown at the top level of the tree, with the processes subordinate to them, and the threads subordinate to the processes.

Each system listing includes the server name and the protocol details. The system that the debugger is running on is identified as <Local>.

Each process listing includes the internal decimal process index that the debugger uses, the hexadecimal process ID, and the name of the application that is associated with the process.

Each thread listing includes the internal decimal thread index that the debugger uses and the hexadecimal thread ID.
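The fields in each listing can be modeled as below. The exact text layout that WinDbg uses is not given here, so the `index:id name` layout in `render` is an assumption for illustration, as are the tuple shapes and the sample values in the usage note. What the sketch shows is the distinction between the debugger's decimal index and the hexadecimal system ID.

```python
def render(processes):
    """Render a process/thread tree.

    processes: list of (index, process_id, name, threads),
    where threads is a list of (index, thread_id).
    """
    lines = []
    for index, process_id, name, threads in processes:
        # Decimal debugger index, hexadecimal process ID, application name.
        lines.append(f"{index:03d}:{process_id:x} {name}")
        for thread_index, thread_id in threads:
            # Decimal debugger index, hexadecimal thread ID.
            lines.append(f"    {thread_index:03d}:{thread_id:x}")
    return lines
```

With the made-up input `[(0, 0x1a2c, "notepad.exe", [(0, 0x1b40), (1, 0xfc)])]`, the function returns the lines `000:1a2c notepad.exe`, `    000:1b40`, and `    001:fc`.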

Using the Processes and Threads Window

In the Processes and Threads window, the current or active system, process, and thread appear in bold type. To make a new system, process, or thread active, select its line in the window.

The Processes and Threads window has a shortcut menu with additional commands. To access the menu, select and hold (or right-click) the title bar or select the icon near the upper-right corner of the window. The menu includes the following commands:

Move to new dock closes the Processes and Threads window and opens it in a new dock.

Always floating causes the window to remain undocked even if it is dragged to a docking location.

Move with frame causes the window to move when the WinDbg frame is moved, even if the window is undocked.

Additional Information

For other methods of displaying or controlling systems, see Debugging Multiple Targets. For other methods of displaying or controlling processes and threads, see Controlling Processes and Threads.

Controlling Processes and Threads

When you are performing user-mode debugging, you can activate, display, freeze, unfreeze, suspend, and unsuspend processes and threads.

The current or active process is the process that is currently being debugged. Similarly, the current or active thread is the thread that the debugger is currently controlling. The actions of many debugger commands are determined by the identity of the current process and thread. The current process also determines the virtual address mappings that the debugger uses.

When debugging begins, the current process is the one that the debugger is attached to or that caused the exception that broke into the debugger. Similarly, the current thread is the one that was active when the debugger attached to the process or that caused the exception. However, you can use the debugger to change the current process and thread and to freeze or unfreeze individual threads.

In kernel-mode debugging, processes and threads are not controlled by the methods that are described in this section. For more information about how processes and threads are manipulated in kernel mode, see Changing Contexts.

Displaying Processes and Threads

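To display process and thread information, you can use the following methods:

- The | (Process Status) command

- The ~ (Thread Status) command

- (WinDbg only) The Processes and Threads window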

Setting the Current Process and Thread

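To change the current process or thread, you can use the following methods:

- The |s (Set Current Process) command

- The ~s (Set Current Thread) command

- (WinDbg only) The Processes and Threads window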

Freezing and Suspending Threads

The debugger can change the execution of a thread by suspending the thread or by freezing the thread. These two actions have somewhat different effects.

Each thread has a suspend count that is associated with it. If this count is one or larger, the system does not run the thread. If the count is zero or lower, the system runs the thread when appropriate.

Typically, each thread has a suspend count of zero. When the debugger attaches to a process, it increments the suspend counts of all threads in that process by one. If the debugger detaches from the process, it decrements all suspend counts by one. When the debugger executes the process, it temporarily decrements all suspend counts by one.

You can control the suspend count of any thread from the debugger by using the following methods:

The ~n (Suspend Thread) command increments the specified thread’s suspend count by one.

The ~m (Resume Thread) command decrements the specified thread’s suspend count by one.

The most common use for these commands is to raise a specific thread’s suspend count from one to two. When the debugger executes or detaches from the process, the thread then has a suspend count of one and remains suspended, even if other threads in the process are executing.

You can suspend threads even when you are performing noninvasive debugging.

The debugger can also freeze a thread. This action is similar to suspending the thread in some ways. However, "frozen" is only a debugger setting. Nothing in the Windows operating system recognizes that anything is different about this thread.

By default, all threads are unfrozen. When the debugger causes a process to execute, threads that are frozen do not execute. However, if the debugger detaches from the process, all threads unfreeze.

To freeze and unfreeze individual threads, you can use the following methods:

The ~f (Freeze Thread) command freezes the specified thread.

The ~u (Unfreeze Thread) command unfreezes the specified thread.

In any event, threads that belong to the target process never execute when the debugger has broken into the target. The suspend count of a thread affects the thread’s behavior only when the debugger executes the process or detaches. The frozen status affects the thread’s behavior only when the debugger executes the process.
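The bookkeeping described in this section can be traced with a small model. This is a toy simulation of the rules as stated above, not debugger code; `ThreadModel`, `DebuggerModel`, and their methods are hypothetical names. It tracks a per-thread suspend count (a system concept) and a frozen flag (a debugger-only setting) and reports which threads would run when the debugger executes the process or detaches.

```python
class ThreadModel:
    def __init__(self, name):
        self.name = name
        self.suspend_count = 0   # maintained by the operating system
        self.frozen = False      # known only to the debugger

class DebuggerModel:
    def __init__(self, threads):
        self.threads = list(threads)
        for t in self.threads:
            t.suspend_count += 1             # attaching increments every count

    def suspend(self, t):
        t.suspend_count += 1                 # models ~n

    def resume(self, t):
        t.suspend_count -= 1                 # models ~m

    def freeze(self, t):
        t.frozen = True                      # models ~f

    def unfreeze(self, t):
        t.frozen = False                     # models ~u

    def running_during_go(self):
        # Executing temporarily decrements each count by one;
        # frozen threads do not execute.
        return [t.name for t in self.threads
                if t.suspend_count - 1 <= 0 and not t.frozen]

    def detach(self):
        # Detaching decrements each count by one and unfreezes all threads.
        for t in self.threads:
            t.suspend_count -= 1
            t.frozen = False
        return [t.name for t in self.threads if t.suspend_count <= 0]
```

If thread `b` is suspended once more and thread `c` is frozen, only `a` runs while the debugger executes the process. After a detach, `a` and `c` run, and `b` keeps a suspend count of one and stays suspended.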

Threads and Processes in Other Commands

You can add thread specifiers or process specifiers before many other commands. For more information, see the individual command topics.

You can add the ~e (Thread-Specific Command) qualifier before many commands and extension commands. This qualifier causes the command to be executed with respect to the specified thread. This qualifier is especially useful if you want to apply a command to more than one thread. For example, the command ~*e !gle repeats the !gle extension command for every thread that is being debugged.

Multiple Systems

The debugger can attach to multiple targets at the same time. When these processes include dump files or include live targets on more than one computer, the debugger references a system, process, and thread for each action. For more information about this kind of debugging, see Debugging Multiple Targets.

Processes, Threads, and Apartments

A process is a collection of virtual memory space, code, data, and system resources. A thread is code that is to be serially executed within a process. A processor executes threads, not processes, so each application has at least one process, and a process always has at least one thread of execution, known as the primary thread. A process can have multiple threads in addition to the primary thread.

Processes communicate with one another through messages, using Microsoft’s Remote Procedure Call (RPC) technology to pass information to one another. There is no difference to the caller between a call coming from a process on a remote machine and a call coming from another process on the same machine.

When a thread begins to execute, it continues until it is killed or until it is interrupted by a thread with higher priority (by a user action or the kernel’s thread scheduler). Each thread can run separate sections of code, or multiple threads can execute the same section of code. Threads executing the same block of code maintain separate stacks. Each thread in a process shares that process’s global variables and resources.

The thread scheduler determines when and how often to execute a thread, according to a combination of the process’s priority class attribute and the thread’s base priority. You set a process’s priority class attribute by calling the SetPriorityClass function, and you set a thread’s base priority with a call to SetThreadPriority.
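How the two settings combine can be sketched as a lookup. The numeric values below follow the table in the Windows scheduling priorities documentation as best recalled here, so treat them as an assumption to verify against that table. The string keys are shorthand invented for this sketch, not API constants, and the extra realtime-only levels are omitted.

```python
# Base priority of a NORMAL-level thread in each priority class (assumed values).
CLASS_BASE = {
    "IDLE": 4, "BELOW_NORMAL": 6, "NORMAL": 8,
    "ABOVE_NORMAL": 10, "HIGH": 13, "REALTIME": 24,
}

# Offset that each relative thread priority level applies to the class base.
LEVEL_OFFSET = {
    "LOWEST": -2, "BELOW_NORMAL": -1, "NORMAL": 0,
    "ABOVE_NORMAL": 1, "HIGHEST": 2,
}

def base_priority(priority_class, thread_level):
    realtime = priority_class == "REALTIME"
    # The two extreme levels saturate instead of applying an offset.
    if thread_level == "IDLE":
        return 16 if realtime else 1
    if thread_level == "TIME_CRITICAL":
        return 31 if realtime else 15
    return CLASS_BASE[priority_class] + LEVEL_OFFSET[thread_level]
```

Under these assumed values, a normal-level thread in a normal-class process has base priority 8, and raising only the process class to high lifts the same thread to 13.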

Multithreaded applications must avoid two threading problems: deadlocks and races. A deadlock occurs when each thread is waiting for the other to do something. The COM call control helps prevent deadlocks in calls between objects. A race condition occurs when one thread finishes before another on which it depends, causing the former to use an uninitialized value because the latter has not yet supplied a valid one. COM supplies some functions specifically designed to help avoid race conditions in out-of-process servers. (See Out-of-Process Server Implementation Helpers.)
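The race described above, where one thread reads a value before the thread it depends on has supplied it, can be avoided by making the dependency explicit. The following sketch uses a Python `threading.Event` for that purpose. It is a generic illustration, not one of the COM helper functions, and `produce_then_consume` is a hypothetical name.

```python
import threading

def produce_then_consume():
    state = {"value": None}        # uninitialized until the producer runs
    ready = threading.Event()
    seen = []

    def producer():
        state["value"] = 42        # supply the valid value first ...
        ready.set()                # ... then announce that it is available

    def consumer():
        ready.wait(timeout=5)      # block instead of reading too early
        seen.append(state["value"])

    # Start the consumer first to show that start order does not matter.
    c = threading.Thread(target=consumer)
    p = threading.Thread(target=producer)
    c.start()
    p.start()
    c.join()
    p.join()
    return seen[0]
```

Without the `ready.wait` call, the consumer could observe `None` whenever it happened to run before the producer.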

The Apartment and the COM Threading Architecture

While COM supports the single-thread-per-process model prevalent before the introduction of multiple threads of execution, you can write code to take advantage of multiple threads, resulting in more efficient applications, by allowing one thread to be executed while another thread waits for some time-consuming operation to complete.

Using multiple threads is not a guarantee of better performance. In fact, because thread factoring is a difficult problem, using multiple threads often causes performance problems. The key is to use multiple threads only if you are very sure of what you are doing.

In general, the simplest way to view the COM threading architecture is to think of all the COM objects in the process as divided into groups called apartments. A COM object lives in exactly one apartment, in the sense that its methods can legally be directly called only by a thread that belongs to that apartment. Any other thread that wants to call the object must go through a proxy.

- Single-threaded apartments consist of exactly one thread, so all COM objects that live in a single-threaded apartment can receive method calls only from the one thread that belongs to that apartment. All method calls to a COM object in a single-threaded apartment are synchronized with the windows message queue for the single-threaded apartment’s thread. A process with a single thread of execution is simply a special case of this model.

- Multithreaded apartments consist of one or more threads, so all COM objects that live in a multithreaded apartment can receive method calls directly from any of the threads that belong to the multithreaded apartment. Threads in a multithreaded apartment use a model called free-threading. Calls to COM objects in a multithreaded apartment are synchronized by the objects themselves.
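The single-threaded apartment rule can be modeled with one thread that owns a message queue. The sketch below is a conceptual model only, not COM: `SingleThreadedApartment`, `Counter`, and `demo` are invented for illustration, and a Python `queue.Queue` stands in for the window message queue. Callers on other threads post a request and wait for the reply, so the object's methods only ever run on the apartment's thread and need no locking of their own.

```python
import queue
import threading

class SingleThreadedApartment:
    def __init__(self):
        self._messages = queue.Queue()
        self._thread = threading.Thread(target=self._pump, daemon=True)
        self._thread.start()

    def _pump(self):
        # Retrieve and dispatch one call at a time on the apartment thread.
        while True:
            item = self._messages.get()
            if item is None:
                break
            func, args, reply = item
            try:
                reply.put((True, func(*args)))
            except Exception as exc:           # return failures to the caller
                reply.put((False, exc))

    def call(self, func, *args):
        # Plays the role of the proxy: post the call, then wait for the result.
        reply = queue.Queue(maxsize=1)
        self._messages.put((func, args, reply))
        ok, value = reply.get()
        if not ok:
            raise value
        return value

    def shutdown(self):
        self._messages.put(None)
        self._thread.join()

class Counter:
    def __init__(self):
        self.value = 0
        self.callers = set()

    def increment(self):
        self.callers.add(threading.get_ident())
        self.value += 1                        # no lock needed: calls are serialized
        return self.value

def demo(num_clients=4, calls_each=50):
    apartment = SingleThreadedApartment()
    counter = Counter()

    def client():
        for _ in range(calls_each):
            apartment.call(counter.increment)

    clients = [threading.Thread(target=client) for _ in range(num_clients)]
    for t in clients:
        t.start()
    for t in clients:
        t.join()
    apartment.shutdown()
    return counter.value, len(counter.callers)
```

Running `demo()` with four client threads yields a final count of 200 and exactly one distinct executing thread. A multithreaded apartment would correspond to calling `counter.increment` directly from every client, in which case the object itself would have to provide the synchronization.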

For a description of communication between single-threaded apartments and multithreaded apartments within the same process, see Single-Threaded and Multithreaded Communication.

A process can have zero or more single-threaded apartments and zero or one multithreaded apartment.

In a process, the main apartment is the first to be initialized. In a single-threaded process, this is the only apartment. Call parameters are marshaled between apartments, and COM handles the synchronization through messaging. If you designate multiple threads in a process to be free-threaded, all free threads reside in a single apartment, parameters are passed directly to any thread in the apartment, and you must handle all synchronization. In a process with both free-threading and apartment threading, all free threads reside in a single apartment and all other apartments are single-threaded apartments. A process that does COM work is a collection of apartments with, at most, one multithreaded apartment but any number of single-threaded apartments.

The threading models in COM provide the mechanism for clients and servers that use different threading architectures to work together. Calls among objects with different threading models in different processes are naturally supported. From the perspective of the calling object, all calls to objects outside a process behave identically, no matter how the object being called is threaded. Likewise, from the perspective of the object being called, arriving calls behave identically, regardless of the threading model of the caller.

Interaction between a client and an out-of-process object is straightforward, even when they use different threading models, because the client and object are in different processes. COM, interposed between the client and the server, can provide the code for the threading models to interoperate, using standard marshaling and RPC. For example, if a single-threaded object is called simultaneously by multiple free-threaded clients, COM synchronizes the calls by placing corresponding window messages in the server’s message queue. The object’s apartment receives one call each time it retrieves and dispatches messages. However, some care must be taken to ensure that in-process servers interact properly with their clients. (See In-Process Server Threading Issues.)

The most important issue in programming with a multithreaded model is to make your code thread-safe so that messages intended for a particular thread go only to that thread and access to threads is protected.
