- GPU in Windows Subsystem for Linux (WSL)

- Enable Developers

- Access Advanced AI

- Reduce Obstacles

- WHY USE NVIDIA GPUS ON WINDOWS for AI?

- Get Started Developing GPUs Quickly

- Simplifying Deep Learning

- Accelerate Analytics and Data Science

- CUDA Everywhere

- GPU Accelerated Computing with C and C++

- Additional Resources

- CODE Samples

- Webinars and Technical Presentations

- Availability

- DEVELOPER BLOG

- An Even Easier Introduction to CUDA

- Starting Simple

- Memory Allocation in CUDA

- Profile it!

- Picking up the Threads

- Out of the Blocks

- Summing Up

- Exercises

- Where To From Here?

GPU in Windows Subsystem for Linux (WSL)

Microsoft Windows is a ubiquitous platform for enterprise, business, and personal computing systems. However, industry AI tools, models, frameworks, and libraries are predominantly available on Linux OS. Now all users of AI — whether they are experienced professionals, or students and beginners just getting started — can benefit from innovative GPU-accelerated infrastructure, software, and container support on Windows.

The NVIDIA CUDA on WSL Public Preview brings NVIDIA CUDA and advanced AI together with the ubiquitous Microsoft Windows platform to deliver advanced machine learning capabilities across numerous industry segments and application domains.

Interested parties need to join the appropriate user programs and download specific components from both NVIDIA and Microsoft to set up the complete WSL environment.

NVIDIA drivers for WSL with CUDA and DirectML support are available as a preview for Microsoft Windows Insider Program members who have registered for the NVIDIA Developer Program.

Enable Developers

GPU support is the number one requested feature from worldwide WSL users — including data scientists, ML engineers, and even novice developers.

Access Advanced AI

The most advanced and innovative AI frameworks and libraries are already integrated with NVIDIA CUDA support, including industry leading frameworks like PyTorch and TensorFlow.

Reduce Obstacles

The overhead and duplication of investments in multiple OS compute platforms can be prohibitive — AI users, developers, and data scientists need quick access to run Linux software on their productive Windows platforms.

WHY USE NVIDIA GPUS ON WINDOWS for AI?

If you are a Microsoft Windows user who wants access to state-of-the-art AI technology, NVIDIA enables GPU-accelerated AI development on your machine: advanced Linux-based ML applications run on Microsoft Windows by leveraging the WSL application layer.

GPUs have a robust history of accelerating AI applications for both training and inference. NVIDIA provides a wide variety of proven machine learning solutions that are validated to work with numerous industry frameworks. We leverage our extensive AI experience and domain knowledge to deliver solutions that accelerate your learning, adoption, and results.

Join the NVIDIA Developer Program and come take advantage of our developer tools, training, platforms, and integrations.

Get Started Developing GPUs Quickly

The CUDA Toolkit provides everything developers need to get started building GPU-accelerated applications, including compiler toolchains, optimized libraries, and a suite of developer tools. Use CUDA within WSL and CUDA containers to get started quickly. Features and capabilities will be added to the Preview version of the CUDA Toolkit over the life of the preview program.

Simplifying Deep Learning

NVIDIA provides access to over a dozen deep learning frameworks and SDKs, including support for TensorFlow, PyTorch, MXNet, and more.

Additionally, you can even run pre-built framework containers with Docker and the NVIDIA Container Toolkit in WSL. Frameworks, pre-trained models and workflows are available from NGC.

Accelerate Analytics and Data Science

RAPIDS is an open source NVIDIA suite of software libraries to accelerate data science and analytics pipelines on GPUs.

Reduce training time and increase model accuracy by iterating faster with proven, pre-built libraries.

CUDA Everywhere

Numerous NVIDIA platforms in different form factors and at different price points exist for hosting your work environment, including GPU-enabled graphics cards, laptops, and more.

“The Microsoft — NVIDIA collaboration around WSL enables masses of expert and new users to learn, experiment with, and adopt premier GPU-accelerated AI platforms without leaving the familiarity of their everyday MS Windows environment.”

Kam VedBrat, Partner Group Program Manager for Windows AI Platform, Microsoft Corp.

GPU Accelerated Computing with C and C++

Using the CUDA Toolkit you can accelerate your C or C++ applications by updating the computationally intensive portions of your code to run on GPUs. To accelerate your applications, you can call functions from drop-in libraries as well as develop custom applications using languages including C, C++, Fortran and Python. Below you will find some resources to help you get started using CUDA.

Install the free CUDA Toolkit on a Linux, Mac or Windows system with one or more CUDA-capable GPUs. Follow the instructions in the CUDA Quick Start Guide to get up and running quickly.

Or, watch the short video below and follow along.

If you do not have a GPU, you can access one of the thousands of GPUs available from cloud service providers including Amazon AWS, Microsoft Azure and IBM SoftLayer. The NVIDIA-maintained CUDA Amazon Machine Image (AMI) on AWS, for example, comes pre-installed with CUDA and is available for use today.

For more detailed installation instructions, refer to the CUDA installation guides. For help with troubleshooting, browse and participate in the CUDA Setup and Installation forum.

You are now ready to write your first CUDA program. The article An Even Easier Introduction to CUDA introduces key concepts through simple examples that you can follow along with.

The video below walks through writing a program that adds two vectors.

The Programming Guide in the CUDA Documentation introduces key concepts covered in the video, including the CUDA programming model, important APIs, and performance guidelines.

NVIDIA provides hands-on training in CUDA through a collection of self-paced and instructor-led courses. The self-paced online training, powered by GPU-accelerated workstations in the cloud, guides you step-by-step through editing and execution of code along with interaction with visual tools. All you need is a laptop and an internet connection to access the complete suite of free courses and certification options.

The CUDA C Best Practices Guide presents established parallelization and optimization techniques and explains programming approaches that can greatly simplify programming GPU-accelerated applications.

Additional Resources

CODE Samples

- CUDA samples in documentation

- Adding two vectors together

- 1D Stencil example: shared memory & synchronized threads

- Optimizing a Jacobi Point Iterative method

Webinars and Technical Presentations

Availability

The CUDA Toolkit is a free download from NVIDIA and is supported on Windows, Mac, and most standard Linux distributions.

So, now you’re ready to deploy your application?

Register today for free access to NVIDIA TESLA GPUs in the cloud.

DEVELOPER BLOG

An Even Easier Introduction to CUDA

CUDA C++ is just one of the ways you can create massively parallel applications with CUDA. It lets you use the powerful C++ programming language to develop high performance algorithms accelerated by thousands of parallel threads running on GPUs. Many developers have accelerated their computation- and bandwidth-hungry applications this way, including the libraries and frameworks that underpin the ongoing revolution in artificial intelligence known as Deep Learning.

So, you’ve heard about CUDA and you are interested in learning how to use it in your own applications. If you are a C or C++ programmer, this blog post should give you a good start. To follow along, you’ll need a computer with a CUDA-capable GPU (Windows, Mac, or Linux, and any NVIDIA GPU should do), or a cloud instance with GPUs (AWS, Azure, IBM SoftLayer, and other cloud service providers have them). You’ll also need the free CUDA Toolkit installed.

Let’s get started!

Starting Simple

We’ll start with a simple C++ program that adds the elements of two arrays with a million elements each.
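The original listing did not survive in this copy of the post, so here is a minimal sketch of what such a program could look like. It uses no CUDA extensions, so it is ordinary C++ and can be saved as add.cpp. The helper `run_add`, the array size `1 << 20` (about a million elements), and the fill values 1.0f and 2.0f are assumptions chosen for illustration.

```cuda
// add.cpp: host-only code with no CUDA constructs, valid as standard C++.
// Example build commands: clang++ add.cpp -o add   or   g++ add.cpp -o add
#include <algorithm>
#include <cmath>
#include <iostream>

// Add the elements of x into y, one element at a time.
void add(int n, const float *x, float *y)
{
  for (int i = 0; i < n; i++)
    y[i] = x[i] + y[i];
}

// Hypothetical helper: fill two arrays, add them, and return the largest
// deviation from the expected sum of 3.0f.
float run_add(int n)
{
  float *x = new float[n];
  float *y = new float[n];

  // Initialize the arrays on the host (fill values are assumptions).
  for (int i = 0; i < n; i++) {
    x[i] = 1.0f;
    y[i] = 2.0f;
  }

  add(n, x, y);

  // Every element of y should now be exactly 3.0f.
  float maxError = 0.0f;
  for (int i = 0; i < n; i++)
    maxError = std::max(maxError, std::fabs(y[i] - 3.0f));

  delete [] x;
  delete [] y;
  return maxError;
}

int main()
{
  const int N = 1 << 20; // about one million elements (assumed size)
  std::cout << "Max error: " << run_add(N) << std::endl;
  return 0;
}
```

With these fill values every sum is exactly representable in single precision, so the program should report a maximum error of 0.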

First, compile and run this C++ program. Put the code above in a file and save it as add.cpp, and then compile it with your C++ compiler. I’m on a Mac so I’m using clang++, but you can use g++ on Linux or MSVC on Windows.

(On Windows you may want to name the executable add.exe and run it with .\add.)

As expected, it prints that there was no error in the summation and then exits. Now I want to get this computation running (in parallel) on the many cores of a GPU. It’s actually pretty easy to take the first steps.

First, I just have to turn our add function into a function that the GPU can run, called a kernel in CUDA. To do this, all I have to do is add the specifier __global__ to the function, which tells the CUDA C++ compiler that this is a function that runs on the GPU and can be called from CPU code.

These __global__ functions are known as kernels, and code that runs on the GPU is often called device code, while code that runs on the CPU is host code.

Memory Allocation in CUDA

To compute on the GPU, I need to allocate memory accessible by the GPU. Unified Memory in CUDA makes this easy by providing a single memory space accessible by all GPUs and CPUs in your system. To allocate data in unified memory, call cudaMallocManaged(), which returns a pointer that you can access from host (CPU) code or device (GPU) code. To free the data, just pass the pointer to cudaFree().

I just need to replace the calls to new in the code above with calls to cudaMallocManaged(), and replace calls to delete [] with calls to cudaFree().

Finally, I need to launch the add() kernel, which invokes it on the GPU. CUDA kernel launches are specified using the triple angle bracket syntax <<< >>>. I just have to add it to the call to add before the parameter list, for example add<<<1, 1>>>(N, x, y).

Easy! I’ll get into the details of what goes inside the angle brackets soon; for now all you need to know is that this line launches one GPU thread to run add().

Just one more thing: I need the CPU to wait until the kernel is done before it accesses the results (because CUDA kernel launches don’t block the calling CPU thread). To do this I just call cudaDeviceSynchronize() before doing the final error checking on the CPU.

Here’s the complete code:
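The listing itself is missing from this copy of the post, so the following is a minimal sketch assembled from the steps described above: a `__global__` kernel, `cudaMallocManaged()` and `cudaFree()` in place of `new` and `delete []`, a `<<<1, 1>>>` launch, and `cudaDeviceSynchronize()` before the host reads the results. The helper `run_add`, the array size, the fill values, and the convention of returning -1.0f on a CUDA error are assumptions for illustration.

```cuda
// add.cu: example build command: nvcc add.cu -o add_cuda
#include <algorithm>
#include <cmath>
#include <iostream>
#include <cuda_runtime.h>

// Kernel: adds the elements of x into y. With a <<<1, 1>>> launch,
// a single GPU thread walks the whole array.
__global__ void add(int n, const float *x, float *y)
{
  for (int i = 0; i < n; i++)
    y[i] = x[i] + y[i];
}

// Hypothetical helper: returns the largest deviation from 3.0f,
// or -1.0f if any CUDA call fails (an assumed error convention).
float run_add(int n)
{
  float *x = nullptr;
  float *y = nullptr;

  // Allocate Unified Memory, accessible from both CPU and GPU.
  if (cudaMallocManaged(&x, n * sizeof(float)) != cudaSuccess)
    return -1.0f;
  if (cudaMallocManaged(&y, n * sizeof(float)) != cudaSuccess) {
    cudaFree(x);
    return -1.0f;
  }

  // Initialize the arrays on the host.
  for (int i = 0; i < n; i++) {
    x[i] = 1.0f;
    y[i] = 2.0f;
  }

  // Launch the kernel with one block of one thread.
  add<<<1, 1>>>(n, x, y);

  // Check the launch, then wait for the GPU before touching y on the host.
  cudaError_t err = cudaGetLastError();
  if (err == cudaSuccess)
    err = cudaDeviceSynchronize();

  float maxError = -1.0f;
  if (err == cudaSuccess) {
    maxError = 0.0f;
    for (int i = 0; i < n; i++)
      maxError = std::max(maxError, std::fabs(y[i] - 3.0f));
  }

  cudaFree(x);
  cudaFree(y);
  return maxError;
}

int main()
{
  const int N = 1 << 20; // about one million elements (assumed size)
  std::cout << "Max error: " << run_add(N) << std::endl;
  return 0;
}
```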

CUDA files have the file extension .cu. So save this code in a file called add.cu and compile it with nvcc, the CUDA C++ compiler.

This is only a first step, because as written, this kernel is only correct for a single thread, since every thread that runs it will perform the add on the whole array. Moreover, there is a race condition since multiple parallel threads would both read and write the same locations.

Note: on Windows, you need to make sure you set Platform to x64 in the Configuration Properties for your project in Microsoft Visual Studio.

Profile it!

I think the simplest way to find out how long the kernel takes to run is to run it with nvprof, the command line GPU profiler that comes with the CUDA Toolkit. Just type nvprof ./add_cuda on the command line:

Above is the truncated output from nvprof, showing a single call to add. It takes about half a second on an NVIDIA Tesla K80 accelerator, and about the same time on an NVIDIA GeForce GT 750M in my 3-year-old MacBook Pro.

Let’s make it faster with parallelism.

Picking up the Threads

Now that you’ve run a kernel with one thread that does some computation, how do you make it parallel? The key is in CUDA’s <<<1, 1>>> syntax. This is called the execution configuration, and it tells the CUDA runtime how many parallel threads to use for the launch on the GPU. There are two parameters here, but let’s start by changing the second one: the number of threads in a thread block. CUDA GPUs run kernels using blocks of threads that are a multiple of 32 in size, so 256 threads is a reasonable size to choose.

If I run the code with only this change, it will do the computation once per thread, rather than spreading the computation across the parallel threads. To do it properly, I need to modify the kernel. CUDA C++ provides keywords that let kernels get the indices of the running threads. Specifically, threadIdx.x contains the index of the current thread within its block, and blockDim.x contains the number of threads in the block. I’ll just modify the loop to stride through the array with parallel threads.
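As a sketch of that change, the kernel below starts each thread at its own `threadIdx.x` and advances by `blockDim.x`, so one block of 256 threads covers the array between them. The host helper `launch_add_block` is a hypothetical wrapper added for illustration; it assumes `x` and `y` were allocated with `cudaMallocManaged()` (or are otherwise valid device pointers).

```cuda
#include <cuda_runtime.h>

// Kernel: each thread handles elements index, index + stride, index + 2*stride, ...
__global__ void add(int n, const float *x, float *y)
{
  int index = threadIdx.x;  // this thread's position within its block
  int stride = blockDim.x;  // number of threads in the block
  for (int i = index; i < n; i += stride)
    y[i] = x[i] + y[i];
}

// Hypothetical host helper: launch a single block of 256 threads and wait.
// Returns the first CUDA error encountered, or cudaSuccess.
cudaError_t launch_add_block(int n, const float *x, float *y)
{
  add<<<1, 256>>>(n, x, y);

  cudaError_t err = cudaGetLastError();  // reports launch failures
  if (err != cudaSuccess)
    return err;
  return cudaDeviceSynchronize();        // waits for the kernel to finish
}
```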

The add function hasn’t changed that much. In fact, setting index to 0 and stride to 1 makes it semantically identical to the first version.

Save the file as add_block.cu and compile and run it in nvprof again. For the remainder of the post I’ll just show the relevant line from the output.

That’s a big speedup (463ms down to 2.7ms, roughly 170x), but not surprising since I went from 1 thread to 256 threads. The K80 is faster than my little MacBook Pro GPU (at 3.2ms). Let’s keep going to get even more performance.

Out of the Blocks

CUDA GPUs have many parallel processors grouped into Streaming Multiprocessors, or SMs. Each SM can run multiple concurrent thread blocks. As an example, a Tesla P100 GPU based on the Pascal GPU Architecture has 56 SMs, each capable of supporting up to 2048 active threads. To take full advantage of all these threads, I should launch the kernel with multiple thread blocks.

By now you may have guessed that the first parameter of the execution configuration specifies the number of thread blocks. Together, the blocks of parallel threads make up what is known as the grid. Since I have N elements to process, and 256 threads per block, I just need to calculate the number of blocks to get at least N threads. I simply divide N by the block size (being careful to round up in case N is not a multiple of blockSize).
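The rounding-up division can be written with integer arithmetic alone. The helper name `blocks_for` below is hypothetical; the formula is the usual ceiling-division idiom and assumes non-negative `n` and a positive `blockSize`.

```cuda
// Number of blocks needed so that numBlocks * blockSize >= n.
// Adding blockSize - 1 before dividing rounds the quotient up.
inline int blocks_for(int n, int blockSize)
{
  return (n + blockSize - 1) / blockSize;
}

// Intended use in host code (x and y allocated elsewhere):
//   int blockSize = 256;
//   int numBlocks = blocks_for(N, blockSize);
//   add<<<numBlocks, blockSize>>>(N, x, y);
```

For example, if N is 1 << 20 and the block size is 256, this gives 4096 blocks; for N = 1000 it gives 4 blocks, the last of which is only partly used.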

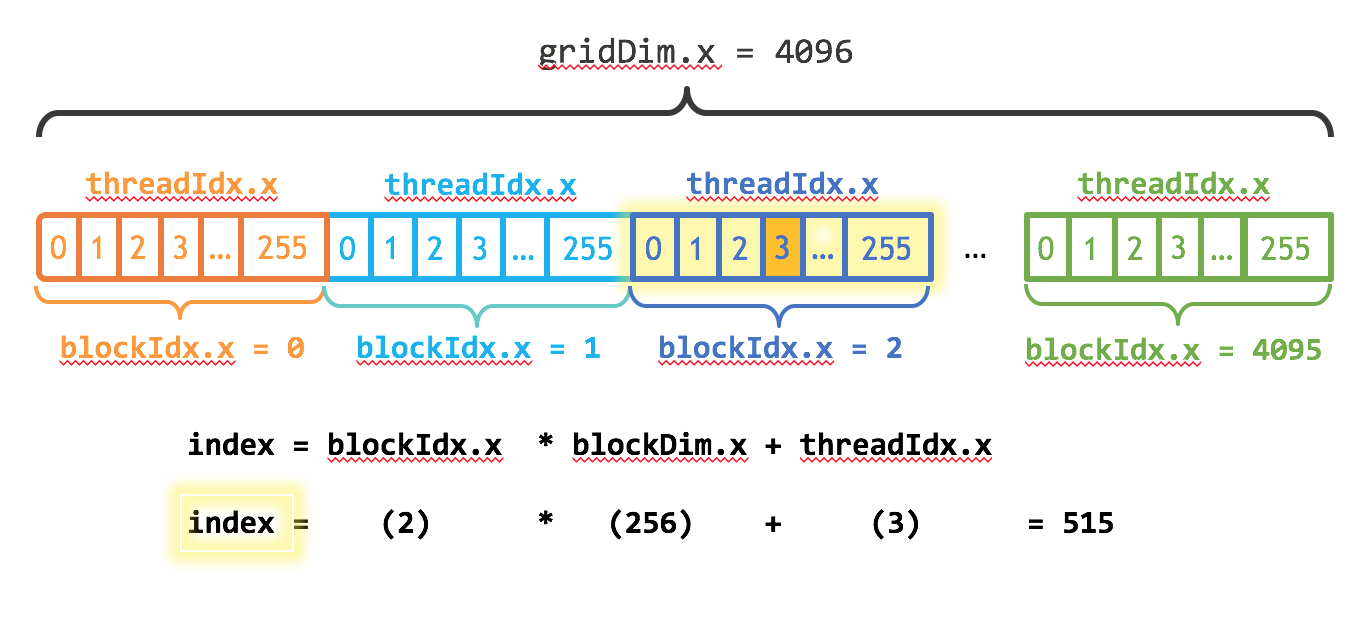

I also need to update the kernel code to take into account the entire grid of thread blocks. CUDA provides gridDim.x, which contains the number of blocks in the grid, and blockIdx.x, which contains the index of the current thread block in the grid. Figure 1 illustrates the approach to indexing into an array (one-dimensional) in CUDA using blockDim.x, gridDim.x, and threadIdx.x. The idea is that each thread gets its index by computing the offset to the beginning of its block (the block index times the block size: blockIdx.x * blockDim.x) and adding the thread’s index within the block (threadIdx.x). The code blockIdx.x * blockDim.x + threadIdx.x is idiomatic CUDA.

The updated kernel also sets stride to the total number of threads in the grid ( blockDim.x * gridDim.x ). This type of loop in a CUDA kernel is often called a grid-stride loop.
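A sketch of the kernel with a grid-stride loop follows, together with a hypothetical host wrapper `launch_add_grid` that picks the block count by rounding up. The wrapper and its error handling are assumptions for illustration; it expects `n > 0` and pointers that the GPU can access, such as those returned by `cudaMallocManaged()`.

```cuda
#include <cuda_runtime.h>

// Kernel: grid-stride loop. Each thread starts at its global index and
// advances by the total number of threads in the grid.
__global__ void add(int n, const float *x, float *y)
{
  int index = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
  int stride = blockDim.x * gridDim.x;                // total threads in the grid
  for (int i = index; i < n; i += stride)
    y[i] = x[i] + y[i];
}

// Hypothetical host helper: launch enough 256-thread blocks to cover n
// elements, then wait for completion. Assumes n > 0.
cudaError_t launch_add_grid(int n, const float *x, float *y)
{
  int blockSize = 256;
  int numBlocks = (n + blockSize - 1) / blockSize;    // round up
  add<<<numBlocks, blockSize>>>(n, x, y);

  cudaError_t err = cudaGetLastError();  // reports launch failures
  if (err != cudaSuccess)
    return err;
  return cudaDeviceSynchronize();        // waits for the kernel to finish
}
```

Because of the loop, the kernel stays correct even if it is launched with fewer threads than elements; the extra iterations simply pick up the remaining work.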

Save the file as add_grid.cu and compile and run it in nvprof again.

That’s another 28x speedup, from running multiple blocks on all the SMs of a K80! We’re only using one of the 2 GPUs on the K80, but each GPU has 13 SMs. Note the GeForce in my laptop has 2 (weaker) SMs and it takes 680us to run the kernel.

Summing Up

Here’s a rundown of the performance of the three versions of the add() kernel on the Tesla K80 and the GeForce GT 750M.

| Version | Laptop (GeForce GT 750M) Time | Laptop (GeForce GT 750M) Bandwidth | Server (Tesla K80) Time | Server (Tesla K80) Bandwidth |
|---|---|---|---|---|
| 1 CUDA Thread | 411ms | 30.6 MB/s | 463ms | 27.2 MB/s |
| 1 CUDA Block | 3.2ms | 3.9 GB/s | 2.7ms | 4.7 GB/s |
| Many CUDA Blocks | 0.68ms | 18.5 GB/s | 0.094ms | 134 GB/s |

As you can see, we can achieve very high bandwidth on GPUs. The computation in this post is very bandwidth-bound, but GPUs also excel at heavily compute-bound computations such as dense matrix linear algebra, deep learning, image and signal processing, physical simulations, and more.
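The bandwidth figures in the table can be reproduced from the kernel times under two assumptions that the post does not state explicitly: the arrays hold 1 << 20 floats, and each element costs three 4-byte memory accesses (read x, read y, write y). The helper name `effective_bandwidth_gbps` is hypothetical, and 1 GB is taken as 10^9 bytes.

```cuda
#include <cstddef>

// Effective bandwidth in GB/s (1 GB = 1e9 bytes) for the add kernel,
// assuming two float reads and one float write per element.
inline double effective_bandwidth_gbps(std::size_t n, double milliseconds)
{
  const double bytes = 3.0 * sizeof(float) * static_cast<double>(n);
  const double seconds = milliseconds * 1e-3;
  return bytes / seconds / 1e9;
}
```

Under those assumptions, 1 << 20 elements in 0.094ms works out to about 134 GB/s and in 2.7ms to about 4.7 GB/s, which matches the Tesla K80 column.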

Exercises

To keep you going, here are a few things to try on your own. Please post about your experience in the comments section below.

- Browse the CUDA Toolkit documentation. If you haven’t installed CUDA yet, check out the Quick Start Guide and the installation guides. Then browse the Programming Guide and the Best Practices Guide. There are also tuning guides for various architectures.

- Experiment with printf() inside the kernel. Try printing out the values of threadIdx.x and blockIdx.x for some or all of the threads. Do they print in sequential order? Why or why not?

- Print the value of threadIdx.y or threadIdx.z (or blockIdx.y) in the kernel. (Likewise for blockDim and gridDim.) Why do these exist? How do you get them to take on values other than 0 (1 for the dims)?

- If you have access to a Pascal-based GPU, try running add_grid.cu on it. Is performance better or worse than the K80 results? Why? (Hint: read about Pascal’s Page Migration Engine and the CUDA 8 Unified Memory API.) For a detailed answer to this question, see the post Unified Memory for CUDA Beginners.

Where To From Here?

I hope that this post has whetted your appetite for CUDA and that you are interested in learning more and applying CUDA C++ in your own computations. If you have questions or comments, don’t hesitate to reach out using the comments section below.

I plan to follow up this post with further CUDA programming material, but to keep you busy for now, there is a whole series of older introductory posts that you can continue with (and that I plan on updating / replacing in the future as needed):

There is also a series of CUDA Fortran posts mirroring the above, starting with An Easy Introduction to CUDA Fortran.

You might also be interested in signing up for the online course on CUDA programming from Udacity and NVIDIA.

There is a wealth of other content on CUDA C++ and other GPU computing topics here on the NVIDIA Parallel Forall developer blog, so look around!