- Thread local storage in Linux

- First, the data type

- Second, one-time initialization

- Third, the thread-local data API

- Fourth, an in-depth look at the thread-local storage mechanism

- Fifth, summary

- Sixth, defining thread-local variables with __thread

- Seventh, thread-local variables on Windows

- A Deep dive into (implicit) Thread Local Storage

- Introduction

- The Assembly

- The Thread Register

- Thread Specific Context

- The Initialisation of TCB or TLS

- The Internals of ELF TLS

- The Initialisation of DTV and static TLS

- TLS access in executables and shared objects

- Case 1: TLS variable locally defined and used within an executable

- Case 2: TLS variable externally defined in a shared object but used in an executable

Thread local storage in Linux

When using C/C++ for multi-threaded programming on Linux, the most common problem we encounter is multiple threads reading and writing the same variable. In most cases such problems are handled with locks, but locking has a significant impact on program performance. For data types on which the system natively supports atomic operations, we can use atomic operations instead, which improves performance to some extent. But how can custom data types, for which the system provides no atomic operations, be made thread-safe without locks? This article briefly explains how to handle this kind of thread safety using thread-local storage.

First, the data type

Second, one-time initialization

Before explaining thread-specific data, let us first look at one-time initialization. Multi-threaded programs sometimes have this requirement: no matter how many threads are created, some data must be initialized exactly once. For example, a C++ program may allow a class only one instance for the entire lifetime of the process; with multiple threads, a one-time initialization mechanism is essential for initializing that object safely. This is usually called the Singleton pattern in design-pattern terminology. Linux provides the function pthread_once() for one-time initialization: it takes a pthread_once_t control variable statically initialized to PTHREAD_ONCE_INIT and an init routine of type void (*)(void), and runs the routine only on the first call made with that control variable.
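A minimal sketch of pthread_once() in use, assuming POSIX threads (link with -pthread). The names init_shared_resource, worker and run_once_demo are hypothetical; the point is that however many threads race to call pthread_once() on the same control variable, the init routine runs once.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

static pthread_once_t init_once = PTHREAD_ONCE_INIT;
static int init_count;            /* how often the init routine really ran */

/* Hypothetical one-time setup (e.g. creating a singleton instance). */
static void init_shared_resource(void)
{
    init_count++;                 /* pthread_once serializes this call */
}

static void *worker(void *arg)
{
    (void)arg;
    pthread_once(&init_once, init_shared_resource);
    /* From here on, the shared resource is fully initialized. */
    return NULL;
}

/* Starts n_threads threads (1..16) that all request initialization and
   returns how many times init_shared_resource() actually ran. */
int run_once_demo(int n_threads)
{
    pthread_t tids[16];
    assert(n_threads > 0 && n_threads <= 16);
    for (int i = 0; i < n_threads; i++)
        if (pthread_create(&tids[i], NULL, worker, NULL) != 0)
            abort();
    for (int i = 0; i < n_threads; i++)
        pthread_join(tids[i], NULL);
    return init_count;
}
```

Calling run_once_demo(8) should report a single initialization even though eight threads asked for it.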

Third, the thread-local data API

The function pthread_key_create() creates a new key for thread-local data and stores it in the location pointed to by key. Because the returned key is shared by all threads, key is usually a global variable (in C++ multi-threaded programs, global variables are generally avoided; instead, a dedicated class encapsulates the thread-local data, with each variable using its own pthread_key_t). The destructor argument points to a user-supplied cleanup function with the signature void destructor(void *value).

As long as the value associated with the key is not NULL when a thread terminates, the destructor is called automatically with that value. If a thread has values stored under several keys, the order in which the corresponding destructors are called is unspecified, so each variable's destructor should be designed to be independent of the others.
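The sketch below illustrates the destructor rule, assuming POSIX threads; free_buffer, worker and run_destructor_demo are hypothetical names. Two threads associate a heap buffer with the key and one does not, so the destructor should run exactly twice.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdlib.h>

static pthread_key_t buf_key;
static atomic_int destructor_calls;

/* Destructor: run at thread exit, once per thread whose value is non-NULL. */
static void free_buffer(void *value)
{
    free(value);
    atomic_fetch_add(&destructor_calls, 1);
}

static void *worker(void *arg)
{
    if (*(const int *)arg) {
        char *buf = malloc(64);         /* this thread's private buffer */
        assert(buf != NULL);
        if (pthread_setspecific(buf_key, buf) != 0)
            abort();
    }
    /* A thread that never calls pthread_setspecific() keeps a NULL value,
       so no destructor runs for it. */
    return NULL;
}

/* Starts two threads that store a buffer and one that does not;
   returns how many times the destructor ran. */
int run_destructor_demo(void)
{
    const int store[3] = { 1, 1, 0 };
    pthread_t t[3];

    atomic_store(&destructor_calls, 0);
    if (pthread_key_create(&buf_key, free_buffer) != 0)
        abort();
    for (int i = 0; i < 3; i++)
        if (pthread_create(&t[i], NULL, worker, (void *)&store[i]) != 0)
            abort();
    for (int i = 0; i < 3; i++)
        pthread_join(t[i], NULL);       /* destructors finish before join returns */
    pthread_key_delete(buf_key);
    return atomic_load(&destructor_calls);
}
```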

The function pthread_key_delete() does not check whether any thread is still using the key, nor does it call the destructor; it only frees the key so that a later call to pthread_key_create() can reuse it. On Linux, the thread-specific values associated with the deleted key are also set to NULL.

Since the system limits the number of pthread_key_t keys per process, a process cannot create an unlimited number of them. On Linux, the limit is available as PTHREAD_KEYS_MAX (defined via <limits.h>) or at run time through the library function sysconf(_SC_THREAD_KEYS_MAX). The default on Linux is 1024 keys, which is enough for most programs. If a thread needs several thread-local variables, they can usually be bundled into one structure that is associated with a single key, which reduces the number of keys used.
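As a small sketch (assuming a POSIX system; max_thread_keys is a hypothetical helper), the limit can be queried at run time, falling back to the compile-time constant when sysconf() cannot report it:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <limits.h>
#include <unistd.h>

/* Returns the per-process limit on pthread keys.
   sysconf() reports the run-time value; PTHREAD_KEYS_MAX from <limits.h>
   is the compile-time value and serves as a fallback. */
long max_thread_keys(void)
{
    long n = sysconf(_SC_THREAD_KEYS_MAX);
    if (n < 0) {
#ifdef PTHREAD_KEYS_MAX
        n = PTHREAD_KEYS_MAX;
#else
        n = _POSIX_THREAD_KEYS_MAX;   /* POSIX minimum (128) */
#endif
    }
    assert(n >= _POSIX_THREAD_KEYS_MAX);   /* POSIX guarantees at least 128 */
    return n;
}
```

On glibc this is expected to report 1024, matching the default mentioned above.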

The function pthread_setspecific() associates value with the calling thread and key; the pointer itself is stored, not the data it points to. value usually points to a block of memory allocated by the caller, and when the thread terminates, that pointer is passed to the destructor associated with the key. When a thread is created, all of its thread-specific values are NULL, so before using such a variable for the first time, the thread must call pthread_getspecific() to check whether a value is already associated with the key. If it returns NULL, the thread allocates a block of memory itself and associates it with the key by calling pthread_setspecific().
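The lazy-allocation pattern just described, combined with the earlier advice to bundle several per-thread variables into one structure, might look like the following sketch. struct thread_state, get_thread_state and other_thread_gets_own_state are hypothetical names; pthread_once() makes sure the key is created exactly once, and free() serves as the destructor.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical bundle: several per-thread variables share one key. */
struct thread_state {
    int request_count;
    char scratch[256];
};

static pthread_key_t state_key;
static pthread_once_t state_key_once = PTHREAD_ONCE_INIT;

static void make_state_key(void)
{
    /* free() releases each thread's bundle when that thread exits */
    if (pthread_key_create(&state_key, free) != 0)
        abort();
}

/* Returns the calling thread's bundle, allocating it on first use. */
struct thread_state *get_thread_state(void)
{
    pthread_once(&state_key_once, make_state_key);
    struct thread_state *st = pthread_getspecific(state_key);
    if (st == NULL) {                      /* first use in this thread */
        st = calloc(1, sizeof *st);
        assert(st != NULL);
        if (pthread_setspecific(state_key, st) != 0)
            abort();
    }
    return st;
}

struct check { struct thread_state *caller_state; int distinct; };

static void *other_thread(void *arg)
{
    struct check *c = arg;
    c->distinct = (get_thread_state() != c->caller_state);
    return NULL;
}

/* Returns 1 if a second thread receives a bundle distinct from the caller's. */
int other_thread_gets_own_state(void)
{
    struct check c = { get_thread_state(), 0 };
    pthread_t t;
    if (pthread_create(&t, NULL, other_thread, &c) != 0)
        abort();
    pthread_join(t, NULL);
    return c.distinct;
}
```

Usage: get_thread_state()->request_count++ updates only the calling thread's counter, without any lock.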

value does not have to point to memory allocated by the caller; it can be any value that can be cast to void *. In that case, the destructor argument of the earlier pthread_key_create() call should be set to NULL.

The function pthread_getspecific() does the opposite of pthread_setspecific(): it retrieves the value that pthread_setspecific() stored for the calling thread. Before using the retrieved value, it is best to convert the void * back to a pointer to the original data type.
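A sketch of storing a plain integer in the void * slot, assuming intptr_t round-trips through void * (true on mainstream platforms). Because nothing is allocated, the key is created with a NULL destructor. The ids start at 1 because a stored 0 would be indistinguishable from "no value yet". All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

static pthread_key_t id_key;

/* Stores a small integer directly in the key's void * slot and reads it back. */
static void *worker(void *arg)
{
    intptr_t my_id = (intptr_t)arg;
    if (pthread_setspecific(id_key, (void *)my_id) != 0)
        abort();
    intptr_t read_back = (intptr_t)pthread_getspecific(id_key);  /* cast back */
    return (void *)(intptr_t)(read_back == my_id);
}

/* Returns 1 if each of n_threads (1..8) threads reads back its own id. */
int run_int_value_demo(int n_threads)
{
    pthread_t t[8];
    int ok = 1;
    assert(n_threads > 0 && n_threads <= 8);
    if (pthread_key_create(&id_key, NULL) != 0)   /* NULL: nothing to clean up */
        abort();
    for (intptr_t i = 0; i < n_threads; i++)
        if (pthread_create(&t[i], NULL, worker, (void *)(i + 1)) != 0)
            abort();
    for (int i = 0; i < n_threads; i++) {
        void *res;
        pthread_join(t[i], &res);
        ok = ok && (intptr_t)res == 1;
    }
    pthread_key_delete(id_key);
    return ok;
}
```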

Fourth, an in-depth look at the thread-local storage mechanism

A deeper understanding of how thread-local storage is implemented helps in using its API.

In a typical implementation, two kinds of arrays are involved. The first is a global (process-wide) array, here called pthread_keys, that holds information about every thread-local storage key; a key's value is its index into this array.

Each element of the array is a structure with two fields: the first marks whether the element is in use, and the second stores a pointer to the destructor function registered for that key.

Each thread also contains an array, indexed by key, that holds pointers to the thread-specific data blocks allocated for that thread (that is, the value pointers stored by calling pthread_setspecific()).
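To make the two-array layout concrete, here is a toy, single-threaded model of it. It is not glibc's code: TOY_KEYS_MAX, struct toy_key_slot, struct toy_thread and the toy_* functions are hypothetical, and a real implementation also needs synchronization and protection against reusing deleted keys.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_KEYS_MAX 8   /* hypothetical small limit for the model */

/* Process-wide table indexed by key: in-use flag plus destructor. */
struct toy_key_slot {
    int in_use;
    void (*destructor)(void *);
};
static struct toy_key_slot toy_keys[TOY_KEYS_MAX];

/* Per-thread array: one value slot per key, all NULL at thread creation. */
struct toy_thread {
    void *specific[TOY_KEYS_MAX];
};

int toy_key_create(unsigned *key, void (*destructor)(void *))
{
    for (unsigned k = 0; k < TOY_KEYS_MAX; k++) {
        if (!toy_keys[k].in_use) {
            toy_keys[k].in_use = 1;
            toy_keys[k].destructor = destructor;
            *key = k;
            return 0;
        }
    }
    return -1;                              /* limit reached */
}

void toy_setspecific(struct toy_thread *t, unsigned key, void *value)
{
    assert(key < TOY_KEYS_MAX && toy_keys[key].in_use);
    t->specific[key] = value;
}

void *toy_getspecific(const struct toy_thread *t, unsigned key)
{
    assert(key < TOY_KEYS_MAX);
    return t->specific[key];
}

/* What thread exit does: call the destructor for each non-NULL value. */
void toy_thread_exit(struct toy_thread *t)
{
    for (unsigned k = 0; k < TOY_KEYS_MAX; k++) {
        void *v = t->specific[k];
        if (v != NULL && toy_keys[k].in_use && toy_keys[k].destructor) {
            t->specific[k] = NULL;          /* cleared before the call */
            toy_keys[k].destructor(v);
        }
    }
}
```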

Fifth, summary

The use of global or static variables is a common cause of thread-safety problems in multi-threaded programs. A common way to make such code thread-safe is to protect the data with mutexes, but this degrades concurrency, since only one thread at a time can read or write the data. If global and static variables can be avoided altogether, the program is thread-safe without locks and performance can improve greatly. If some data only ever needs to be accessed by a single thread, it can be handled with the thread-local storage mechanism. Although this mechanism has some cost in execution efficiency, that cost is negligible compared with using locks. A simple Linux C++ implementation of thread-local storage can be found at https://github.com/ApusApp/Swift/blob/master/swift/base/threadlocal.h; for a more detailed and efficient implementation, see the ThreadLocal implementation in Facebook's folly library. An even faster thread-local storage mechanism is __thread.

Sixth, defining thread-local variables with __thread

Linux also offers a more efficient thread-local storage method: defining variables with the __thread keyword. __thread is GCC's built-in thread-local storage facility (Thread-Local Storage), and its implementation is very efficient: it is faster than pthread_key_t, its access performance is comparable to that of global variables, and it is simpler to use. To create a thread-local variable, simply add the __thread specifier to the declaration of a global or static variable.
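A minimal sketch, assuming GCC or Clang on Linux with POSIX threads (tls_counter, count_up and run_thread_counter_demo are hypothetical names): two threads update a __thread counter without any lock, and neither sees the other's updates or the calling thread's copy.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Each thread gets its own zero-initialized copy; no lock is needed. */
static __thread long tls_counter;

static void *count_up(void *arg)
{
    long n = *(long *)arg;
    for (long i = 0; i < n; i++)
        tls_counter++;                /* touches only this thread's copy */
    *(long *)arg = tls_counter;       /* report the final per-thread value */
    return NULL;
}

/* Runs two threads that each increment tls_counter n times.
   Returns 1 if each saw exactly n and the caller's copy is unchanged. */
int run_thread_counter_demo(long n)
{
    long a = n, b = n;
    long before = tls_counter;
    pthread_t t1, t2;
    assert(n >= 0);
    if (pthread_create(&t1, NULL, count_up, &a) != 0 ||
        pthread_create(&t2, NULL, count_up, &b) != 0)
        abort();
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return a == n && b == n && tls_counter == before;
}
```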

Every variable declared with __thread has a separate copy in each thread, and the copies do not interfere with each other. A thread-local variable exists until its thread terminates, at which point its storage is released automatically. __thread cannot be used with every data type: it supports only POD (plain old data) [1] types and not class types, because it cannot automatically call constructors and destructors. __thread can qualify global variables and static variables inside functions, but not local (automatic) variables of functions or ordinary member variables of classes. In addition, a __thread variable can only be initialized with a compile-time constant; for example, __thread int i = 42; is valid, whereas initializing it with the result of a function call is not.
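The following sketch (hypothetical names, GCC or Clang assumed) shows a valid constant initializer and the fact that every new thread starts from that initial value, no matter what other threads have written to their own copies. Invalid forms are kept in comments because they would not compile.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Valid: initialized with a compile-time constant. */
static __thread int tls_level = 42;

/* Not valid (comments only, these would not compile):
 *   static __thread int bad1 = rand();   // C: initializer is not a constant
 *   __thread std::string bad2;           // C++: class type with ctor/dtor
 */

static void *read_level(void *arg)
{
    *(int *)arg = tls_level;          /* a fresh thread sees the initial value */
    return NULL;
}

/* Changes the caller's copy, then returns what a new thread observes. */
int level_seen_by_new_thread(void)
{
    int seen = 0;
    pthread_t t;
    tls_level = 7;                    /* affects only the calling thread */
    if (pthread_create(&t, NULL, read_level, &seen) != 0)
        abort();
    pthread_join(t, NULL);
    assert(tls_level == 7);
    return seen;
}
```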

In addition to the above, note the following points about declaring and using thread-local variables:

- If static or extern is used in the declaration, __thread must immediately follow it (for example, static __thread int x;).

- Like ordinary global or static variables, thread-local variables can be given an initial value when they are declared.

- The C address-of operator (&) can be used to get the address of a thread-local variable; the address refers to the calling thread's copy.
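A sketch of the declaration-order rule and of taking a thread-local variable's address, assuming GCC or Clang with POSIX threads (shared_tls, file_tls, report_address and tls_addresses_differ are hypothetical). The main thread's &shared_tls and a second thread's &shared_tls point at different copies.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

extern __thread int shared_tls;   /* extern first, then __thread */
__thread int shared_tls = 1;      /* the definition */
static __thread int file_tls;     /* static first, then __thread */
/* "__thread static int x;" is rejected: __thread must follow static/extern. */

static void *report_address(void *arg)
{
    *(uintptr_t *)arg = (uintptr_t)&shared_tls;   /* this thread's copy */
    return NULL;
}

/* Returns 1 if a second thread's &shared_tls differs from the caller's. */
int tls_addresses_differ(void)
{
    uintptr_t other = 0;
    pthread_t t;
    int *mine = &shared_tls;
    file_tls++;                   /* static __thread variables work the same way */
    if (pthread_create(&t, NULL, report_address, &other) != 0)
        abort();
    pthread_join(t, NULL);
    assert(other != 0);
    return other != (uintptr_t)mine;
}
```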

For __thread usage examples, see the implementation and unit tests of https://github.com/ApusApp/Swift/blob/master/swift/base/logging.cpp. For non-POD data types that need thread-local storage, a class that wraps pthread_key_t can be used instead; see the implementation and unit tests of https://github.com/ApusApp/Swift/blob/master/swift/base/threadlocal.h.

Seventh, thread-local variables on Windows

On Windows, thread-local variables are declared with the __declspec keyword. For example, __declspec(thread) int tls_i = 1; declares an integer thread-local variable and initializes it with a value.
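Code shared between Windows and Linux often hides this spelling difference behind a macro. The THREAD_LOCAL macro and bump_tls_i below are a hypothetical convention, not part of any SDK; only the __thread branch is exercised when the code is built with GCC or Clang.

```c
#include <assert.h>

/* MSVC spells it __declspec(thread); GCC/Clang spell it __thread.
   (C11 also provides _Thread_local.) */
#if defined(_MSC_VER)
#  define THREAD_LOCAL __declspec(thread)
#else
#  define THREAD_LOCAL __thread
#endif

THREAD_LOCAL int tls_i = 1;   /* same shape as the Windows declaration */

/* Increments the calling thread's copy and returns the new value. */
int bump_tls_i(void)
{
    assert(tls_i >= 1);
    return ++tls_i;
}
```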

When using this Visual C++ keyword to implement TLS, the following rules must be observed when declaring statically bound thread-local objects and variables:

- The thread attribute can be applied only to data declarations and definitions; it cannot be used on function declarations or definitions. For example, __declspec(thread) void func(); generates a compiler error.

- The thread attribute can be specified only on data items with static storage duration: global data objects (both static and extern), local static objects, and static data members of C++ classes. Automatic data objects cannot be declared with the thread attribute; for example, declaring __declspec(thread) int tls_i; inside a function body, or using the attribute on a function parameter, generates a compiler error.

- The declaration and the definition of a thread-local object must both specify the thread attribute. For example, extern int tls_i; followed by the definition __declspec(thread) int tls_i; generates an error, because the declaration and the definition differ.

- The thread attribute cannot be used as a type modifier. For example, char __declspec(thread) *ch; generates a compiler error.

- A C++ class itself cannot be declared with the thread attribute, although C++ class objects can be instantiated with it. For example, applying __declspec(thread) to a class declaration generates a compiler error, whereas declaring an object of class type with __declspec(thread) is allowed.

- The address of a thread-local object is not considered a constant, and neither is any expression involving such an address. In Standard C, this means the address of a thread-local variable cannot be used as an initializer for an object or pointer. For example, the C compiler flags int *p = &tls_i; at file scope, where tls_i is declared extern __declspec(thread) int tls_i;, as an error.

However, this restriction does not apply to C++. Because C++ allows all objects to be initialized dynamically, an object can be initialized with an expression that uses the address of a thread-local variable; this is done the same way thread-local objects are constructed. For example, the initialization above does not generate an error when compiled as a C++ source file. Note that the address of a thread-local variable is valid only as long as the thread in which the address was taken still exists.

- Standard C allows an object or variable to be initialized with an expression that refers to itself, but only for objects of non-static extent. Although C++ generally allows such self-referential expressions in dynamic initialization, this kind of initialization is not permitted for thread-local objects. For example, __declspec(thread) int tls_i = tls_i; is an error in both C and C++.

Note that a sizeof expression containing the object being initialized does not constitute a reference to itself, so __declspec(thread) int tls_i = sizeof(tls_i); is legal in both C and C++.


A Deep dive into (implicit) Thread Local Storage

Thread Local Storage (henceforth TLS) is pretty cool. It may appear simple at first glance, but a good and efficient TLS implementation requires concerted effort from the compiler, linker, dynamic linker, kernel, and language runtime.

On Linux, an excellent treatment of this topic is Ulrich Drepper's ELF Handling For Thread-Local Storage; this blog post is my take on the same topic, with a different emphasis in how the details are presented.

We'll limit the scope to x86-64, with a primary focus on Linux ELF, as it is the best documented, but will also touch on other hardware and platforms. The same principles apply to the other architectures anyway.

Buckle up, it’s a long ride!

Introduction

Ordinary explicit TLS (with the help of pthread_key_create, pthread_setspecific, and pthread_getspecific) is pretty easy to reason about: it can be thought of as a kind of two-dimensional hash map, tls_map[tid][key], where tid is the thread ID and key is the hash map key for a particular thread-local variable.

However, implicit TLS [1], where a variable is simply declared with __thread, seems a bit more magical. Its usage is almost too easy, and it makes you wonder if there's some sleight of hand going on to make it work.
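The original listing is not preserved here; the code below is a minimal stand-in of the kind being described, assuming GCC on x86-64 Linux. Only main_tls_var comes from the text; use_main_tls_var is a hypothetical stand-in for the body of main(). Built as an executable and disassembled (for example with objdump -d), the access is expected to compile to an %fs-relative mov, although the exact displacement depends on which other TLS variables the executable defines.

```c
#include <assert.h>

/* Implicit TLS: an ordinary-looking global, but each thread has its own copy. */
__thread int main_tls_var;

/* Hypothetical stand-in for the body of a tiny main(). */
int use_main_tls_var(void)
{
    main_tls_var = 2;
    assert(main_tls_var == 2);
    return main_tls_var;
}
```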

The Assembly

Like everything in C, the easiest way to see through the “magic” is to disassemble the code.

The magical line is mov %fs:0xfffffffffffffffc,%eax. For people who don't read assembly, this essentially means moving the 32-bit value stored at the memory address %fs:0xfffffffffffffffc into the register %eax.

Actually, even for someone well versed in assembly, this line is still somewhat unusual: it is uncommon to see instructions involving the segment registers FS/GS in modern x86 assembly.

It looks like, at least in this case, disassembling the code raises more mysteries than it solves. The strange use of the segment register %fs and the large constant 0xfffffffffffffffc seem particularly ominous.

The Thread Register

While some other CPU architectures have a dedicated register to hold thread-specific context (whatever that means), x86 enjoys no such luxury. Infamous for having only a small number of general-purpose registers, x86 requires programmers to be very prudent when planning register use.

Intel 80386 introduced FS and GS as user-defined segment registers without stipulating what they were for. Later, with the rise of multithreaded programming, people saw an opportunity to repurpose FS and GS as thread registers.

Note that this is x86-specific, and we won't go into the details of memory segmentation; suffice it to say that on x86-64, non-kernel programs use the FS or GS segment register to access per-thread context, including TLS (GS on Windows and macOS, FS on Linux and possibly the other Unix derivatives).

Now, armed with the knowledge that %fs most likely points to some sort of thread-specific context, let's try again to decode the instruction mov %fs:0xfffffffffffffffc,%eax. This mov instruction uses segment addressing, and the large constant 0xfffffffffffffffc is just -4 in two's complement form. So %fs:0xfffffffffffffffc simply means the 32-bit value at address thread_context_ptr - 4, where thread_context_ptr is whatever address %fs points to. For other architectures, one can substitute %fs with their equivalent thread register.

After the execution, the register EAX contains the value of main_tls_var .

On x86-64, user-land programs (Ring 3) can retrieve FS and GS, but they are not allowed to change the addresses stored in them. It's typically the operating system's job (Ring 0) to provide facilities for manipulating them indirectly.

On x86-64 Linux, the address stored in FS is managed through a Model Specific Register (MSR) called MSR_FS_BASE [2]. The kernel provides the syscall arch_prctl, which user-land programs can use to change FS and GS for the currently running thread.
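The same arch_prctl syscall can also read the FS base (ARCH_GET_FS), which makes it possible to check the negative-offset layout from user space. This is an x86-64 Linux-only sketch; demo_tls_var, fs_base and demo_tls_offset are hypothetical names, and it assumes glibc's layout in which the executable's TLS block sits just below the address held in FS.

```c
#define _GNU_SOURCE
#include <asm/prctl.h>     /* ARCH_GET_FS */
#include <assert.h>
#include <stdint.h>
#include <sys/syscall.h>   /* SYS_arch_prctl */
#include <unistd.h>        /* syscall() */

__thread int demo_tls_var;   /* lives in the executable's static TLS block */

/* x86-64 Linux only: asks the kernel for the calling thread's FS base. */
static uintptr_t fs_base(void)
{
    unsigned long base = 0;
    long rc = syscall(SYS_arch_prctl, ARCH_GET_FS, &base);
    assert(rc == 0);
    return (uintptr_t)base;
}

/* Byte offset of this thread's demo_tls_var relative to the FS base.
   Under the layout described in the text it is expected to be negative. */
long demo_tls_offset(void)
{
    return (long)((intptr_t)&demo_tls_var - (intptr_t)fs_base());
}
```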

Thread Specific Context

So far we’ve been hand waving the term thread specific context, so what really is this context thing?

Different platforms have different names for it: on Linux with glibc it is called the Thread Control Block (TCB), while on Windows it is called the Thread Information Block (TIB) or Thread Environment Block (TEB).

We will focus on TCB in this post, for more information about TIB/TEB, see the first part of Ken Johnson’s excellent blog series on Windows TLS.

Regardless of what it is called, the thread-specific context is a data structure that contains information and metadata to facilitate the management of a thread and its local storage (i.e. TLS).

So what is this TCB data structure?

On x86-64 Linux + glibc, for all intents and purposes, the TCB is struct pthread (sometimes called the thread descriptor), a glibc-internal data structure that is related, but not equivalent, to POSIX Threads.

At this point we know what FS points to and, vaguely, what the TCB is, but many questions remain. First and foremost, who allocates and sets up the TCB? We, the code authors, certainly did no such thing anywhere in the original source.

The Initialisation of TCB or TLS

As it turns out, TCB/TLS setup is done somewhat differently for statically linked and dynamically linked executables; the reason will become apparent later. For now, let's focus on dynamically linked executables, as they are by far the most common way people distribute software.

Adding more complication to the mix, TLS is also initialised differently for the main thread and for the threads started later in the execution. This makes sense if you stop and think about it: the main thread is created by the kernel, whereas non-main threads are started later by the program itself.

When it comes to dynamically linked ELF programs, it's useful to know that once they are loaded and mapped into memory, the kernel takes its hands off and passes the execution baton to the dynamic linker (ld.so on Linux, dyld on macOS).

Since ld.so is part of glibc, the next step is to open up the guts of glibc and fish around to see if we can dig up anything juicy, but where to look?

Remembering our earlier hypothesis that the runtime would use arch_prctl to change the FS register, let's grep the glibc source for arch_prctl.

After wading through several false-positive hits, we arrive at the macro definition TLS_INIT_TP, which uses inline assembly to invoke the arch_prctl syscall directly and is responsible for updating the FS register to point to the TCB.

With this macro as an anchor and generous use of grep, it becomes clear that the main thread's TLS is set up in the function init_tls, which calls _dl_allocate_tls_storage to allocate the TCB (struct pthread) and eventually invokes the aforementioned TLS_INIT_TP macro to bind the TCB to the main thread.

This finding confirms our previous hypothesis that the dynamic linker runtime allocates and sets up the TCB or struct pthread and then uses arch_prctl to bind the TLS to at least the main thread.

We will look at how TLS is set up in the other threads later; before that, there's still a glaring question we've been ignoring until now: where does that mysterious -4 come from?

The Internals of ELF TLS

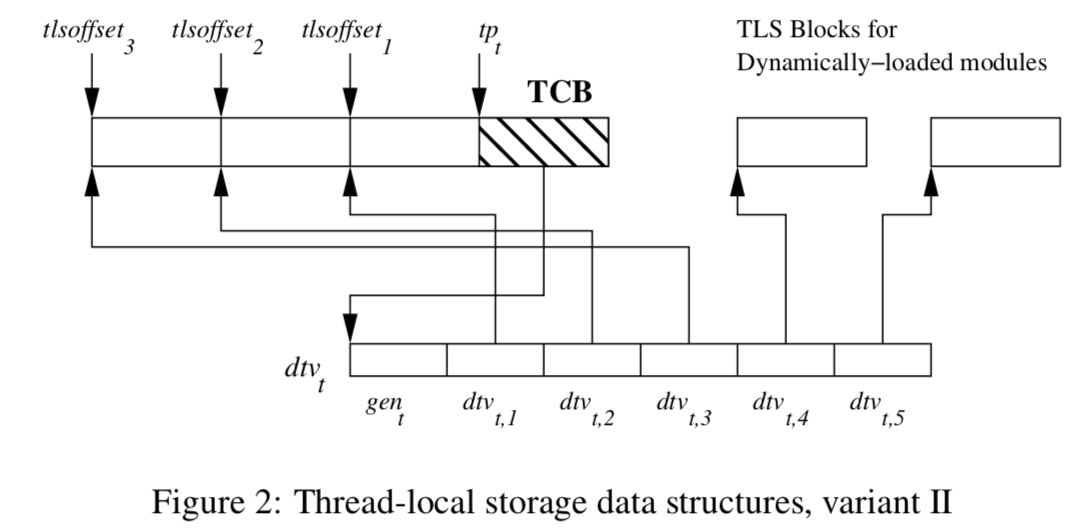

Depending on the platforms and architectures, there are two variants of TLS/TCB structure, but for x86-64, we will only consider variant 2:

Despite its simplicity, the diagram packs quite a lot of information; let's decode it.

tp_t is the thread register, aka the thread pointer (i.e. what FS points to), for thread t, and dtv_t is the Dynamic Thread Vector, which can be thought of as a two-dimensional array that can address any TLS variable by a module ID and a TLS variable offset. A TLS variable offset is local to a module and is bound to each TLS variable at build time. In glibc, a module can be either an executable or a dynamic shared object, so a module ID is an index number for a loaded ELF object in a process.

Note that for a given running process, the module ID of the main executable is always 1, whereas shared objects don't know their module IDs until they are loaded and assigned IDs by the dynamic linker [3].

As for dtv_{t,1}, dtv_{t,2}, etc., each of them holds the TLS information for a loaded module (with module ID 1, 2, etc.) and points to a TLS block that contains that module's TLS variables. A specific TLS variable can be located within this TLS block given its offset.

You might have noticed that the first few elements of the DTV array point to the white portion before the TCB, whereas the last couple point to the other TLS blocks labeled Dynamically-Loaded Modules. “Dynamically-loaded modules” here doesn't mean every dynamic shared object; it refers only to the shared objects loaded by explicitly calling dlopen.

The white portion before the shaded TCB can be subdivided into TLS blocks (one block per loaded module, delineated by tlsoffset_1, tlsoffset_2, etc.), containing all the TLS variables from the executable and from the shared objects loaded before main() starts. This portion is called static TLS in glibc parlance; it is called “static” to distinguish it from the TLS of modules loaded via dlopen.

The TLS variables of these “static” modules (i.e. DT_NEEDED entries) are reachable via a negative offset from the thread register that stays constant throughout the lifetime of the running process. The instruction mov %fs:0xfffffffffffffffc,%eax, for example, falls under this category.

Since the module ID of the executable itself is always 1, dtv_{t,1} (i.e. dtv[1]) always points to the executable's own TLS block. Additionally, as the diagram shows, the dynamic linker always places the executable's TLS block right next to the TCB, presumably to keep the executable's TLS variables at a predictable location and relatively hot in the cache.

dtv_t is a member of tcbhead_t, which in turn is the first member of the TCB, struct pthread.

DTV has a deceptively simple data structure, but it's an unfortunate victim of many C trickeries [4] (e.g. negative pointer arithmetic, type aliasing, badly named member variables, etc.).

The first element of the dtv array in the TCB is a generation counter gen_t, which serves as a version number: it tells the runtime when the DTV needs to be resized or rebuilt. Every time the program calls dlopen or dlclose, the global generation counter is incremented. Whenever the runtime detects that a DTV is used while its gen_t doesn't match the global generation number, the DTV is updated and its gen_t is set to the current global generation number.

As we touched on above, the second element of dtv (i.e. dtv[1] ) is pointing to the main executable’s TLS block right next to the TCB in the static TLS.

In short, the dtv array looks like this: dtv[0] holds the generation counter gen_t, and dtv[1], dtv[2], etc. each point to the TLS block of the module with that ID, with dtv[1] belonging to the main executable.

One property of this static TLS and DTV configuration is that any variable in a particular thread's static TLS is reachable either via the thread's DTV, as dtv[ti_module].pointer + ti_offset, or via a negative offset from the TCB (e.g. mov %fs:0xffffffffffxxxxxx,%eax, where 0xffffffffffxxxxxx is the negative offset).

It's important to note that dtv[ti_module].pointer + ti_offset is the most general way to access TLS variables, regardless of whether they reside in static TLS or dynamic TLS. We will see why this is relevant later.
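A toy model of the DTV may help; it is a simplification that loosely mirrors the shape of glibc's DTV entry type (a union of a counter and a pointer) and is not glibc's actual code. toy_dtv_t, toy_global_generation and the toy_* functions are hypothetical; the model shows the generation check and the general dtv[ti_module].pointer + ti_offset access path.

```c
#include <assert.h>
#include <stddef.h>

/* One DTV slot: slot 0 holds the generation counter,
   slot m points at the start of module m's TLS block. */
typedef union {
    size_t counter;                  /* dtv[0]: generation number gen_t */
    struct {
        void *val;                   /* dtv[m]: module m's TLS block */
    } pointer;
} toy_dtv_t;

/* Hypothetical global generation, bumped whenever dlopen/dlclose
   adds or removes a module that uses TLS. */
static size_t toy_global_generation = 1;

void toy_note_dlopen_or_dlclose(void)
{
    toy_global_generation++;
}

/* A thread's DTV is stale when its gen_t lags the global generation. */
int toy_dtv_is_stale(const toy_dtv_t *dtv)
{
    return dtv[0].counter != toy_global_generation;
}

/* The general access path: dtv[ti_module].pointer + ti_offset. */
void *toy_tls_addr(const toy_dtv_t *dtv, size_t ti_module, size_t ti_offset)
{
    assert(ti_module >= 1 && !toy_dtv_is_stale(dtv));
    return (char *)dtv[ti_module].pointer.val + ti_offset;
}
```

A real runtime would rebuild a stale DTV before using it; the model only detects staleness.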

The Initialisation of DTV and static TLS

So far we've journeyed from assembly to the kernel and on to the dynamic linker. We've covered a lot of ground and seem to be getting closer to understanding the weird mov instruction, but questions still remain: how is the static TLS constructed, and where does the -4 (0xfffffffffffffffc) come from?

Let's explore the static TLS now and find out what all the fuss about the -4 is.

As part of its bookkeeping, the dynamic linker maintains an (intrusive) linked list, link_map, which keeps track of all the loaded modules and their metadata.

After the dynamic linker is done mapping the modules into the program's address space, it calls init_tls, which uses the link_map list to fill in dl_tls_dtv_slotinfo_list, another global linked list holding metadata for the modules that use TLS. It then calls _dl_determine_tlsoffset, which tells each loaded module at what offset (l_tls_offset) in the static TLS its TLS block should be placed. Later on, it calls _dl_allocate_tls_storage to allocate (but not initialise) the static TLS and DTV.

Further along in the dynamic linker setup process, _dl_allocate_tls_init will be called to finally initialise the static TLS and DTV for the main thread.

The first part of the function sanity-checks the size of the DTV array, while the second part sets up the DTV with the appropriate offsets into the static TLS, copies each module's initialised TLS data (.tdata) into its static TLS block, and zero-fills the rest of the block (.tbss).

There it is: this is how TLS and the DTV are set up! But who decides that -4 (0xfffffffffffffffc) is the right offset to reach our TLS variable main_tls_var?
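The copy-then-zero step can be sketched as follows. This is a simplified illustration in the spirit of _dl_allocate_tls_init, not its code; toy_init_tls_block is a hypothetical name, and the init image stands for a module's .tdata contents.

```c
#include <assert.h>
#include <string.h>

/* Initialises one module's TLS block: copy the .tdata init image,
   then zero the .tbss tail. block_size is the full TLS size (tdata + tbss). */
void toy_init_tls_block(void *block, const void *init_image,
                        size_t init_image_size, size_t block_size)
{
    assert(init_image_size <= block_size);
    memcpy(block, init_image, init_image_size);                 /* .tdata */
    memset((char *)block + init_image_size, 0,
           block_size - init_image_size);                       /* .tbss */
}
```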

The answer lies in the rule we touched on in the previous section: the main executable’s TLS block is always placed right before the TCB. Essentially the compiler and the dynamic linker conspire together to come up with that number, because the executable’s TLS block and TLS offset are both known ahead of time.

The cooperation relies on the compiler knowing the following “facts” at build time, and the dynamic linker guaranteeing these “facts” are true at runtime:

- The FS register points to the TCB

- The TLS variables’ offsets set by the compiler won’t change during runtime

- The executable’s TLS block sits right before the TCB
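Putting these facts together, the -4 can be reproduced with a simplified version of the variant II placement rule: each module's block is placed below the previous one, with its end offset rounded up to the block's alignment. The sketch assumes this simplified rule (it ignores glibc's first-byte alignment adjustment and its reuse of gaps), and struct toy_tls_module and the toy_* functions are hypothetical. For an executable whose only TLS variable is a 4-byte int, the block ends up at tp - 4, which is 0xfffffffffffffffc as a 64-bit displacement.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical description of one module's TLS segment. */
struct toy_tls_module {
    size_t block_size;   /* size of .tdata + .tbss */
    size_t align;        /* power of two */
    size_t tls_offset;   /* output: block starts at tp - tls_offset */
};

static size_t round_up(size_t x, size_t align)
{
    return (x + align - 1) & ~(align - 1);
}

/* Simplified variant II placement: the first module (the executable)
   ends right below the thread pointer; later modules go further down. */
void toy_determine_tlsoffset(struct toy_tls_module *mods, size_t n)
{
    size_t offset = 0;
    for (size_t i = 0; i < n; i++) {
        assert(mods[i].align != 0 && (mods[i].align & (mods[i].align - 1)) == 0);
        offset = round_up(offset + mods[i].block_size, mods[i].align);
        mods[i].tls_offset = offset;
    }
}

/* Constant displacement from %fs for a variable at var_offset in module m. */
int64_t toy_fs_displacement(const struct toy_tls_module *m, size_t var_offset)
{
    return (int64_t)var_offset - (int64_t)m->tls_offset;
}
```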

TLS access in executables and shared objects

You might have noticed that so far we've only shown executables using TLS variables defined within the executables themselves. Would accessing TLS variables work the same way in the other scenarios?

The answer should be a little clearer after the last section: no, because TLS in the main executable is treated specially by both the compiler and the dynamic linker.

Depending on where a TLS variable is defined and accessed, there are four different cases to examine:

- TLS variable locally defined and used within an executable

- TLS variable externally defined in a shared object but used in an executable

- TLS variable locally defined in a shared object and used in the same shared object

- TLS variable externally defined in a shared object and used in an arbitrary shared object

Let’s investigate the four scenarios one by one.

Case 1: TLS variable locally defined and used within an executable

This is the case we've studied so far. Quick recap: this type of TLS is accessed in the form mov %fs:0xffffffffffxxxxxx,%xxx.

Case 2: TLS variable externally defined in a shared object but used in an executable

The code configuration for this scenario is as follows.

A TLS variable is defined in library code:
