- Meaning of «[: too many arguments» error from if [] (square brackets)

- Easy fix

- Also beware of the [: unary operator expected error

- How to get around the Linux «Too Many Arguments» limit

- bash: /usr/bin/rm: Argument list too long – Solution

- The Error

- What does “argument list too long” indicate?

- The Solution

- [: too many arguments

- «Argument list too long»: How do I deal with it, without changing my command?


Meaning of «[: too many arguments» error from if [] (square brackets)

I couldn’t find one simple, straightforward resource spelling out the meaning of, and the fix for, the following Bash error, so I’m posting what I found after researching it.

The error:

[: too many arguments

Google-friendly version: bash open square bracket colon too many arguments.

Context: an if condition in single square brackets with a simple comparison operator such as equals or greater than, for example:
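Below is a minimal reproduction of that situation, assuming bash. VARIABLE stands in for a value captured from some command, and the value used here is hypothetical. The sketch uses =, the POSIX spelling of string equality; bash’s [ also accepts ==.

```shell
#!/usr/bin/env bash
# Reproduce "[: too many arguments" with an unquoted variable.
# VARIABLE is a hypothetical stand-in for, e.g., the output of a command.
VARIABLE='a b c'

# Unquoted, the shell expands this to  [ a b c = 0 ]  before [ runs,
# so [ receives five operands and prints "[: too many arguments"
# on stderr; its exit status is 2 and the then-branch is skipped.
if [ $VARIABLE = 0 ]; then
  echo "action"
fi
```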

If $VARIABLE is left unquoted and holds a string containing spaces or other special characters, and single square brackets are used ([ is equivalent to the test command), then the shell splits the string into multiple words before the test runs. Glob characters such as * may also expand to file names. Each resulting word is treated as a separate argument.

So that one variable is split out into many arguments:
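You can see the splitting directly with a hypothetical helper, count_args, that prints how many arguments it received. This assumes bash.

```shell
#!/usr/bin/env bash
# count_args is a hypothetical helper: it prints the number of
# arguments it was called with.
count_args() { echo "$#"; }

VARIABLE='a b c'

count_args $VARIABLE     # unquoted: split into a, b, c -> prints 3
count_args "$VARIABLE"   # quoted: one argument 'a b c'  -> prints 1

# So  [ $VARIABLE = 0 ]  really runs  [ a b c = 0 ], not  [ 'a b c' = 0 ].
```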

The same is true for an unquoted command substitution, such as $(some_command), whose output contains spaces or other special characters.

Easy fix

Wrap the variable expansion in double quotes, which keeps it as one string and therefore one argument. For example:
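Here is a sketch of the quoted version, using a hypothetical value that contains spaces and assuming bash. With the quotes, [ receives exactly three operands, so the comparison simply evaluates to false instead of failing.

```shell
#!/usr/bin/env bash
VARIABLE='a b c'   # hypothetical value containing spaces

# Quoted, [ receives exactly three operands:  'a b c'  =  0
if [ "$VARIABLE" = 0 ]; then
  echo "equal"
else
  echo "not equal"   # this branch runs, and no error is printed
fi
```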

Simple as that. But skip to «Also beware…» below if you also can’t guarantee that your variable won’t be an empty string, or a string that contains nothing but whitespace.

Alternatively, use double square brackets ([[ ... ]], the “new test” keyword), inside which bash does not word-split unquoted variables.

However, [[ exists only in bash, ksh and zsh. It may therefore not work under a plain POSIX shell invoked as /bin/sh, such as dash, which is /bin/sh on Debian and Ubuntu.

This means that on some systems it might work from the console but not when called elsewhere, such as from cron, depending on how everything is configured.

It would look like this:
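Here is a sketch with double square brackets, assuming bash and a hypothetical value. Because [[ is part of bash’s own syntax rather than a command, $VARIABLE is not word-split here even without quotes. One difference to keep in mind: an unquoted right-hand side of == inside [[ is treated as a glob pattern.

```shell
#!/usr/bin/env bash
VARIABLE='a b c'   # hypothetical value containing spaces

# [[ ]] does not word-split $VARIABLE, so no quotes are needed on the left.
if [[ $VARIABLE == 0 ]]; then
  echo "equal"
else
  echo "not equal"
fi

# Note: an unquoted right-hand side is a pattern, so this test is true.
[[ $VARIABLE == a* ]] && echo "matches the pattern a*"
```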

Suppose your command uses double square brackets like this and works from the console, but you get errors in logs. Either replace [[ with one of the alternatives suggested here, or make sure that whatever runs your script uses a shell that supports [[ (the “new test”), for example by starting the script with #!/bin/bash.

Also beware of the [: unary operator expected error

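If you’re seeing the «too many arguments» error, chances are you’re testing a value you cannot predict, such as a command’s output. That value might also be empty (or all whitespace). An unquoted expansion of it then produces zero words, so [ $VARIABLE == 0 ] becomes [ == 0 ] and fails with [: ==: unary operator expected. Quoting avoids that error, because an empty string is then passed as one empty argument. However, the comparison then silently tests the empty string, and a numeric test such as [ "$VARIABLE" -eq 0 ] fails with integer expression expected.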
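The sketch below shows the empty-value case, assuming bash. The empty value is hypothetical; think of a command that printed nothing. The unquoted test fails with status 2, and the quoted test returns an ordinary false (status 1).

```shell
#!/usr/bin/env bash
VARIABLE=''   # hypothetical: a command whose output was empty

# Unquoted, $VARIABLE expands to no words at all, so this runs  [ = 0 ]
# and bash prints "[: =: unary operator expected" (exit status 2).
[ $VARIABLE = 0 ]
echo "unquoted: status $?"

# Quoted, [ receives '' = 0: a valid comparison that is false (status 1).
[ "$VARIABLE" = 0 ]
echo "quoted: status $?"
```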

If you’re used to other languages, this is a gotcha: you don’t expect the contents of a variable to be effectively pasted into the code like this before it is evaluated.

Here’s an example that prevents both the [: too many arguments and the [: unary operator expected errors. It replaces the value with a default if it is empty (in this example, 0) and wraps double quotes around the whole expansion:
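Below is a minimal sketch of that pattern, assuming bash; the default 0 and the empty value are illustrative. ${VARIABLE:-0} expands to 0 when VARIABLE is unset or empty. Note that it does not replace a value made only of spaces, which stays a non-empty string.

```shell
#!/usr/bin/env bash
VARIABLE=''   # hypothetical: empty output from some command

# ${VARIABLE:-0} substitutes 0 for an unset or empty value; the outer
# quotes keep a value with spaces as a single operand.
if [ "${VARIABLE:-0}" = 0 ]; then
  echo "action"   # runs here, because the empty value became 0
fi
```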

(Here the action happens if $VARIABLE is 0, empty or unset. Naturally, change the default value 0 to something else if you want different behaviour.)

Final note: since [ is a shortcut for test, all of the above also applies to the errors test: too many arguments and test: unary operator expected.

Source

How to get around the Linux «Too Many Arguments» limit

I have to pass 256 KB of text as an argument to the «aws sqs» command, but I am running into a command-line limit at around 140 KB. Many places say that this was solved in the Linux kernel as of version 2.6.23.

But I cannot get it to work. I am using kernel 3.14.48-33.39.amzn1.x86_64.

Here’s a simple example to test:

And the foo script is just:

And the output for me is:

So the question is: how do I modify the testCL script so that it can pass 256 KB of data? By the way, I have tried adding ulimit -s 65536 to the script and it didn’t help.

And if this is simply impossible I can deal with that, but can you shed light on this quote from my link above?

«While Linux is not Plan 9, in 2.6.23 Linux is adding variable argument length. Theoretically you shouldn’t hit frequently «argument list too long» errors again, but this patch also limits the maximum argument length to 25% of the maximum stack limit (ulimit -s).»

I was finally able to pass a 256 KB single argument; see update (4) below.

The coupling of ARG_MAX to ulimit -s / 4 came with the introduction of MAX_ARG_STRLEN as the maximum length of a single argument.

MAX_ARG_STRLEN is defined as 32 times the page size in linux/include/uapi/linux/binfmts.h: #define MAX_ARG_STRLEN (PAGE_SIZE * 32).

The default page size is 4 KB, so you cannot pass a single argument longer than 32 × 4096 = 131072 bytes (128 KB), including its terminating NUL byte.
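Here is a minimal sketch for checking this on your own machine, assuming Linux, bash and GNU coreutils. It derives the expected per-argument limit from getconf PAGESIZE, then tries to pass one argument of a given length to an external command. The helper names make_arg and try_len are hypothetical. Because the limit counts the terminating NUL, the longest single argument that should get through is one byte shorter than 32 pages (131071 bytes with 4 KB pages).

```shell
#!/usr/bin/env bash
# Sketch: probe the per-argument limit (MAX_ARG_STRLEN) on Linux.
# Assumes bash plus GNU coreutils (getconf, head, tr, env).

page_size=$(getconf PAGESIZE)
max_arg_strlen=$(( page_size * 32 ))   # PAGE_SIZE * 32, as in binfmts.h
echo "page size: $page_size bytes, MAX_ARG_STRLEN: $max_arg_strlen bytes"

# make_arg LEN: print LEN 'a' characters (hypothetical helper).
make_arg() {
  head -c "$1" /dev/zero | tr '\0' a
}

# try_len LEN: succeed if an external command accepts one LEN-byte argument.
# env is a separate program, so execve() and its limits are involved;
# shell builtins such as echo never reach execve() and are not limited.
try_len() {
  local arg
  arg=$(make_arg "$1")
  env true "$arg" 2>/dev/null
}

# The limit includes the trailing NUL, so max_arg_strlen - 1 bytes is the
# longest single argument that should be accepted.
for len in $(( max_arg_strlen - 1 )) "$max_arg_strlen"; do
  if try_len "$len"; then
    echo "$len bytes: accepted"
  else
    echo "$len bytes: Argument list too long"
  fi
done
```

Raising ulimit -s increases the total ARG_MAX but not this per-argument cap, which is consistent with ulimit -s 65536 making no difference in the question.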

I can’t try it now, but switching to huge page mode (page size 4 MB), if your system supports it, might solve this problem.

For more detailed information and references see this answer to a similar question on Unix & Linux SE.

(1) According to this answer one can change the page size of x86_64 Linux to 1 MB by enabling CONFIG_TRANSPARENT_HUGEPAGE and setting CONFIG_TRANSPARENT_HUGEPAGE_MADVISE to n in the kernel config.

(2) After recompiling my kernel with the above configuration changes getconf PAGESIZE still returns 4096. According to this answer CONFIG_HUGETLB_PAGE is also needed which I could pull in via CONFIG_HUGETLBFS . I am recompiling now and will test again.

(3) I recompiled my kernel with CONFIG_HUGETLBFS enabled, and /proc/meminfo now contains the HugePages_* entries mentioned in the corresponding section of the kernel documentation. However, the page size reported by getconf PAGESIZE is still unchanged. So while I should now be able to request huge pages via mmap calls, the kernel’s default page size, which determines MAX_ARG_STRLEN, is still fixed at 4 KB.

(4) I modified linux/include/uapi/linux/binfmts.h to #define MAX_ARG_STRLEN (PAGE_SIZE * 64), recompiled my kernel, and now your code produces:

So now the limit moved from 128 KB to 256 KB as expected. I don’t know about potential side effects though. As far as I can tell, my system seems to run just fine.

bash: /usr/bin/rm: Argument list too long – Solution

Over time, the storage used on the Linux systems you manage will grow. As a result, at some point you will try to delete, move, search or otherwise manipulate thousands of files using commands such as rm, cp, ls and mv. All of these are subject to a limit on the length of their argument list, so you will eventually come across the “Argument list too long” error detailed below.

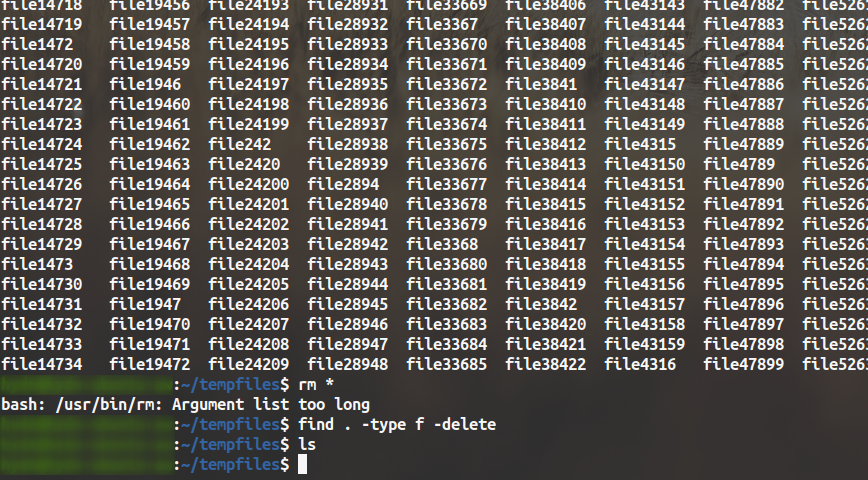

The Error

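Running a command such as rm * in a directory that holds a very large number of files fails with:

bash: /usr/bin/rm: Argument list too long

All external commands, such as rm, ls, mv and cp, are subject to this limitation. Shell builtins such as echo and printf are not, because they are not started through exec.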

What does “argument list too long” indicate?

“Argument list too long” indicates that the arguments passed to a single command exceed the ARG_MAX limit. ARG_MAX defines the maximum total length of the arguments, including environment data, that can be passed to the exec family of functions.

An argument, also called a command-line argument, is input given to a command to help control what that command does. Arguments are entered in the terminal or console after the command name. Multiple arguments can be used together; they are processed in the order they were typed, left to right.

This limit on the length of a command’s arguments is imposed by the operating system. You can check it on your Linux system with the command getconf ARG_MAX, which prints the limit in bytes. For example, it prints 2097152 (2 MB) on a Linux system with the common 8 MB stack limit.

The “argument list too long” error means that you’ve exceeded the maximum command-line length allowed for arguments in a command.
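To see how close a particular glob comes to the limit, you can measure its expansion with a shell builtin. A builtin never goes through exec, so it never triggers the error. The sketch below assumes bash on a Linux/glibc system; glob_bytes is a hypothetical helper. The kernel also counts the environment and a pointer per argument, so treat the result as a lower bound on what rm * would need.

```shell
#!/usr/bin/env bash
# Sketch: compare the size of a glob expansion with ARG_MAX.

arg_max=$(getconf ARG_MAX)
echo "ARG_MAX: $arg_max bytes"

# glob_bytes WORD...: total bytes of the words, counting one terminating
# NUL per word. printf is a bash builtin, so this works even when
# "rm *" would fail with "Argument list too long".
glob_bytes() {
  printf '%s\0' "$@" | wc -c
}

# Hypothetical usage: measure "*" in the current directory.
used=$(glob_bytes *)
echo "'*' here expands to at least $used bytes of arguments"
if (( used > arg_max )); then
  echo "rm * would fail here with: Argument list too long"
fi
```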

The Solution

There are a number of solutions to this problem (bash: /usr/bin/rm: Argument list too long).

Remove the folder itself then recreate it.

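If you are trying to delete ALL files and folders in a directory, then instead of using the wildcard * for removal (e.g. rm *), remove the directory itself with rm -r followed by the directory’s path. This passes a single argument no matter how many files the directory contains.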

If you still need that directory, recreate it with the mkdir command.
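Here is a minimal sketch of this approach in a throwaway directory created with mktemp, which stands in for your real directory. Note that rm -r also removes hidden files and subdirectories. The recreated directory gets your default owner and umask-derived permissions, so reapply them if they mattered.

```shell
#!/usr/bin/env bash
# Sketch: empty a directory by removing and recreating it, so no "*"
# glob (and no huge argument list) is ever built.
set -euo pipefail

# Hypothetical directory standing in for the one you want to empty.
dir=$(mktemp -d)
touch "$dir"/file{1..5000}.log      # simulate many files

rm -r -- "$dir"    # one argument, regardless of how many files it holds
mkdir -- "$dir"    # recreate it, now empty

ls -A -- "$dir" | wc -l   # prints 0
```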

Mass delete files using the find command:

You can use the find command to find every file and delete it:
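Below is a minimal sketch using find’s -delete action, which GNU and BSD find support but which is not part of POSIX. find walks the directory itself, so no long argument list is ever built. The temporary directory is a hypothetical stand-in for yours. -type f removes regular files in subdirectories too, but leaves the directories themselves.

```shell
#!/usr/bin/env bash
# Sketch: delete every regular file under a directory with find.
set -euo pipefail

dir=$(mktemp -d)                  # hypothetical target directory
touch "$dir"/file{1..3000}.tmp    # simulate many files
mkdir "$dir/sub" && touch "$dir/sub/nested.tmp"

# find visits the files itself and unlinks them one by one, so the
# ARG_MAX limit never comes into play.
find "$dir" -type f -delete

find "$dir" -type f | wc -l       # prints 0; "$dir/sub" still exists
```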

Or, to delete only specific file types (e.g., .txt files), use something like:
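The sketch below restricts deletion to one file type, again in a hypothetical temporary directory. The pattern is quoted so that find expands it, not the shell. For find implementations without -delete, the POSIX form -exec rm -- {} + also works; it batches file names into as few rm calls as the limit allows.

```shell
#!/usr/bin/env bash
# Sketch: delete only .txt files, leaving other files alone.
set -euo pipefail

dir=$(mktemp -d)                          # hypothetical target directory
touch "$dir"/note{1..2000}.txt "$dir"/keep.log

# Quote '*.txt' so the shell passes the pattern to find unexpanded.
find "$dir" -type f -name '*.txt' -delete

# POSIX alternative to -delete:
# find "$dir" -type f -name '*.txt' -exec rm -- {} +

ls -- "$dir"    # prints keep.log
```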

[: too many arguments

line 5: [: too many arguments

line 5: [: too many arguments

I read about that error here, but could not figure out the cause of my error.

-eq is for comparing integers, not strings.

Try using double quotes and the equal sign:
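The sketch below shows the difference, assuming bash; the variable names and values are hypothetical. = compares strings, and -eq compares integers and fails with integer expression expected if an operand is not a number. In both cases, quoting keeps each operand a single argument.

```shell
#!/usr/bin/env bash
# Sketch: string vs integer comparison inside [ ].
a='12 MB'   # hypothetical values containing a space
b='12 MB'

# String comparison: quotes keep '12 MB' as one operand.
if [ "$a" = "$b" ]; then
  echo "same string"
fi

# Integer comparison: both operands must be integers.
x=007; y=7
if [ "$x" -eq "$y" ]; then
  echo "same number"        # 007 and 7 are numerically equal
fi
if [ "$x" = "$y" ]; then
  echo "same string"
else
  echo "different strings"  # but as strings they differ
fi
```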

Expanding a bit on what Jim has already said.

Your first problem (too many arguments caused by improperly quoting your operands) can be fixed by changing:

so you will be comparing the actual file sizes in bytes (instead of possibly in tenths of kilobytes, megabytes, gigabytes, terabytes, or petabytes).

Your fourth problem (assuming that the file size will change immediately between two adjacent invocations of the above du command at the start of your script) can be fixed by moving the sleep 5 from the end of your loop to the start of your loop.

That gets us to a modified script that looks something like:

But, I have absolutely no idea why you want to run the command xdotool getactivewindow key Ctrl every five seconds while the size of a seemingly unrelated file is changing and not run it at all if the file has stopped growing before your script is started.

I hope this helps. But, since I don’t understand what you’re trying to do, I may have misread everything you’re trying to do.

du gives file sizes in «blocks», but that’s certainly more precise than megabytes.

Here, having multiple commands between while and do is an alternative to a while true loop with break.

Only the exit status of the last command before do counts.
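The sketch below applies that idea to this thread’s loop, assuming bash and GNU stat (stat -c %s prints the size in bytes from a single stat() call). The function name while_growing and its arguments are hypothetical. The action is passed in as a command rather than hard-coding xdotool. Because the list between while and do ends with the comparison, its exit status decides whether the loop body runs again.

```shell
#!/usr/bin/env bash
# Sketch: run a command every INTERVAL seconds for as long as FILE grows.
# Assumes GNU stat (stat -c %s prints the size in bytes).

# while_growing FILE INTERVAL CMD [ARGS...]  (hypothetical helper)
while_growing() {
  local file=$1 interval=$2 prev cur
  shift 2
  prev=$(stat -c %s -- "$file") || return
  # Several commands between "while" and "do": sleep first, then read
  # the new size; only the last command's exit status (the comparison)
  # decides whether the loop body runs again.
  while sleep "$interval"
        cur=$(stat -c %s -- "$file")
        [ "$cur" -gt "$prev" ]
  do
    "$@"          # the action to repeat while the file grows
    prev=$cur
  done
}
```

For this thread, the call would be something like while_growing /home/andy/Downloads/myfile.iso 5 xdotool getactivewindow key Ctrl.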

I simply want to know if a download is occurring in Firefox.

If download is occurring then do not go into a suspend state.

- As this is in the Ubuntu sub-forum: du has a -b (size in bytes) option.
- If you want the size of a file in bytes, surely the most accessible (and therefore most portable) option is to use wc -c.
- As you are using bash, surely the [[ ... ]] construct is better, as you don’t have to quote variables.
- If you are using numeric comparison in bash, the (( ... )) construct is more readable.

Absolutely nothing in the script you have shown us in this thread will tell you whether or not Firefox is downloading a file. The exception is if it happens to be downloading a single file named /home/andy/Downloads/myfile.iso. Another exception would be if something going on behind the scenes made the command xdotool getactivewindow key Ctrl change the size of /home/andy/Downloads/myfile.iso while Firefox is downloading a file.

Does running the command xdotool getactivewindow key Ctrl cause the size of /home/andy/Downloads/myfile.iso to change?

Note that MadeInGermany is correct about du -s reporting file sizes as a number of blocks. These are 512-byte blocks if the version of du on your Ubuntu system adheres to the standards, but on most Linux systems you will quite likely get counts of a larger block size.

If you want to detect small increments of change, please do not use wc -c pathname as suggested by apmcd47. That can significantly increase the CPU and I/O load on your system if you are downloading a large file. Using ls -n pathname would be a much better choice. It reports the file size in bytes and only has to do a stat() on the file to get it, whereas wc -c pathname may be implemented by wc opening, reading and counting every byte in pathname. (GNU wc can often take a regular file’s size from fstat() instead, but that is an optimization, not a guarantee.)

Note that ls -n output is like ls -l output, except that it prints the file owner’s user ID and the file group’s group ID instead of looking up and printing the owner’s user name and group name. It is therefore slightly faster and uses fewer system resources to get the job done. If you were using a UNIX system instead of a Linux system, I’d suggest ls -og, which avoids printing user and group names or IDs completely.
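The sketch below compares the byte-count approaches mentioned in this thread on a throwaway file, assuming GNU coreutils. ls -n and stat take the size from the file’s metadata, du -b reports the apparent size in bytes, and wc -c may read the data. The temporary file and its 12345-byte size are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: four ways to get a file's size in bytes (GNU coreutils).
set -euo pipefail

f=$(mktemp)                                  # hypothetical file
head -c 12345 /dev/zero > "$f"               # make it 12345 bytes

size_ls=$(ls -n -- "$f" | awk '{print $5}')  # field 5 of ls -n is the size
size_stat=$(stat -c %s -- "$f")              # one stat() call
size_du=$(du -b -- "$f" | cut -f1)           # apparent size in bytes
size_wc=$(wc -c < "$f")                      # may read the whole file

echo "ls -n: $size_ls  stat: $size_stat  du -b: $size_du  wc -c: $size_wc"
rm -f -- "$f"
```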

«Argument list too long»: How do I deal with it, without changing my command?

When I run a command like ls */*/*/*/*.jpg, I get the error «Argument list too long».

I know why this happens: it is because there is a kernel limit on the amount of space for arguments to a command. The standard advice is to change the command I use, to avoid requiring so much space for arguments (e.g., use find and xargs ).

What if I don’t want to change the command? What if I want to keep using the same command? How can I make things «just work», without getting this error? What solutions are available?

On Linux, the maximum total space for command arguments (and the environment) is one quarter of the stack size limit. So one solution is to increase the stack size limit.

Short version: run something like ulimit -s 65536 before running the command.

Longer version: the default stack size limit is something like 8192 KB. You can see the current limit with ulimit -s.

Choose a larger number and set the stack size limit to it. For instance, if you want to try allowing up to 65536 KB for the stack, run ulimit -s 65536.
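Here is a minimal sketch of the whole sequence, assuming bash on Linux with glibc, whose getconf ARG_MAX reports a quarter of the soft stack limit. Running it in a subshell keeps the raised limit from leaking into the rest of the session. The soft limit can only be raised up to the hard limit (ulimit -Hs), and 65536 is just the example value from the text.

```shell
#!/usr/bin/env bash
# Sketch: inspect and raise the stack limit, then check ARG_MAX.
# Assumes Linux + glibc; 65536 KB is only an example value.

echo "stack limit (KB): $(ulimit -s)"
echo "ARG_MAX (bytes):  $(getconf ARG_MAX)"

(
  # Raise the soft limit in a subshell so it only affects commands here.
  if ulimit -s 65536 2>/dev/null; then
    echo "raised stack limit (KB): $(ulimit -s)"
    echo "ARG_MAX now (bytes):     $(getconf ARG_MAX)"  # 65536*1024/4 on glibc
    # ...run the long command here, e.g. ls */*/*/*/*.jpg
  else
    echo "could not raise the stack limit (hard limit: $(ulimit -Hs))"
  fi
)
```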

You may need to play around with how large this needs to be, using trial-and-error. In many cases, this is a quick-and-dirty solution that will eliminate the need to modify the command and work out the syntax of find , xargs , etc. (though I realize there are other benefits to doing so).

I believe that this is Linux-specific. I suspect it probably won’t help on any other Unix operating system (not tested).

Instead of ls */*/*/*/*.jpg, try:
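Here is a sketch, assuming bash and an xargs that supports -0 (GNU and BSD both do). printf is a shell builtin, so the glob is expanded without going through exec. NUL separators keep file names with spaces or newlines intact. The temporary directory tree is hypothetical and only makes the demo runnable.

```shell
#!/usr/bin/env bash
# Sketch: feed a large glob to ls through xargs instead of the command line.
demo=$(mktemp -d)                 # hypothetical tree for the demo
mkdir -p "$demo/a/b/c/d"
touch "$demo/a/b/c/d/"{one,two,'with space'}.jpg
cd "$demo" || exit 1

# printf is a bash builtin: the glob is expanded inside the shell and never
# passed to execve(), so it cannot trigger "Argument list too long".
# xargs -0 reads NUL-separated names and runs ls as often as needed.
printf '%s\0' */*/*/*/*.jpg | xargs -0 ls --
```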

xargs(1) knows the system’s limit on the size of an argument list, whatever it is. It splits its standard input across multiple invocations of the specified command so that each stays within that limit. You can also use the -n option to cap the number of arguments per invocation below what the OS allows.

For example, suppose the limit is 3 arguments and you have five files. In that case xargs will execute ls twice:
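In this sketch, -n 3 simulates such a tiny limit. The file names are hypothetical, and echo stands in for ls so the two separate invocations are visible in the output.

```shell
#!/usr/bin/env bash
# Sketch: force a 3-argument limit with -n 3; echo stands in for ls.
printf '%s\n' file1 file2 file3 file4 file5 | xargs -n 3 echo
# prints:
# file1 file2 file3
# file4 file5
```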

Often this is perfectly suitable, but not always. For example, you cannot rely on ls(1) sorting all of the entries properly, because each separate ls invocation sorts only the subset of entries given to it by xargs.
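The sketch below demonstrates the sorting problem in a hypothetical scratch directory. Names are fed in reverse order and split into batches of three, so ls prints c, d, e and then a, b, rather than a through e.

```shell
#!/usr/bin/env bash
# Sketch: each ls invocation sorts only its own batch of names.
demo=$(mktemp -d)          # hypothetical scratch directory
cd "$demo" || exit 1
touch a b c d e

# With -n 3 the first ls receives "e d c" and the second "b a";
# each sorts only what it was given.
printf '%s\n' e d c b a | xargs -n 3 ls
```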

Though you can bump the limit as suggested by others, there will still be a limit, and some day your JPG collection will outgrow it again. You should prepare your script(s) to deal with an arbitrarily large number of files.

This Linux Journal article, written before Linux 2.6.23 made the limit depend on the stack size, gives 4 solutions. Only the fourth solution does not involve changing the command:

Method #4 involves manually increasing the number of pages that are allocated within the kernel for command-line arguments. If you look at the include/linux/binfmts.h file, you will find the following near the top: #define MAX_ARG_PAGES 32

In order to increase the amount of memory dedicated to the command-line arguments, you simply need to provide the MAX_ARG_PAGES value with a higher number. Once this edit is saved, simply recompile, install and reboot into the new kernel as you would do normally.

On my own test system I managed to solve all my problems by raising this value to 64. After extensive testing, I have not experienced a single problem since the switch. This is entirely expected since even with MAX_ARG_PAGES set to 64, the longest possible command line I could produce would only occupy 256KB of system memory—not very much by today’s system hardware standards.

The advantages of Method #4 are clear. You are now able to simply run the command as you normally would, and it completes successfully. The disadvantages are equally clear. If you raise the amount of memory available to the command line beyond the amount of available system memory, you can create a denial-of-service (DoS) condition on your own system and cause it to crash. On multiuser systems in particular, even a small increase can have a significant impact, because every user is then allocated the additional memory. Therefore, always test extensively in your own environment, as this is the safest way to determine whether Method #4 is a viable option for you.

I agree that the limitation is seriously annoying.
