- Configure the file system cache in Windows NT

- Using Storage Spaces Direct with the CSV in-memory read cache

- Planning considerations

- Configuring the in-memory read cache

- Understanding the cache in Storage Spaces Direct

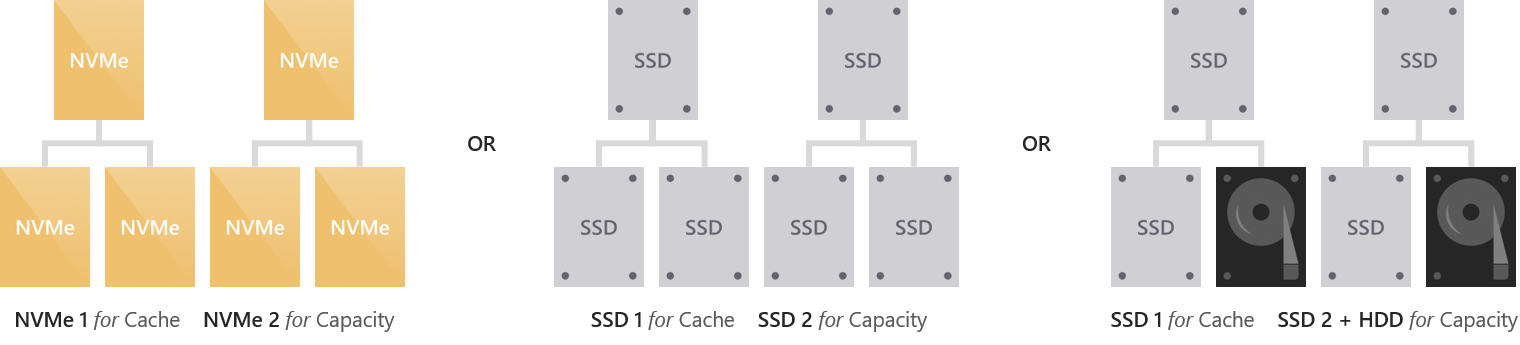

- Drive types and deployment options

- All-flash deployment possibilities

- Hybrid deployment possibilities

- Cache drives are selected automatically

- Cache behavior is set automatically

- Write-only caching for all-flash deployments

- Read/write caching for hybrid deployments

- Caching in deployments with drives of all three types

- Summary

- Server-side architecture

- Drive bindings are dynamic

- Handling cache drive failures

- Relationship to other caches

- Manual configuration

- Specify cache drive model

- Example

- Manual deployment possibilities

- Set cache behavior

- Example

- Sizing the cache

Configure the file system cache in Windows NT

Windows has a file caching mechanism that is tightly integrated with the memory manager. It improves disk performance by keeping the most recently used files in memory, whether they are EXE or DLL files loaded by the memory manager or data files loaded by running applications.

The filesystem cache can work in two different modes:

- The default mode is that the filesystem cache only grows to a certain limit (8 MByte).

- The other mode is that the file system cache can grow until it has taken all memory (Up to 1 GByte depending on available memory).

Do not change from the default mode on standard workstations: a single application can cause the file system cache to take all available RAM (for example, by copying a large file), and the memory of other applications will then be paged to disk, causing even more I/O.

To allow the file system cache to make use of «all» available memory:

- WinNT4: Open Control Panel -> Network -> Change properties for «Server» service to use «Maximize Throughput for File Sharing» (Will also change Server service memory usage).

- Win2k: Open Control Panel -> Network and Dial-up Connections -> Right-click Local Area Connection and select Properties -> Press Properties for service «File and Print Sharing for Microsoft Networks» to set «Maximize Data Throughput for File Sharing» (Will also change Server service memory usage).

- WinXP/Win2k3: Open Control Panel -> System-Applet -> Advanced-Tab -> Performance-Settings-Button -> Advanced-Tab and select «System Cache»-Option.

- Registry:

[HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Session Manager\Memory Management]

LargeSystemCache=1 (Default on Server = 1, default on Professional = 0)

SystemCacheDirtyPageThreshold = 100 (MByte; Default = 0; 0 = Half of physical memory)

More Info MS KB920739 (Doesn’t limit the size of the read cache)
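As a rough sketch, the registry values above could be rendered as .reg text for import with regedit. The helper name `render_reg` is hypothetical. The sketch assumes both values are stored as REG_DWORD and uses the `Windows Registry Editor Version 5.00` header, which applies to Windows 2000 and later. Verify both assumptions against the Microsoft KB article before importing anything.

```python
# Registry key that holds the file system cache settings discussed above.
KEY = (r"HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control"
       r"\Session Manager\Memory Management")


def render_reg(values):
    """Render DWORD values under the Memory Management key as .reg text."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{KEY}]"]
    for name, number in values.items():
        # .reg files write DWORD values as eight hexadecimal digits.
        lines.append(f'"{name}"=dword:{number:08x}')
    return "\r\n".join(lines) + "\r\n"


reg_text = render_reg({
    "LargeSystemCache": 1,                 # enable the large system cache
    "SystemCacheDirtyPageThreshold": 100,  # in MByte, per the text above
})
print(reg_text)
```

Generating the text instead of writing to the registry directly keeps the change reviewable before it is applied.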

Note: The large system cache is allocated from the kernel memory area, which it shares with the paged pool (PagedPoolSize) and the system page table entries (SystemPages). If those two are limited so that they cannot grow to their maximum size, the file system cache can reach its maximum size of 960 MByte (WinNT4: 512 MByte); otherwise it is limited to 512 MByte.

Note: Not all applications benefit from a large system cache. Many disk-intensive applications (such as database systems) include their own cache manager and don't use the file cache controlled by the operating system. For MS SQL and Exchange, the recommended setting is to disable the large system cache.

Note: Enabling the large system cache can cause stability issues, because it configures the kernel area to allocate the maximum memory for the file cache at the expense of the memory pools and the number of page table entries. Unified Memory Architecture (UMA)-based video hardware and Accelerated Graphics Port (AGP) hardware require page table entries to address video memory. ATI recommends not enabling the large system cache, to avoid data corruption. More Info MS KB895932

Using Storage Spaces Direct with the CSV in-memory read cache

Applies to: Windows Server 2019, Windows Server 2016

This topic describes how to use system memory to boost the performance of Storage Spaces Direct.

Storage Spaces Direct is compatible with the Cluster Shared Volume (CSV) in-memory read cache. Using system memory to cache reads can improve performance for applications like Hyper-V, which uses unbuffered I/O to access VHD or VHDX files. (Unbuffered I/Os are any operations that are not cached by the Windows Cache Manager.)

Because the in-memory cache is server-local, it improves data locality for hyper-converged Storage Spaces Direct deployments: recent reads are cached in memory on the same host where the virtual machine is running, reducing how often reads go over the network. This results in lower latency and better storage performance.

Planning considerations

The in-memory read cache is most effective for read-intensive workloads, such as Virtual Desktop Infrastructure (VDI). Conversely, if the workload is extremely write-intensive, the cache may introduce more overhead than value and should be disabled.

You can use up to 80% of total physical memory for the CSV in-memory read cache.
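The 80% ceiling is easy to work out for a given server. The sketch below is illustrative only: the function name and the 256 GiB example server are assumptions, and the result is expressed in MiB because that is the unit the cache size setting uses.

```python
def max_csv_cache_mib(total_physical_mib, percent=80):
    """Upper bound for the CSV in-memory read cache, in MiB per server."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return total_physical_mib * percent // 100


total_mib = 256 * 1024                 # example: a server with 256 GiB of RAM
limit_mib = max_csv_cache_mib(total_mib)
print(f"Cache may use up to {limit_mib} MiB ({limit_mib / 1024:.1f} GiB)")
```

This is only the upper bound; on hyper-converged servers the practical limit is lower, because the virtual machines need memory too.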

For hyper-converged deployments, where compute and storage run on the same servers, be careful to leave enough memory for your virtual machines. For converged Scale-Out File Server (SoFS) deployments, with less contention for memory, this doesn't apply.

Certain microbenchmarking tools like DISKSPD and VM Fleet may produce worse results with the CSV in-memory read cache enabled than without it. By default, VM Fleet creates one 10 gibibyte (GiB) VHDX per virtual machine (approximately 1 TiB total for 100 VMs) and then performs uniformly random reads and writes to them. Unlike real workloads, the reads don't follow any predictable or repetitive pattern, so the in-memory cache is not effective and just incurs overhead.
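A back-of-the-envelope model shows why uniformly random reads defeat the cache. It assumes every block is equally likely to be read, so the expected hit ratio is simply the cache size divided by the data set size. The function name and the 1 GiB cache size are assumptions chosen for illustration.

```python
def expected_hit_ratio(cache_gib, dataset_gib):
    """Hit ratio when every block is equally likely to be read."""
    return min(1.0, cache_gib / dataset_gib)


vms, vhdx_gib = 100, 10
dataset_gib = vms * vhdx_gib            # 1000 GiB, roughly 1 TiB
ratio = expected_hit_ratio(cache_gib=1, dataset_gib=dataset_gib)
print(f"{ratio:.1%} of reads would be served from a 1 GiB cache")
```

Real workloads tend to re-read a small hot set, which is why the same cache size can be far more effective in production than this benchmark suggests.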

Configuring the in-memory read cache

The CSV in-memory read cache is available in Windows Server 2019 and Windows Server 2016 with the same functionality. In Windows Server 2019, it's on by default with 1 GiB allocated. In Windows Server 2016, it's off by default.

| OS version | Default CSV cache size |
|---|---|
| Windows Server 2019 | 1 GiB |
| Windows Server 2016 | 0 (disabled) |
| Windows Server 2012 R2 | Enabled; user must specify size |
| Windows Server 2012 | 0 (disabled) |

To see how much memory is allocated, read the BlockCacheSize property of the cluster object in PowerShell: (Get-Cluster).BlockCacheSize.

The value returned is in mebibytes (MiB) per server. For example, 1024 represents 1 gibibyte (GiB).
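Because the setting is expressed in MiB, it can help to convert in both directions when planning. The helper names below are hypothetical; the sketch only performs the unit conversion and does not talk to a cluster.

```python
MIB_PER_GIB = 1024


def gib_to_mib(gib):
    """Convert a cache size in GiB to the MiB value the setting expects."""
    return int(gib * MIB_PER_GIB)


def mib_to_gib(mib):
    """Convert a reported cache size in MiB back to GiB."""
    return mib / MIB_PER_GIB


print(mib_to_gib(1024))   # a reported value of 1024 MiB, in GiB
print(gib_to_mib(2))      # the MiB value for a 2 GiB allocation
```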

To change how much memory is allocated, modify the BlockCacheSize value using PowerShell. For example, to allocate 2 GiB per server, set it to 2048: (Get-Cluster).BlockCacheSize = 2048.

For changes to take effect immediately, pause then resume your CSV volumes, or move them between servers. For example, pipe each volume returned by Get-ClusterSharedVolume to Move-ClusterSharedVolume to move it to another server node, then move it back to its original owner node with the -Node parameter.

Understanding the cache in Storage Spaces Direct

Applies to: Windows Server 2019, Windows Server 2016

Storage Spaces Direct features a built-in server-side cache to maximize storage performance. It is a large, persistent, real-time read and write cache. The cache is configured automatically when Storage Spaces Direct is enabled. In most cases, no manual management whatsoever is required. How the cache works depends on the types of drives present.


Drive types and deployment options

Storage Spaces Direct currently works with four types of storage devices:

| Type of drive | Description |
|---|---|
| PMem | PMem refers to persistent memory, a new type of low latency, high performance storage. |
| NVMe | NVMe (Non-Volatile Memory Express) refers to solid-state drives that sit directly on the PCIe bus. Common form factors are 2.5″ U.2, PCIe Add-In-Card (AIC), and M.2. NVMe offers higher IOPS and IO throughput with lower latency than any other type of drive we support today except PMem. |
| SSD | SSD refers to solid-state drives, which connect via conventional SATA or SAS. |
| HDD | HDD refers to rotational, magnetic hard disk drives, which offer vast storage capacity. |

These can be combined in various ways, which we group into two categories: «all-flash» and «hybrid».

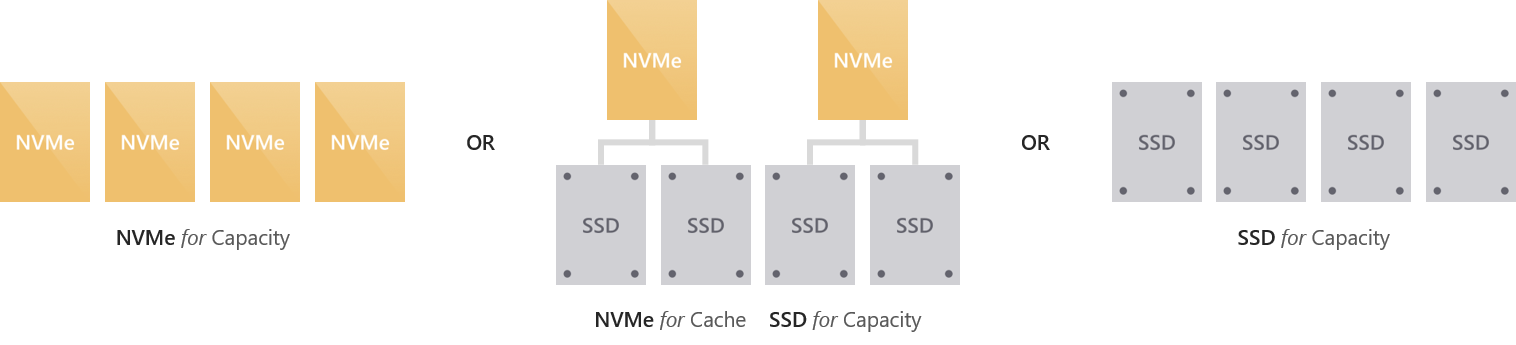

All-flash deployment possibilities

All-flash deployments aim to maximize storage performance and do not include rotational hard disk drives (HDD).

Hybrid deployment possibilities

Hybrid deployments aim to balance performance and capacity or to maximize capacity and do include rotational hard disk drives (HDD).

Cache drives are selected automatically

In deployments with multiple types of drives, Storage Spaces Direct automatically uses all drives of the «fastest» type for caching. The remaining drives are used for capacity.

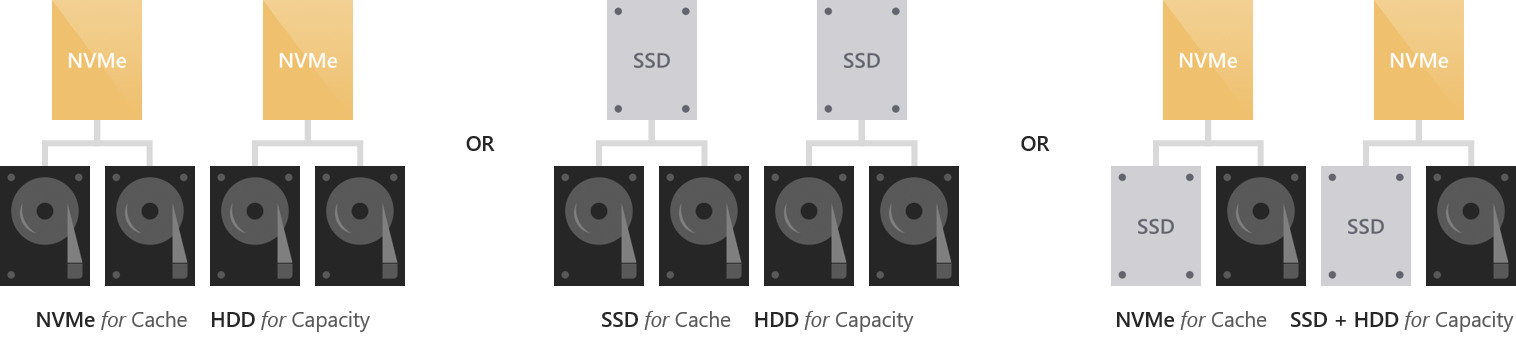

Which type is «fastest» follows the order of the drive types listed above: PMem, then NVMe, then SSD, then HDD.

For example, if you have NVMe and SSDs, the NVMe will cache for the SSDs.

If you have SSDs and HDDs, the SSDs will cache for the HDDs.

Cache drives do not contribute usable storage capacity. All data stored in the cache is also stored elsewhere, or will be once it de-stages. This means the total raw storage capacity of your deployment is the sum of your capacity drives only.

When all drives are of the same type, no cache is configured automatically. You have the option to manually configure higher-endurance drives to cache for lower-endurance drives of the same type – see the Manual configuration section to learn how.

In all-NVMe or all-SSD deployments, especially at very small scale, having no drives «spent» on cache can improve storage efficiency meaningfully.
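The selection rules in this section can be sketched as a small function. This is a simplified model, not the actual implementation: the `SPEED_ORDER` list is an assumed fastest-to-slowest ordering, and the drive sizes are made-up example values.

```python
# Assumed ordering from fastest to slowest drive type.
SPEED_ORDER = ["PMem", "NVMe", "SSD", "HDD"]


def split_cache_and_capacity(drives):
    """Split (type, size_tb) tuples into cache drives and capacity drives."""
    types_present = {drive_type for drive_type, _ in drives}
    if len(types_present) < 2:
        # Only one drive type: no cache is configured automatically.
        return [], list(drives)
    fastest = min(types_present, key=SPEED_ORDER.index)
    cache = [d for d in drives if d[0] == fastest]
    capacity = [d for d in drives if d[0] != fastest]
    return cache, capacity


drives = [("SSD", 0.8)] * 2 + [("HDD", 4.0)] * 4
cache, capacity = split_cache_and_capacity(drives)
# Cache drives do not count toward raw capacity.
raw_tb = sum(size for _, size in capacity)
print(len(cache), "cache drives;", raw_tb, "TB raw capacity")
```

In this example the two SSDs add nothing to raw capacity, which is the trade-off that an all-SSD or all-NVMe deployment without a cache avoids.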

Cache behavior is set automatically

The behavior of the cache is determined automatically based on the type(s) of drives that are being cached for. When caching for solid-state drives (such as NVMe caching for SSDs), only writes are cached. When caching for hard disk drives (such as SSDs caching for HDDs), both reads and writes are cached.

Write-only caching for all-flash deployments

When caching for solid-state drives (NVMe or SSDs), only writes are cached. This reduces wear on the capacity drives because many writes and re-writes can coalesce in the cache and then de-stage only as needed, reducing the cumulative traffic to the capacity drives and extending their lifetime. For this reason, we recommend selecting higher-endurance, write-optimized drives for the cache. The capacity drives may reasonably have lower write endurance.

Because reads do not significantly affect the lifespan of flash, and because solid-state drives universally offer low read latency, reads are not cached: they are served directly from the capacity drives (except when the data was written so recently that it has not yet been de-staged). This allows the cache to be dedicated entirely to writes, maximizing its effectiveness.

This results in write characteristics, such as write latency, being dictated by the cache drives, while read characteristics are dictated by the capacity drives. Both are consistent, predictable, and uniform.
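The wear-reduction argument can be illustrated with a toy write-back cache. This is a deliberately minimal model, not how Storage Spaces Direct is implemented: the class name, the dictionary-based storage, and the block numbers are all illustrative assumptions.

```python
class WriteBackCache:
    """Toy write-only cache: re-writes to a block coalesce before de-staging."""

    def __init__(self):
        self.dirty = {}            # block number -> latest data
        self.writes_accepted = 0   # writes absorbed by the cache drive
        self.writes_destaged = 0   # writes that reached the capacity drive

    def write(self, block, data):
        self.dirty[block] = data   # a re-write replaces the pending data
        self.writes_accepted += 1

    def read(self, block, capacity):
        # Reads come from capacity unless the data has not been de-staged yet.
        if block in self.dirty:
            return self.dirty[block]
        return capacity.get(block)

    def destage(self, capacity):
        for block, data in self.dirty.items():
            capacity[block] = data
            self.writes_destaged += 1
        self.dirty.clear()


capacity = {}
cache = WriteBackCache()
for block, data in [(7, "a"), (7, "b"), (9, "d"), (7, "c"), (9, "e")]:
    cache.write(block, data)
recent = cache.read(7, capacity)   # served from cache, not yet de-staged
cache.destage(capacity)
print(cache.writes_accepted, "writes accepted,", cache.writes_destaged, "de-staged")
```

Five writes reach the cache but only two reach the capacity drive, which is the effect that lets capacity drives have lower write endurance.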

Read/write caching for hybrid deployments

When caching for hard disk drives (HDDs), both reads and writes are cached, to provide flash-like latency (often 10x better) for both. The read cache stores recently and frequently read data for fast access and to minimize random traffic to the HDDs. (Because of seek and rotational delays, the latency and lost time incurred by random access to an HDD is significant.) Writes are cached to absorb bursts and, as before, to coalesce writes and re-writes and minimize the cumulative traffic to the capacity drives.

Storage Spaces Direct implements an algorithm that de-randomizes writes before de-staging them, to emulate an IO pattern to disk that seems sequential even when the actual IO coming from the workload (such as virtual machines) is random. This maximizes the IOPS and throughput to the HDDs.
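The actual de-randomizing algorithm is not described here, but the general idea can be sketched by sorting pending writes by block address before de-staging. Sorting is a stand-in technique chosen for illustration, and the seek count is a crude proxy that treats any jump between non-adjacent blocks as one seek.

```python
def destage_order(dirty_blocks):
    """Order pending block addresses so the disk sees ascending addresses."""
    return sorted(dirty_blocks)


def count_seeks(order):
    """Count jumps between non-adjacent blocks as a rough seek estimate."""
    return sum(1 for a, b in zip(order, order[1:]) if b != a + 1)


arrival = [90, 3, 91, 4, 92, 5]        # random order as issued by the workload
ordered = destage_order(arrival)
print("seeks as issued:", count_seeks(arrival))
print("seeks after reordering:", count_seeks(ordered))
```

Because the cache holds writes for a while, it has the freedom to reorder them; a drive receiving the writes directly would have to service them as they arrive.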

Caching in deployments with drives of all three types

When drives of all three types (NVMe, SSD, and HDD) are present, the NVMe drives provide caching for both the SSDs and the HDDs. The behavior is as described above: only writes are cached for the SSDs, and both reads and writes are cached for the HDDs. The burden of caching for the HDDs is distributed evenly among the cache drives.

Summary

This table summarizes which drives are used for caching, which are used for capacity, and what the caching behavior is for each deployment possibility.

| Deployment | Cache drives | Capacity drives | Cache behavior (default) |
|---|---|---|---|
| All NVMe | None (Optional: configure manually) | NVMe | Write-only (if configured) |
| All SSD | None (Optional: configure manually) | SSD | Write-only (if configured) |
| NVMe + SSD | NVMe | SSD | Write-only |
| NVMe + HDD | NVMe | HDD | Read + Write |
| SSD + HDD | SSD | HDD | Read + Write |
| NVMe + SSD + HDD | NVMe | SSD + HDD | Read + Write for HDD, Write-only for SSD |
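The default behavior in the table depends only on the type of drive being cached for, which a short lookup can express. The function name is hypothetical and the sketch covers only the drive types that appear as capacity drives in the table.

```python
def default_cache_behavior(capacity_type):
    """Default cache behavior for a given type of capacity drive."""
    if capacity_type == "HDD":
        return "Read + Write"          # hybrid: cache reads and writes
    if capacity_type in ("NVMe", "SSD"):
        return "Write-only"            # all-flash: cache writes only
    raise ValueError(f"unknown capacity drive type: {capacity_type}")


# NVMe caching for both SSD and HDD capacity drives.
behaviors = {t: default_cache_behavior(t) for t in ("SSD", "HDD")}
print(behaviors)
```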

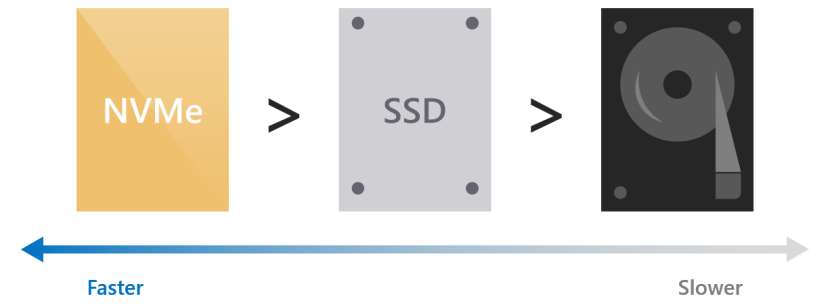

Server-side architecture

The cache is implemented at the drive level: individual cache drives within one server are bound to one or many capacity drives within the same server.

Because the cache is below the rest of the Windows software-defined storage stack, it does not have nor need any awareness of concepts such as Storage Spaces or fault tolerance. You can think of it as creating «hybrid» (part flash, part disk) drives which are then presented to Windows. As with an actual hybrid drive, the real-time movement of hot and cold data between the faster and slower portions of the physical media is nearly invisible to the outside.

Given that resiliency in Storage Spaces Direct is at least server-level (meaning data copies are always written to different servers; at most one copy per server), data in the cache benefits from the same resiliency as data not in the cache.

For example, when using three-way mirroring, three copies of any data are written to different servers, where they land in cache. Regardless of whether they are later de-staged or not, three copies will always exist.

Drive bindings are dynamic

The binding between cache and capacity drives can have any ratio, from 1:1 up to 1:12 and beyond. It adjusts dynamically whenever drives are added or removed, such as when scaling up or after failures. This means you can add cache drives or capacity drives independently, whenever you want.

We recommend making the number of capacity drives a multiple of the number of cache drives, for symmetry. For example, if you have 4 cache drives, you will experience more even performance with 8 capacity drives (1:2 ratio) than with 7 or 9.
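The symmetry recommendation is easy to see by distributing capacity drives across cache drives. The round-robin assignment below is an assumption made for illustration; how bindings are actually chosen is not described here.

```python
def bind(cache_drives, capacity_drives):
    """Assign capacity drives to cache drives round-robin (assumed policy)."""
    bindings = {cache: [] for cache in cache_drives}
    for index, capacity in enumerate(capacity_drives):
        target = cache_drives[index % len(cache_drives)]
        bindings[target].append(capacity)
    return bindings


def load_per_cache(cache_count, capacity_count):
    """Number of capacity drives bound to each cache drive."""
    caches = [f"cache{i}" for i in range(cache_count)]
    capacities = [f"cap{i}" for i in range(capacity_count)]
    return [len(bound) for bound in bind(caches, capacities).values()]


for capacity_count in (7, 8, 9):
    print(capacity_count, "capacity drives ->", load_per_cache(4, capacity_count))
```

With 8 capacity drives every cache drive serves exactly two, while 7 or 9 leave one cache drive with a lighter or heavier load than the rest.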

Handling cache drive failures

When a cache drive fails, any writes which have not yet been de-staged are lost to the local server, meaning they exist only on the other copies (in other servers). Just like after any other drive failure, Storage Spaces can and does automatically recover by consulting the surviving copies.

For a brief period, the capacity drives which were bound to the lost cache drive will appear unhealthy. Once the cache rebinding has occurred (automatic) and the data repair has completed (automatic), they will resume showing as healthy.

This scenario is why at minimum two cache drives are required per server to preserve performance.

You can then replace the cache drive just like any other drive replacement.

You may need to power down the server to safely replace NVMe drives in Add-In Card (AIC) or M.2 form factor.
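A toy model of rebinding shows why a second cache drive matters. The function name and the least-loaded assignment policy are assumptions; the sketch models only which capacity drives still have a cache after a failure, not the data repair itself.

```python
def rebind_after_failure(bindings, failed):
    """Move capacity drives from a failed cache drive to the survivors.

    Returns (new_bindings, uncached), where uncached lists capacity drives
    left without any cache drive.
    """
    survivors = {c: list(caps) for c, caps in bindings.items() if c != failed}
    orphans = list(bindings[failed])
    if not survivors:
        return {}, orphans             # no cache drive left on this server
    for capacity in orphans:
        # Assumed policy: give each orphan to the least-loaded survivor.
        target = min(survivors, key=lambda c: len(survivors[c]))
        survivors[target].append(capacity)
    return survivors, []


two_caches = {"cache0": ["hdd0", "hdd1"], "cache1": ["hdd2", "hdd3"]}
rebound, uncached = rebind_after_failure(two_caches, "cache0")
print(rebound, uncached)

one_cache = {"cache0": ["hdd0", "hdd1", "hdd2", "hdd3"]}
rebound_single, uncached_single = rebind_after_failure(one_cache, "cache0")
print(rebound_single, uncached_single)
```

With two cache drives the survivor takes over all four capacity drives; with a single cache drive, a failure leaves every capacity drive on that server uncached.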

Relationship to other caches

There are several other unrelated caches in the Windows software-defined storage stack. Examples include the Storage Spaces write-back cache and the Cluster Shared Volume (CSV) in-memory read cache.

With Storage Spaces Direct, the Storage Spaces write-back cache should not be modified from its default behavior. For example, parameters such as -WriteCacheSize on the New-Volume cmdlet should not be used.

You may choose to use the CSV cache, or not – it’s up to you. It does not conflict with the cache described in this topic in any way. In certain scenarios it can provide valuable performance gains. For more information, see How to Enable CSV Cache.

Manual configuration

For most deployments, manual configuration is not required. In case you do need it, see the following sections.

If you need to make changes to the cache device model after setup, edit the Health Service’s Support Components Document, as described in Health Service overview.

Specify cache drive model

In deployments where all drives are of the same type, such as all-NVMe or all-SSD deployments, no cache is configured because Windows cannot distinguish characteristics like write endurance automatically among drives of the same type.

To use higher-endurance drives to cache for lower-endurance drives of the same type, you can specify which drive model to use with the -CacheDeviceModel parameter of the Enable-ClusterS2D cmdlet. Once Storage Spaces Direct is enabled, all drives of that model will be used for caching.

Be sure to match the model string exactly as it appears in the output of Get-PhysicalDisk.

Example

First, list the physical disks with Get-PhysicalDisk and note the exact model string of the drives you want to use for caching.

Then run Enable-ClusterS2D with the -CacheDeviceModel parameter set to that model string.

You can verify that the drives you intended are being used for caching by running Get-PhysicalDisk in PowerShell and verifying that their Usage property says «Journal».

Manual deployment possibilities

Manual configuration enables the following deployment possibilities:

Set cache behavior

It is possible to override the default behavior of the cache. For example, you can set it to cache reads even in an all-flash deployment. We discourage modifying the behavior unless you are certain the default does not suit your workload.

To override the behavior, use the Set-ClusterStorageSpacesDirect cmdlet and its -CacheModeSSD and -CacheModeHDD parameters. The -CacheModeSSD parameter sets the cache behavior when caching for solid-state drives. The -CacheModeHDD parameter sets the cache behavior when caching for hard disk drives. This can be done at any time after Storage Spaces Direct is enabled.

You can use Get-ClusterStorageSpacesDirect to verify the behavior is set.

Example

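First, get the current Storage Spaces Direct settings with Get-ClusterStorageSpacesDirect and note the values of CacheModeHDD and CacheModeSSD.

Then run Set-ClusterStorageSpacesDirect with the -CacheModeSSD or -CacheModeHDD parameter set to the behavior you want, and run Get-ClusterStorageSpacesDirect again to confirm the new value.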

Sizing the cache

The cache should be sized to accommodate the working set (the data being actively read or written at any given time) of your applications and workloads.

This is especially important in hybrid deployments with hard disk drives. If the active working set exceeds the size of the cache, or if the active working set drifts too quickly, read cache misses will increase and writes will need to be de-staged more aggressively, hurting overall performance.

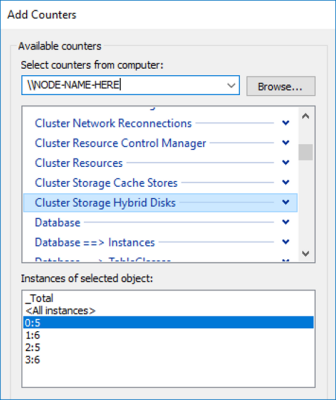

You can use the built-in Performance Monitor (PerfMon.exe) utility in Windows to inspect the rate of cache misses. Specifically, you can compare the Cache Miss Reads/sec from the Cluster Storage Hybrid Disk counter set to the overall read IOPS of your deployment. Each «Hybrid Disk» corresponds to one capacity drive.

For example, 2 cache drives bound to 4 capacity drives results in 4 «Hybrid Disk» object instances per server.

There is no universal rule, but if too many reads are missing the cache, it may be undersized and you should consider adding cache drives to expand your cache. You can add cache drives or capacity drives independently whenever you want.
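The comparison described above amounts to a simple ratio. In this sketch the per-disk counter samples and the total read IOPS are made-up numbers, and the function name is hypothetical; in practice the inputs would come from the Cache Miss Reads/sec counter of each «Hybrid Disk» instance.

```python
def cache_miss_ratio(miss_reads_per_sec, read_iops):
    """Fraction of reads that missed the cache and went to capacity drives."""
    if read_iops == 0:
        return 0.0
    return miss_reads_per_sec / read_iops


# Hypothetical samples: one value per «Hybrid Disk» (capacity drive).
miss_reads_per_disk = [120, 95, 140, 105]
total_read_iops = 4600

ratio = cache_miss_ratio(sum(miss_reads_per_disk), total_read_iops)
print(f"{ratio:.0%} of reads missed the cache")
```

Tracking this ratio over time is more informative than a single sample, since a working set that drifts quickly shows up as a ratio that climbs under load.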