- Performance Tuning Guidelines for Windows Server 2016

- In this guide

- Server Hardware Performance Considerations

- Processor Recommendations

- Cache Recommendations

- Memory (RAM) and Paging Storage Recommendations

- Peripheral Bus Recommendations

- Disk Recommendations

- Network and Storage Adapter Recommendations

- Certified adapter usage

- 64-bit capability

- Copper and fiber adapters

- Dual- or quad-port adapters

- Interrupt moderation

- Receive Side Scaling (RSS) support

- Offload capability and other advanced features such as message-signaled interrupt (MSI)-X

- Dynamic interrupt and deferred procedure call (DPC) redirection

- Power and performance tuning

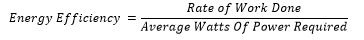

- Calculating server energy efficiency

- Measuring system energy consumption

- Diagnosing energy efficiency issues

- Using power plans in Windows Server

- Tuning processor power management parameters

- Processor performance boost mode

- Minimum and maximum processor performance state

- Processor performance increase and decrease of thresholds and policies

- Processor performance core parking maximum and minimum cores

- Processor performance core parking utility distribution

Performance Tuning Guidelines for Windows Server 2016

When you run a server system in your organization, you might have business needs that the default server settings do not meet. For example, you might need the lowest possible energy consumption, the lowest possible latency, or the maximum possible throughput on your server. This guide provides a set of guidelines that you can use to tune the server settings in Windows Server 2016 and obtain incremental performance or energy efficiency gains, especially when the nature of the workload varies little over time.

It is important that your tuning changes consider the hardware, the workload, the power budgets, and the performance goals of your server. This guide describes each setting and its potential effect to help you make an informed decision about its relevance to your system, workload, performance, and energy usage goals.

Registry settings and tuning parameters changed significantly between versions of Windows Server. Be sure to use the latest tuning guidelines to avoid unexpected results.

In this guide

This guide organizes performance and tuning guidance for Windows Server 2016 across three tuning categories:

Server Hardware Performance Considerations

The following section lists important items that you should consider when you choose server hardware. Following these guidelines can help remove performance bottlenecks that might impede the server’s performance.

Processor Recommendations

Choose 64-bit processors for servers. 64-bit processors have significantly more address space, and are required for Windows Server 2016. No 32-bit editions of the operating system will be provided, but 32-bit applications will run on the 64-bit Windows Server 2016 operating system.

To increase the computing resources in a server, you can use a processor with higher-frequency cores, or you can increase the number of processor cores. If CPU is the limiting resource in the system, a core with 2x frequency typically provides a greater performance improvement than two cores with 1x frequency.

Multiple cores are not expected to provide a perfect linear scaling, and the scaling factor can be even less if hyper-threading is enabled because hyper-threading relies on sharing resources of the same physical core.

Match and scale the memory and I/O subsystem with the CPU performance, and vice versa.

Do not compare CPU frequencies across manufacturers and generations of processors because the comparison can be a misleading indicator of speed.

For Hyper-V, make sure that the processor supports SLAT (Second Level Address Translation). It is implemented as Extended Page Tables (EPT) by Intel and Nested Page Tables (NPT) by AMD. You can verify this feature is present by using SystemInfo.exe on your server.
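One way to run this check from a command prompt is to filter the SystemInfo.exe output. This is a minimal sketch that assumes an English-language system, where the relevant line is labeled `Second Level Address Translation`. If a hypervisor is already running, SystemInfo.exe reports that a hypervisor was detected instead of listing the individual Hyper-V requirements, so the filter returns nothing.

```shell
# Show only the SLAT line from the Hyper-V Requirements part of the report.
systeminfo | findstr /C:"Second Level Address Translation"
```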

Cache Recommendations

Choose large L2 or L3 processor caches. On newer architectures, such as Haswell or Skylake, there is a unified Last Level Cache (LLC) or an L4. The larger caches generally provide better performance, and they often play a bigger role than raw CPU frequency.

Memory (RAM) and Paging Storage Recommendations

Some systems may exhibit reduced storage performance when running a new install of Windows Server 2016 versus Windows Server 2012 R2. A number of changes were made during the development of Windows Server 2016 to improve security and reliability of the platform. Some of those changes, such as enabling Windows Defender by default, result in longer I/O paths that can reduce I/O performance in specific workloads and patterns. Microsoft does not recommend disabling Windows Defender as it is an important layer of protection for your systems.

Increase the RAM to match your memory needs. When your computer runs low on memory and it needs more immediately, Windows uses hard disk space to supplement system RAM through a procedure called paging. Too much paging degrades the overall system performance. You can optimize paging by using the following guidelines for page file placement:

Isolate the page file on its own storage device, or at least make sure it doesn’t share the same storage devices as other frequently accessed files. For example, place the page file and operating system files on separate physical disk drives.

Place the page file on a drive that is fault-tolerant. If a non-fault-tolerant disk fails, a system crash is likely to occur. If you place the page file on a fault-tolerant drive, remember that fault-tolerant systems are often slower to write data because they write data to multiple locations.

Use multiple disks or a disk array if you need additional disk bandwidth for paging. Do not place multiple page files on different partitions of the same physical disk drive.

Peripheral Bus Recommendations

In Windows Server 2016, the primary storage and network interfaces should be PCI Express (PCIe), so servers with PCIe buses are recommended. To avoid bus speed limitations, use PCIe x8 and higher slots for 10 Gb and faster Ethernet adapters.

Disk Recommendations

Choose disks with higher rotational speeds to reduce random request service times (2 ms on average when you compare 7,200- and 15,000-RPM drives) and to increase sequential request bandwidth. However, there are cost, power, and other considerations associated with disks that have high rotational speeds.

2.5-inch enterprise-class disks can service a significantly larger number of random requests per second compared to equivalent 3.5-inch drives.

Store frequently accessed data, especially sequentially accessed data, near the beginning of a disk because this roughly corresponds to the outermost (fastest) tracks.

Consolidating small drives into fewer high-capacity drives can reduce overall storage performance. Fewer spindles mean reduced request service concurrency; and therefore, potentially lower throughput and longer response times (depending on the workload intensity).

SSDs and high-speed flash disks are useful for read-mostly disks with high I/O rates or latency-sensitive I/O. Boot disks are good candidates for SSDs or high-speed flash disks because they can improve boot times significantly.

NVMe SSDs offer superior performance with greater command queue depths, more efficient interrupt processing, and greater efficiency for 4 KB commands. This particularly benefits scenarios that require heavy simultaneous I/O.

Network and Storage Adapter Recommendations

The following section lists the recommended characteristics for network and storage adapters for high-performance servers. These settings can help prevent your networking or storage hardware from being a bottleneck when they are under heavy load.

Certified adapter usage

Use an adapter that has passed the Windows Hardware Certification test suite.

64-bit capability

Adapters that are 64-bit-capable can perform direct memory access (DMA) operations to and from high physical memory locations (greater than 4 GB). If the driver does not support DMA greater than 4 GB, the system double-buffers the I/O to a physical address space of less than 4 GB.

Copper and fiber adapters

Copper adapters generally have the same performance as their fiber counterparts, and both copper and fiber are available on some Fibre Channel adapters. Certain environments are better suited to copper adapters, whereas other environments are better suited to fiber adapters.

Dual- or quad-port adapters

Multiport adapters are useful for servers that have a limited number of PCI slots.

To address SCSI limitations on the number of disks that can be connected to a SCSI bus, some adapters provide two or four SCSI buses on a single adapter card. Fibre Channel adapters generally have no limits to the number of disks that are connected to an adapter unless they are hidden behind a SCSI interface.

Serial Attached SCSI (SAS) and Serial ATA (SATA) adapters also have a limited number of connections because of the serial nature of the protocols, but you can attach more disks by using switches.

Network adapters have this feature for load-balancing or failover scenarios. Using two single-port network adapters usually yields better performance than using a single dual-port network adapter for the same workload.

PCI bus limitation can be a major factor in limiting performance for multiport adapters. Therefore, it is important to consider placing them in a high-performing PCIe slot that provides enough bandwidth.

Interrupt moderation

Some adapters can moderate how frequently they interrupt the host processors to indicate activity or its completion. Moderating interrupts can often reduce CPU load on the host, but unless interrupt moderation is performed intelligently, the CPU savings might come at the cost of increased latency.

Receive Side Scaling (RSS) support

RSS enables packet receive-processing to scale with the number of available computer processors. This is particularly important with 10 Gb Ethernet and faster.

Offload capability and other advanced features such as message-signaled interrupt (MSI)-X

Offload-capable adapters offer CPU savings that yield improved performance.

Dynamic interrupt and deferred procedure call (DPC) redirection

In Windows Server 2016, NUMA I/O enables PCIe storage adapters to dynamically redirect interrupts and DPCs and can help any multiprocessor system by improving workload partitioning, cache hit rates, and on-board hardware interconnect usage for I/O-intensive workloads.

Power and performance tuning

Energy efficiency is increasingly important in enterprise and data center environments, and it adds another set of tradeoffs to the mix of configuration options.

Windows Server 2016 is optimized for excellent energy efficiency with minimum performance impact across a wide range of customer workloads. Processor Power Management (PPM) Tuning for the Windows Server Balanced Power Plan describes the workloads used for tuning the default parameters in Windows Server 2016, and provides suggestions for customized tunings.

This section expands on energy-efficiency tradeoffs to help you make informed decisions if you need to adjust the default power settings on your server. However, the majority of server hardware and workloads should not require administrator power tuning when running Windows Server 2016.

Calculating server energy efficiency

When you tune your server for energy savings, you must also consider performance. Tuning affects performance and power, sometimes in disproportionate amounts. For each possible adjustment, consider your power budget and performance goals to determine whether the trade-off is acceptable.

You can calculate your server’s energy efficiency ratio for a useful metric that incorporates power and performance information. Energy efficiency is the ratio of work that is done to the average power that is required during a specified amount of time.
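Written as a formula, with both quantities measured over the same time interval, the definition above reads:

$$
\text{Energy efficiency} = \frac{\text{Rate of work done}}{\text{Average power required}}
$$

As a purely hypothetical illustration, a server that sustains 10,000 requests per second at an average draw of 400 W delivers 25 requests per second per watt.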

You can use this metric to set practical goals that respect the tradeoff between power and performance. In contrast, a goal of 10 percent energy savings across the data center fails to capture the corresponding effects on performance and vice versa.

Similarly, if you tune your server to increase performance by 5 percent, and that results in 10 percent higher energy consumption, the total result might or might not be acceptable for your business goals. The energy efficiency metric allows for more informed decision making than power or performance metrics alone.
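To make the trade-off in this example concrete, the change in energy efficiency can be worked out from the two percentages. This assumes that "performance" means the rate of work done and that average power rises in step with the 10 percent increase in energy consumption:

$$
\frac{\text{Efficiency}_{\text{tuned}}}{\text{Efficiency}_{\text{baseline}}} = \frac{1.05}{1.10} \approx 0.955
$$

Under those assumptions, the tuned server does about 4.5 percent less work per unit of energy than the baseline, even though its raw performance is higher.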

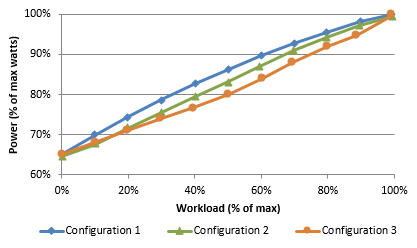

Measuring system energy consumption

You should establish a baseline power measurement before you tune your server for energy efficiency.

If your server has the necessary support, you can use the power metering and budgeting features in Windows Server 2016 to view system-level energy consumption by using Performance Monitor.

One way to determine whether your server has support for metering and budgeting is to review the Windows Server Catalog. If your server model qualifies for the new Enhanced Power Management qualification in the Windows Hardware Certification Program, it is guaranteed to support the metering and budgeting functionality.

Another way to check for metering support is to manually look for the counters in Performance Monitor. Open Performance Monitor, select Add Counters, and then locate the Power Meter counter group.

If named instances of power meters appear in the box labeled Instances of Selected Object, your platform supports metering. The Power counter that shows power in watts appears in the selected counter group. The exact derivation of the power data value is not specified. For example, it could be an instantaneous power draw or an average power draw over some time interval.
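The same check can be scripted with the typeperf command-line tool. This sketch assumes that the counter object carries the same name as the Performance Monitor group described above, `Power Meter`. On a platform without metering support, the query is not expected to list any named instances.

```shell
# List the counters of the Power Meter object together with their instances.
typeperf -qx "Power Meter"
```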

If your server platform does not support metering, you can use a physical metering device connected to the power supply input to measure system power draw or energy consumption.

To establish a baseline, you should measure the average power required at various system load points, from idle to 100 percent (maximum throughput) to generate a load line. The following figure shows load lines for three sample configurations:

You can use load lines to evaluate and compare the performance and energy consumption of configurations at all load points. In this particular example, it is easy to see what the best configuration is. However, there can easily be scenarios where one configuration works best for heavy workloads and one works best for light workloads.

You need to thoroughly understand your workload requirements to choose an optimal configuration. Don’t assume that when you find a good configuration, it will always remain optimal. You should measure system utilization and energy consumption on a regular basis and after changes in workloads, workload levels, or server hardware.

Diagnosing energy efficiency issues

PowerCfg.exe supports a command-line option that you can use to analyze the idle energy efficiency of your server. When you run PowerCfg.exe with the /energy option, the tool performs a 60-second test to detect potential energy efficiency issues. The tool generates a simple HTML report in the current directory.

To ensure an accurate analysis, make sure that all local apps are closed before you run PowerCfg.exe.

Shortened timer tick rates, drivers that lack power management support, and excessive CPU utilization are a few of the behavioral issues that are detected by the powercfg /energy command. This tool provides a simple way to identify and fix power management issues, potentially resulting in significant cost savings in a large datacenter.
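A typical invocation might look like the following sketch, run from an elevated prompt. The report file name is an arbitrary choice, and the duration is given explicitly only to show the option; 60 seconds matches the test length described above.

```shell
# Trace the system for 60 seconds and write the HTML report to a named file.
powercfg /energy /output energy-report.html /duration 60
```

Open the resulting HTML file in a browser to review the errors, warnings, and informational entries that the tool recorded.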

Using power plans in Windows Server

Windows Server 2016 has three built-in power plans designed to meet different sets of business needs. These plans provide a simple way for you to customize a server to meet power or performance goals. The following table describes the plans, lists the common scenarios in which to use each plan, and gives some implementation details for each plan.

| Plan | Description | Common applicable scenarios | Implementation highlights |

|---|---|---|---|

| Balanced (recommended) | Default setting. Targets good energy efficiency with minimal performance impact. | General computing | Matches capacity to demand. Energy-saving features balance power and performance. |

| High Performance | Increases performance at the cost of high energy consumption. Power and thermal limitations, operating expenses, and reliability considerations apply. | Low latency apps and app code that is sensitive to processor performance changes | Processors are always locked at the highest performance state (including "turbo" frequencies). All cores are unparked. Thermal output may be significant. |

| Power Saver | Limits performance to save energy and reduce operating cost. Not recommended without thorough testing to make sure performance is adequate. | Deployments with limited power budgets and thermal constraints | Caps processor frequency at a percentage of maximum (if supported), and enables other energy-saving features. |

These power plans exist in Windows for alternating current (AC) and direct current (DC) powered systems, but we will assume that servers are always using an AC power source.

For more info on power plans and power policy configurations, see Power Policy Configuration and Deployment in Windows.

Some server manufacturers have their own power management options available through the BIOS settings. If the operating system does not have control over the power management, changing the power plans in Windows will not affect system power and performance.
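The built-in plans can also be inspected and switched from the command line. The following is a minimal sketch that uses the built-in `scheme_min` alias for the High Performance plan; substitute `scheme_balanced` or `scheme_max` for the Balanced and Power Saver plans.

```shell
# Show all power plans; the active plan is marked with an asterisk.
powercfg /list

# Make High Performance the active plan by using its built-in alias.
powercfg /setactive scheme_min
```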

Tuning processor power management parameters

Each power plan represents a combination of numerous underlying power management parameters. The built-in plans are three collections of recommended settings that cover a wide variety of workloads and scenarios. However, we recognize that these plans will not meet every customer’s needs.

The following sections describe ways to tune some specific processor power management parameters to meet goals not addressed by the three built-in plans. If you need to understand a wider array of power parameters, see Power Policy Configuration and Deployment in Windows.
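Before changing any parameter, it can help to see what the current plan contains. The sketch below lists the aliases that powercfg accepts and then the processor settings of the active plan; depending on the system, some processor settings may be hidden from this output.

```shell
# List the aliases that powercfg accepts for plans, subgroups, and settings.
powercfg /aliases

# Show the processor power management settings of the active plan.
powercfg /query scheme_current sub_processor
```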

Processor performance boost mode

Intel Turbo Boost and AMD Turbo CORE technologies are features that allow processors to achieve additional performance when it is most useful (that is, at high system loads). However, this feature increases CPU core energy consumption, so Windows Server 2016 configures Turbo technologies based on the power policy that is in use and the specific processor implementation.

Turbo is enabled for High Performance power plans on all Intel and AMD processors and it is disabled for Power Saver power plans. For Balanced power plans on systems that rely on traditional P-state-based frequency management, Turbo is enabled by default only if the platform supports the EPB register.

The EPB register is only supported in Intel Westmere and later processors.

For Intel Nehalem and AMD processors, Turbo is disabled by default on P-state-based platforms. However, if a system supports Collaborative Processor Performance Control (CPPC), which is a new alternative mode of performance communication between the operating system and the hardware (defined in ACPI 5.0), Turbo may be engaged if the Windows operating system dynamically requests the hardware to deliver the highest possible performance levels.

To enable or disable the Turbo Boost feature, the Processor Performance Boost Mode parameter must be configured by the administrator or by the default parameter settings for the chosen power plan. Processor Performance Boost Mode has five allowable values, as shown in Table 5.

For P-state-based control, the choices are Disabled, Enabled (Turbo is available to the hardware whenever nominal performance is requested), and Efficient (Turbo is available only if the EPB register is implemented).

For CPPC-based control, the choices are Disabled, Efficient Enabled (Windows specifies the exact amount of Turbo to provide), and Aggressive (Windows asks for "maximum performance" to enable Turbo).

In Windows Server 2016, the default value for Boost Mode is 3.

| Name | P-state-based behavior | CPPC behavior |

|---|---|---|

| 0 (Disabled) | Disabled | Disabled |

| 1 (Enabled) | Enabled | Efficient Enabled |

| 2 (Aggressive) | Enabled | Aggressive |

| 3 (Efficient Enabled) | Efficient | Efficient Enabled |

| 4 (Efficient Aggressive) | Efficient | Aggressive |

The following commands enable Processor Performance Boost Mode on the current power plan (specify the policy by using a GUID alias):
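As a sketch, assuming the `PERFBOOSTMODE` setting alias and the `sub_processor` subgroup alias (both can be confirmed with `powercfg /aliases`), setting the value to 1 (Enabled) for AC power looks like this:

```shell
# Set Processor Performance Boost Mode to 1 (Enabled) on the current plan.
powercfg -setacvalueindex scheme_current sub_processor PERFBOOSTMODE 1

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```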

You must run the powercfg -setactive command to enable the new settings. You do not need to reboot the server.

To set this value for power plans other than the currently selected plan, you can use aliases such as SCHEME_MAX (Power Saver), SCHEME_MIN (High Performance), and SCHEME_BALANCED (Balanced) in place of SCHEME_CURRENT. Replace SCHEME_CURRENT in the powercfg -setactive commands previously shown with the desired alias to enable that power plan.

For example, to adjust the Boost Mode in the Power Saver plan and make Power Saver the current plan, run the following commands:
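A minimal sketch of that sequence, again assuming the `PERFBOOSTMODE` alias and using value 1 (Enabled) as the illustrative setting:

```shell
# Set Boost Mode to 1 (Enabled) in the Power Saver plan (alias scheme_max).
powercfg -setacvalueindex scheme_max sub_processor PERFBOOSTMODE 1

# Make Power Saver the active plan.
powercfg -setactive scheme_max
```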

Minimum and maximum processor performance state

Processors change between performance states (P-states) very quickly to match supply to demand, delivering performance where necessary and saving energy when possible. If your server has specific high-performance or minimum-power-consumption requirements, you might consider configuring the Minimum Processor Performance State parameter or the Maximum Processor Performance State parameter.

The values for the Minimum Processor Performance State and Maximum Processor Performance State parameters are expressed as a percentage of maximum processor frequency, with a value in the range 0 – 100.

If your server requires ultra-low latency, invariant CPU frequency (e.g., for repeatable testing), or the highest performance levels, you might not want the processors switching to lower-performance states. For such a server, you can cap the minimum processor performance state at 100 percent by using the following commands:
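The sketch below assumes the `PROCTHROTTLEMIN` alias for the Minimum Processor Performance State parameter and applies the change to the AC settings of the current plan:

```shell
# Keep the minimum processor performance state at 100 percent.
powercfg -setacvalueindex scheme_current sub_processor PROCTHROTTLEMIN 100

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```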

If your server requires lower energy consumption, you might want to cap the processor performance state at a percentage of maximum. For example, you can restrict the processor to 75 percent of its maximum frequency by using the following commands:
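Assuming the `PROCTHROTTLEMAX` alias for the Maximum Processor Performance State parameter, the 75 percent cap could be applied as follows:

```shell
# Cap the maximum processor performance state at 75 percent.
powercfg -setacvalueindex scheme_current sub_processor PROCTHROTTLEMAX 75

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```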

Capping processor performance at a percentage of maximum requires processor support. Check the processor documentation to determine whether such support exists, or view the Performance Monitor counter % of maximum frequency in the Processor group to see if any frequency caps were applied.

Processor performance increase and decrease of thresholds and policies

The speed at which a processor performance state increases or decreases is controlled by multiple parameters. The following four parameters have the most visible impact:

Processor Performance Increase Threshold defines the utilization value above which a processor’s performance state will increase. Larger values slow the rate of increase for the performance state in response to increased activities.

Processor Performance Decrease Threshold defines the utilization value below which a processor’s performance state will decrease. Larger values increase the rate of decrease for the performance state during idle periods.

Processor Performance Increase Policy and Processor Performance Decrease Policy determine which performance state should be set when a change happens. "Single" policy means it chooses the next state. "Rocket" means the maximum or minimal power performance state. "Ideal" tries to find a balance between power and performance.

For example, if your server requires ultra-low latency but should still benefit from low power during idle periods, you could quicken the performance state increase for any increase in load and slow the decrease when load goes down. The following commands set the increase policy to "Rocket" for a faster state increase, and set the decrease policy to "Single". The increase and decrease thresholds are set to 10 and 8 respectively.
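A sketch of that configuration, assuming the `PERFINCPOL`, `PERFDECPOL`, `PERFINCTHRESHOLD`, and `PERFDECTHRESHOLD` aliases and the policy indexes 0 (Ideal), 1 (Single), and 2 (Rocket) that `powercfg /query` reports for these settings:

```shell
# Increase policy: 2 selects Rocket, which jumps to the highest state.
powercfg -setacvalueindex scheme_current sub_processor PERFINCPOL 2

# Decrease policy: 1 selects Single, which steps down one state at a time.
powercfg -setacvalueindex scheme_current sub_processor PERFDECPOL 1

# Raise the performance state once utilization exceeds 10 percent.
powercfg -setacvalueindex scheme_current sub_processor PERFINCTHRESHOLD 10

# Lower the performance state once utilization falls below 8 percent.
powercfg -setacvalueindex scheme_current sub_processor PERFDECTHRESHOLD 8

# Reapply the current plan so that the new values take effect.
powercfg -setactive scheme_current
```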

Processor performance core parking maximum and minimum cores

Core parking is a feature that was introduced in Windows Server 2008 R2. The processor power management (PPM) engine and the scheduler work together to dynamically adjust the number of cores that are available to run threads. The PPM engine chooses a minimum number of cores for the threads that will be scheduled.

Cores that are parked generally do not have any threads scheduled, and they will drop into very low power states when they are not processing interrupts, DPCs, or other strictly affinitized work. The remaining cores are responsible for the remainder of the workload. Core parking can potentially increase energy efficiency during lower usage.

For most servers, the default core-parking behavior provides a reasonable balance of throughput and energy efficiency. On processors where core parking may not show as much benefit on generic workloads, it can be disabled by default.

If your server has specific core parking requirements, you can control the number of cores that are available to park by using the Processor Performance Core Parking Maximum Cores parameter or the Processor Performance Core Parking Minimum Cores parameter in Windows Server 2016.

One scenario that core parking isn’t always optimal for is when there are one or more active threads affinitized to a non-trivial subset of CPUs in a NUMA node (that is, more than 1 CPU, but less than the entire set of CPUs on the node). When the core parking algorithm is picking cores to unpark (assuming an increase in workload intensity occurs), it may not always pick the cores within the active affinitized subset (or subsets) to unpark, and thus may end up unparking cores that won’t actually be utilized.

The values for these parameters are percentages in the range 0 – 100. The Processor Performance Core Parking Maximum Cores parameter controls the maximum percentage of cores that can be unparked (available to run threads) at any time, while the Processor Performance Core Parking Minimum Cores parameter controls the minimum percentage of cores that can be unparked. To turn off core parking, set the Processor Performance Core Parking Minimum Cores parameter to 100 percent by using the following commands:
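Assuming the `CPMINCORES` alias for the Processor Performance Core Parking Minimum Cores parameter, a sketch of turning off core parking on the current plan is:

```shell
# Require 100 percent of cores to stay unparked, which turns off core parking.
powercfg -setacvalueindex scheme_current sub_processor CPMINCORES 100

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```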

To reduce the number of schedulable cores to 50 percent of the maximum count, set the Processor Performance Core Parking Maximum Cores parameter to 50 as follows:
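The matching sketch for the maximum, assuming the `CPMAXCORES` alias:

```shell
# Allow at most 50 percent of cores to be unparked at any time.
powercfg -setacvalueindex scheme_current sub_processor CPMAXCORES 50

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```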

Processor performance core parking utility distribution

Utility Distribution is an algorithmic optimization in Windows Server 2016 that is designed to improve power efficiency for some workloads. It tracks unmovable CPU activity (that is, DPCs, interrupts, or strictly affinitized threads), and it predicts the future work on each processor based on the assumption that any movable work can be distributed equally across all unparked cores.

Utility Distribution is enabled by default for the Balanced power plan for some processors. It can reduce processor power consumption by lowering the requested CPU frequencies of workloads that are in a reasonably steady state. However, Utility Distribution is not necessarily a good algorithmic choice for workloads that are subject to high activity bursts or for programs where the workload quickly and randomly shifts across processors.

For such workloads, we recommend disabling Utility Distribution by using the following commands:
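A minimal sketch, assuming the `DISTRIBUTEUTIL` alias for the Utility Distribution setting, where 0 disables the feature:

```shell
# Disable Utility Distribution on the current plan.
powercfg -setacvalueindex scheme_current sub_processor DISTRIBUTEUTIL 0

# Reapply the current plan so that the new value takes effect.
powercfg -setactive scheme_current
```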