- How to Get the Highest-Quality Audio Output from a Windows PC

- Installing the ASIO driver

- Initial Foobar2000 setup

- Software volume control

- Finishing touches

- Low Latency Audio

- Overview

- Definitions

- Windows Audio Stack

- Audio Stack Improvements in Windows 10

- API Improvements

- AudioGraph

- Windows Audio Session API (WASAPI)

- Samples

How to Get the Highest-Quality Audio Output from a Windows PC


Your personal computer may or may not have a CD drive; either way, it can store a library of audio files or receive them by streaming from online services. In either case, the music signal in PCM format (or, less often, DSD) can be sent out for digital-to-analog conversion by external hardware. Most often this is done over a USB connection between the PC and an external sound card or audio DAC. You can also listen through the computer's own headphone output (we will not consider its built-in speakers), but the result will leave much to be desired. There are several reasons for this, but the main one is that the built-in sound card simply does not deliver Hi-Fi quality. The most obvious solution is therefore to hand this job to a dedicated component.

But simply connecting a USB cable does not yet guarantee bit-perfect transfer of audio to the external DAC. This mode of operation has to be configured properly.

Installing the ASIO driver

Why do you need ASIO mode? When playing music, you have to isolate the audio stream from the Windows software mixers. In this case you do not need them at all, because they may recalculate the data an extra time, and not very well by Hi-Fi standards. The ASIO protocol is designed to make the path between the software player and the DAC as short as possible. That is why any self-respecting manufacturer of sound cards or DACs now ships matching ASIO drivers with its products. Once they are installed, you can move on to configuring your software player. These settings are made once and need no further tuning.

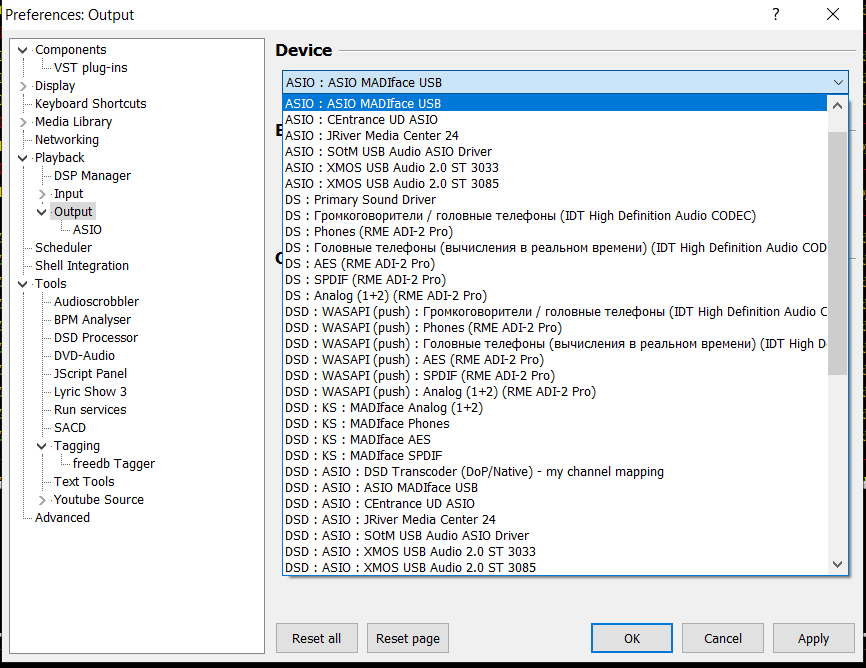

Initial Foobar2000 setup

As an example, let us take one of the most popular players, Foobar2000. It takes up very little disk space, is free, and is still advanced enough for complex DSP processing of the audio signal. Here, however, we will not cover everything Foobar2000 can do, only its initial setup for working in ASIO mode with your sound card.

In paid audio players such as Audirvana or JRiver, ASIO compatibility is built into the original distribution, and the player picks up the available ASIO connections by itself. Foobar2000 requires the ASIO support module, which has to be downloaded separately from https://www.foobar2000.org/components/view/foo_out_asio. After that, open Preferences in the player and select the very first entry, Components. Click Install and point the program to the saved file foo_out_asio.fb2k-component. Then restart the player for the changes to take effect.

Software volume control

Many users prefer to adjust the volume directly in the software player. This is very convenient, for example, when listening on a desktop system with active monitors.

If your audio path works in ASIO mode, you can no longer lower the volume with the slider in the corner of the screen (on the Windows taskbar). You will have to use the player's own level control (Volume Control). Bear in mind that when the volume is changed in software, the original data no longer reaches the DAC bit-for-bit. Bit-perfect transfer is possible only with the volume at its maximum position, 100%.
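To see why a software volume control breaks bit-perfect output, here is a minimal sketch in Python. It assumes signed 16-bit PCM samples and a plain linear gain; the function name `apply_volume` and the sample values are made up for illustration and are not how Foobar2000 implements its volume control.

```python
def apply_volume(samples, gain):
    """Scale signed 16-bit PCM samples by a linear gain and round back to integers."""
    scaled = []
    for s in samples:
        v = int(round(s * gain))
        # Clamp to the signed 16-bit range so that a gain above 1.0 cannot overflow.
        v = max(-32768, min(32767, v))
        scaled.append(v)
    return scaled


# Hypothetical sample values, chosen only for illustration.
original = [0, 1, -1, 1000, -12345, 32767, -32768]

full_volume = apply_volume(original, 1.0)  # volume at 100%
half_volume = apply_volume(original, 0.5)  # volume lowered in software

print(full_volume == original)  # True: every sample reaches the DAC unchanged
print(half_volume == original)  # False: the data is no longer bit-perfect
```

At any gain other than 1.0 the samples are rescaled and rounded, so what reaches the DAC is no longer the original data.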

Finishing touches

Under Output->ASIO you will find two performance options marked with check boxes: Use 64-bit ASIO drivers and Run with high process priority. They are usually enabled by default, but it does no harm to check.

You can also check the ASIO control panel. Sometimes it can be opened right from the player; sometimes its icon sits in the taskbar area mentioned above, next to the keyboard-layout indicator and other icons. The bit depth should be set to 24 or 32 bits. Do not enable so-called dither: this option is needed only for very old audio receivers whose bit depth is limited to 16 bits.

As for the ASIO buffer size, if your system has no dropouts or other audio glitches, it is best to leave the default value. Reducing it raises the likelihood of artifacts, and increasing it lowers that likelihood. Some audiophiles claim that the best sound is achieved with the smallest ASIO buffer size. Measurements of the audio stream do not support this claim, but the best instrument is our hearing, so you can settle on the optimal ASIO buffer size yourself.
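ASIO control panels usually express the buffer size in sample frames, so it can help to convert it into time. The sketch below is a rough illustration only; the frame counts and the 44.1 kHz sample rate are assumed values, not the defaults of any particular driver.

```python
def buffer_latency_ms(buffer_frames, sample_rate_hz):
    """Time, in milliseconds, that one buffer of audio covers."""
    return 1000.0 * buffer_frames / sample_rate_hz


# Assumed buffer sizes, for illustration only.
for frames in (64, 256, 512, 2048):
    latency = buffer_latency_ms(frames, 44100)
    print(f"{frames:5d} frames at 44100 Hz -> {latency:.2f} ms")
```

A smaller buffer leaves the computer less time to deliver each block of data, which is why dropouts become more likely as the value goes down.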

Low Latency Audio

This topic discusses audio latency changes in Windows 10. It covers API options for application developers as well as changes in drivers that can be made to support low latency audio.

Overview

Audio latency is the delay between the time that sound is created and the time that it is heard. Low audio latency is very important for several key scenarios, such as the following.

- Pro Audio

- Music Creation

- Communications

- Virtual Reality

- Games

Windows 10 includes changes to reduce the audio latency. The goals of this document are to:

- Describe the sources of audio latency in Windows.

- Explain the changes that reduce audio latency in the Windows 10 audio stack.

- Provide a reference on how application developers and hardware manufacturers can take advantage of the new infrastructure, in order to develop applications and drivers with low audio latency. This topic covers these items:

- The new AudioGraph API for interactive and media creation scenarios.

- Changes in WASAPI to support low latency.

- Enhancements in the driver DDIs.

Definitions

| Term | Description |
|---|---|
| Render latency | Delay between the time that an application submits a buffer of audio data to the render APIs, until the time that it is heard from the speakers. |
| Capture latency | Delay between the time that a sound is captured from the microphone, until the time that it is sent to the capture APIs that are being used by the application. |
| Roundtrip latency | Delay between the time that a sound is captured from the microphone, processed by the application and submitted by the application for rendering to the speakers. It is roughly equal to render latency + capture latency. |
| Touch-to-app latency | Delay between the time that a user taps the screen until the time that the signal is sent to the application. |
| Touch-to-sound latency | Delay between the time that a user taps the screen, the event goes to the application and a sound is heard via the speakers. It is equal to render latency + touch-to-app latency. |
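The two composite definitions in the table can be written out as a small sketch. The function names and the millisecond values below are hypothetical and serve only to show how the terms combine.

```python
def roundtrip_latency_ms(render_ms, capture_ms):
    """Roundtrip latency is roughly render latency + capture latency."""
    return render_ms + capture_ms


def touch_to_sound_latency_ms(render_ms, touch_to_app_ms):
    """Touch-to-sound latency is render latency + touch-to-app latency."""
    return render_ms + touch_to_app_ms


# Hypothetical measurements, in milliseconds.
render_ms = 20.0
capture_ms = 15.0
touch_to_app_ms = 30.0

print(roundtrip_latency_ms(render_ms, capture_ms))
print(touch_to_sound_latency_ms(render_ms, touch_to_app_ms))
```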

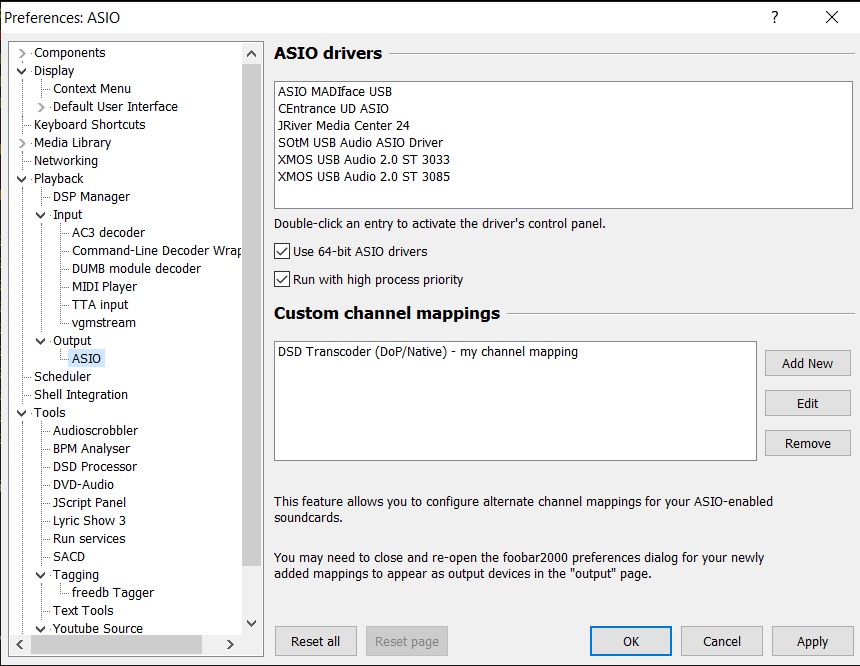

Windows Audio Stack

The following diagram shows a simplified version of the Windows audio stack.

Here is a summary of the latencies in the render path:

1. The application writes the data into a buffer.

2. The Audio Engine reads the data from the buffer and processes it. It also loads audio effects in the form of Audio Processing Objects (APOs). For more information about APOs, see Windows Audio Processing Objects.

   - The latency of the APOs varies based on the signal processing within the APOs.

   - Before Windows 10, the latency of the Audio Engine was equal to 12 ms for applications that use floating point data and 6 ms for applications that use integer data.

   - In Windows 10, the latency has been reduced to 1.3 ms for all applications.

3. The Audio Engine writes the processed data to a buffer.

   - Before Windows 10, this buffer was always set to 10 ms.

   - Starting with Windows 10, the buffer size is defined by the audio driver (more details on this are described later in this topic).

4. The audio driver reads the data from the buffer and writes it to the H/W.

5. The H/W also has the option to process the data again (in the form of additional audio effects).

6. The user hears audio from the speaker.

Here is a summary of latency in the capture path:

1. Audio is captured from the microphone.

2. The H/W has the option to process the data (i.e. to add audio effects).

3. The driver reads the data from the H/W and writes the data into a buffer.

   - Before Windows 10, this buffer was always set to 10 ms.

   - Starting with Windows 10, the buffer size is defined by the audio driver (more details on this below).

4. The Audio Engine reads the data from the buffer and processes it. It also loads audio effects in the form of Audio Processing Objects (APOs).

   - The latency of the APOs varies based on the signal processing within the APOs.

   - Before Windows 10, the latency of the Audio Engine was equal to 6 ms for applications that use floating point data and 0 ms for applications that use integer data.

   - In Windows 10, the latency has been reduced to 0 ms for all applications.

5. The application is signaled that data is available to be read, as soon as the audio engine finishes with its processing.

The audio stack also provides the option of Exclusive Mode. In that case, the data bypasses the Audio Engine and goes directly from the application to the buffer that the driver reads from. However, if an application opens an endpoint in Exclusive Mode, then no other application can use that endpoint to render or capture audio.

Another popular alternative for applications that need low latency is to use the ASIO (Audio Stream Input/Output) model, which utilizes exclusive mode. After a user installs a 3rd party ASIO driver, applications can send data directly from the application to the ASIO driver. However, the application has to be written in such a way that it talks directly to the ASIO driver.

Both alternatives (exclusive mode and ASIO) provide low latency, but each has its own limitations (some of which were described above). As a result, the Audio Engine has been modified to lower the latency while retaining the flexibility.

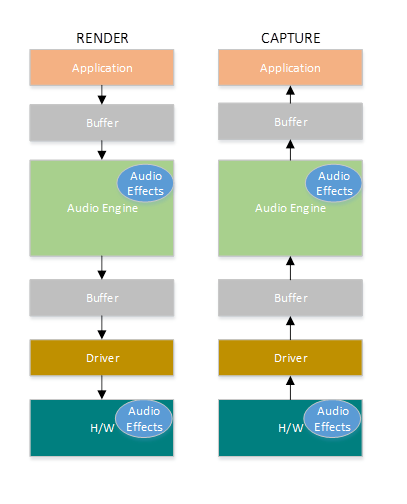

Audio Stack Improvements in Windows 10

Windows 10 has been enhanced in three areas to reduce latency:

- All applications that use audio will see a 4.5-16 ms reduction in round-trip latency (as was explained in the section above) without any code changes or driver updates, compared to Windows 8.1.

  a. Applications that use floating point data will have 16 ms lower latency.

  b. Applications that use integer data will have 4.5 ms lower latency.

- Systems with updated drivers will provide even lower round-trip latency:

  a. Drivers can use new DDIs to report the supported sizes of the buffer that is used to transfer data between the OS and the H/W. This means that data transfers do not have to always use 10 ms buffers (as they did in previous OS versions). Instead, the driver can specify if it can use small buffers, e.g. 5 ms, 3 ms, 1 ms, etc.

  b. Applications that require low latency can use new audio APIs (AudioGraph or WASAPI), in order to query the buffer sizes that are supported by the driver and select the one that will be used for the data transfer to/from the H/W.

- When an application uses buffer sizes below a certain threshold to render and capture audio, the OS enters a special mode, where it manages its resources in a way that avoids interference between the audio streaming and other subsystems. This will reduce the interruptions in the execution of the audio subsystem and minimize the probability of audio glitches. When the application stops streaming, the OS returns to its normal execution mode. The audio subsystem consists of the following resources:

  a. The audio engine thread that is processing low latency audio.

  b. All the threads and interrupts that have been registered by the driver (using the new DDIs that are described in the section about driver resource registration).

  c. Some or all of the audio threads from the applications that request small buffers, as well as from all applications that share the same audio device graph (e.g. same signal processing mode) with any application that requested small buffers:

     - AudioGraph callbacks on the streaming path.

     - If the application uses WASAPI, then only the work items that were submitted to the Real-Time Work Queue API or MFCreateMFByteStreamOnStreamEx and were tagged as "Audio" or "ProAudio".
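The 4.5-16 ms figures in the first item can be cross-checked against the Audio Engine latencies quoted in the render and capture path summaries. The sketch below simply adds up those per-stage numbers; the names are illustrative, and the small differences from the quoted totals suggest that the published values are approximate.

```python
# Audio Engine latency in milliseconds, taken from the render and capture
# path summaries in this topic (before Windows 10 vs. Windows 10).
BEFORE = {
    "float": {"render": 12.0, "capture": 6.0},
    "integer": {"render": 6.0, "capture": 0.0},
}
AFTER = {"render": 1.3, "capture": 0.0}


def roundtrip_reduction_ms(data_format):
    """Sum the render and capture savings for one sample format."""
    before = BEFORE[data_format]
    return sum(before[path] - AFTER[path] for path in ("render", "capture"))


for fmt in ("float", "integer"):
    print(f"{fmt}: about {roundtrip_reduction_ms(fmt):.1f} ms lower round-trip latency")
```

The sums come out at about 16.7 ms for floating point data and 4.7 ms for integer data, close to the 16 ms and 4.5 ms stated above.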

API Improvements

The following two Windows 10 APIs provide low latency capabilities:

- AudioGraph

- Windows Audio Session API (WASAPI)

This is how an application developer can determine which of the two APIs to use:

- Favor AudioGraph wherever possible for new application development.

- Only use WASAPI if:

  - You need more control than that provided by AudioGraph.

  - You need lower latency than that provided by AudioGraph.

The measurement tools section of this topic shows specific measurements from a Haswell system using the inbox HDAudio driver.

The following sections will explain the low latency capabilities in each API. As it was noted in the previous section, in order for the system to achieve the minimum latency, it needs to have updated drivers that support small buffer sizes.

AudioGraph

AudioGraph is a new Universal Windows Platform API in Windows 10 that is aimed at realizing interactive and music creation scenarios with ease. AudioGraph is available in several programming languages (C++, C#, JavaScript) and has a simple and feature-rich programming model.

In order to target low latency scenarios, AudioGraph provides the AudioGraphSettings::QuantumSizeSelectionMode property. This property can be any of the values shown in the table below:

| Value | Description |
|---|---|
| SystemDefault | Sets the buffer to the default buffer size (10 ms). |
| LowestLatency | Sets the buffer to the minimum value that is supported by the driver. |
| ClosestToDesired | Sets the buffer size either to the value defined by the DesiredSamplesPerQuantum property or to a value that is as close to DesiredSamplesPerQuantum as is supported by the driver. |
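The selection rules in the table can be modeled in a few lines of Python. This is only a sketch of the behavior as described, not the Windows implementation. The function `select_quantum`, the list of supported sizes, the default of 480 frames (10 ms at an assumed 48 kHz), and the tie-breaking rule are all assumptions made for illustration.

```python
def select_quantum(mode, supported, default=480, desired=None):
    """Hypothetical model of how a quantum size (in sample frames) is chosen.

    `supported` stands in for the buffer sizes that a driver reports.
    """
    if mode == "SystemDefault":
        return default
    if mode == "LowestLatency":
        return min(supported)
    if mode == "ClosestToDesired":
        if desired is None:
            raise ValueError("ClosestToDesired needs a desired size")
        # Assumption: on a tie, prefer the smaller buffer.
        return min(supported, key=lambda size: (abs(size - desired), size))
    raise ValueError(f"unknown mode: {mode}")


# Assumed driver capabilities, in frames.
supported_sizes = [128, 256, 480]

print(select_quantum("SystemDefault", supported_sizes))
print(select_quantum("LowestLatency", supported_sizes))
print(select_quantum("ClosestToDesired", supported_sizes, desired=200))
```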

The AudioCreation sample (available for download on GitHub: https://github.com/Microsoft/Windows-universal-samples/tree/master/Samples/AudioCreation) shows how to use AudioGraph for low latency. The following code snippet shows how to set the minimum buffer size:

Windows Audio Session API (WASAPI)

Starting in Windows 10, WASAPI has been enhanced to:

- Allow an application to discover the range of buffer sizes (i.e. periodicity values) that are supported by the audio driver of a given audio device. This makes it possible for an application to choose between the default buffer size (10 ms) or a small buffer.

- Browse my computer for driver software -> Let me pick from a list of device drivers in this computer -> Select High Definition Audio Device and select Next.

- If a window titled «Update driver warning» appears, select Yes.

- Select Close.

- If you are asked to reboot the system, select Yes to reboot.

- After reboot, the system will be using the inbox Microsoft HDAudio driver and not the 3rd-party codec driver. Remember which driver you were using before, so that you can fall back to that driver if you want to use the optimal settings for your audio codec.

The differences in the latency between WASAPI and AudioGraph are due to the following reasons:

- AudioGraph adds one buffer of latency in the capture side, in order to synchronize render and capture (which is not provided by WASAPI). This addition simplifies the code for applications written using AudioGraph.

- There is an additional buffer of latency in AudioGraph’s render side when the system is using > 6ms buffers.

- AudioGraph does not have the option to disable capture audio effects.

Samples

- WASAPI Audio sample: https://github.com/Microsoft/Windows-universal-samples/tree/master/Samples/WindowsAudioSession

- AudioCreation sample (AudioGraph): https://github.com/Microsoft/Windows-universal-samples/tree/master/Samples/AudioCreation

- Sysvad driver sample: https://github.com/Microsoft/Windows-driver-samples/tree/master/audio/sysvad

1. Wouldn’t it be better if all applications used the new APIs for low latency? Doesn’t low latency always guarantee a better user experience?

Not necessarily. Low latency has its tradeoffs:

- Low latency means higher power consumption. If the system uses 10ms buffers, it means that the CPU will wake up every 10ms, fill the data buffer and go to sleep. However, if the system uses 1ms buffers, it means that the CPU will wake up every 1ms. In the second scenario, this means that the CPU will wake up more often and the power consumption will increase. This will decrease battery life.

- Most applications rely on audio effects to provide the best user experience. For example, media players want to provide high-fidelity audio. Communication applications want to minimize echo and noise. Adding these types of audio effects to a stream increases its latency. These applications are more interested in audio quality than in audio latency.

In summary, each application type has different needs regarding audio latency. If an application does not need low latency, then it should not use the new APIs for low latency.
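The power tradeoff described above comes down to how often the CPU has to wake up to refill the buffer. The following sketch works through that arithmetic; the function names and the 48 kHz sample rate are assumptions made for illustration.

```python
def wakeups_per_second(buffer_ms):
    """How many times per second the CPU must wake up to fill the buffer."""
    return 1000.0 / buffer_ms


def frames_per_buffer(buffer_ms, sample_rate_hz=48000):
    """Number of sample frames in one buffer at the assumed sample rate."""
    return int(round(sample_rate_hz * buffer_ms / 1000.0))


for buffer_ms in (10, 5, 3, 1):
    print(
        f"{buffer_ms:2d} ms buffer: {wakeups_per_second(buffer_ms):6.1f} wake-ups/s, "
        f"{frames_per_buffer(buffer_ms)} frames per buffer"
    )
```

Going from 10 ms to 1 ms buffers means ten times as many wake-ups per second, each moving a tenth of the data.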

2. Will all systems that update to Windows 10 be automatically updated to support small buffers? Also, will all systems support the same minimum buffer size?

No. In order for a system to support small buffers, it needs to have updated drivers. It is up to the OEMs to decide which systems will be updated to support small buffers. Also, newer systems are more likely to support smaller buffers than older systems (i.e. the latency in new systems will most likely be lower than in older systems).

3. If a driver supports small buffer sizes ( —>